Top Related Projects

An optimization-based multi-sensor state estimator

Real-Time SLAM for Monocular, Stereo and RGB-D Cameras, with Loop Detection and Relocalization Capabilities

ORB-SLAM3: An Accurate Open-Source Library for Visual, Visual-Inertial and Multi-Map SLAM

OKVIS: Open Keyframe-based Visual-Inertial SLAM.

Visual Inertial Odometry with SLAM capabilities and 3D Mesh generation.

Quick Overview

VINS-Mono is an open-source visual-inertial state estimator for autonomous navigation of robots and drones. It utilizes monocular camera and IMU (Inertial Measurement Unit) data to provide accurate pose estimation in various environments. The system is designed to be efficient and robust, making it suitable for real-time applications on resource-constrained platforms.

Pros

- High accuracy and robustness in challenging environments

- Real-time performance on embedded systems

- Supports loop closure for drift correction

- Extensive documentation and ROS integration

Cons

- Requires careful calibration of camera and IMU

- May struggle in environments with limited visual features

- Learning curve for new users due to complexity

- Limited support for multi-camera setups

Code Examples

- Initializing the VINS-Mono system:

#include <vins/estimator/estimator.h>

Estimator estimator;

estimator.setParameter();

- Processing IMU data:

void imuCallback(const sensor_msgs::ImuConstPtr &imu_msg)

{

double t = imu_msg->header.stamp.toSec();

double dx = imu_msg->linear_acceleration.x;

double dy = imu_msg->linear_acceleration.y;

double dz = imu_msg->linear_acceleration.z;

double rx = imu_msg->angular_velocity.x;

double ry = imu_msg->angular_velocity.y;

double rz = imu_msg->angular_velocity.z;

Vector3d acc(dx, dy, dz);

Vector3d gyr(rx, ry, rz);

estimator.inputIMU(t, acc, gyr);

}

- Processing image data:

void imageCallback(const sensor_msgs::ImageConstPtr &img_msg)

{

double t = img_msg->header.stamp.toSec();

cv_bridge::CvImageConstPtr ptr = cv_bridge::toCvCopy(img_msg, sensor_msgs::image_encodings::MONO8);

cv::Mat image = ptr->image;

estimator.inputImage(t, image);

}

Getting Started

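- Clone the repository into the src folder of your catkin workspace:

cd ~/catkin_ws/src

git clone https://github.com/HKUST-Aerial-Robotics/VINS-Mono.git

- Build the project from the workspace root and source the result:

cd ~/catkin_ws

catkin_make

source ~/catkin_ws/devel/setup.bash

- Run the VINS-Mono system:

roslaunch vins_estimator euroc.launch

- Play your dataset or connect your sensors:

rosbag play YOUR_DATASET.bag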

Competitor Comparisons

An optimization-based multi-sensor state estimator

Pros of VINS-Fusion

- Supports multi-sensor fusion (monocular/stereo cameras, IMU, GPS)

- Improved robustness and accuracy in challenging environments

- Includes loop closure for better global consistency

Cons of VINS-Fusion

- Higher computational complexity due to multi-sensor fusion

- Requires more careful sensor calibration and synchronization

- Potentially more complex setup and configuration

Code Comparison

VINS-Mono (feature tracking):

void FeatureTracker::readImage(const cv::Mat &_img, double _cur_time)

{

cv::Mat img;

TicToc t_r;

cur_time = _cur_time;

if (EQUALIZE)

{

cv::Ptr<cv::CLAHE> clahe = cv::createCLAHE(3.0, cv::Size(8, 8));

TicToc t_c;

clahe->apply(_img, img);

ROS_DEBUG("CLAHE costs: %fms", t_c.toc());

}

else

img = _img;

VINS-Fusion (feature tracking):

void FeatureTracker::readImage(const cv::Mat &_img, double _cur_time)

{

cv::Mat img;

TicToc t_r;

cur_time = _cur_time;

if (EQUALIZE)

{

cv::Ptr<cv::CLAHE> clahe = cv::createCLAHE(3.0, cv::Size(8, 8));

TicToc t_c;

clahe->apply(_img, img);

ROS_DEBUG("CLAHE costs: %fms", t_c.toc());

}

else

img = _img;

The feature tracking code is nearly identical in both projects, reflecting their shared codebase. The main differences lie in the sensor fusion and estimation modules.

Real-Time SLAM for Monocular, Stereo and RGB-D Cameras, with Loop Detection and Relocalization Capabilities

Pros of ORB_SLAM2

- More lightweight and computationally efficient

- Better performance in feature-rich environments

- Wider community support and adoption

Cons of ORB_SLAM2

- Less robust in feature-poor or dynamic environments

- Lacks built-in IMU integration for improved accuracy

- May struggle with rapid camera motions

Code Comparison

ORB_SLAM2 (feature extraction):

void Frame::ExtractORB(int flag, const cv::Mat &im)

{

if(flag==0)

(*mpORBextractorLeft)(im,cv::Mat(),mvKeys,mDescriptors);

else

(*mpORBextractorRight)(im,cv::Mat(),mvKeysRight,mDescriptorsRight);

}

VINS-Mono (feature tracking):

void FeatureTracker::readImage(const cv::Mat &_img, double _cur_time)

{

cv::Mat img;

TicToc t_r;

cur_time = _cur_time;

if (EQUALIZE)

{

cv::Ptr<cv::CLAHE> clahe = cv::createCLAHE(3.0, cv::Size(8, 8));

clahe->apply(_img, img);

}

else

img = _img;

}

ORB-SLAM3: An Accurate Open-Source Library for Visual, Visual-Inertial and Multi-Map SLAM

Pros of ORB_SLAM3

- Multi-map SLAM capability, allowing for better handling of large-scale environments

- Supports monocular, stereo, and RGB-D cameras, as well as visual-inertial odometry

- Real-time loop closing and relocalization features

Cons of ORB_SLAM3

- Higher computational requirements compared to VINS-Mono

- May struggle in environments with limited visual features

- Initialization can be more sensitive in certain scenarios

Code Comparison

ORB_SLAM3:

// Feature extraction and matching

void Frame::ExtractORB(int flag, const cv::Mat &im, const int x0, const int x1)

{

vector<int> vLapping = {x0,x1};

monoLeft = (*mpORBextractorLeft)(im,cv::Mat(),mvKeys,mDescriptors,vLapping);

}

VINS-Mono:

// Feature tracking

void FeatureTracker::readImage(const cv::Mat &_img, double _cur_time)

{

cv::Mat img;

TicToc t_r;

cur_time = _cur_time;

if (EQUALIZE)

cv::equalizeHist(_img, img);

else

img = _img;

}

Both systems utilize OpenCV for image processing, but ORB_SLAM3 focuses on ORB feature extraction, while VINS-Mono emphasizes feature tracking across frames.

Pros of ROVIO

- Lightweight and computationally efficient, suitable for resource-constrained platforms

- Robust to rapid motions and dynamic environments

- Supports multi-camera setups for improved accuracy and coverage

Cons of ROVIO

- Limited to visual-inertial odometry, lacking loop closure and global optimization

- May struggle with feature-poor environments due to its reliance on image patches

- Less extensive documentation and community support compared to VINS-Mono

Code Comparison

ROVIO (C++):

rovio::RovioNode<rovio::FilterState> rovio_node;

rovio_node.makeTest();

rovio_node.makeFilterTest();

VINS-Mono (C++):

estimator.setParameter();

f_manager.setRic(Ric);

ProjectionFactor::sqrt_info = FOCAL_LENGTH / 1.5 * Matrix2d::Identity();

Both repositories use C++ and ROS integration, but ROVIO's codebase is more compact and focused on EKF-based estimation, while VINS-Mono includes additional components for optimization and loop closure.

OKVIS: Open Keyframe-based Visual-Inertial SLAM.

Pros of OKVIS

- Supports stereo and multi-camera setups, offering more flexibility in sensor configurations

- Includes a robust initialization process, potentially leading to better performance in challenging scenarios

- Implements a tightly-coupled visual-inertial estimator, which can provide more accurate results in certain situations

Cons of OKVIS

- Less actively maintained compared to VINS-Mono, with fewer recent updates

- May have a steeper learning curve due to its more complex architecture

- Limited documentation and community support compared to VINS-Mono

Code Comparison

OKVIS:

okvis::ThreadedKFVio okvis_estimator(parameters);

okvis_estimator.addImuMeasurement(timestamp, acc, gyro);

okvis_estimator.addImage(timestamp, image);

VINS-Mono:

estimator.processIMU(dt, acc, gyr);

estimator.processImage(image, header);

Both systems use similar function calls for processing IMU and image data, but OKVIS's interface is slightly more verbose. VINS-Mono's code appears more straightforward, which may contribute to its ease of use for newcomers to visual-inertial odometry.

Visual Inertial Odometry with SLAM capabilities and 3D Mesh generation.

Pros of Kimera-VIO

- Supports both stereo and monocular vision, offering more flexibility

- Includes a mesh reconstruction module for 3D environment mapping

- Provides a modular architecture, allowing easier customization and extension

Cons of Kimera-VIO

- Higher computational requirements due to additional features

- Steeper learning curve for new users due to increased complexity

- Less extensive documentation compared to VINS-Mono

Code Comparison

VINS-Mono (initialization):

void Estimator::processIMU(double dt, const Vector3d &linear_acceleration, const Vector3d &angular_velocity)

{

if (!first_imu)

{

first_imu = true;

acc_0 = linear_acceleration;

gyr_0 = angular_velocity;

}

}

Kimera-VIO (initialization):

void VioBackEnd::initialize(const Timestamp& timestamp_kf1,

const Timestamp& timestamp_kf2,

const gtsam::Pose3& B_Pose_leftCam,

const StereoFrame& stereo_frame_lkf,

const ImuFront& imu_data) {

CHECK(graph_);

initial_ground_truth_state_ = nullptr;

addInitialFactorsAndValues(timestamp_kf1, timestamp_kf2, B_Pose_leftCam,

stereo_frame_lkf, imu_data);

}

Both repositories focus on visual-inertial odometry, but Kimera-VIO offers additional features and flexibility at the cost of increased complexity.

README

VINS-Mono

A Robust and Versatile Monocular Visual-Inertial State Estimator

11 Jan 2019: An extension of VINS, which supports stereo cameras / stereo cameras + IMU / mono camera + IMU, is published at VINS-Fusion

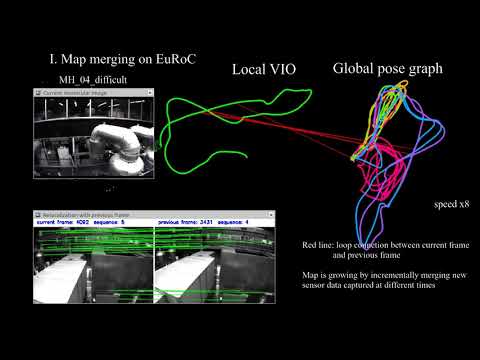

29 Dec 2017: New features: Add map merge, pose graph reuse, online temporal calibration function, and support rolling shutter camera. Map reuse videos:

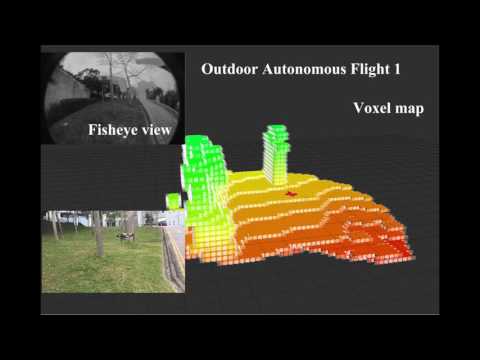

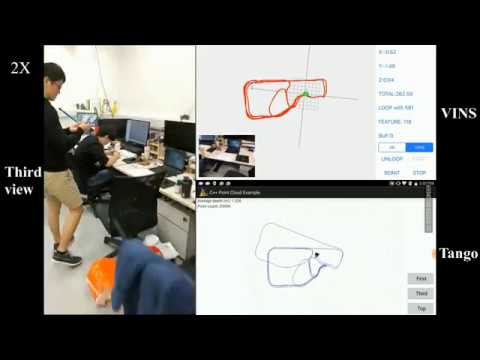

VINS-Mono is a real-time SLAM framework for monocular visual-inertial systems. It uses an optimization-based sliding window formulation to provide high-accuracy visual-inertial odometry. It features efficient IMU pre-integration with bias correction, automatic estimator initialization, online extrinsic calibration, failure detection and recovery, loop detection, global pose graph optimization, map merge, pose graph reuse, online temporal calibration, and rolling shutter support. VINS-Mono is primarily designed for state estimation and feedback control of autonomous drones, but it can also provide accurate localization for AR applications. The code runs on Linux and is fully integrated with ROS. For the iOS mobile implementation, see VINS-Mobile.
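To make the sliding window idea concrete, here is a minimal sketch of a fixed-capacity window of frame states. The `FrameState` and `SlidingWindow` names are hypothetical and are not taken from the VINS-Mono source; a real estimator would marginalize the oldest frame into a prior rather than discard it, and would store orientation, velocity and IMU biases as well.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>

// Hypothetical per-frame state, reduced to a timestamp and a position.
struct FrameState {
    double timestamp;
    double position[3];
};

// Fixed-capacity window holding only the most recent frame states.
class SlidingWindow {
public:
    explicit SlidingWindow(std::size_t capacity) : capacity_(capacity) {
        assert(capacity_ > 0);
    }

    // Adds a new frame. Once the window is full, the oldest frame is dropped
    // (a stand-in for marginalization in an optimization-based estimator).
    void push(const FrameState& state) {
        if (frames_.size() == capacity_) frames_.pop_front();
        frames_.push_back(state);
    }

    std::size_t size() const { return frames_.size(); }
    const FrameState& oldest() const { return frames_.front(); }
    const FrameState& newest() const { return frames_.back(); }

private:
    std::size_t capacity_;
    std::deque<FrameState> frames_;
};
```

Bounding the window keeps the size of each optimization problem constant, which is what makes a formulation like this usable in real time.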

Authors: Tong Qin, Peiliang Li, Zhenfei Yang, and Shaojie Shen from the HKUST Aerial Robotics Group

Videos:

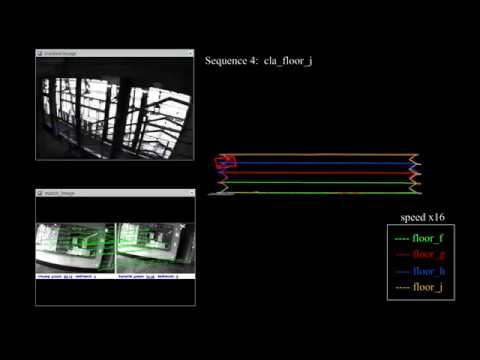

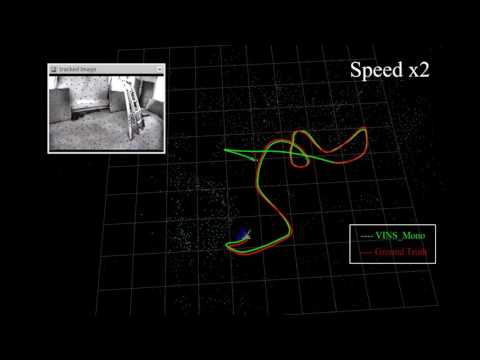

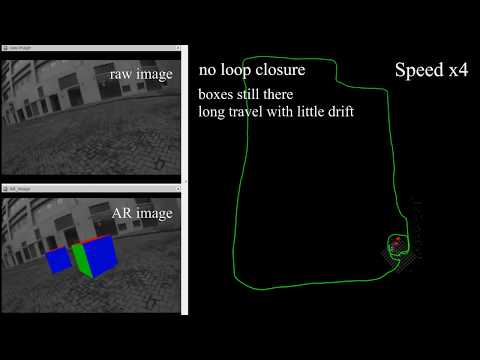

EuRoC dataset; Indoor and outdoor performance; AR application;

MAV application; Mobile implementation (Video link for mainland China friends: Video1 Video2 Video3 Video4 Video5)

Related Papers

- Online Temporal Calibration for Monocular Visual-Inertial Systems, Tong Qin, Shaojie Shen, IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2018, best student paper award. pdf

- VINS-Mono: A Robust and Versatile Monocular Visual-Inertial State Estimator, Tong Qin, Peiliang Li, Zhenfei Yang, Shaojie Shen, IEEE Transactions on Robotics. pdf

If you use VINS-Mono for your academic research, please cite at least one of our related papers. bib

1. Prerequisites

1.1 Ubuntu and ROS

Ubuntu 16.04, ROS Kinetic. See ROS Installation. Additional ROS packages:

sudo apt-get install ros-YOUR_DISTRO-cv-bridge ros-YOUR_DISTRO-tf ros-YOUR_DISTRO-message-filters ros-YOUR_DISTRO-image-transport

1.2 Ceres Solver

Follow Ceres Installation, use version 1.14.0, and remember to run sudo make install. (There are compilation issues with Ceres versions 2.0.0 and above.)

2. Build VINS-Mono on ROS

Clone the repository and catkin_make:

cd ~/catkin_ws/src

git clone https://github.com/HKUST-Aerial-Robotics/VINS-Mono.git

cd ../

catkin_make

source ~/catkin_ws/devel/setup.bash

3. Visual-Inertial Odometry and Pose Graph Reuse on Public datasets

Download EuRoC MAV Dataset. Although it contains stereo cameras, we only use one camera. The system also works with ETH-asl cla dataset. We take EuRoC as the example.

3.1 visual-inertial odometry and loop closure

3.1.1 Open three terminals and launch vins_estimator, launch rviz, and play the bag file, respectively. Take MH_01 as an example:

roslaunch vins_estimator euroc.launch

roslaunch vins_estimator vins_rviz.launch

rosbag play YOUR_PATH_TO_DATASET/MH_01_easy.bag

(If you fail to open vins_rviz.launch, just open an empty rviz, then load the config file: file -> Open Config-> YOUR_VINS_FOLDER/config/vins_rviz_config.rviz)

3.1.2 (Optional) Visualize ground truth. We wrote a naive benchmark publisher to help you visualize the ground truth. It uses a naive strategy to align VINS with the ground truth, so it is for visualization only, not for quantitative comparison in academic publications.
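The text does not say which alignment strategy the benchmark publisher uses. As an illustration of what a naive alignment can look like, the sketch below shifts the estimated trajectory so that its first position coincides with the first ground-truth position. The function name `alignToFirstPose` is hypothetical, and the approach is an assumption, not the publisher's actual method.

```cpp
#include <array>
#include <vector>

using Vec3 = std::array<double, 3>;

// Shifts every estimated position so that the first estimate coincides with
// the first ground-truth position. Translation only: rotation and scale are
// left untouched, which is why this suits display rather than evaluation.
std::vector<Vec3> alignToFirstPose(const std::vector<Vec3>& estimate,
                                   const Vec3& first_ground_truth) {
    std::vector<Vec3> aligned;
    if (estimate.empty()) return aligned;

    Vec3 offset;
    for (int k = 0; k < 3; ++k) {
        offset[k] = first_ground_truth[k] - estimate[0][k];
    }

    aligned.reserve(estimate.size());
    for (const Vec3& p : estimate) {
        Vec3 shifted = {p[0] + offset[0], p[1] + offset[1], p[2] + offset[2]};
        aligned.push_back(shifted);
    }
    return aligned;
}
```

A quantitative comparison would instead need a proper trajectory alignment over all poses, which is outside the scope of this sketch.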

roslaunch benchmark_publisher publish.launch sequence_name:=MH_05_difficult

(Green line is VINS result, red line is ground truth).

3.1.3 (Optional) You can even run EuRoC without extrinsic parameters between camera and IMU. We will calibrate them online. Replace the first command with:

roslaunch vins_estimator euroc_no_extrinsic_param.launch

That config file contains no extrinsic parameters. Wait a few seconds for the initial calibration. Sometimes you will not notice any difference because the calibration finishes quickly.

3.2 map merge

After playing MH_01 bag, you can continue playing MH_02 bag, MH_03 bag ... The system will merge them according to the loop closure.

3.3 map reuse

3.3.1 map save

Set the pose_graph_save_path in the config file (YOUR_VINS_FOLDER/config/euroc/euroc_config.yaml). After playing the MH_01 bag, type s in the vins_estimator terminal and press Enter. The current pose graph will be saved.

3.3.2 map load

Set load_previous_pose_graph to 1 before doing 3.1.1. The system will load the previous pose graph from pose_graph_save_path. Then you can play the MH_02 bag; the new sequence will be aligned to the previous pose graph.

4. AR Demo

4.1 Download the bag file, which is collected from HKUST Robotic Institute. For friends in mainland China, download from bag file.

4.2 Open three terminals, launch the ar_demo, rviz and play the bag file respectively.

roslaunch ar_demo 3dm_bag.launch

roslaunch ar_demo ar_rviz.launch

rosbag play YOUR_PATH_TO_DATASET/ar_box.bag

We put one 0.8m x 0.8m x 0.8m virtual box in front of your view.

5. Run with your device

If you are familiar with ROS and have a camera and an IMU that publish raw metric measurements on ROS topics, you can follow these steps to set up your device. For beginners with an iOS device, we highly recommend trying VINS-Mobile first, since it needs no setup.

5.1 Change the topic names in the config file to match yours. The image rate should exceed 20Hz and the IMU rate should exceed 100Hz. Both image and IMU messages should carry accurate time stamps. The IMU should report absolute acceleration values, including gravity.
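These requirements can be checked on recorded data before running the estimator. The sketch below is one possible check, not part of VINS-Mono: `averageRateHz` and `includesGravity` are hypothetical helpers, and the 9.81 m/s^2 constant and 0.5 m/s^2 tolerance are assumed values.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Average message rate in Hz from a list of increasing timestamps (seconds).
// Returns 0 when fewer than two stamps are available or the span is zero.
double averageRateHz(const std::vector<double>& stamps) {
    if (stamps.size() < 2) return 0.0;
    const double span = stamps.back() - stamps.front();
    if (span <= 0.0) return 0.0;
    return static_cast<double>(stamps.size() - 1) / span;
}

// True when the accelerometer norm of a stationary sample is close to
// gravity, i.e. the IMU reports raw acceleration with gravity included.
bool includesGravity(double ax, double ay, double az, double tolerance = 0.5) {
    const double kGravity = 9.81;  // m/s^2, assumed nominal value
    const double norm = std::sqrt(ax * ax + ay * ay + az * az);
    return std::fabs(norm - kGravity) < tolerance;
}
```

With the device at rest, an IMU whose readings have a norm near zero has had gravity removed by its driver, which does not match what section 5.1 asks for.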

5.2 Camera calibration:

We support the pinhole model and the MEI model. You can calibrate your camera with any tool you like; just write the parameters into the config file in the right format. If you use a rolling shutter camera, calibrate it carefully and make sure the reprojection error is less than 0.5 pixel.
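For readers unfamiliar with the term, reprojection error is the pixel distance between where the calibrated model predicts a point and where it was observed. The sketch below illustrates this for an undistorted pinhole model; the `Pinhole` and `Pixel` types and the function names are hypothetical, and lens distortion is deliberately ignored.

```cpp
#include <cmath>

// Pinhole intrinsics: focal lengths and principal point, all in pixels.
struct Pinhole {
    double fx, fy, cx, cy;
};

struct Pixel {
    double u, v;
};

// Projects a 3D point in the camera frame (z > 0) onto the image plane.
// Lens distortion is ignored in this sketch.
Pixel project(const Pinhole& cam, double x, double y, double z) {
    return Pixel{cam.fx * x / z + cam.cx, cam.fy * y / z + cam.cy};
}

// Euclidean distance in pixels between the projected and observed point.
double reprojectionError(const Pixel& projected, const Pixel& observed) {
    return std::hypot(projected.u - observed.u, projected.v - observed.v);
}
```

A calibration tool typically reports this error averaged over all detected calibration-target corners; that average is the number to compare against the 0.5 pixel threshold.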

5.3 Camera-IMU extrinsic parameters:

If you have seen the config files for the EuRoC and AR demos, you will have noticed that we can estimate and refine the extrinsic parameters online. If you are familiar with transformations, you can work out the rotation and position by eye or by hand measurement, then write these values into the config as the initial guess; our estimator will refine them online. If you know nothing about the camera-IMU transformation, ignore the extrinsic parameters, set estimate_extrinsic to 2, and rotate your device for a few seconds at the beginning. When the system runs successfully, we save the calibration result, which you can use as the initial values next time. An example of how to set the extrinsic parameters is in extrinsic_parameter_example.
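As a reminder of what the extrinsic parameters represent, the sketch below applies a rotation and translation to move a point from the camera frame into the IMU frame. It assumes the convention p_imu = R * p_cam + t; the `Extrinsics` type and `cameraToImu` function are hypothetical, so check the config file comments for the convention your version actually uses.

```cpp
#include <array>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<std::array<double, 3>, 3>;

// Camera-IMU extrinsics: rotation and translation taking a point expressed
// in the camera frame into the IMU (body) frame (assumed convention).
struct Extrinsics {
    Mat3 rotation;
    Vec3 translation;
};

// Computes p_imu = R * p_cam + t.
Vec3 cameraToImu(const Extrinsics& ex, const Vec3& p_cam) {
    Vec3 p_imu{};
    for (int r = 0; r < 3; ++r) {
        p_imu[r] = ex.translation[r];
        for (int c = 0; c < 3; ++c) {
            p_imu[r] += ex.rotation[r][c] * p_cam[c];
        }
    }
    return p_imu;
}
```

A hand-measured initial guess usually amounts to picking the nearest axis-aligned rotation (entries of 0, 1 and -1) and a translation in meters, then letting the online refinement correct the remainder.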

5.4 Temporal calibration: Most self-made visual-inertial sensor sets are unsynchronized. You can set estimate_td to 1 to estimate the time offset between your camera and IMU online.
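The effect of a time offset can be sketched as follows. The code assumes the sign convention t_imu = t_cam + td, which should be verified against the config file comments, and the helper names are hypothetical. It shows how the offset changes which IMU samples are associated with an image.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Image time expressed on the IMU clock, assuming t_imu = t_cam + td.
double correctedImageTime(double image_stamp, double td) {
    return image_stamp + td;
}

// Number of IMU samples at or before the corrected image time, i.e. the
// samples that belong to the interval ending at this image.
// The IMU stamps must be sorted in non-decreasing order.
std::size_t countImuUpTo(const std::vector<double>& imu_stamps,
                         double image_stamp, double td) {
    assert(std::is_sorted(imu_stamps.begin(), imu_stamps.end()));
    const double t = correctedImageTime(image_stamp, td);
    std::size_t n = 0;
    while (n < imu_stamps.size() && imu_stamps[n] <= t) ++n;
    return n;
}
```

Even an offset of a few milliseconds shifts IMU samples from one image interval to the next, which is why unsynchronized sensor sets benefit from estimating td.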

5.5 Rolling shutter: For a rolling shutter camera (carefully calibrated, reprojection error under 0.5 pixel), set rolling_shutter to 1. You should also set the rolling shutter readout time rolling_shutter_tr, which comes from the sensor datasheet (usually 0-0.05s; it is not the exposure time). Do not use a web camera; web cameras perform very poorly here.
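The readout time matters because each image row is captured at a slightly different instant. The sketch below computes the capture-time offset of a row under the assumption that rows are read top to bottom at a constant rate; `rowTimeOffset` is a hypothetical helper, not a VINS-Mono function.

```cpp
#include <cassert>

// Capture-time offset in seconds of a given image row relative to the first
// row, assuming rows are read out top to bottom at a constant rate.
double rowTimeOffset(int row, int image_height, double readout_time) {
    assert(image_height > 0 && row >= 0 && row < image_height);
    return readout_time * static_cast<double>(row) / image_height;
}
```

For a 480-row image with a readout time of 0.03 s, the middle row is captured roughly 15 ms after the top row, which is significant during fast motion.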

5.6 Other parameter settings: Details are included in the config file.

5.7 Performance on different devices:

(global shutter camera + synchronized high-end IMU, e.g. VI-Sensor) > (global shutter camera + synchronized low-end IMU) > (global shutter camera + unsynchronized high-frequency IMU) > (global shutter camera + unsynchronized low-frequency IMU) > (rolling shutter camera + unsynchronized low-frequency IMU).

6. Docker Support

To further ease the build process, we provide Docker support. A Docker environment works like a sandbox, which makes our code environment-independent. To run with Docker, first make sure ROS and Docker are installed on your machine. Then add your account to the docker group with sudo usermod -aG docker $YOUR_USER_NAME. If you get a Permission denied error, relaunch the terminal or log out and log back in. Then type:

cd ~/catkin_ws/src/VINS-Mono/docker

make build

./run.sh LAUNCH_FILE_NAME # ./run.sh euroc.launch

Note that the Docker build may take a while, depending on your network and machine. After VINS-Mono has started successfully, open another terminal and play your bag file; you should then see the result. If you need to modify the code, simply run ./run.sh LAUNCH_FILE_NAME again after your changes.

7. Acknowledgements

We use ceres solver for non-linear optimization and DBoW2 for loop detection, and a generic camera model.

8. Licence

The source code is released under GPLv3 license.

We are still working on improving the code reliability. For any technical issues, please contact Tong QIN <tong.qinATconnect.ust.hk> or Peiliang LI <pliapATconnect.ust.hk>.

For commercial inquiries, please contact Shaojie SHEN <eeshaojieATust.hk>
