Top Related Projects

The official NGINX Open Source repository.

The Cloud Native Application Proxy

Cloud-native high-performance edge/middle/service proxy

The Cloud-Native API Gateway and AI Gateway

HAProxy Load Balancer's development branch (mirror of git.haproxy.org)

Connect, secure, control, and observe services.

Quick Overview

Kong is a cloud-native, platform-agnostic API gateway. It's designed to secure, manage, and extend APIs and microservices in cloud-native environments. Kong acts as a layer between clients and your API, offering features like authentication, rate limiting, and analytics.

Pros

- Highly extensible with a wide range of plugins

- Supports multiple protocols (HTTP(S), gRPC, WebSockets)

- Can be deployed in various environments (on-premise, cloud, Kubernetes)

- High performance and low latency

Cons

- Steep learning curve for advanced configurations

- Documentation can be overwhelming for beginners

- Some features require enterprise version

- Configuration management can be complex in large deployments

Code Examples

- Basic Kong configuration in kong.conf:

database = postgres

pg_host = 127.0.0.1

pg_port = 5432

pg_user = kong

pg_password = kong_password

pg_database = kong

- Adding a service and route using Kong's Admin API:

# Add a service
curl -i -X POST http://localhost:8001/services \
  --data name=example-service \
  --data url='http://example.com'

# Add a route
curl -i -X POST http://localhost:8001/services/example-service/routes \
  --data 'paths[]=/example' \
  --data name=example-route

- Enabling a plugin (rate limiting) for a service:

curl -X POST http://localhost:8001/services/example-service/plugins \
  --data "name=rate-limiting" \
  --data "config.minute=5" \
  --data "config.policy=local"
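The same three Admin API calls can also be scripted. The following is a minimal sketch using only the Python standard library; it assumes the Admin API listens on http://localhost:8001 as in the curl examples, and the helper names `admin_post` and `build_requests` are hypothetical. The requests are built but not sent, so the script runs without a live Kong node.

```python
import urllib.parse
import urllib.request

# Assumption: default Admin API address, taken from the curl examples.
ADMIN_URL = "http://localhost:8001"


def admin_post(path, fields):
    """Build (but do not send) a form-encoded POST request for the Admin API."""
    body = urllib.parse.urlencode(fields).encode("utf-8")
    return urllib.request.Request(ADMIN_URL + path, data=body, method="POST")


def build_requests():
    """Return the service, route, and plugin requests in the order they must run."""
    return [
        admin_post("/services",
                   {"name": "example-service", "url": "http://example.com"}),
        admin_post("/services/example-service/routes",
                   {"paths[]": "/example", "name": "example-route"}),
        admin_post("/services/example-service/plugins",
                   {"name": "rate-limiting", "config.minute": 5, "config.policy": "local"}),
    ]


if __name__ == "__main__":
    for req in build_requests():
        # Replace print with urllib.request.urlopen(req) to run against a live node.
        print(req.get_method(), req.full_url, req.data.decode("utf-8"))
```

Order matters here: the route and plugin requests reference `example-service` by name, so the service has to exist first.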

Getting Started

- Install Kong:

# On Ubuntu (assumes Kong's APT repository is already configured)
sudo apt update
sudo apt install -y kong

- Set up the database:

kong migrations bootstrap

- Start Kong:

kong start

- Verify installation:

curl -i http://localhost:8001/

Kong is now running and ready to manage your APIs. You can start adding services, routes, and plugins using the Admin API or declarative configuration.
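The verification step can be scripted as well. This is a minimal sketch, assuming the Admin API root returns a JSON document that includes `version` and `hostname` fields; the function names are hypothetical. Parsing is kept separate from the network call so it can be exercised without a running node.

```python
import json
import urllib.request


def parse_node_info(body):
    """Extract a few fields from the JSON returned by the Admin API root."""
    info = json.loads(body)
    # Assumption: the root document carries "version" and "hostname" keys.
    return {"version": info.get("version"), "hostname": info.get("hostname")}


def fetch_node_info(admin_url="http://localhost:8001/", timeout=5):
    """Query a running Kong node; raises URLError if nothing is listening."""
    with urllib.request.urlopen(admin_url, timeout=timeout) as resp:
        return parse_node_info(resp.read().decode("utf-8"))
```

Calling `fetch_node_info()` after `kong start` should return a small dictionary; a connection error usually means the Admin API is not listening on port 8001.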

Competitor Comparisons

The official NGINX Open Source repository.

Pros of nginx

- Lightweight and highly efficient, consuming fewer resources

- Excellent performance for static content and reverse proxy scenarios

- Simpler configuration for basic use cases

Cons of nginx

- Limited built-in API management capabilities

- Fewer out-of-the-box features for microservices and service mesh architectures

- Requires additional modules or third-party tools for advanced functionality

Code Comparison

nginx configuration example:

http {
    server {
        listen 80;

        location / {
            proxy_pass http://backend;
        }
    }
}

Kong configuration example:

services:
- name: example-service
  url: http://backend
  routes:
  - paths:
    - /

While nginx uses a more traditional configuration syntax, Kong employs a declarative YAML format for defining services and routes. Kong's approach is often more intuitive for API-centric deployments, whereas nginx's configuration is more flexible for general-purpose web serving and proxying tasks.
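Because the declarative format is plain data, it can be generated rather than hand-written. The sketch below builds the same service and route as the Kong example above and prints it as JSON, which avoids a third-party YAML library. Two assumptions: Kong's declarative loader is understood to accept JSON as well as YAML, and a complete file is expected to carry a `_format_version` key (the value "2.1" is borrowed from another example in this document). The function name is hypothetical.

```python
import json


def declarative_config(service_name, upstream_url, paths):
    """Return a dict mirroring the Kong declarative example: one service, one route."""
    return {
        # Assumption: a full declarative file needs a format version header.
        "_format_version": "2.1",
        "services": [
            {
                "name": service_name,
                "url": upstream_url,
                "routes": [{"paths": list(paths)}],
            }
        ],
    }


if __name__ == "__main__":
    config = declarative_config("example-service", "http://backend", ["/"])
    print(json.dumps(config, indent=2))
```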

Both projects are highly regarded in their respective domains, with nginx excelling in traditional web serving scenarios and Kong offering more advanced API gateway features out of the box.

The Cloud Native Application Proxy

Pros of Traefik

- Easier configuration with automatic service discovery

- Built-in Let's Encrypt support for automatic SSL/TLS

- More lightweight, with faster performance in some scenarios

Cons of Traefik

- Less extensive plugin ecosystem compared to Kong

- Not as feature-rich for complex API management scenarios

- Steeper learning curve for advanced configurations

Code Comparison

Traefik configuration (YAML):

http:
  routers:
    my-router:
      rule: "Host(`example.com`)"
      service: my-service
  services:
    my-service:
      loadBalancer:
        servers:
        - url: "http://localhost:8080"

Kong configuration (YAML):

services:
- name: my-service
  url: http://localhost:8080
routes:
- name: my-route
  hosts:
  - example.com
  service: my-service

Both Traefik and Kong are popular API gateways and reverse proxies, but they have different strengths. Traefik excels in ease of use and automatic configuration, while Kong offers more advanced features for complex API management. The choice between them depends on specific project requirements and the level of control needed over the API gateway infrastructure.

Cloud-native high-performance edge/middle/service proxy

Pros of Envoy

- More flexible and extensible architecture, allowing for easier customization and plugin development

- Better performance and lower latency in high-traffic scenarios

- Stronger focus on observability with built-in tracing and metrics

Cons of Envoy

- Steeper learning curve and more complex configuration

- Less out-of-the-box functionality compared to Kong

- Smaller ecosystem of plugins and integrations

Code Comparison

Kong configuration example (declarative YAML; the service name is illustrative):

upstreams:
- name: backend
  targets:
  - target: backend1.example.com:80
  - target: backend2.example.com:80
services:
- name: backend-service
  host: backend
  routes:
  - paths:
    - /

Envoy configuration example:

static_resources:
  listeners:
  - address:
      socket_address:
        address: 0.0.0.0
        port_value: 80
    filter_chains:
    - filters:
      - name: envoy.filters.network.http_connection_manager
        typed_config:
          "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
          codec_type: auto
          stat_prefix: ingress_http
          route_config:
            name: local_route
            virtual_hosts:
            - name: backend
              domains:
              - "*"
              routes:
              - match:
                  prefix: "/"
                route:
                  cluster: backend_cluster
  clusters:
  - name: backend_cluster
    connect_timeout: 0.25s
    type: strict_dns
    lb_policy: round_robin
    load_assignment:
      cluster_name: backend_cluster
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address:
                address: backend1.example.com
                port_value: 80
        - endpoint:
            address:
              socket_address:
                address: backend2.example.com
                port_value: 80

The Cloud-Native API Gateway and AI Gateway

Pros of APISIX

- Lightweight and more performant, with lower latency and higher throughput

- Built-in support for service discovery and dynamic upstream

- More flexible plugin system, allowing for hot reloading of plugins

Cons of APISIX

- Smaller community and ecosystem compared to Kong

- Less extensive documentation and fewer third-party integrations

- Steeper learning curve for newcomers due to its flexibility

Code Comparison

APISIX configuration (YAML):

routes:
  -
    uri: /hello
    upstream:
      type: roundrobin
      nodes:
        "127.0.0.1:1980": 1

Kong configuration (YAML):

services:
- name: example-service
  url: http://example.com
  routes:
  - paths:
    - /example

Both APISIX and Kong offer powerful API gateway capabilities, but they differ in their approach and feature set. APISIX focuses on performance and flexibility, while Kong provides a more mature ecosystem with extensive documentation and integrations. The choice between the two depends on specific project requirements, team expertise, and scalability needs.

HAProxy Load Balancer's development branch (mirror of git.haproxy.org)

Pros of HAProxy

- Lightweight and efficient, with lower resource consumption

- Excellent for high-performance TCP and HTTP load balancing

- Mature project with a long history of stability and reliability

Cons of HAProxy

- Less feature-rich compared to Kong, especially for API gateway functionalities

- Configuration can be more complex and less user-friendly

- Limited built-in support for modern microservices patterns

Code Comparison

HAProxy configuration example:

frontend http_front
    bind *:80
    default_backend http_back

backend http_back
    balance roundrobin
    server server1 127.0.0.1:8000 check
    server server2 127.0.0.1:8001 check

Kong custom plugin example (Lua):

local kong = kong

kong.service.request.set_header("X-Custom-Header", "My Value")

kong.log("This is a custom plugin for Kong")

HAProxy focuses on load balancing configuration, while Kong allows for more complex API gateway logic through Lua plugins. HAProxy's configuration is typically done in a single file, whereas Kong uses a combination of configuration files and database-stored settings. Both projects are highly regarded in their respective domains, with HAProxy excelling in pure load balancing scenarios and Kong offering more extensive API management features.
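To make the `balance roundrobin` and `check` directives in the HAProxy example more concrete, here is a simplified sketch of round-robin selection that skips servers marked as down. It is an illustration of the general technique only, not HAProxy's or Kong's implementation: it ignores weights, and health state is set by hand rather than by active checks. The class and method names are hypothetical.

```python
class RoundRobin:
    """Cycle through servers in order, skipping any that are marked down."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.healthy = set(self.servers)
        self._next = 0  # index of the next candidate server

    def mark_down(self, server):
        self.healthy.discard(server)

    def mark_up(self, server):
        if server in self.servers:
            self.healthy.add(server)

    def pick(self):
        # Try each server at most once per call, starting from the cursor.
        for _ in range(len(self.servers)):
            server = self.servers[self._next]
            self._next = (self._next + 1) % len(self.servers)
            if server in self.healthy:
                return server
        raise RuntimeError("no healthy servers available")


if __name__ == "__main__":
    lb = RoundRobin(["127.0.0.1:8000", "127.0.0.1:8001"])
    print([lb.pick() for _ in range(4)])
```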

Connect, secure, control, and observe services.

Pros of Istio

- More comprehensive service mesh solution with advanced traffic management, security, and observability features

- Better suited for complex microservices architectures and multi-cluster deployments

- Stronger integration with Kubernetes and cloud-native ecosystems

Cons of Istio

- Steeper learning curve and more complex setup compared to Kong

- Higher resource consumption and potential performance overhead

- Less flexibility for non-Kubernetes environments

Code Comparison

Istio (VirtualService):

apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: my-service
spec:
  hosts:
  - my-service
  http:
  - route:
    - destination:
        host: my-service
        subset: v1

Kong (declarative configuration):

_format_version: "2.1"
services:
- name: my-service
  url: http://my-service
  routes:
  - name: my-route
    paths:
    - /my-service

Both examples show basic routing configuration, but Istio's approach is more Kubernetes-native and offers finer-grained control over traffic management. Kong's configuration is simpler and more straightforward for basic use cases.

Istio provides a more comprehensive service mesh solution with advanced features, while Kong offers a lighter-weight API gateway that can be easier to set up and manage for simpler architectures. The choice between the two depends on the specific requirements and complexity of the project.

README

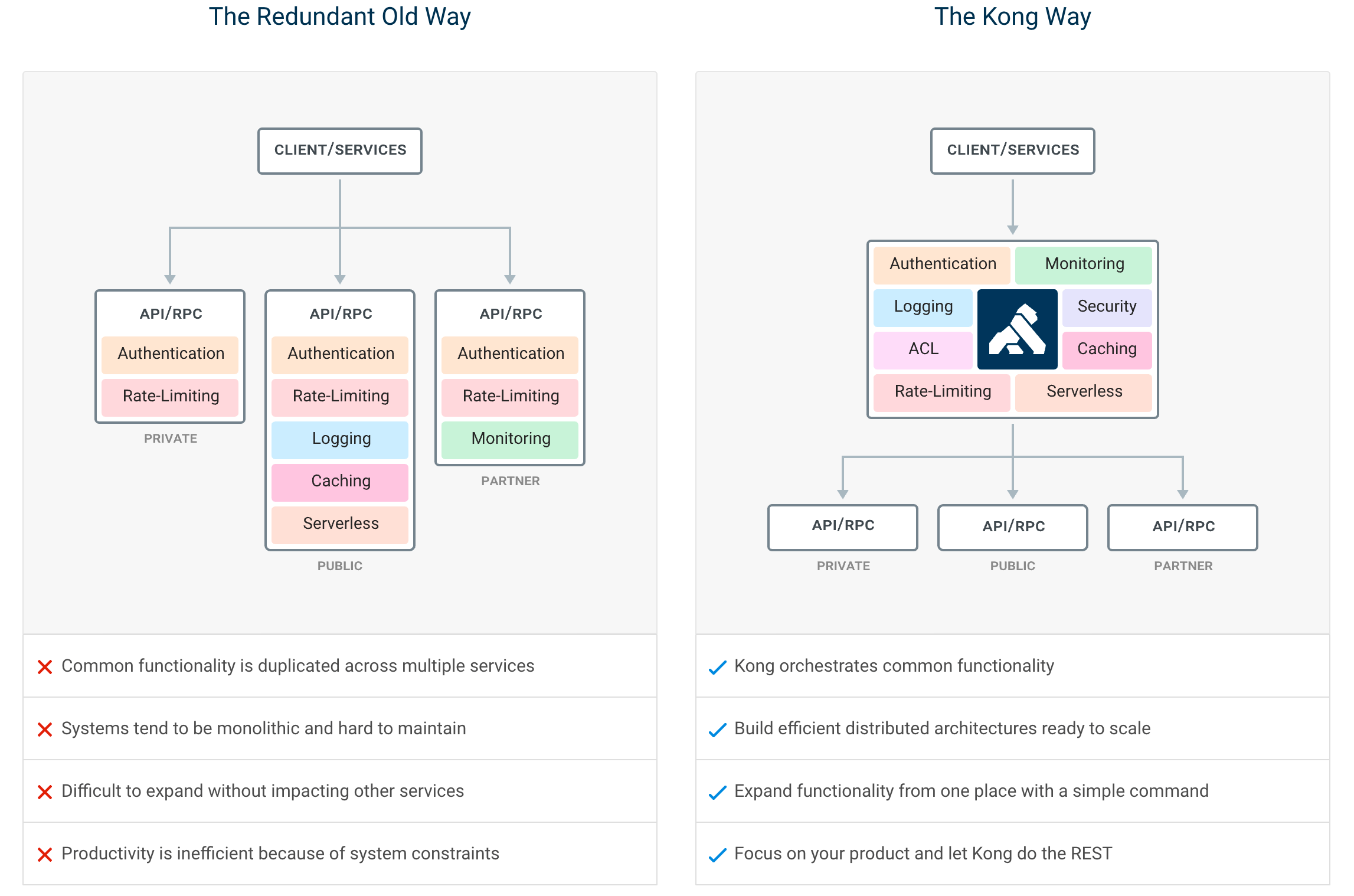

Kong or Kong API Gateway is a cloud-native, platform-agnostic, scalable API Gateway distinguished for its high performance and extensibility via plugins. It also provides advanced AI capabilities with multi-LLM support.

By providing functionality for proxying, routing, load balancing, health checking, authentication (and more), Kong serves as the central layer for orchestrating microservices or conventional API traffic with ease.

Kong runs natively on Kubernetes thanks to its official Kubernetes Ingress Controller.

Installation | Documentation | Discussions | Forum | Blog | Builds | Cloud Hosted Kong

Getting Started

If you prefer to use a cloud-hosted Kong, you can sign up for a free trial of Kong Konnect and get started in minutes. If not, you can follow the instructions below to get started with Kong on your own infrastructure.

Let's test drive Kong by adding authentication to an API in under 5 minutes.

We suggest using the docker-compose distribution via the instructions below, but there is also a docker installation procedure if you'd prefer to run the Kong API Gateway in DB-less mode.

Whether you're running in the cloud, on bare metal, or using containers, you can find every supported distribution on our official installation page.

- To start, clone the Docker repository and navigate to the compose folder.

$ git clone https://github.com/Kong/docker-kong

$ cd docker-kong/compose/

- Start the Gateway stack using:

$ KONG_DATABASE=postgres docker-compose --profile database up

The Gateway is now available on the following ports on localhost:

- :8000 - send traffic to your service via Kong

- :8001 - configure Kong using the Admin API or via decK

- :8002 - access Kong's management web UI (Kong Manager)

Next, follow the quick start guide to tour the Gateway features.

Features

By centralizing common API functionality across all your organization's services, the Kong API Gateway creates more freedom for engineering teams to focus on the challenges that matter most.

The top Kong features include:

- Advanced routing, load balancing, health checking - all configurable via a RESTful admin API or declarative configuration.

- Authentication and authorization for APIs using methods like JWT, basic auth, OAuth, ACLs and more.

- Proxy, SSL/TLS termination, and connectivity support for L4 or L7 traffic.

- Plugins for enforcing traffic controls, rate limiting, request/response transformations, logging, and monitoring, plus a plugin developer hub.

- Plugins for AI traffic to support multi-LLM implementations and no-code AI use cases, with advanced AI prompt engineering, AI observability, AI security and more.

- Sophisticated deployment models like Declarative Databaseless Deployment and Hybrid Deployment (control plane/data plane separation) without any vendor lock-in.

- Native ingress controller support for serving Kubernetes.

Plugin Hub

Plugins provide advanced functionality that extends the use of the Gateway. Many of the Kong Inc. and community-developed plugins like AWS Lambda, Correlation ID, and Response Transformer are showcased at the Plugin Hub.

Contribute to the Plugin Hub and ensure your next innovative idea is published and available to the broader community!

Contributing

We ❤️ pull requests, and we're continually working hard to make it as easy as possible for developers to contribute. Before beginning development with the Kong API Gateway, please familiarize yourself with the following developer resources:

- Community Pledge (COMMUNITY_PLEDGE.md) for our pledge to interact with you, the open source community.

- Contributor Guide (CONTRIBUTING.md) to learn about how to contribute to Kong.

- Development Guide (DEVELOPER.md): Setting up your development environment.

- CODE_OF_CONDUCT and COPYRIGHT

Use the Plugin Development Guide for building new and creative plugins, or browse the online version of Kong's source code documentation in the Plugin Development Kit (PDK) Reference. Developers can build plugins in Lua, Go or JavaScript.

Releases

Please see the Changelog for more details about a given release. The SemVer Specification is followed when versioning Gateway releases.

Join the Community

- Check out the docs

- Join the Kong discussions forum

- Join the Kong discussions at the Kong Nation forum: https://discuss.konghq.com/

- Join our Community Slack

- Read up on the latest happenings at our blog

- Follow us on X

- Subscribe to our YouTube channel

- Visit our homepage to learn more

Konnect Cloud

Kong Inc. offers commercial subscriptions that enhance the Kong API Gateway in a variety of ways. Customers of Kong's Konnect Cloud subscription take advantage of additional gateway functionality, commercial support, and access to Kong's managed (SaaS) control plane platform. The Konnect Cloud platform features include real-time analytics, a service catalog, developer portals, and so much more! Get started with Konnect Cloud.

License

Copyright 2016-2025 Kong Inc.

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

https://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.
