PaddleOCR

PaddleOCR

Awesome multilingual OCR and Document Parsing toolkits based on PaddlePaddle (practical ultra lightweight OCR system, support 80+ languages recognition, provide data annotation and synthesis tools, support training and deployment among server, mobile, embedded and IoT devices)

Top Related Projects

Large-scale Self-supervised Pre-training Across Tasks, Languages, and Modalities

Tesseract Open Source OCR Engine (main repository)

Ready-to-use OCR with 80+ supported languages and all popular writing scripts including Latin, Chinese, Arabic, Devanagari, Cyrillic and etc.

Text recognition (optical character recognition) with deep learning methods, ICCV 2019

Mask R-CNN for object detection and instance segmentation on Keras and TensorFlow

Quick Overview

PaddleOCR is an open-source Optical Character Recognition (OCR) toolkit developed by Baidu's PaddlePaddle team. It provides a comprehensive set of tools for text detection, recognition, and layout analysis, supporting multiple languages and offering both lightweight and accurate models for various OCR tasks.

Pros

- Comprehensive OCR solution with support for multiple languages and tasks

- Offers both lightweight models for mobile devices and high-accuracy models for server-side applications

- Active development and frequent updates from the PaddlePaddle team

- Extensive documentation and examples for easy integration

Cons

- Primarily based on the PaddlePaddle deep learning framework, which may have a steeper learning curve for those familiar with other frameworks

- Some advanced features may require more computational resources

- Documentation is sometimes not fully up-to-date with the latest features

- Limited community support compared to some other popular OCR libraries

Code Examples

- Basic text detection and recognition:

from paddleocr import PaddleOCR

ocr = PaddleOCR(use_angle_cls=True, lang='en')

result = ocr.ocr('image.jpg')

for line in result:

print(line)

- Extracting text from a specific region of an image:

import cv2

from paddleocr import PaddleOCR

image = cv2.imread('image.jpg')

roi = image[100:300, 200:400] # Define region of interest

ocr = PaddleOCR(use_angle_cls=True, lang='en')

result = ocr.ocr(roi)

for line in result:

print(line[1][0]) # Print recognized text

- Using a custom dictionary for text recognition:

from paddleocr import PaddleOCR

custom_dict = 'path/to/custom_dict.txt'

ocr = PaddleOCR(use_angle_cls=True, lang='en', rec_char_dict_path=custom_dict)

result = ocr.ocr('image.jpg')

for line in result:

print(line)

Getting Started

To get started with PaddleOCR:

- Install PaddleOCR:

pip install paddleocr

- Use PaddleOCR in your Python script:

from paddleocr import PaddleOCR

# Initialize PaddleOCR

ocr = PaddleOCR(use_angle_cls=True, lang='en')

# Perform OCR on an image

result = ocr.ocr('path/to/your/image.jpg')

# Print the results

for line in result:

print(line)

For more advanced usage and customization options, refer to the official documentation on the PaddleOCR GitHub repository.

Competitor Comparisons

Large-scale Self-supervised Pre-training Across Tasks, Languages, and Modalities

Pros of UniLM

- Broader scope: Supports a wide range of natural language processing tasks beyond OCR

- More advanced language understanding: Utilizes large-scale pre-trained models for improved performance

- Active research focus: Regularly updated with cutting-edge NLP techniques and models

Cons of UniLM

- Less specialized for OCR: May not offer as many OCR-specific features and optimizations

- Potentially more complex to use: Broader scope may require more setup and configuration for OCR tasks

- Larger resource requirements: Pre-trained models can be computationally intensive

Code Comparison

PaddleOCR:

from paddleocr import PaddleOCR

ocr = PaddleOCR(use_angle_cls=True, lang='en')

result = ocr.ocr('image.jpg')

UniLM (using LayoutLM for OCR):

from transformers import LayoutLMForTokenClassification, LayoutLMTokenizer

model = LayoutLMForTokenClassification.from_pretrained("microsoft/layoutlm-base-uncased")

tokenizer = LayoutLMTokenizer.from_pretrained("microsoft/layoutlm-base-uncased")

Note: The code snippets demonstrate basic setup and may not reflect the full complexity of using each library for OCR tasks.

Tesseract Open Source OCR Engine (main repository)

Pros of Tesseract

- Mature and widely adopted OCR engine with a long history

- Supports a wide range of languages and scripts

- Highly customizable with extensive documentation

Cons of Tesseract

- Generally slower performance compared to modern deep learning-based approaches

- May struggle with complex layouts or low-quality images

- Requires more manual configuration for optimal results

Code Comparison

Tesseract

import pytesseract

from PIL import Image

image = Image.open('image.png')

text = pytesseract.image_to_string(image)

print(text)

PaddleOCR

from paddleocr import PaddleOCR

ocr = PaddleOCR(use_angle_cls=True, lang='en')

result = ocr.ocr('image.png', cls=True)

for line in result:

print(line[1][0])

PaddleOCR offers a more streamlined API for OCR tasks, with built-in support for text detection, recognition, and angle classification. Tesseract, while powerful, often requires additional preprocessing steps for optimal results. PaddleOCR's deep learning approach generally provides better performance on complex layouts and low-quality images, but Tesseract's extensive language support and customization options make it a versatile choice for specific use cases.

Ready-to-use OCR with 80+ supported languages and all popular writing scripts including Latin, Chinese, Arabic, Devanagari, Cyrillic and etc.

Pros of EasyOCR

- Simpler installation process and easier to use for beginners

- Supports a wider range of languages (80+) out of the box

- Better documentation and examples for quick start

Cons of EasyOCR

- Generally slower inference speed compared to PaddleOCR

- Less flexibility and customization options for advanced users

- Smaller community and fewer pre-trained models available

Code Comparison

EasyOCR:

import easyocr

reader = easyocr.Reader(['en'])

result = reader.readtext('image.jpg')

PaddleOCR:

from paddleocr import PaddleOCR

ocr = PaddleOCR(use_angle_cls=True, lang='en')

result = ocr.ocr('image.jpg')

Both libraries offer simple APIs for OCR tasks, but PaddleOCR provides more options for fine-tuning and optimization. EasyOCR's code is more straightforward, making it easier for beginners to get started quickly. PaddleOCR's approach allows for more advanced configurations, which can be beneficial for complex OCR tasks or when performance optimization is crucial.

Text recognition (optical character recognition) with deep learning methods, ICCV 2019

Pros of deep-text-recognition-benchmark

- Focuses specifically on text recognition, providing a comprehensive benchmark for various models

- Implements multiple state-of-the-art architectures, allowing for easy comparison and experimentation

- Offers a modular design, making it easier to swap components and test different combinations

Cons of deep-text-recognition-benchmark

- Limited to text recognition, while PaddleOCR offers a more comprehensive OCR pipeline

- Less extensive documentation and fewer pre-trained models compared to PaddleOCR

- Smaller community and fewer updates, potentially leading to slower development and support

Code Comparison

deep-text-recognition-benchmark:

model = Model(opt)

converter = AttnLabelConverter(opt.character)

criterion = torch.nn.CrossEntropyLoss(ignore_index=0).to(device)

PaddleOCR:

model = build_model(config['Architecture'])

loss_class = build_loss(config['Loss'])

optimizer = build_optimizer(config['Optimizer'], model)

Both repositories use similar approaches for model initialization and loss function definition. However, PaddleOCR's code structure is more modular and configurable, allowing for easier customization of the OCR pipeline.

Mask R-CNN for object detection and instance segmentation on Keras and TensorFlow

Pros of Mask_RCNN

- Specialized in instance segmentation, offering precise object detection and segmentation

- Well-documented with extensive tutorials and examples

- Supports both TensorFlow 1.x and 2.x

Cons of Mask_RCNN

- Limited to object detection and segmentation tasks

- Less frequent updates and maintenance compared to PaddleOCR

- Steeper learning curve for beginners

Code Comparison

Mask_RCNN:

import mrcnn.model as modellib

from mrcnn import utils

class InferenceConfig(coco.CocoConfig):

GPU_COUNT = 1

IMAGES_PER_GPU = 1

model = modellib.MaskRCNN(mode="inference", config=InferenceConfig(), model_dir=MODEL_DIR)

PaddleOCR:

from paddleocr import PaddleOCR

ocr = PaddleOCR(use_angle_cls=True, lang='en')

result = ocr.ocr('image.jpg', cls=True)

The code snippets highlight the difference in focus between the two repositories. Mask_RCNN requires more setup for object detection and segmentation, while PaddleOCR offers a simpler interface for OCR tasks.

Convert  designs to code with AI

designs to code with AI

Introducing Visual Copilot: A new AI model to turn Figma designs to high quality code using your components.

Try Visual CopilotREADME

English | ç®ä½ä¸æ | ç¹é«ä¸æ | æ¥æ¬èª | íêµì´ | Français | Ð ÑÑÑкий | Español | اÙعربÙØ©

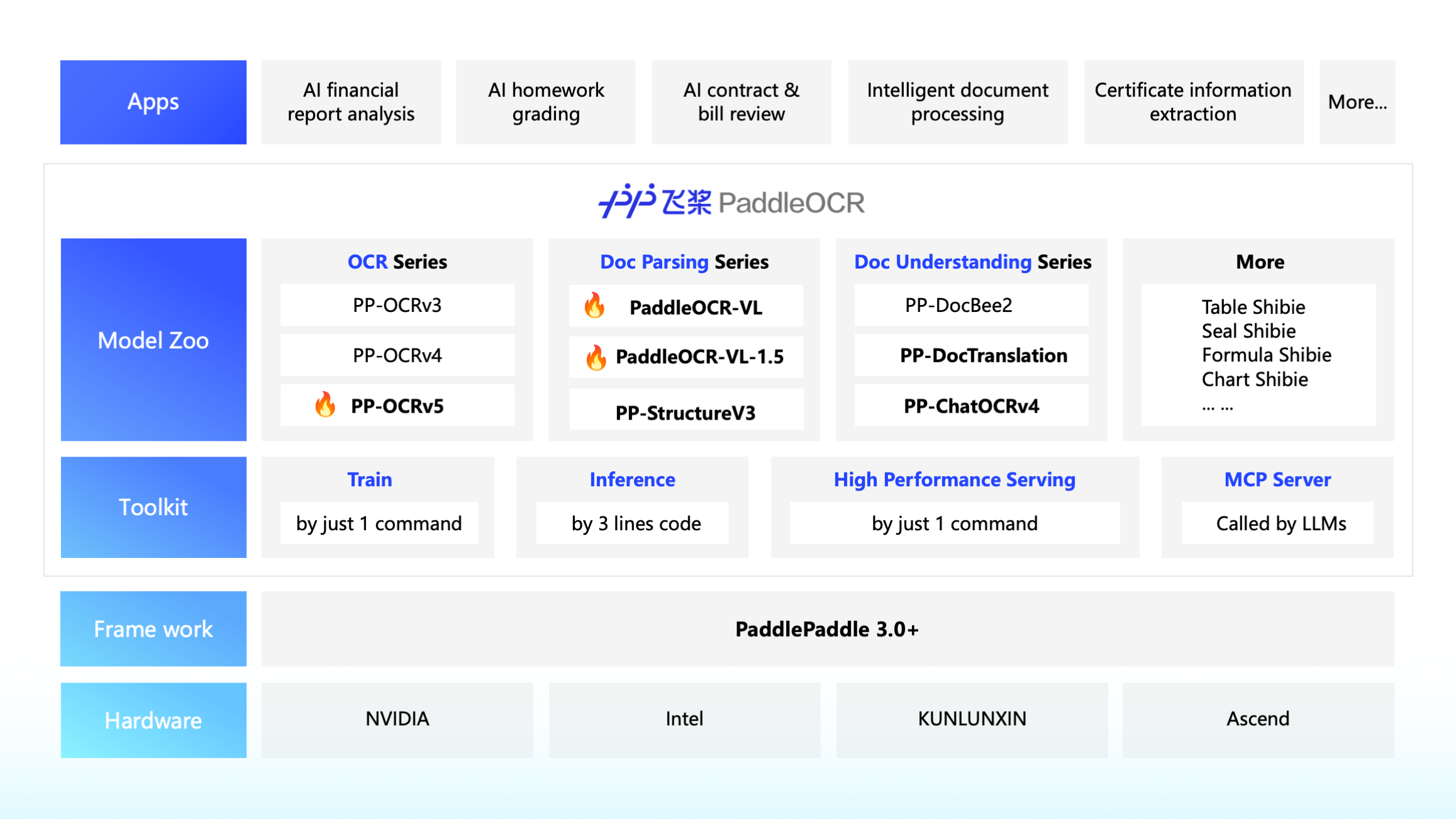

PaddleOCR is an industry-leading, production-ready OCR and document AI engine, offering end-to-end solutions from text extraction to intelligent document understanding

PaddleOCR

[!TIP] PaddleOCR now provides an MCP server that supports integration with Agent applications like Claude Desktop. For details, please refer to PaddleOCR MCP Server.

The PaddleOCR 3.0 Technical Report is now available. See details at: PaddleOCR 3.0 Technical Report

PaddleOCR converts documents and images into structured, AI-friendly data (like JSON and Markdown) with industry-leading accuracyâpowering AI applications for everyone from indie developers and startups to large enterprises worldwide. With over 50,000 stars and deep integration into leading projects like MinerU, RAGFlow, and OmniParser, PaddleOCR has become the premier solution for developers building intelligent document applications in the AI era.

PaddleOCR 3.0 Core Features

-

PP-OCRv5 â Universal Scene Text Recognition

Single model supports five text types (Simplified Chinese, Traditional Chinese, English, Japanese, and Pinyin) with 13% accuracy improvement. Solves multilingual mixed document recognition challenges. -

PP-StructureV3 â Complex Document Parsing

Intelligently converts complex PDFs and document images into Markdown and JSON files that preserve original structure. Outperforms numerous commercial solutions in public benchmarks. Perfectly maintains document layout and hierarchical structure. -

PP-ChatOCRv4 â Intelligent Information Extraction

Natively integrates ERNIE 4.5 to precisely extract key information from massive documents, with 15% accuracy improvement over previous generation. Makes documents "understand" your questions and provide accurate answers.

In addition to providing an outstanding model library, PaddleOCR 3.0 also offers user-friendly tools covering model training, inference, and service deployment, so developers can rapidly bring AI applications to production.

Special Note: PaddleOCR 3.x introduces several significant interface changes. Old code written based on PaddleOCR 2.x is likely incompatible with PaddleOCR 3.x. Please ensure that the documentation you are reading matches the version of PaddleOCR you are using. This document explains the reasons for the upgrade and the major changes from PaddleOCR 2.x to 3.x.

ð£ Recent updates

ð¥ð¥2025.08.21: Release of PaddleOCR 3.2.0, includes:

-

Significant Model Additions:

- Introduced training, inference, and deployment for PP-OCRv5 recognition models in English, Thai, and Greek. The PP-OCRv5 English model delivers an 11% improvement in English scenarios compared to the main PP-OCRv5 model, with the Thai and Greek recognition models achieving accuracies of 82.68% and 89.28%, respectively.

-

Deployment Capability Upgrades:

- Full support for PaddlePaddle framework versions 3.1.0 and 3.1.1.

- Comprehensive upgrade of the PP-OCRv5 C++ local deployment solution, now supporting both Linux and Windows, with feature parity and identical accuracy to the Python implementation.

- High-performance inference now supports CUDA 12, and inference can be performed using either the Paddle Inference or ONNX Runtime backends.

- The high-stability service-oriented deployment solution is now fully open-sourced, allowing users to customize Docker images and SDKs as required.

- The high-stability service-oriented deployment solution also supports invocation via manually constructed HTTP requests, enabling client-side code development in any programming language.

-

Benchmark Support:

- All production lines now support fine-grained benchmarking, enabling measurement of end-to-end inference time as well as per-layer and per-module latency data to assist with performance analysis. Here's how to set up and use the benchmark feature.

- Documentation has been updated to include key metrics for commonly used configurations on mainstream hardware, such as inference latency and memory usage, providing deployment references for users.

-

Bug Fixes:

- Resolved the issue of failed log saving during model training.

- Upgraded the data augmentation component for formula models for compatibility with newer versions of the albumentations dependency, and fixed deadlock warnings when using the tokenizers package in multi-process scenarios.

- Fixed inconsistencies in switch behaviors (e.g.,

use_chart_parsing) in the PP-StructureV3 configuration files compared to other pipelines.

-

Other Enhancements:

- Separated core and optional dependencies. Only minimal core dependencies are required for basic text recognition; additional dependencies for document parsing and information extraction can be installed as needed.

- Enabled support for NVIDIA RTX 50 series graphics cards on Windows; users can refer to the installation guide for the corresponding PaddlePaddle framework versions.

- PP-OCR series models now support returning single-character coordinates.

- Added AIStudio, ModelScope, and other model download sources, allowing users to specify the source for model downloads.

- Added support for chart-to-table conversion via the PP-Chart2Table module.

- Optimized documentation descriptions to improve usability.

2025.08.15: PaddleOCR 3.1.1 Released

-

Bug Fixes:

- Added the missing methods

save_vector,save_visual_info_list,load_vector, andload_visual_info_listin thePP-ChatOCRv4class. - Added the missing parameters

glossaryandllm_request_intervalto thetranslatemethod in thePPDocTranslationclass.

- Added the missing methods

-

Documentation Improvements:

- Added a demo to the MCP documentation.

- Added information about the PaddlePaddle and PaddleOCR version used for performance metrics testing in the documentation.

- Fixed errors and omissions in the production line document translation.

-

Others:

- Changed the MCP server dependency to use the pure Python library

puremagicinstead ofpython-magicto reduce installation issues. - Retested PP-OCRv5 performance metrics with PaddleOCR version 3.1.0 and updated the documentation.

- Changed the MCP server dependency to use the pure Python library

2025.06.29: PaddleOCR 3.1.0 Released

-

Key Models and Pipelines:

- Added PP-OCRv5 Multilingual Text Recognition Model, which supports the training and inference process for text recognition models in 37 languages, including French, Spanish, Portuguese, Russian, Korean, etc. Average accuracy improved by over 30%. Details

- Upgraded the PP-Chart2Table model in PP-StructureV3, further enhancing the capability of converting charts to tables. On internal custom evaluation sets, the metric (RMS-F1) increased by 9.36 percentage points (71.24% -> 80.60%).

- Newly launched document translation pipeline, PP-DocTranslation, based on PP-StructureV3 and ERNIE 4.5, which supports the translation of Markdown format documents, various complex-layout PDF documents, and document images, with the results saved as Markdown format documents. Details

-

New MCP server: Details

- Supports both OCR and PP-StructureV3 pipelines.

- Supports three working modes: local Python library, AIStudio Community Cloud Service, and self-hosted service.

- Supports invoking local services via stdio and remote services via Streamable HTTP.

-

Documentation Optimization: Improved the descriptions in some user guides for a smoother reading experience.

2025.06.26: PaddleOCR 3.0.3 Released

- Bug Fix: Resolved the issue where the `enable_mkldnn` parameter was not effective, restoring the default behavior of using MKL-DNN for CPU inference.2025.06.19: PaddleOCR 3.0.2 Released

- **New Features:**-

The default download source has been changed from

BOStoHuggingFace. Users can also change the environment variablePADDLE_PDX_MODEL_SOURCEtoBOSto set the model download source back to Baidu Object Storage (BOS). -

Added service invocation examples for six languagesâC++, Java, Go, C#, Node.js, and PHPâfor pipelines like PP-OCRv5, PP-StructureV3, and PP-ChatOCRv4.

-

Improved the layout partition sorting algorithm in the PP-StructureV3 pipeline, enhancing the sorting logic for complex vertical layouts to deliver better results.

-

Enhanced model selection logic: when a language is specified but a model version is not, the system will automatically select the latest model version supporting that language.

-

Set a default upper limit for MKL-DNN cache size to prevent unlimited growth, while also allowing users to configure cache capacity.

-

Updated default configurations for high-performance inference to support Paddle MKL-DNN acceleration and optimized the logic for automatic configuration selection for smarter choices.

-

Adjusted the logic for obtaining the default device to consider the actual support for computing devices by the installed Paddle framework, making program behavior more intuitive.

-

Added Android example for PP-OCRv5. Details.

-

Bug Fixes:

- Fixed an issue with some CLI parameters in PP-StructureV3 not taking effect.

- Resolved an issue where

export_paddlex_config_to_yamlwould not function correctly in certain cases. - Corrected the discrepancy between the actual behavior of

save_pathand its documentation description. - Fixed potential multithreading errors when using MKL-DNN in basic service deployment.

- Corrected channel order errors in image preprocessing for the Latex-OCR model.

- Fixed channel order errors in saving visualized images within the text recognition module.

- Resolved channel order errors in visualized table results within PP-StructureV3 pipeline.

- Fixed an overflow issue in the calculation of

overlap_ratiounder extremely special circumstances in the PP-StructureV3 pipeline.

-

Documentation Improvements:

- Updated the description of the

enable_mkldnnparameter in the documentation to accurately reflect the program's actual behavior. - Fixed errors in the documentation regarding the

langandocr_versionparameters. - Added instructions for exporting pipeline configuration files via CLI.

- Fixed missing columns in the performance data table for PP-OCRv5.

- Refined benchmark metrics for PP-StructureV3 across different configurations.

- Updated the description of the

-

Others:

- Relaxed version restrictions on dependencies like numpy and pandas, restoring support for Python 3.12.

History Log

2025.06.05: PaddleOCR 3.0.1 Released, includes:

- Optimisation of certain models and model configurations:

- Updated the default model configuration for PP-OCRv5, changing both detection and recognition from mobile to server models. To improve default performance in most scenarios, the parameter

limit_side_lenin the configuration has been changed from 736 to 64. - Added a new text line orientation classification model

PP-LCNet_x1_0_textline_oriwith an accuracy of 99.42%. The default text line orientation classifier for OCR, PP-StructureV3, and PP-ChatOCRv4 pipelines has been updated to this model. - Optimized the text line orientation classification model

PP-LCNet_x0_25_textline_ori, improving accuracy by 3.3 percentage points to a current accuracy of 98.85%.

- Updated the default model configuration for PP-OCRv5, changing both detection and recognition from mobile to server models. To improve default performance in most scenarios, the parameter

- Optimizations and fixes for some issues in version 3.0.0, details

ð¥ð¥2025.05.20: Official Release of PaddleOCR v3.0, including:

-

PP-OCRv5: High-Accuracy Text Recognition Model for All Scenarios - Instant Text from Images/PDFs.

- ð Single-model support for five text types - Seamlessly process Simplified Chinese, Traditional Chinese, Simplified Chinese Pinyin, English and Japanese within a single model.

- âï¸ Improved handwriting recognition: Significantly better at complex cursive scripts and non-standard handwriting.

- ð¯ 13-point accuracy gain over PP-OCRv4, achieving state-of-the-art performance across a variety of real-world scenarios.

-

PP-StructureV3: General-Purpose Document Parsing â Unleash SOTA Images/PDFs Parsing for Real-World Scenarios!

- 𧮠High-Accuracy multi-scene PDF parsing, leading both open- and closed-source solutions on the OmniDocBench benchmark.

- ð§ Specialized capabilities include seal recognition, chart-to-table conversion, table recognition with nested formulas/images, vertical text document parsing, and complex table structure analysis.

-

PP-ChatOCRv4: Intelligent Document Understanding â Extract Key Information, not just text from Images/PDFs.

- ð¥ 15-point accuracy gain in key-information extraction on PDF/PNG/JPG files over the previous generation.

- ð» Native support for ERNIE 4.5, with compatibility for large-model deployments via PaddleNLP, Ollama, vLLM, and more.

- ð¤ Integrated PP-DocBee2, enabling extraction and understanding of printed text, handwriting, seals, tables, charts, and other common elements in complex documents.

â¡ Quick Start

1. Run online demo

2. Installation

Install PaddlePaddle refer to Installation Guide, after then, install the PaddleOCR toolkit.

# If you only want to use the basic text recognition feature (returns text position coordinates and content), including the PP-OCR series

python -m pip install paddleocr

# If you want to use all features such as document parsing, document understanding, document translation, key information extraction, etc.

# python -m pip install "paddleocr[all]"

Starting from version 3.2.0, in addition to the all dependency group demonstrated above, PaddleOCR also supports installing partial optional features by specifying other dependency groups. All dependency groups provided by PaddleOCR are as follows:

| Dependency Group Name | Corresponding Functionality |

|---|---|

doc-parser | Document parsing: can be used to extract layout elements such as tables, formulas, stamps, images, etc. from documents; includes models like PP-StructureV3 |

ie | Information extraction: can be used to extract key information from documents, such as names, dates, addresses, amounts, etc.; includes models like PP-ChatOCRv4 |

trans | Document translation: can be used to translate documents from one language to another; includes models like PP-DocTranslation |

all | Complete functionality |

3. Run inference by CLI

# Run PP-OCRv5 inference

paddleocr ocr -i https://paddle-model-ecology.bj.bcebos.com/paddlex/imgs/demo_image/general_ocr_002.png --use_doc_orientation_classify False --use_doc_unwarping False --use_textline_orientation False

# Run PP-StructureV3 inference

paddleocr pp_structurev3 -i https://paddle-model-ecology.bj.bcebos.com/paddlex/imgs/demo_image/pp_structure_v3_demo.png --use_doc_orientation_classify False --use_doc_unwarping False

# Get the Qianfan API Key at first, and then run PP-ChatOCRv4 inference

paddleocr pp_chatocrv4_doc -i https://paddle-model-ecology.bj.bcebos.com/paddlex/imgs/demo_image/vehicle_certificate-1.png -k 驾驶室åä¹äººæ° --qianfan_api_key your_api_key --use_doc_orientation_classify False --use_doc_unwarping False

# Get more information about "paddleocr ocr"

paddleocr ocr --help

4. Run inference by API

4.1 PP-OCRv5 Example

# Initialize PaddleOCR instance

from paddleocr import PaddleOCR

ocr = PaddleOCR(

use_doc_orientation_classify=False,

use_doc_unwarping=False,

use_textline_orientation=False)

# Run OCR inference on a sample image

result = ocr.predict(

input="https://paddle-model-ecology.bj.bcebos.com/paddlex/imgs/demo_image/general_ocr_002.png")

# Visualize the results and save the JSON results

for res in result:

res.print()

res.save_to_img("output")

res.save_to_json("output")

4.2 PP-StructureV3 Example

from pathlib import Path

from paddleocr import PPStructureV3

pipeline = PPStructureV3(

use_doc_orientation_classify=False,

use_doc_unwarping=False

)

# For Image

output = pipeline.predict(

input="https://paddle-model-ecology.bj.bcebos.com/paddlex/imgs/demo_image/pp_structure_v3_demo.png",

)

# Visualize the results and save the JSON results

for res in output:

res.print()

res.save_to_json(save_path="output")

res.save_to_markdown(save_path="output")

4.3 PP-ChatOCRv4 Example

from paddleocr import PPChatOCRv4Doc

chat_bot_config = {

"module_name": "chat_bot",

"model_name": "ernie-3.5-8k",

"base_url": "https://qianfan.baidubce.com/v2",

"api_type": "openai",

"api_key": "api_key", # your api_key

}

retriever_config = {

"module_name": "retriever",

"model_name": "embedding-v1",

"base_url": "https://qianfan.baidubce.com/v2",

"api_type": "qianfan",

"api_key": "api_key", # your api_key

}

pipeline = PPChatOCRv4Doc(

use_doc_orientation_classify=False,

use_doc_unwarping=False

)

visual_predict_res = pipeline.visual_predict(

input="https://paddle-model-ecology.bj.bcebos.com/paddlex/imgs/demo_image/vehicle_certificate-1.png",

use_common_ocr=True,

use_seal_recognition=True,

use_table_recognition=True,

)

mllm_predict_info = None

use_mllm = False

# If a multimodal large model is used, the local mllm service needs to be started. You can refer to the documentation: https://github.com/PaddlePaddle/PaddleX/blob/release/3.0/docs/pipeline_usage/tutorials/vlm_pipelines/doc_understanding.en.md performs deployment and updates the mllm_chat_bot_config configuration.

if use_mllm:

mllm_chat_bot_config = {

"module_name": "chat_bot",

"model_name": "PP-DocBee",

"base_url": "http://127.0.0.1:8080/", # your local mllm service url

"api_type": "openai",

"api_key": "api_key", # your api_key

}

mllm_predict_res = pipeline.mllm_pred(

input="https://paddle-model-ecology.bj.bcebos.com/paddlex/imgs/demo_image/vehicle_certificate-1.png",

key_list=["驾驶室åä¹äººæ°"],

mllm_chat_bot_config=mllm_chat_bot_config,

)

mllm_predict_info = mllm_predict_res["mllm_res"]

visual_info_list = []

for res in visual_predict_res:

visual_info_list.append(res["visual_info"])

layout_parsing_result = res["layout_parsing_result"]

vector_info = pipeline.build_vector(

visual_info_list, flag_save_bytes_vector=True, retriever_config=retriever_config

)

chat_result = pipeline.chat(

key_list=["驾驶室åä¹äººæ°"],

visual_info=visual_info_list,

vector_info=vector_info,

mllm_predict_info=mllm_predict_info,

chat_bot_config=chat_bot_config,

retriever_config=retriever_config,

)

print(chat_result)

5. Chinese Heterogeneous AI Accelerators

𧩠More Features

- Convert models to ONNX format: Obtaining ONNX Models.

- Accelerate inference using engines like OpenVINO, ONNX Runtime, TensorRT, or perform inference using ONNX format models: High-Performance Inference.

- Accelerate inference using multi-GPU and multi-process: Parallel Inference for Pipelines.

- Integrate PaddleOCR into applications written in C++, C#, Java, etc.: Serving.

â°ï¸ Advanced Tutorials

ð Quick Overview of Execution Results

⨠Stay Tuned

â Star this repository to keep up with exciting updates and new releases, including powerful OCR and document parsing capabilities! â

ð©âð©âð§âð¦ Community

| PaddlePaddle WeChat official account | Join the tech discussion group |

|---|---|

|  |

ð Awesome Projects Leveraging PaddleOCR

PaddleOCR wouldn't be where it is today without its incredible community! ð A massive thank you to all our longtime partners, new collaborators, and everyone who's poured their passion into PaddleOCR â whether we've named you or not. Your support fuels our fire!

| Project Name | Description |

|---|---|

RAGFlow  | RAG engine based on deep document understanding. |

MinerU  | Multi-type Document to Markdown Conversion Tool |

Umi-OCR  | Free, Open-source, Batch Offline OCR Software. |

OmniParser | OmniParser: Screen Parsing tool for Pure Vision Based GUI Agent. |

QAnything | Question and Answer based on Anything. |

PDF-Extract-Kit  | A powerful open-source toolkit designed to efficiently extract high-quality content from complex and diverse PDF documents. |

Dango-Translator | Recognize text on the screen, translate it and show the translation results in real time. |

| Learn more projects | More projects based on PaddleOCR |

ð©âð©âð§âð¦ Contributors

ð Star

ð License

This project is released under the Apache 2.0 license.

ð Citation

@misc{cui2025paddleocr30technicalreport,

title={PaddleOCR 3.0 Technical Report},

author={Cheng Cui and Ting Sun and Manhui Lin and Tingquan Gao and Yubo Zhang and Jiaxuan Liu and Xueqing Wang and Zelun Zhang and Changda Zhou and Hongen Liu and Yue Zhang and Wenyu Lv and Kui Huang and Yichao Zhang and Jing Zhang and Jun Zhang and Yi Liu and Dianhai Yu and Yanjun Ma},

year={2025},

eprint={2507.05595},

archivePrefix={arXiv},

primaryClass={cs.CV},

url={https://arxiv.org/abs/2507.05595},

}

Top Related Projects

Large-scale Self-supervised Pre-training Across Tasks, Languages, and Modalities

Tesseract Open Source OCR Engine (main repository)

Ready-to-use OCR with 80+ supported languages and all popular writing scripts including Latin, Chinese, Arabic, Devanagari, Cyrillic and etc.

Text recognition (optical character recognition) with deep learning methods, ICCV 2019

Mask R-CNN for object detection and instance segmentation on Keras and TensorFlow

Convert  designs to code with AI

designs to code with AI

Introducing Visual Copilot: A new AI model to turn Figma designs to high quality code using your components.

Try Visual Copilot