Top Related Projects

- Badger: Fast key-value DB in Go.

- goleveldb: LevelDB key/value database in Go.

- Pebble: RocksDB/LevelDB inspired key-value database in Go

- bbolt: An embedded key/value database for Go.

- BuntDB: an embeddable, in-memory key/value database for Go with custom indexing and geospatial support

- Ristretto: A high performance memory-bound Go cache

Quick Overview

Pogreb is a fast, embedded key-value store for read-heavy workloads written in Go. It's designed to be simple, efficient, and suitable for projects that require quick access to large amounts of data stored on disk.

Pros

- High performance for read operations

- Supports concurrent reads and writes

- Simple API, easy to integrate into Go projects

- Suitable for handling large datasets

Cons

- Optimized for lookups and infrequent bulk inserts, so write-heavy workloads are a poor fit

- Limited feature set compared to full-fledged databases

- Not suitable for complex querying, range scans, or relational data

- Lacks built-in support for data compression

Code Examples

- Opening and closing a database:

db, err := pogreb.Open("my_database", nil)

if err != nil {

log.Fatal(err)

}

defer db.Close()

- Writing key-value pairs:

err := db.Put([]byte("key"), []byte("value"))

if err != nil {

log.Fatal(err)

}

- Reading values:

value, err := db.Get([]byte("key"))

if err != nil {

log.Fatal(err)

}

fmt.Println(string(value))

- Iterating over key-value pairs:

it := db.Items()

for {

key, value, err := it.Next()

if err == pogreb.ErrIterationDone {

break

}

if err != nil {

log.Fatal(err)

}

fmt.Printf("Key: %s, Value: %s\n", string(key), string(value))

}

Getting Started

To use Pogreb in your Go project, follow these steps:

- Install Pogreb:

go get -u github.com/akrylysov/pogreb

- Import the package in your Go code:

import "github.com/akrylysov/pogreb"

- Open a database and start using it:

db, err := pogreb.Open("my_database", nil)

if err != nil {

log.Fatal(err)

}

defer db.Close()

// Use db.Put(), db.Get(), and other methods to interact with the database

Remember to handle errors appropriately and close the database when you're done using it.

Competitor Comparisons

Fast key-value DB in Go.

Pros of Badger

- More feature-rich, including support for transactions, key-ordered iteration, and merge operations

- Better suited for larger datasets and high-performance scenarios

- Active development and maintenance with a larger community

Cons of Badger

- Higher memory usage and potentially slower for small datasets

- More complex API and configuration options

- Larger codebase and dependencies

Code Comparison

Pogreb:

db, _ := pogreb.Open("test.db", nil)

defer db.Close()

db.Put([]byte("key"), []byte("value"))

value, _ := db.Get([]byte("key"))

Badger:

opts := badger.DefaultOptions("test.db")

db, _ := badger.Open(opts)

defer db.Close()

_ = db.Update(func(txn *badger.Txn) error {

return txn.Set([]byte("key"), []byte("value"))

})

Summary

Pogreb is a simpler, lightweight key-value store built for read-heavy workloads and basic operations. It offers a straightforward API and a lower memory footprint. Badger is a more feature-rich database that provides transactions and merge operations, at the cost of increased complexity and resource usage. Choose Pogreb when fast point lookups and simplicity matter most, and Badger for applications that need transactional writes or other advanced functionality.

LevelDB key/value database in Go.

Pros of goleveldb

- More mature and widely used project with a larger community

- Supports more advanced features like snapshots and ordered range iteration

- Better documentation and examples available

Cons of goleveldb

- Higher memory usage compared to Pogreb

- Slower write performance, especially for small key-value pairs

- More complex API, which may be overkill for simple use cases

Code Comparison

Pogreb:

db, _ := pogreb.Open("example.db", nil)

defer db.Close()

db.Put([]byte("key"), []byte("value"))

value, _ := db.Get([]byte("key"))

goleveldb:

db, _ := leveldb.OpenFile("example.db", nil)

defer db.Close()

db.Put([]byte("key"), []byte("value"), nil)

value, _ := db.Get([]byte("key"), nil)

Both libraries offer similar basic functionality for key-value storage, but goleveldb provides more advanced features at the cost of increased complexity. Pogreb focuses on simplicity and performance for specific use cases, while goleveldb offers a more comprehensive solution for general-purpose key-value storage needs.

RocksDB/LevelDB inspired key-value database in Go

Pros of Pebble

- More feature-rich and optimized for high-performance database systems

- Better suited for large-scale distributed environments

- Actively maintained and backed by a commercial company (Cockroach Labs)

Cons of Pebble

- More complex and potentially overkill for simple key-value storage needs

- Larger codebase and potentially steeper learning curve

- May have higher resource requirements due to its advanced features

Code Comparison

Pogreb (simple key-value operations):

db, _ := pogreb.Open("example.db", nil)

defer db.Close()

db.Put([]byte("key"), []byte("value"))

value, _ := db.Get([]byte("key"))

Pebble (more advanced operations):

db, _ := pebble.Open("example.db", &pebble.Options{})

defer db.Close()

batch := db.NewBatch()

batch.Set([]byte("key"), []byte("value"), nil)

batch.Commit(nil)

value, closer, _ := db.Get([]byte("key"))

defer closer.Close()

Pebble offers more advanced features like batching and fine-grained control, while Pogreb provides a simpler interface for basic key-value operations. Pebble is better suited for complex database systems, while Pogreb is ideal for lightweight, embedded key-value storage needs.

An embedded key/value database for Go.

Pros of bbolt

- More mature and widely adopted project with a larger community

- Supports nested buckets for better data organization

- Offers ACID transactions with full support for read-write transactions

Cons of bbolt

- Generally slower performance compared to Pogreb

- Higher memory usage, especially for large datasets

- Less optimized for SSDs and modern hardware

Code Comparison

bbolt:

db, _ := bolt.Open("my.db", 0600, nil)

defer db.Close()

db.Update(func(tx *bolt.Tx) error {

b, err := tx.CreateBucketIfNotExists([]byte("MyBucket"))

if err != nil {

return err

}

return b.Put([]byte("answer"), []byte("42"))

})

Pogreb:

db, _ := pogreb.Open("my.db", nil)

defer db.Close()

db.Put([]byte("answer"), []byte("42"))

Both bbolt and Pogreb are key-value stores written in Go, but they have different design goals and trade-offs. bbolt offers more features and stronger consistency guarantees, while Pogreb focuses on simplicity and performance. The choice between them depends on specific project requirements, such as data consistency needs, performance expectations, and the complexity of data structures to be stored.

BuntDB is an embeddable, in-memory key/value database for Go with custom indexing and geospatial support

Pros of Buntdb

- Supports spatial indexing and geospatial operations

- Offers transaction support with ACID compliance

- Supports custom indexes, including indexes on fields inside JSON documents

Cons of Buntdb

- Higher memory usage compared to Pogreb

- Slower write performance for large datasets

- Less suitable for embedded systems due to resource requirements

Code Comparison

Pogreb:

db, _ := pogreb.Open("example.db", nil)

defer db.Close()

db.Put([]byte("key"), []byte("value"))

value, _ := db.Get([]byte("key"))

Buntdb:

db, _ := buntdb.Open("example.db")

defer db.Close()

db.Update(func(tx *buntdb.Tx) error {

_, _, err := tx.Set("key", "value", nil)

return err

})

Both Pogreb and Buntdb are key-value stores written in Go, but they have different focuses. Pogreb is designed for high performance and low memory usage, making it suitable for embedded systems and applications with limited resources. Buntdb, on the other hand, offers more features like spatial indexing and transaction support, making it a good choice for applications that require these advanced functionalities.

Pogreb's simpler API makes it easier to use for basic key-value operations, while Buntdb's transaction-based API provides more flexibility for complex operations. The choice between the two depends on the specific requirements of your project, such as performance needs, memory constraints, and required features.

A high performance memory-bound Go cache

Pros of Ristretto

- Designed for high concurrency with a focus on performance

- Supports automatic cache eviction based on cost and time-to-live (TTL)

- Provides additional features like metrics and ring buffer

Cons of Ristretto

- More complex API compared to Pogreb's simplicity

- Higher memory overhead due to additional features

- Requires more configuration to optimize performance

Code Comparison

Pogreb:

db, _ := pogreb.Open("test.db", nil)

defer db.Close()

db.Put([]byte("key"), []byte("value"))

value, _ := db.Get([]byte("key"))

Ristretto:

cache, _ := ristretto.NewCache(&ristretto.Config{

NumCounters: 1e7, // number of keys to track frequency of (10M).

MaxCost: 1 << 30, // maximum cost of cache (1GB).

BufferItems: 64, // number of keys per Get buffer.

})

cache.Set("key", "value", 1)

cache.Wait() // Set is buffered; wait for it to be applied before reading

value, _ := cache.Get("key")

Summary

Pogreb is a simple key-value store focused on disk-based storage, while Ristretto is an advanced in-memory cache with more features and configuration options. Pogreb offers simplicity and persistence, whereas Ristretto provides high-performance caching with automatic eviction policies. Choose Pogreb for straightforward disk-based storage or Ristretto for complex in-memory caching needs.

README

Pogreb

Pogreb is an embedded key-value store for read-heavy workloads written in Go.

Key characteristics

- 100% Go.

- Optimized for fast random lookups and infrequent bulk inserts.

- Can store larger-than-memory data sets.

- Low memory usage.

- All DB methods are safe for concurrent use by multiple goroutines.
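The last point means a single handle can be shared between goroutines without extra locking by the caller. The sketch below shows that calling pattern only. It is stdlib-only: `kv` is a hypothetical interface mirroring the `Put` and `Get` signatures used elsewhere in this README, and `lockedMap` is an in-memory stand-in introduced so the example runs on its own, not Pogreb's implementation.

```go
package main

import (
	"fmt"
	"sync"
)

// kv is the subset of the store API used here (hypothetical name).
// It mirrors the Put and Get calls shown in this README.
type kv interface {
	Put(key, value []byte) error
	Get(key []byte) ([]byte, error)
}

// lockedMap is a goroutine-safe in-memory stand-in for a real store.
type lockedMap struct {
	mu sync.RWMutex
	m  map[string][]byte
}

func (l *lockedMap) Put(key, value []byte) error {
	l.mu.Lock()
	defer l.mu.Unlock()
	l.m[string(key)] = value
	return nil
}

func (l *lockedMap) Get(key []byte) ([]byte, error) {
	l.mu.RLock()
	defer l.mu.RUnlock()
	return l.m[string(key)], nil
}

// fill writes n keys from n goroutines that share one handle.
// It returns the first reported error, or nil if every Put succeeded.
func fill(db kv, n int) error {
	var wg sync.WaitGroup
	errs := make(chan error, n)
	for i := 0; i < n; i++ {
		wg.Add(1)
		go func(i int) {
			defer wg.Done()
			key := []byte(fmt.Sprintf("key-%d", i))
			if err := db.Put(key, []byte("value")); err != nil {
				errs <- err
			}
		}(i)
	}
	wg.Wait()
	close(errs)
	return <-errs // a closed, empty channel yields nil
}

func main() {
	db := &lockedMap{m: make(map[string][]byte)}
	if err := fill(db, 8); err != nil {
		fmt.Println("write failed:", err)
		return
	}
	val, _ := db.Get([]byte("key-3"))
	fmt.Printf("%s\n", val)
}
```

Because `fill` depends only on the interface, a real database handle with matching methods could be passed in place of the stand-in.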

Installation

$ go get -u github.com/akrylysov/pogreb

Usage

Opening a database

To open or create a new database, use the pogreb.Open() function:

package main

import (

"log"

"github.com/akrylysov/pogreb"

)

func main() {

db, err := pogreb.Open("pogreb.test", nil)

if err != nil {

log.Fatal(err)

}

defer db.Close()

}

Writing to a database

Use the DB.Put() function to insert a new key-value pair:

err := db.Put([]byte("testKey"), []byte("testValue"))

if err != nil {

log.Fatal(err)

}

Reading from a database

To retrieve the inserted value, use the DB.Get() function:

val, err := db.Get([]byte("testKey"))

if err != nil {

log.Fatal(err)

}

log.Printf("%s", val)
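The snippet above does not distinguish a missing key from a stored value. A minimal sketch of one way to do that follows, assuming `Get` returns a nil value and a nil error when the key does not exist; check the package documentation to confirm this before relying on it. `getter`, `lookup`, and `memStore` are hypothetical names introduced for illustration, and `memStore` is an in-memory stand-in so the example runs without a database file.

```go
package main

import "fmt"

// getter is the one method this sketch needs (hypothetical name).
// It has the same shape as the Get call shown above.
type getter interface {
	Get(key []byte) ([]byte, error)
}

// lookup reports whether key is present.
// Assumption: the store returns (nil, nil) for a key that does not exist.
func lookup(db getter, key string) (value string, found bool, err error) {
	val, err := db.Get([]byte(key))
	if err != nil {
		return "", false, err
	}
	if val == nil {
		return "", false, nil
	}
	return string(val), true, nil
}

// memStore is an in-memory stand-in used only to run the sketch.
type memStore map[string][]byte

// Get returns nil for keys that are not in the map.
func (m memStore) Get(key []byte) ([]byte, error) {
	return m[string(key)], nil
}

func main() {
	db := memStore{"testKey": []byte("testValue")}
	for _, k := range []string{"testKey", "missing"} {
		v, found, err := lookup(db, k)
		fmt.Printf("key=%q value=%q found=%v err=%v\n", k, v, found, err)
	}
}
```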

Deleting from a database

Use the DB.Delete() function to delete a key-value pair:

err := db.Delete([]byte("testKey"))

if err != nil {

log.Fatal(err)

}

Iterating over items

To iterate over items, use ItemIterator returned by DB.Items():

it := db.Items()

for {

key, val, err := it.Next()

if err == pogreb.ErrIterationDone {

break

}

if err != nil {

log.Fatal(err)

}

log.Printf("%s %s", key, val)

}

Performance

The benchmarking code can be found in the pogreb-bench repository.

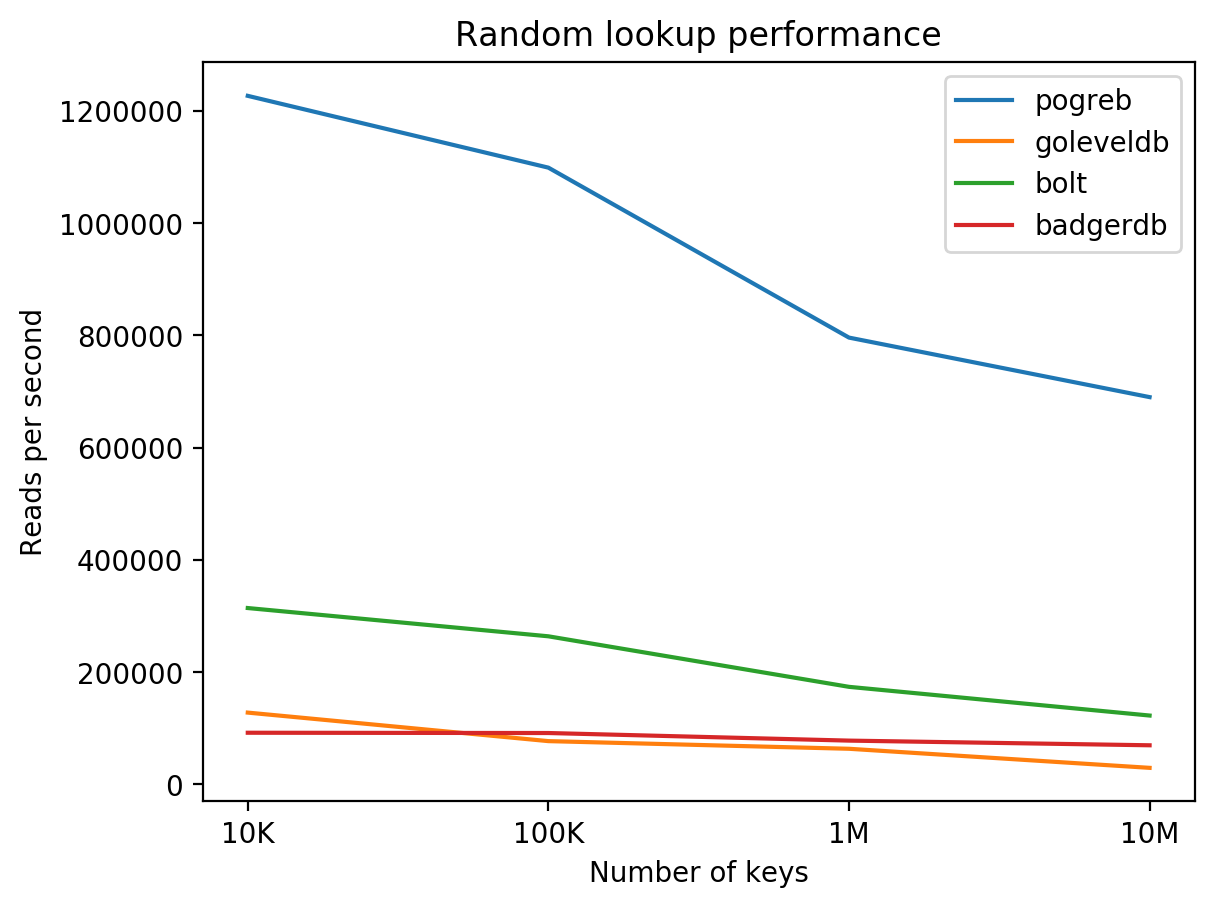

Results of read performance benchmark of pogreb, goleveldb, bolt and badgerdb on DigitalOcean 8 CPUs / 16 GB RAM / 160 GB SSD + Ubuntu 16.04.3 (higher is better):

Internals

Limitations

The design choices made to optimize for point lookups bring limitations for other potential use-cases. For example, using a hash table for indexing makes range scans impossible. Additionally, having a single hash table shared across all WAL segments makes the recovery process require rebuilding the entire index, which may be impractical for large databases.
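Both limitations can be seen in a toy model. The sketch below is not Pogreb's on-disk format. It assumes a simplified design, an in-memory append-only log plus a Go map from key to log offset, and every name in it is hypothetical. A point lookup is one hash probe, a range query has to visit every key because the map keeps no order, and recovery has to replay the whole log to rebuild the index.

```go
package main

import (
	"fmt"
	"sort"
)

// record is one entry in a toy append-only log.
type record struct {
	key, value string
}

// toyStore pairs an append-only log with a hash index from key to log offset.
type toyStore struct {
	log   []record
	index map[string]int
}

func newToyStore() *toyStore {
	return &toyStore{index: make(map[string]int)}
}

// put appends a record and points the index at its offset.
func (s *toyStore) put(key, value string) {
	s.log = append(s.log, record{key, value})
	s.index[key] = len(s.log) - 1
}

// get is one hash lookup followed by one log read.
func (s *toyStore) get(key string) (string, bool) {
	off, ok := s.index[key]
	if !ok {
		return "", false
	}
	return s.log[off].value, true
}

// rangeScan returns keys in [from, to). It must examine every key,
// because a hash index stores no ordering to seek into.
func (s *toyStore) rangeScan(from, to string) []string {
	var keys []string
	for k := range s.index {
		if k >= from && k < to {
			keys = append(keys, k)
		}
	}
	sort.Strings(keys)
	return keys
}

// rebuildIndex discards the index and replays the full log.
// It returns the number of records read, which grows with the database.
func (s *toyStore) rebuildIndex() int {
	s.index = make(map[string]int, len(s.log))
	for off, r := range s.log {
		s.index[r.key] = off // later records overwrite earlier ones
	}
	return len(s.log)
}

func main() {
	s := newToyStore()
	for _, k := range []string{"pear", "apple", "fig", "banana"} {
		s.put(k, "v-"+k)
	}
	v, _ := s.get("fig")
	fmt.Println("point lookup:", v)
	fmt.Println("range scan over all keys:", s.rangeScan("a", "c"))
	fmt.Println("records replayed on rebuild:", s.rebuildIndex())
}
```

An index that keeps keys sorted, such as a B-tree or an LSM tree, could seek directly to the start of a range; the hash index gives up that ability in exchange for constant-time point lookups.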
