DeepMoji

State-of-the-art deep learning model for analyzing sentiment, emotion, sarcasm etc.

Top Related Projects

🤗 Transformers: the model-definition framework for state-of-the-art machine learning models in text, vision, audio, and multimodal models, for both inference and training.

fastText: Library for fast text representation and classification.

💫 spaCy: Industrial-strength Natural Language Processing (NLP) in Python

Gensim: Topic Modelling for Humans

TensorFlow: An Open Source Machine Learning Framework for Everyone

PyTorch: Tensors and Dynamic neural networks in Python with strong GPU acceleration

Quick Overview

DeepMoji is a deep learning model for understanding emoji usage and emotional content in text. It was trained on 1.2 billion tweets containing emojis and can predict emoji usage, analyze sentiment and emotion, and detect sarcasm. The project includes pre-trained models and tools for using DeepMoji in various natural language processing tasks.

Pros

- Powerful emotion and sentiment analysis capabilities

- Pre-trained models available for immediate use

- Can be fine-tuned for specific tasks or domains

- Includes visualization tools for emoji predictions

Cons

- May have biases based on the Twitter dataset used for training

- Limited to English language text

- Requires deep learning expertise for advanced usage or modifications

- Emoji predictions may not always align with human interpretations

Code Examples

- Predicting emojis for a given text:

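import json
from deepmoji.sentence_tokenizer import SentenceTokenizer
from deepmoji.model_def import deepmoji_emojis
from deepmoji.global_variables import PRETRAINED_PATH, VOCAB_PATH

maxlen = 30  # maximum number of tokens per sentence
# SentenceTokenizer takes the vocabulary dictionary itself, not its file path
with open(VOCAB_PATH, 'r') as f:
    vocabulary = json.load(f)
st = SentenceTokenizer(vocabulary, maxlen)
model = deepmoji_emojis(maxlen, PRETRAINED_PATH)

text = "I love machine learning!"
tokenized, _, _ = st.tokenize_sentences([text])
prob = model.predict(tokenized)

# prob[0] holds one probability per emoji class; print the indices of the top 5.
# Mapping an index to an emoji character needs the model's emoji list (not defined here).
print("Top 5 emoji indices for '{}':".format(text))
for i in prob[0].argsort()[-5:][::-1]:
    print("{} - {:.4f}".format(i, prob[0][i]))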
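The loop above ranks every emoji class with argsort and keeps the last five. If your predictions are already plain Python lists (for example, after prob[0].tolist()), you can make the same selection without NumPy. The following is a minimal sketch. top_k_indices is a hypothetical helper, not part of DeepMoji, and the probabilities are made up:

```python
def top_k_indices(probs, k=5):
    """Return the indices of the k largest values in probs, highest first."""
    # Sort class indices by their probability, largest first, and keep k of them.
    # Python's sort is stable, so tied probabilities keep their original order.
    return sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]


# Made-up probabilities for six emoji classes.
probs = [0.05, 0.40, 0.10, 0.25, 0.15, 0.05]
print(top_k_indices(probs, k=3))  # → [1, 3, 4]
```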

- Extracting features from text:

from deepmoji.model_def import deepmoji_feature_encoding
from deepmoji.global_variables import PRETRAINED_PATH

# Reuses maxlen and the tokenizer st from the previous example
model = deepmoji_feature_encoding(maxlen, PRETRAINED_PATH)
text = "This project is amazing!"
tokenized, _, _ = st.tokenize_sentences([text])
encoding = model.predict(tokenized)
print("Feature encoding for '{}':".format(text))
print(encoding)  # one feature vector per input sentence
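Each row of the encoding is a fixed-length feature vector for one sentence. A common way to compare two texts with such vectors is cosine similarity. This is a general technique, not a DeepMoji function. The sketch below assumes each encoding row has been converted to a plain Python list (for example, with encoding[0].tolist()), and it uses short made-up vectors in place of real encodings:

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length feature vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cannot compare a zero vector")
    return dot / (norm_a * norm_b)


# Made-up 4-dimensional vectors standing in for real sentence encodings.
happy = [0.9, 0.1, 0.0, 0.3]
also_happy = [0.8, 0.2, 0.1, 0.3]
sad = [0.0, 0.1, 0.9, 0.0]
print(cosine_similarity(happy, also_happy))
print(cosine_similarity(happy, sad))
```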

- Fine-tuning the model for sentiment analysis:

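import json
from deepmoji.finetuning import load_benchmark
from deepmoji.class_avg_finetuning import class_avg_finetune
from deepmoji.model_def import deepmoji_transfer
from deepmoji.global_variables import PRETRAINED_PATH, VOCAB_PATH

with open(VOCAB_PATH, 'r') as f:
    vocab = json.load(f)
data = load_benchmark('data/SE0714/raw.pickle', vocab)  # path relative to the repository root
model = deepmoji_transfer(2, data['maxlen'], PRETRAINED_PATH)  # 2 outputs: each class is trained separately
model, f1 = class_avg_finetune(model, data['texts'], data['labels'], 3, data['batch_size'], method='last')  # SE0714 has 3 classes
print("Fine-tuned model F1 score: {:.4f}".format(f1))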
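class_avg_finetune reports a class-average F1 score. To make the metric concrete, the sketch below computes the unweighted mean of per-class F1 scores (each class scored against all the others) from plain label lists. It illustrates the metric only and is not DeepMoji's implementation. The labels are made up:

```python
def f1_for_class(y_true, y_pred, cls):
    """F1 score for one class, treating it as positive and all others as negative."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == cls and p == cls)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != cls and p == cls)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == cls and p != cls)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def class_average_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 over the classes present in y_true."""
    classes = sorted(set(y_true))
    return sum(f1_for_class(y_true, y_pred, c) for c in classes) / len(classes)


y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]
print("Class-average F1: {:.4f}".format(class_average_f1(y_true, y_pred)))  # → Class-average F1: 0.6556
```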

Getting Started

- Install DeepMoji:

git clone https://github.com/bfelbo/DeepMoji.git
cd DeepMoji
pip install -e .

- Download the pre-trained weights (the vocabulary already ships in model/vocabulary.json):

python scripts/download_weights.py

- Use DeepMoji in your project:

import json
from deepmoji.sentence_tokenizer import SentenceTokenizer
from deepmoji.model_def import deepmoji_emojis
from deepmoji.global_variables import PRETRAINED_PATH, VOCAB_PATH

maxlen = 30
with open(VOCAB_PATH, 'r') as f:
    st = SentenceTokenizer(json.load(f), maxlen)
model = deepmoji_emojis(maxlen, PRETRAINED_PATH)

# Your code here

Competitor Comparisons

🤗 Transformers: the model-definition framework for state-of-the-art machine learning models in text, vision, audio, and multimodal models, for both inference and training.

Pros of transformers

- Broader scope: Supports a wide range of NLP tasks and models

- Active development: Regularly updated with new models and features

- Extensive documentation and community support

Cons of transformers

- Higher complexity: Steeper learning curve for beginners

- Resource-intensive: Requires more computational power for many models

Code Comparison

DeepMoji:

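import json
from deepmoji.sentence_tokenizer import SentenceTokenizer
from deepmoji.model_def import deepmoji_emojis
from deepmoji.global_variables import PRETRAINED_PATH, VOCAB_PATH

maxlen = 30
with open(VOCAB_PATH, 'r') as f:
    tokenizer = SentenceTokenizer(json.load(f), maxlen)
model = deepmoji_emojis(maxlen, PRETRAINED_PATH)
text = "I love machine learning!"
tokens, _, _ = tokenizer.tokenize_sentences([text])
prob = model.predict(tokens)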

transformers:

from transformers import pipeline

text = "I love machine learning!"
classifier = pipeline("sentiment-analysis")
result = classifier(text)[0]
label = result['label']
score = result['score']

Summary

transformers offers a more versatile and actively maintained toolkit for various NLP tasks, while DeepMoji focuses specifically on emoji prediction and sentiment analysis. transformers provides a higher-level API, making it easier to use out-of-the-box for many tasks, but may require more resources. DeepMoji offers a more specialized approach for emoji-related tasks with potentially lower resource requirements.

fastText: Library for fast text representation and classification.

Pros of fastText

- Efficient for large-scale text classification and word representation learning

- Supports multiple languages and can handle out-of-vocabulary words

- Lightweight and fast, suitable for production environments

Cons of fastText

- Less specialized for emotion analysis compared to DeepMoji

- May require more data preprocessing for specific tasks like sentiment analysis

- Limited in capturing complex contextual information

Code Comparison

DeepMoji:

import json
from deepmoji.sentence_tokenizer import SentenceTokenizer
from deepmoji.model_def import deepmoji_emojis
from deepmoji.global_variables import PRETRAINED_PATH, VOCAB_PATH

maxlen = 30
with open(VOCAB_PATH, 'r') as f:
    tokenizer = SentenceTokenizer(json.load(f), maxlen)
model = deepmoji_emojis(maxlen, PRETRAINED_PATH)

fastText:

import fasttext

model = fasttext.train_supervised("train.txt")

result = model.predict("example text")

DeepMoji focuses on emoji prediction and sentiment analysis, while fastText is more versatile for general text classification tasks. DeepMoji requires specific pretrained models and tokenizers, whereas fastText has a simpler API for training and prediction. fastText is more suitable for large-scale applications and multi-language support, while DeepMoji excels in emotion-related tasks with its specialized architecture.
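The fastText snippet above trains on train.txt but does not show what that file contains. In supervised mode, fastText reads one example per line. Each line starts with a label that carries the default __label__ prefix, followed by the text. The sketch below writes such a file from (text, label) pairs. The helper names, the file name, and the labels are placeholders:

```python
LABEL_PREFIX = "__label__"  # fastText's default label prefix


def to_fasttext_line(text, label):
    """Format one example as '__label__<label> <text>' on a single line."""
    if any(ch.isspace() for ch in label):
        raise ValueError("labels must not contain whitespace")
    # One example per line, so collapse newlines and repeated spaces in the text.
    clean_text = " ".join(text.split())
    return LABEL_PREFIX + label + " " + clean_text


def write_fasttext_file(examples, path):
    """Write (text, label) pairs to path in fastText's supervised format."""
    with open(path, "w", encoding="utf-8") as f:
        for text, label in examples:
            f.write(to_fasttext_line(text, label) + "\n")


if __name__ == "__main__":
    examples = [("I love machine learning!", "positive"),
                ("This is\nterrible.", "negative")]
    write_fasttext_file(examples, "train.txt")
```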

💫 spaCy: Industrial-strength Natural Language Processing (NLP) in Python

Pros of spaCy

- Comprehensive NLP library with a wide range of functionalities

- Highly optimized for performance and efficiency

- Large community support and regular updates

Cons of spaCy

- Steeper learning curve due to its extensive features

- Larger memory footprint compared to more specialized libraries

Code Comparison

spaCy:

import spacy

nlp = spacy.load("en_core_web_sm")
doc = nlp("This is a sentence.")
for token in doc:
    print(token.text, token.pos_, token.dep_)

DeepMoji:

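import json
from deepmoji.sentence_tokenizer import SentenceTokenizer
from deepmoji.model_def import deepmoji_emojis
from deepmoji.global_variables import PRETRAINED_PATH, VOCAB_PATH

maxlen = 30
with open(VOCAB_PATH, 'r') as f:
    tokenizer = SentenceTokenizer(json.load(f), maxlen)
model = deepmoji_emojis(maxlen, PRETRAINED_PATH)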

Key Differences

- spaCy is a general-purpose NLP library, while DeepMoji focuses on emoji prediction and sentiment analysis

- spaCy offers more comprehensive language processing capabilities, whereas DeepMoji specializes in understanding emotional content

- spaCy has a larger user base and more frequent updates, while DeepMoji is more specialized but less actively maintained

Use Cases

- Choose spaCy for general NLP tasks, including tokenization, part-of-speech tagging, and named entity recognition

- Opt for DeepMoji when working specifically with emoji prediction or sentiment analysis in social media contexts

Gensim: Topic Modelling for Humans

Pros of Gensim

- Broader scope for general-purpose text processing and topic modeling

- More extensive documentation and community support

- Actively maintained with frequent updates

Cons of Gensim

- Less specialized for emoji-related tasks

- May require more setup and configuration for specific use cases

- Potentially steeper learning curve for beginners

Code Comparison

DeepMoji example:

import json
from deepmoji.sentence_tokenizer import SentenceTokenizer
from deepmoji.model_def import deepmoji_emojis
from deepmoji.global_variables import PRETRAINED_PATH, VOCAB_PATH

maxlen = 30
with open(VOCAB_PATH, 'r') as f:
    tokenizer = SentenceTokenizer(json.load(f), maxlen)
model = deepmoji_emojis(maxlen, PRETRAINED_PATH)
tokens, _, _ = tokenizer.tokenize_sentences(["Hello world!"])
prob = model.predict(tokens)

Gensim example:

from gensim.models import Word2Vec
from gensim.utils import simple_preprocess

sentences = [["Hello", "world"], ["Another", "example"]]
model = Word2Vec(sentences, min_count=1)
vector = model.wv["Hello"]
similar_words = model.wv.most_similar("Hello")

Both libraries offer powerful text processing capabilities, but DeepMoji focuses on emoji prediction and sentiment analysis, while Gensim provides a broader range of natural language processing tools. The choice between them depends on the specific requirements of your project.

TensorFlow: An Open Source Machine Learning Framework for Everyone

Pros of TensorFlow

- Comprehensive machine learning framework with broad capabilities

- Large community and extensive documentation

- Supports multiple programming languages and platforms

Cons of TensorFlow

- Steeper learning curve for beginners

- Can be resource-intensive for smaller projects

- More complex setup and configuration

Code Comparison

DeepMoji:

import json
from deepmoji.sentence_tokenizer import SentenceTokenizer
from deepmoji.model_def import deepmoji_emojis
from deepmoji.global_variables import PRETRAINED_PATH, VOCAB_PATH

maxlen = 30
with open(VOCAB_PATH, 'r') as f:
    tokenizer = SentenceTokenizer(json.load(f), maxlen)
model = deepmoji_emojis(maxlen, PRETRAINED_PATH)

TensorFlow:

import tensorflow as tf

model = tf.keras.Sequential([
    tf.keras.layers.Dense(64, activation='relu'),
    tf.keras.layers.Dense(10, activation='softmax')
])
model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])

DeepMoji is focused on emoji prediction and sentiment analysis, while TensorFlow is a general-purpose machine learning framework. DeepMoji itself is built on Keras and can run with TensorFlow as its backend. DeepMoji offers a simpler API for its specific use case, whereas TensorFlow provides more flexibility but requires more setup. The TensorFlow example defines and compiles a basic neural network from scratch, while the DeepMoji example loads a pre-trained model for emoji prediction.

PyTorch: Tensors and Dynamic neural networks in Python with strong GPU acceleration

Pros of PyTorch

- Broader scope and functionality as a general-purpose deep learning framework

- Larger community and more extensive documentation

- More frequent updates and active development

Cons of PyTorch

- Steeper learning curve for beginners

- Larger codebase and installation size

- Not specifically optimized for emoji prediction or sentiment analysis

Code Comparison

DeepMoji:

import json
from deepmoji.sentence_tokenizer import SentenceTokenizer
from deepmoji.model_def import deepmoji_emojis
from deepmoji.global_variables import PRETRAINED_PATH, VOCAB_PATH

maxlen = 30
with open(VOCAB_PATH, 'r') as f:
    tokenizer = SentenceTokenizer(json.load(f), maxlen)
model = deepmoji_emojis(maxlen, PRETRAINED_PATH)

PyTorch:

import torch
import torch.nn as nn
import torch.optim as optim

input_size, hidden_size, output_size = 300, 64, 2  # example layer sizes
model = nn.Sequential(
    nn.Linear(input_size, hidden_size),
    nn.ReLU(),
    nn.Linear(hidden_size, output_size)
)
optimizer = optim.Adam(model.parameters(), lr=0.001)

DeepMoji is specifically designed for emoji prediction and sentiment analysis, while PyTorch is a more versatile deep learning framework. DeepMoji provides pre-trained models and a simpler API for its specific use case, whereas PyTorch offers greater flexibility for building custom neural network architectures across various domains. A PyTorch port of DeepMoji, torchMoji, is also available from HuggingFace.

README

------ Update September 2023 ------

The online demo is no longer available as it's not possible for us to renew the certificate. The code in this repo still works, but you might have to make some changes for it to work in Python 3 (see the open PRs). You can also check out the PyTorch version of this algorithm called torchMoji made by HuggingFace.

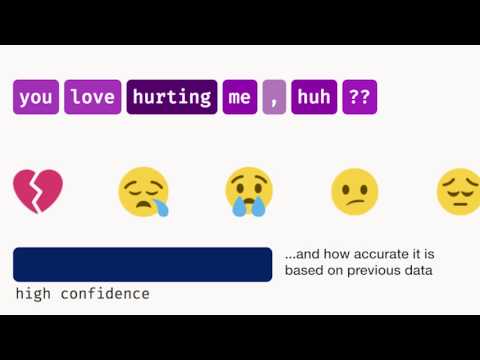

DeepMoji

(click image for video demonstration)

DeepMoji is a model trained on 1.2 billion tweets with emojis to understand how language is used to express emotions. Through transfer learning the model can obtain state-of-the-art performance on many emotion-related text modeling tasks.

See the paper or blog post for more details.
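The idea of training on tweets that contain emojis is that the emojis act as noisy labels for the rest of the text. The sketch below illustrates only that general idea. The emoji set, the helper name, and the rule for stripping emojis are hypothetical, and they do not reproduce the paper's preprocessing:

```python
# Hypothetical set of target emojis; each one's position is its class id.
TARGET_EMOJIS = ["😂", "😍", "😭", "🙏"]


def make_training_example(tweet):
    """Return (tweet text with target emojis removed, list of class ids found)."""
    labels = []
    text = tweet
    for class_id, emoji_char in enumerate(TARGET_EMOJIS):
        if emoji_char in text:
            labels.append(class_id)
            text = text.replace(emoji_char, "")
    # Collapse the whitespace left behind where emojis were removed.
    return " ".join(text.split()), labels


print(make_training_example("finally friday 😂😂 🙏"))  # → ('finally friday', [0, 3])
```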

Overview

- deepmoji/ contains all the underlying code needed to convert a dataset to our vocabulary and use our model.

- examples/ contains short code snippets showing how to convert a dataset to our vocabulary, load up the model and run it on that dataset.

- scripts/ contains code for processing and analysing datasets to reproduce results in the paper.

- model/ contains the pretrained model and vocabulary.

- data/ contains raw and processed datasets that we include in this repository for testing.

- tests/ contains unit tests for the codebase.

To get started, have a look inside the examples/ directory. See score_texts_emojis.py for how to use DeepMoji to extract emoji predictions, encode_texts.py for how to convert text into 2304-dimensional emotional feature vectors, and finetune_youtube_last.py for how to use the model for transfer learning on a new dataset.
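Converting a dataset to the vocabulary means mapping each token to an integer id and padding or truncating every sentence to a fixed length (the snippets above use maxlen = 30). The real SentenceTokenizer applies its own tokenization rules and special tokens. The sketch below is a simplified, hypothetical version that splits on whitespace, assumes id 0 for padding and id 1 for unknown words, and uses a toy vocabulary:

```python
PAD_ID = 0      # assumption: id reserved for padding
UNKNOWN_ID = 1  # assumption: id reserved for out-of-vocabulary tokens


def encode_sentence(sentence, vocabulary, maxlen=30):
    """Map a sentence to a fixed-length list of token ids (simplified)."""
    tokens = sentence.lower().split()
    # Look up each token, falling back to the unknown id, and truncate to maxlen.
    ids = [vocabulary.get(tok, UNKNOWN_ID) for tok in tokens][:maxlen]
    # Pad on the right so every sentence has exactly maxlen ids.
    return ids + [PAD_ID] * (maxlen - len(ids))


toy_vocabulary = {"i": 2, "love": 3, "machine": 4, "learning": 5}
print(encode_sentence("I love deep learning", toy_vocabulary, maxlen=6))  # → [2, 3, 1, 5, 0, 0]
```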

Please consider citing our paper if you use our model or code (see below for citation).

Frameworks

This code is based on Keras, which requires either Theano or TensorFlow as the backend. If you would rather use PyTorch, there is an implementation, torchMoji, which has kindly been provided by Thomas Wolf.

Installation

We assume that you're using Python 2.7 with pip installed. As a backend you need to install either Theano (version 0.9+) or Tensorflow (version 1.3+). Once that's done you need to run the following inside the root directory to install the remaining dependencies:

pip install -e .

This will install the following dependencies:

- Keras (the library was tested on version 2.0.5 but anything above 2.0.0 should work)

- scikit-learn

- h5py

- text-unidecode

- emoji

Ensure that Keras uses your chosen backend. You can find the instructions in the Keras backend documentation, under the Switching from one backend to another section.
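In Keras 2.x, the backend is read from the "backend" field of ~/.keras/keras.json. If the KERAS_BACKEND environment variable is set, it overrides that file. The sketch below follows the same lookup order, so you can check which backend an environment would select. It is a simplified illustration, not Keras's own code: it ignores other configuration details, and it assumes "tensorflow" as the default when nothing is configured:

```python
import json
import os


def resolve_keras_backend(config_path=None, environ=None):
    """Return the backend name a Keras 2.x setup would select (simplified)."""
    if environ is None:
        environ = os.environ
    if config_path is None:
        config_path = os.path.join(os.path.expanduser("~"), ".keras", "keras.json")
    backend = "tensorflow"  # assumed default when nothing is configured
    if os.path.exists(config_path):
        with open(config_path) as f:
            backend = json.load(f).get("backend", backend)
    # The KERAS_BACKEND environment variable takes precedence over keras.json.
    return environ.get("KERAS_BACKEND", backend)


if __name__ == "__main__":
    print(resolve_keras_backend())
```

For example, running KERAS_BACKEND=theano before your script's command selects Theano for that run without editing keras.json.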

Run the included script, which downloads the pretrained DeepMoji weights (~85MB) and places them in the model/ directory:

python scripts/download_weights.py

Testing

To run the tests, install nose. After installing, navigate to the tests/ directory and run:

nosetests -v

By default, this will also run finetuning tests. These tests train the model for one epoch and then check the resulting accuracy, which may take several minutes to finish. If you'd prefer to exclude those, run the following instead:

nosetests -v -a '!slow'

Disclaimer

This code has been tested to work with Python 2.7 on an Ubuntu 16.04 machine. It has not been optimized for efficiency, but should be fast enough for most purposes. We do not give any guarantees that there are no bugs, so use the code at your own risk!

Contributions

We welcome pull requests if you feel like something could be improved. You can also greatly help us by telling us how you felt when writing your most recent tweets. Just click here to contribute.

License

This code and the pretrained model are licensed under the MIT license.

Benchmark datasets

The benchmark datasets are uploaded to this repository for convenience only. They were not released by us, and we do not claim any rights to them. Use the datasets at your own responsibility and make sure you comply with the licenses they were released under. If you use any of the benchmark datasets, please consider citing the original authors.

Twitter dataset

We sadly cannot release our large Twitter dataset of tweets with emojis due to licensing restrictions.

Citation

@inproceedings{felbo2017,
  title={Using millions of emoji occurrences to learn any-domain representations for detecting sentiment, emotion and sarcasm},
  author={Felbo, Bjarke and Mislove, Alan and S{\o}gaard, Anders and Rahwan, Iyad and Lehmann, Sune},
  booktitle={Conference on Empirical Methods in Natural Language Processing (EMNLP)},
  year={2017}
}
