Top Related Projects

Mirror of Apache Kafka

Provides Familiar Spring Abstractions for Apache Kafka

Sarama is a Go library for Apache Kafka.

Dockerfile for Apache Kafka

Kafka (and Zookeeper) in Docker

Bitnami container images

Quick Overview

The confluentinc/examples repository is a comprehensive collection of demos and examples for Confluent Platform and Apache Kafka®. It provides hands-on resources for developers to learn and implement various Kafka-based streaming solutions, including connectors, KSQL, and stream processing applications.

Pros

- Extensive range of examples covering multiple Kafka use cases and integrations

- Well-documented and maintained by Confluent, ensuring up-to-date and reliable code

- Includes both simple and complex scenarios, catering to beginners and advanced users

- Provides Docker-based setups for easy local testing and experimentation

Cons

- Some examples may require a Confluent Platform license for full functionality

- The repository's size and breadth can be overwhelming for newcomers

- Certain examples might be specific to Confluent's commercial offerings, limiting their applicability in pure open-source Kafka environments

- Regular updates may lead to occasional breaking changes in example code

Code Examples

Here are a few code examples from the repository:

- Creating a Kafka topic using the Kafka CLI:

kafka-topics --create --topic my-topic --bootstrap-server localhost:9092 --partitions 3 --replication-factor 1

- Producing messages to a Kafka topic using a Java producer:

Properties props = new Properties();

props.put("bootstrap.servers", "localhost:9092");

props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");

props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");

Producer<String, String> producer = new KafkaProducer<>(props);

producer.send(new ProducerRecord<>("my-topic", "key", "value"));

producer.close();

- Consuming messages from a Kafka topic using a Python consumer:

from confluent_kafka import Consumer, KafkaError

c = Consumer({

    'bootstrap.servers': 'localhost:9092',

    'group.id': 'mygroup',

    'auto.offset.reset': 'earliest'

})

c.subscribe(['my-topic'])

while True:

    msg = c.poll(1.0)

    if msg is None:

        continue

    if msg.error():

        if msg.error().code() == KafkaError._PARTITION_EOF:

            continue

        else:

            print(msg.error())

            break

    print('Received message: {}'.format(msg.value().decode('utf-8')))

c.close()
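The loop above runs until an error occurs. A variant that stops after a fixed number of messages and always closes the consumer could look like the sketch below. It assumes an object with the same `subscribe`, `poll`, and `close` methods as `confluent_kafka.Consumer`; the helper name `consume_values` and its parameters are hypothetical and do not come from the repository.

```python
def consume_values(consumer, topic, max_messages, timeout=1.0):
    """Collect up to max_messages decoded values from topic, then close."""
    consumer.subscribe([topic])
    values = []
    try:
        while len(values) < max_messages:
            msg = consumer.poll(timeout)
            if msg is None:
                continue  # nothing arrived within the timeout; poll again
            if msg.error():
                # Surface broker/client errors to the caller instead of printing.
                raise RuntimeError(str(msg.error()))
            values.append(msg.value().decode("utf-8"))
    finally:
        consumer.close()  # runs on normal exit and when an exception is raised
    return values
```

Against a running broker, this would be called with the `Consumer` built above, for example `consume_values(c, 'my-topic', 10)`. Passing the consumer in as an argument also makes it possible to exercise the loop with a stub object when no broker is available.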

Getting Started

To get started with the examples:

- Clone the repository:

git clone https://github.com/confluentinc/examples.git

cd examples

- Choose an example directory and follow the README instructions.

- Most examples use Docker Compose for setup:

docker-compose up -d

- Run the example code or scripts as specified in the example's documentation.

- Clean up when finished:

docker-compose down
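When the steps above are driven from a script, the cleanup step is easy to skip if the example fails midway. The sketch below wraps the `docker-compose up -d` and `docker-compose down` commands from the steps above in a context manager. The name `compose_stack` and the injectable `run` parameter are hypothetical conveniences, not part of the repository.

```python
import subprocess
from contextlib import contextmanager


@contextmanager
def compose_stack(example_dir, run=subprocess.run):
    """Start the Compose services in example_dir and always tear them down."""
    run(["docker-compose", "up", "-d"], cwd=example_dir, check=True)
    try:
        yield
    finally:
        # Executed even if the body of the with-block raises.
        run(["docker-compose", "down"], cwd=example_dir, check=True)
```

Usage would be `with compose_stack("path/to/example"): ...`, with the example's own scripts run inside the block. The directory path is a placeholder for whichever example you chose.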

Competitor Comparisons

Mirror of Apache Kafka

Pros of Kafka

- Core Apache Kafka project with full source code and implementation details

- Extensive documentation and community support for the core Kafka platform

- Provides the foundational streaming platform that other tools build upon

Cons of Kafka

- Steeper learning curve for beginners compared to example-focused repositories

- Less focus on practical examples and quick-start guides

- Requires more setup and configuration to get running

Code Comparison

Kafka (producer example):

Properties props = new Properties();

props.put("bootstrap.servers", "localhost:9092");

props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");

props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");

Producer<String, String> producer = new KafkaProducer<>(props);

Examples (producer example):

String bootstrapServers = "localhost:9092";

Properties props = new Properties();

props.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, bootstrapServers);

props.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, StringSerializer.class.getName());

props.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG, StringSerializer.class.getName());

The Examples repository focuses on practical implementations and tutorials, making it easier for developers to get started with Kafka quickly. However, the Kafka repository provides a deeper understanding of the core platform and its internals, which is valuable for advanced users and contributors.

Provides Familiar Spring Abstractions for Apache Kafka

Pros of spring-kafka

- Tighter integration with Spring Framework, offering seamless development for Spring-based applications

- More comprehensive documentation and extensive community support

- Provides higher-level abstractions and utilities specific to Spring applications

Cons of spring-kafka

- Limited to Spring ecosystem, less flexible for non-Spring projects

- May have a steeper learning curve for developers not familiar with Spring

- Fewer examples of integration with other Kafka-related tools and services

Code Comparison

spring-kafka:

@KafkaListener(topics = "myTopic")

public void listen(String message) {

    System.out.println("Received: " + message);

}

examples:

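Properties props = new Properties();

props.put("bootstrap.servers", "localhost:9092");

props.put("group.id", "test-group");

// KafkaConsumer requires deserializers to be configured

props.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

props.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

KafkaConsumer<String, String> consumer = new KafkaConsumer<>(props);

consumer.subscribe(Arrays.asList("myTopic"));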

Summary

spring-kafka is ideal for Spring-based applications, offering tight integration and Spring-specific features. examples provides a broader range of Kafka usage examples across different languages and frameworks, making it more versatile for diverse projects. The choice between them depends on your project's ecosystem and requirements.

Sarama is a Go library for Apache Kafka.

Pros of sarama

- Focused Go client library for Apache Kafka

- More lightweight and specialized for Kafka interactions

- Active development with frequent updates and bug fixes

Cons of sarama

- Limited to Go language, unlike the multi-language examples in examples

- Lacks comprehensive tutorials and demo applications

- Steeper learning curve for beginners compared to examples

Code Comparison

sarama (Go):

producer, err := sarama.NewSyncProducer([]string{"localhost:9092"}, nil)

if err != nil {

    log.Fatalln(err)

}

defer func() { _ = producer.Close() }()

msg := &sarama.ProducerMessage{Topic: "test", Value: sarama.StringEncoder("test message")}

examples (Java):

Properties props = new Properties();

props.put("bootstrap.servers", "localhost:9092");

props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");

props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");

Producer<String, String> producer = new KafkaProducer<>(props);

producer.send(new ProducerRecord<>("test", "test message"));

Both repositories serve different purposes. sarama is a dedicated Go client library for Kafka, offering a more focused and lightweight approach. examples provides a broader range of examples across multiple languages and Kafka-related technologies, making it more suitable for learning and exploring various Kafka use cases.

Dockerfile for Apache Kafka

Pros of kafka-docker

- Lightweight and focused specifically on Kafka Docker setup

- Easy to customize and extend for specific use cases

- Widely adopted in the community with frequent updates

Cons of kafka-docker

- Limited to Kafka-only setup, lacking additional ecosystem components

- Less comprehensive documentation compared to examples

- May require more manual configuration for advanced scenarios

Code Comparison

kafka-docker:

version: '2'

services:

  zookeeper:

    image: wurstmeister/zookeeper

    ports:

      - "2181:2181"

  kafka:

    build: .

    ports:

      - "9092:9092"

examples:

version: '2'

services:

  zookeeper:

    image: confluentinc/cp-zookeeper:latest

    environment:

      ZOOKEEPER_CLIENT_PORT: 2181

      ZOOKEEPER_TICK_TIME: 2000

  kafka:

    image: confluentinc/cp-kafka:latest

    depends_on:

      - zookeeper

    environment:

      KAFKA_BROKER_ID: 1

      KAFKA_ZOOKEEPER_CONNECT: zookeeper:2181

The kafka-docker repository provides a simpler Docker Compose configuration, focusing solely on Kafka and Zookeeper. In contrast, the examples repository offers a more comprehensive setup with additional configuration options and environment variables, reflecting its broader scope within the Confluent ecosystem.

While kafka-docker is ideal for quick Kafka setups and experimentation, examples provides a more production-ready configuration with additional components and best practices from Confluent.
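The Confluent images in the Compose file above are configured through environment variables such as `KAFKA_BROKER_ID` and `KAFKA_ZOOKEEPER_CONNECT`. As described in Confluent's Docker configuration documentation, a broker property name is turned into a variable name by adding the `KAFKA_` prefix, uppercasing, and replacing a period with one underscore, an underscore with two, and a dash with three. The sketch below applies that rule; the function name `to_kafka_env` is hypothetical, and the mapping should be checked against the documentation for the image version in use.

```python
def to_kafka_env(prop, prefix="KAFKA_"):
    """Convert a broker property name into a cp-kafka environment variable name."""
    # Escape existing underscores and dashes first, so that the underscores
    # introduced for periods in the last step are not doubled again.
    name = prop.replace("_", "__").replace("-", "___").replace(".", "_")
    return prefix + name.upper()


print(to_kafka_env("broker.id"))          # KAFKA_BROKER_ID
print(to_kafka_env("zookeeper.connect"))  # KAFKA_ZOOKEEPER_CONNECT
```

The two printed names match the variables used in the Compose file above.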

Kafka (and Zookeeper) in Docker

Pros of docker-kafka

- Lightweight and focused solely on Kafka containerization

- Easier to set up and run for simple Kafka environments

- Suitable for quick prototyping and development purposes

Cons of docker-kafka

- Limited scope compared to the comprehensive examples in examples

- Lacks advanced configurations and integrations with other Confluent ecosystem components

- May not be suitable for production-grade deployments without additional customization

Code Comparison

examples:

---

version: '2'

services:

  zookeeper:

    image: confluentinc/cp-zookeeper:7.3.0

    hostname: zookeeper

    container_name: zookeeper

    ports:

      - "2181:2181"

docker-kafka:

FROM openjdk:8-jre

ENV KAFKA_VERSION=2.8.0

ENV SCALA_VERSION=2.13

RUN apt-get update && apt-get install -y wget

RUN wget -q https://archive.apache.org/dist/kafka/${KAFKA_VERSION}/kafka_${SCALA_VERSION}-${KAFKA_VERSION}.tgz

The examples repository provides a more comprehensive set of examples and configurations for various Confluent Platform components, making it suitable for learning and implementing complex Kafka-based solutions. It offers a wider range of use cases and integrations with other Confluent tools.

On the other hand, docker-kafka focuses specifically on containerizing Kafka, providing a simpler and more lightweight solution for running Kafka in Docker. It's ideal for developers who need a quick Kafka setup for testing or development purposes but may require additional work for production environments.

Bitnami container images

Pros of containers

- Broader scope, covering a wide range of containerized applications

- More frequent updates and active maintenance

- Larger community and user base, leading to better support and resources

Cons of containers

- Less focused on specific technologies or use cases

- May require more configuration for specialized deployments

- Potentially steeper learning curve for beginners due to its breadth

Code Comparison

examples:

---

version: '2'

services:

  zookeeper:

    image: confluentinc/cp-zookeeper:7.3.0

    hostname: zookeeper

    container_name: zookeeper

containers:

FROM docker.io/bitnami/minideb:bullseye

ARG TARGETARCH

LABEL org.opencontainers.image.base.name="docker.io/bitnami/minideb:bullseye" \

org.opencontainers.image.created="2023-06-22T14:04:44Z" \

org.opencontainers.image.description="Application packaged by VMware, Inc" \

Summary

While examples provides specialized, targeted demos for Confluent Platform and the Kafka streaming ecosystem, containers covers a much wider variety of containerized applications, with frequent updates and a larger community. The choice between the two depends on the specific needs of the project and the level of specialization required.

README

- Overview

- Where to Start

- Confluent Cloud

- Stream Processing

- Data Pipelines

- Confluent Platform

- Build Your Own

- Additional Demos

Overview

This is a curated list of demos that showcase Apache Kafka® event stream processing on the Confluent Platform, an event stream processing platform that enables you to process, organize, and manage massive amounts of streaming data across cloud, on-prem, and serverless deployments.

Where to Start

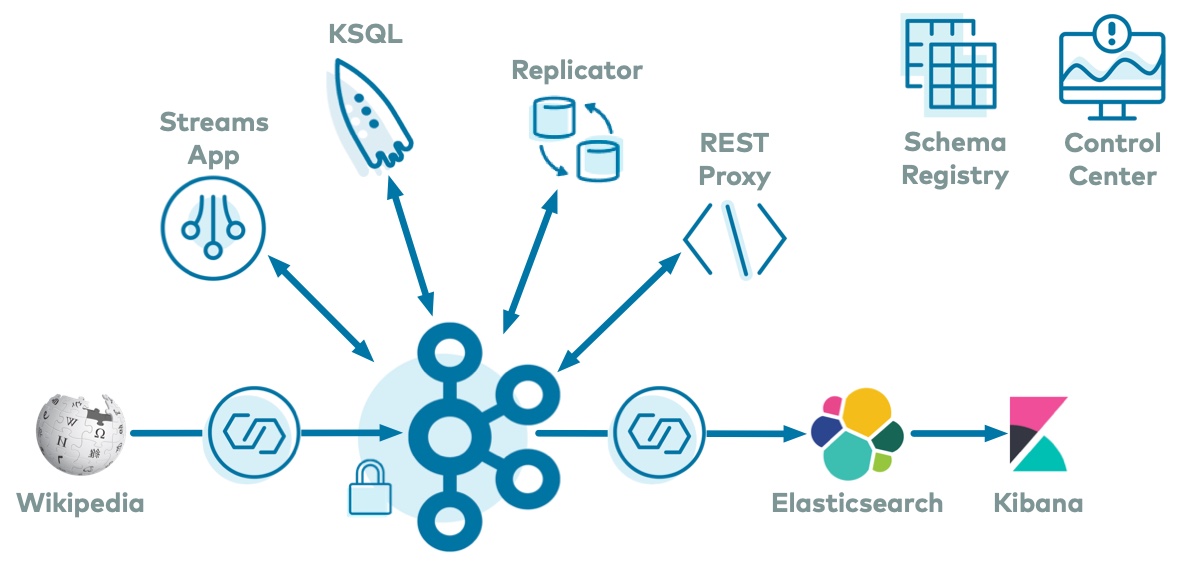

The best demo to start with is cp-demo. It spins up a Kafka event streaming application that uses ksqlDB for stream processing, with many security features enabled. The application runs as an end-to-end streaming ETL pipeline: a source connector pulls from live data, and a sink connector writes to Elasticsearch, with Kibana for visualizations.

cp-demo also comes with a tutorial and is a great configuration reference for Confluent Platform.

Confluent Cloud

The examples range from full end-to-end demos that create connectors, streams, and KSQL queries in Confluent Cloud to resources that help you build your own demos. Documentation and instructions for all Confluent Cloud demos are at https://docs.confluent.io/platform/current/tutorials/examples/ccloud/docs/ccloud-demos-overview.html

| Demo | Local | Docker | Description |

|---|---|---|---|

| Confluent CLI | Y | N | Fully automated demo interacting with your Confluent Cloud cluster using the Confluent CLI  |

| Clients in Various Languages to Cloud | Y | N | Client applications, showcasing producers and consumers, in various programming languages connecting to Confluent Cloud  |

| Cloud ETL | Y | N | Fully automated cloud ETL solution using Confluent Cloud connectors (AWS Kinesis, Postgres with AWS RDS, GCP GCS, AWS S3, Azure Blob) and fully-managed ksqlDB  |

| ccloud-stack | Y | N | Creates a fully-managed stack in Confluent Cloud, including a new environment, service account, Kafka cluster, KSQL app, Schema Registry, and ACLs. The demo also generates a config file for use with client applications. |

| On-Prem Kafka to Cloud | N | Y | Module 2 of Confluent Platform demo (cp-demo) with a playbook for copying data between the on-prem and Confluent Cloud clusters  |

| DevOps for Apache Kafka® with Kubernetes and GitOps | N | N | Simulated production environment running a streaming application targeting Apache Kafka on Confluent Cloud using Kubernetes and GitOps  |
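The ccloud-stack entry in the table above mentions a generated config file for client applications. Assuming that file consists of `key=value` lines with `#` comments (an assumption about its format; check the generated file), a sketch for loading it into a dictionary follows. The function name `read_client_config` is hypothetical.

```python
def read_client_config(path):
    """Parse a key=value config file into a dict, skipping blanks and comments."""
    conf = {}
    with open(path, encoding="utf-8") as fh:
        for line in fh:
            line = line.strip()
            if not line or line.startswith("#"):
                continue
            # Split on the first '=' only, so values that contain '=' stay intact.
            key, sep, value = line.partition("=")
            if sep:
                conf[key.strip()] = value.strip()
    return conf
```

The resulting dictionary may still need filtering before it is handed to a particular client library, since not every client accepts every key that such a file can contain.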

Stream Processing

| Demo | Local | Docker | Description |

|---|---|---|---|

| Clickstream | N | Y | Automated version of the ksqlDB clickstream demo  |

| Kafka Tutorials | Y | Y | Collection of common event streaming use cases, with each tutorial featuring an example scenario and several complete code solutions  |

| Microservices ecosystem | N | Y | Microservices orders Demo Application integrated into the Confluent Platform  |

Data Pipelines

| Demo | Local | Docker | Description |

|---|---|---|---|

| Clients in Various Languages | Y | N | Client applications, showcasing producers and consumers, in various programming languages  |

| Connect and Kafka Streams | Y | N | Demonstrate various ways, with and without Kafka Connect, to get data into Kafka topics and then loaded for use by the Kafka Streams API  |

Confluent Platform

| Demo | Local | Docker | Description |

|---|---|---|---|

| Avro | Y | N | Client applications using Avro and Confluent Schema Registry  |

| CP Demo | N | Y | Confluent Platform demo (cp-demo) with a playbook for Kafka event streaming ETL deployments  |

| Kubernetes | N | Y | Demonstrations of Confluent Platform deployments using the Confluent Operator  |

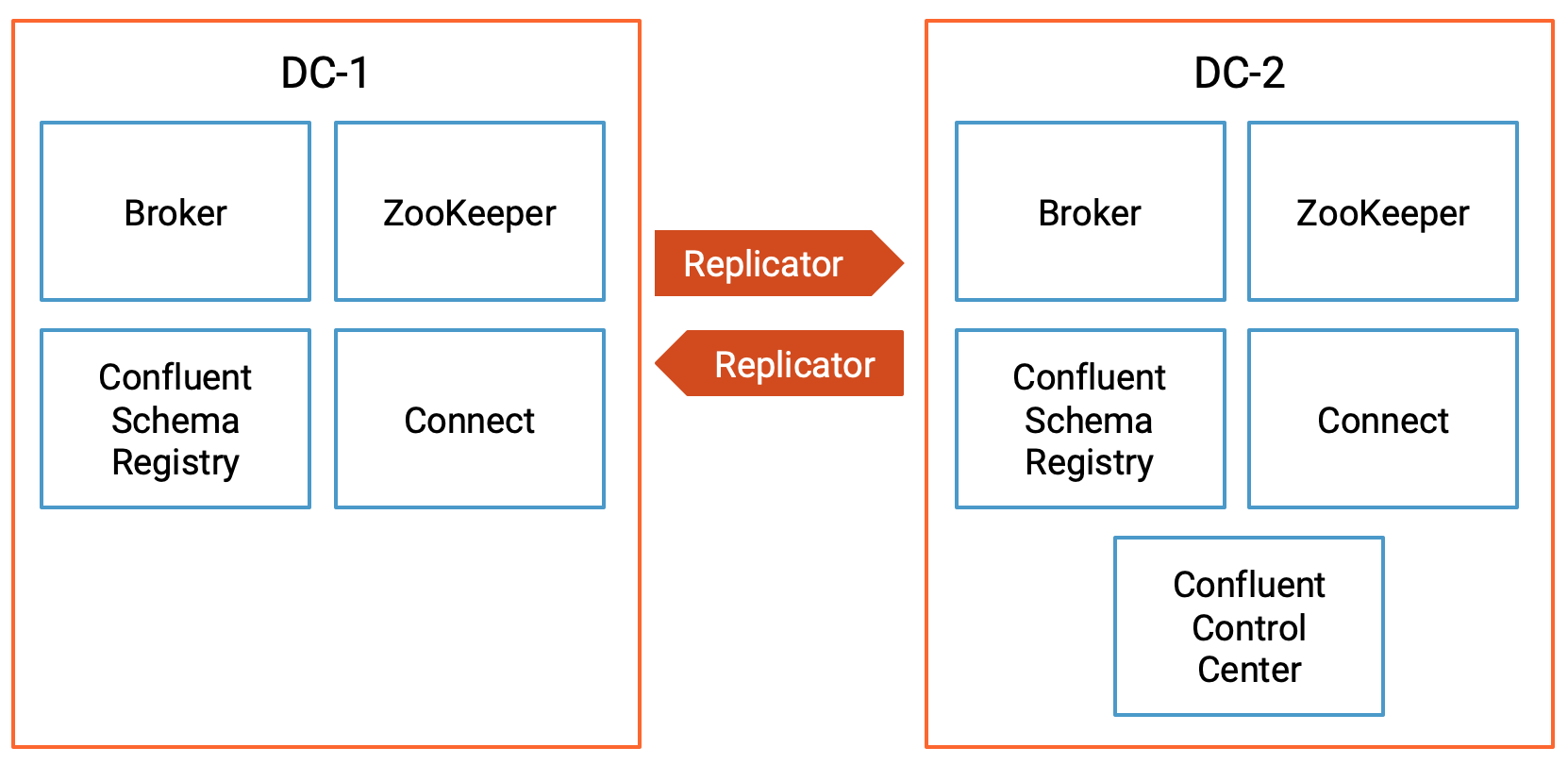

| Multi Datacenter | N | Y | Active-active multi-datacenter design with two instances of Confluent Replicator copying data bidirectionally between the datacenters  |

| Multi-Region Clusters | N | Y | Multi-Region clusters (MRC) with follower fetching, observers, and replica placement |

| Quickstart | Y | Y | Automated version of the Confluent Quickstart: for Confluent Platform on local install or Docker, community version, and Confluent Cloud  |

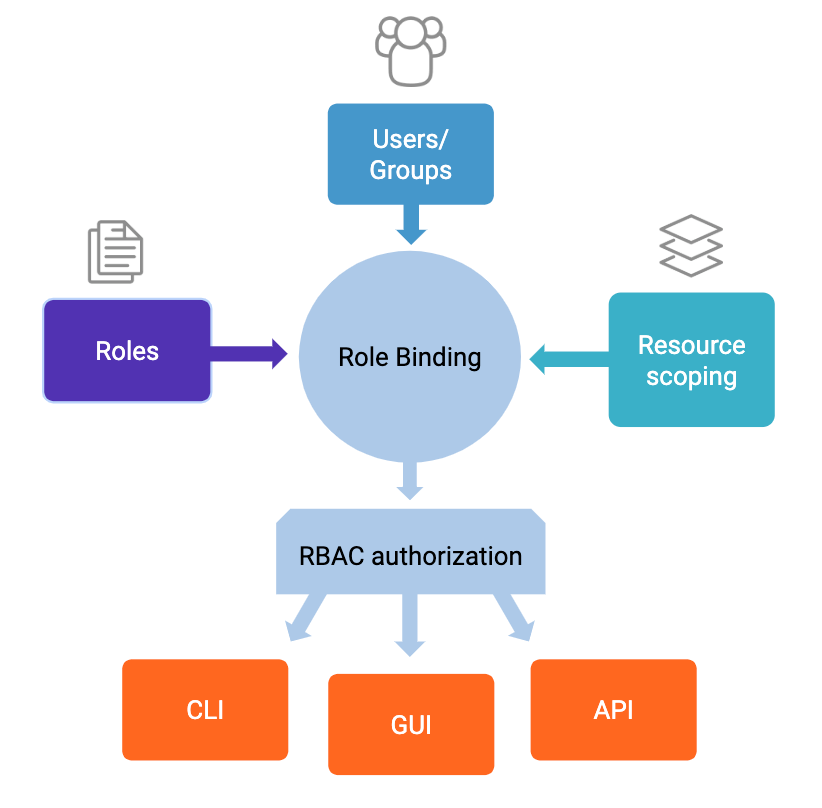

| Role-Based Access Control | Y | Y | Role-based Access Control (RBAC) provides granular privileges for users and service accounts  |

| Replicator Security | N | Y | Demos of various security configurations supported by Confluent Replicator and examples of how to implement them  |

Build Your Own

As a next step, you may want to build your own custom demo or test environment. We have several resources that launch just the services in Confluent Cloud or on prem, with no pre-configured connectors, data sources, topics, schemas, etc. Using these as a foundation, you can then add any connectors or applications. You can find the documentation and instructions for these "build-your-own" resources at https://docs.confluent.io/platform/current/tutorials/build-your-own-demos.html.

Additional Demos

Here are additional GitHub repos that offer an incredible set of nearly a hundred other Apache Kafka demos. They are not maintained on a per-release basis like the demos in this repo, but they are an invaluable resource.
