diffusionbee-stable-diffusion-ui

Diffusion Bee is the easiest way to run Stable Diffusion locally on your M1 Mac. Comes with a one-click installer. No dependencies or technical knowledge needed.

Top Related Projects

Stable Diffusion web UI

Invoke is a leading creative engine for Stable Diffusion models, empowering professionals, artists, and enthusiasts to generate and create visual media using the latest AI-driven technologies. The solution offers an industry leading WebUI, and serves as the foundation for multiple commercial products.

High-Resolution Image Synthesis with Latent Diffusion Models

🤗 Diffusers: State-of-the-art diffusion models for image, video, and audio generation in PyTorch.

Quick Overview

DiffusionBee is a user-friendly macOS application for running Stable Diffusion locally on Intel and Apple Silicon Macs. It provides a graphical interface for generating images with AI and requires no technical knowledge or manual setup.

Pros

- Easy installation and setup for non-technical users

- Runs locally on Apple Silicon Macs, ensuring privacy and offline usage

- User-friendly interface for generating and managing AI-generated images

- Regular updates and improvements based on user feedback

Cons

- Limited to macOS (Intel or Apple Silicon Macs)

- Image generation may be slower than on machines with a powerful dedicated GPU

- Lacks some advanced features found in other Stable Diffusion implementations

- Dependent on the development team for updates and new features

Getting Started

- Visit the DiffusionBee website

- Download the latest version of DiffusionBee for your macOS device

- Open the downloaded file and drag the DiffusionBee application to your Applications folder

- Launch DiffusionBee from your Applications folder

- Follow the on-screen instructions to start generating AI images

Note: DiffusionBee is a desktop application rather than a code library, so there is no API to install or import. The code snippets in the comparisons below are illustrative only.

Competitor Comparisons

Stable Diffusion web UI

Pros of stable-diffusion-webui

- More extensive features and customization options

- Supports a wider range of models and extensions

- Active development with frequent updates and community contributions

Cons of stable-diffusion-webui

- Requires more technical knowledge to set up and use

- May have higher system requirements for optimal performance

- Not as user-friendly for beginners compared to DiffusionBee

Code Comparison

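DiffusionBee (Python, illustrative):

    import torch

    def generate_image(prompt, num_inference_steps=50, guidance_scale=7.5):
        # `pipeline` is assumed to be an already-loaded text-to-image pipeline.
        # Inference only, so gradient tracking is disabled.
        with torch.no_grad():
            image = pipeline(prompt, num_inference_steps=num_inference_steps, guidance_scale=guidance_scale).images[0]
        return image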

stable-diffusion-webui (Python):

    # Assumes the web UI's own `shared`, `opts`, and `processing` objects are in scope.
    def generate(prompt, steps=50, cfg_scale=7.0, sampler_name='Euler a', batch_size=1):
        p = StableDiffusionProcessing(
            sd_model=shared.sd_model,
            outpath_samples=opts.outdir_samples or opts.outdir_txt2img_samples,
            prompt=prompt,
            steps=steps,
            cfg_scale=cfg_scale,
            sampler_name=sampler_name,
            batch_size=batch_size,
        )
        processed = processing.process_images(p)
        return processed.images

The code comparison shows that stable-diffusion-webui offers more granular control over the generation process, with additional parameters and options available for fine-tuning the output.

Invoke is a leading creative engine for Stable Diffusion models, empowering professionals, artists, and enthusiasts to generate and create visual media using the latest AI-driven technologies. The solution offers an industry leading WebUI, and serves as the foundation for multiple commercial products.

Pros of InvokeAI

- More advanced features and customization options

- Supports multiple AI models and pipelines

- Active development with frequent updates and community contributions

Cons of InvokeAI

- More complex setup and installation process

- Steeper learning curve for beginners

- Higher system requirements

Code Comparison

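DiffusionBee (illustrative):

    import torch

    def generate_image(prompt, num_inference_steps=50, guidance_scale=7.5):
        # `pipe` is assumed to be an already-loaded text-to-image pipeline.
        # CUDA autocast is not available on Macs, so only gradients are disabled.
        with torch.no_grad():
            image = pipe(prompt, num_inference_steps=num_inference_steps, guidance_scale=guidance_scale).images[0]
        return image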

InvokeAI (illustrative):

    # `Generator`, `model`, and `device` are assumed to be defined elsewhere.
    def generate_image(prompt, steps=50, cfg_scale=7.5, width=512, height=512, sampler_name='k_lms'):
        generator = Generator(model, device)
        result = generator.generate(prompt, sampler_name=sampler_name, steps=steps, cfg_scale=cfg_scale,
                                    width=width, height=height)
        return result.image

The code snippets show that InvokeAI offers more parameters and flexibility in image generation, while DiffusionBee provides a simpler interface. InvokeAI's code allows for specifying dimensions and sampler type, reflecting its more advanced feature set.
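The snippets above use different keyword names for the same two settings: `num_inference_steps` and `guidance_scale` in the `generate_image` examples, `steps` and `cfg_scale` in the stable-diffusion-webui and InvokeAI examples. As a rough sketch of how one set of values could be translated between the two naming styles, the following uses a hypothetical `GenerationSettings` class. Its name, fields, and methods are assumptions made for illustration and are not part of any of these projects.

```python
from dataclasses import dataclass


@dataclass
class GenerationSettings:
    """Tool-neutral settings; the field names are this sketch's own choice."""
    prompt: str
    steps: int = 50
    guidance: float = 7.5

    def as_pipeline_kwargs(self) -> dict:
        # Keyword names used by the generate_image snippets above.
        return {
            "prompt": self.prompt,
            "num_inference_steps": self.steps,
            "guidance_scale": self.guidance,
        }

    def as_webui_kwargs(self) -> dict:
        # Keyword names used by the webui and InvokeAI snippets above.
        return {
            "prompt": self.prompt,
            "steps": self.steps,
            "cfg_scale": self.guidance,
        }


settings = GenerationSettings("A beautiful sunset over the ocean", steps=30)
print(settings.as_pipeline_kwargs())
print(settings.as_webui_kwargs())
```

Keeping the values in one place and renaming them at the boundary makes it easier to compare outputs across tools with the same step count and guidance strength.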

High-Resolution Image Synthesis with Latent Diffusion Models

Pros of stablediffusion

- More comprehensive and flexible codebase for advanced users and researchers

- Supports a wider range of models and architectures

- Offers more customization options and fine-tuning capabilities

Cons of stablediffusion

- Steeper learning curve and more complex setup process

- Requires more technical knowledge to use effectively

- May have higher hardware requirements for optimal performance

Code Comparison

diffusionbee-stable-diffusion-ui (illustrative):

    # `scheduler`, `pipeline`, and `latents_to_image` are assumed to be defined elsewhere.
    def generate_image(prompt, num_inference_steps=50, guidance_scale=7.5):
        with torch.no_grad():
            latents = torch.randn((1, 4, 64, 64))
            scheduler.set_timesteps(num_inference_steps)
            for t in scheduler.timesteps:
                # Simplified generation process
                latents = pipeline(prompt, latents, t, guidance_scale)
        return latents_to_image(latents)

stablediffusion (simplified sketch):

    import torch
    from tqdm import trange

    # `make_schedule` is assumed to be defined elsewhere.
    @torch.no_grad()
    def sample(model, x, t, c, steps, eta):
        alphas, sigmas = make_schedule(steps, device=x.device)
        for i in trange(steps):
            with torch.enable_grad():
                x = x.detach().requires_grad_()
                t_enc = torch.tensor([t]).to(x.device)
                c_enc = model.encode_first_stage(c)
                e_t = model.apply_model(x, t_enc, c_enc)
            # More advanced sampling process
            x = x - alphas[i] * e_t + sigmas[i] * torch.randn_like(x)
        return x

The code comparison shows that stablediffusion offers more advanced and customizable sampling processes, while diffusionbee-stable-diffusion-ui provides a simpler, more user-friendly approach to image generation.
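The `torch.randn((1, 4, 64, 64))` call in the first snippet hard-codes the latent shape for a single 512x512 image. As a minimal sketch, assuming the 4 latent channels and 8x spatial downsampling of a Stable Diffusion 1.x style autoencoder, the shape for other image sizes could be computed as shown here. The helper name `latent_shape` is hypothetical and belongs to neither project.

```python
def latent_shape(width: int, height: int, batch_size: int = 1,
                 channels: int = 4, downscale: int = 8) -> tuple:
    """Return the (batch, channels, height, width) latent shape for an image size.

    Assumes an SD 1.x style autoencoder: 4 latent channels, 8x downsampling.
    """
    if width % downscale or height % downscale:
        raise ValueError(f"width and height must be multiples of {downscale}")
    return (batch_size, channels, height // downscale, width // downscale)


print(latent_shape(512, 512))  # (1, 4, 64, 64), the shape used in the snippet above
print(latent_shape(768, 512))  # (1, 4, 64, 96)
```

Note that the latent tensor is ordered height before width, so a 768-wide, 512-tall image yields 64 rows and 96 columns.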

🤗 Diffusers: State-of-the-art diffusion models for image, video, and audio generation in PyTorch.

Pros of diffusers

- More comprehensive library with support for multiple diffusion models and techniques

- Actively maintained by Hugging Face with frequent updates and improvements

- Extensive documentation and examples for various use cases

Cons of diffusers

- Steeper learning curve for beginners compared to DiffusionBee's user-friendly interface

- Requires more setup and configuration to get started

- May be overkill for users who only need basic image generation capabilities

Code Comparison

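DiffusionBee (hypothetical pseudocode; DiffusionBee is a GUI app rather than a code library, so the `diffusionbee` module below is invented to illustrate the equivalent workflow):

    from diffusionbee import DiffusionBee

    bee = DiffusionBee()
    image = bee.text_to_image("A beautiful sunset over the ocean")
    image.save("sunset.png")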

diffusers:

    from diffusers import StableDiffusionPipeline
    import torch

    pipe = StableDiffusionPipeline.from_pretrained("runwayml/stable-diffusion-v1-5", torch_dtype=torch.float16)
    # "cuda" requires an NVIDIA GPU.
    pipe = pipe.to("cuda")
    image = pipe("A beautiful sunset over the ocean").images[0]
    image.save("sunset.png")

The diffusers library offers more flexibility and control over the generation process, while DiffusionBee provides a simpler interface for quick image generation. diffusers is better suited for developers and researchers who need advanced features, while DiffusionBee is ideal for users who want a straightforward, GUI-based solution for Stable Diffusion image generation.
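The diffusers snippet above moves the pipeline to `"cuda"`, which is not available on the Macs that DiffusionBee targets. A minimal sketch of choosing a device string is shown here, assuming PyTorch's `"mps"` (Metal) backend is the relevant alternative on Apple Silicon. The helper `pick_device` is hypothetical, and it takes plain booleans so that the example runs without PyTorch installed.

```python
def pick_device(cuda_available: bool, mps_available: bool) -> str:
    """Prefer CUDA, then Apple's Metal backend (MPS), then fall back to CPU."""
    if cuda_available:
        return "cuda"
    if mps_available:
        return "mps"
    return "cpu"


# With PyTorch installed, the two flags would come from
# torch.cuda.is_available() and torch.backends.mps.is_available().
print(pick_device(cuda_available=False, mps_available=True))  # mps
```

The returned string would then replace the hard-coded `"cuda"` in the `pipe.to(...)` call.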

README

Diffusion Bee - Stable Diffusion GUI App for MacOS

Diffusion Bee is the easiest way to run Stable Diffusion locally on your Intel / M1 Mac. Comes with a one-click installer. No dependencies or technical knowledge needed.

- Runs locally on your computer. No data is sent to the cloud, other than requests to download the weights or images you choose to upload.

- If you like Diffusion Bee, consider checking out https://Liner.ai, a one-click tool to train machine learning models

Download at https://diffusionbee.com/

For prompt ideas visit https://arthub.ai

Join the Discord server: https://discord.gg/t6rC5RaJQn

Features

- Full data privacy: nothing is sent to the cloud (unless you choose to upload an image)

- Clean and easy to use UI with one-click installer

- Image to image

- Supported models: SD 1.x, SD 2.x, SD XL, Inpainting, ControlNet, LoRA

- Download models from the app

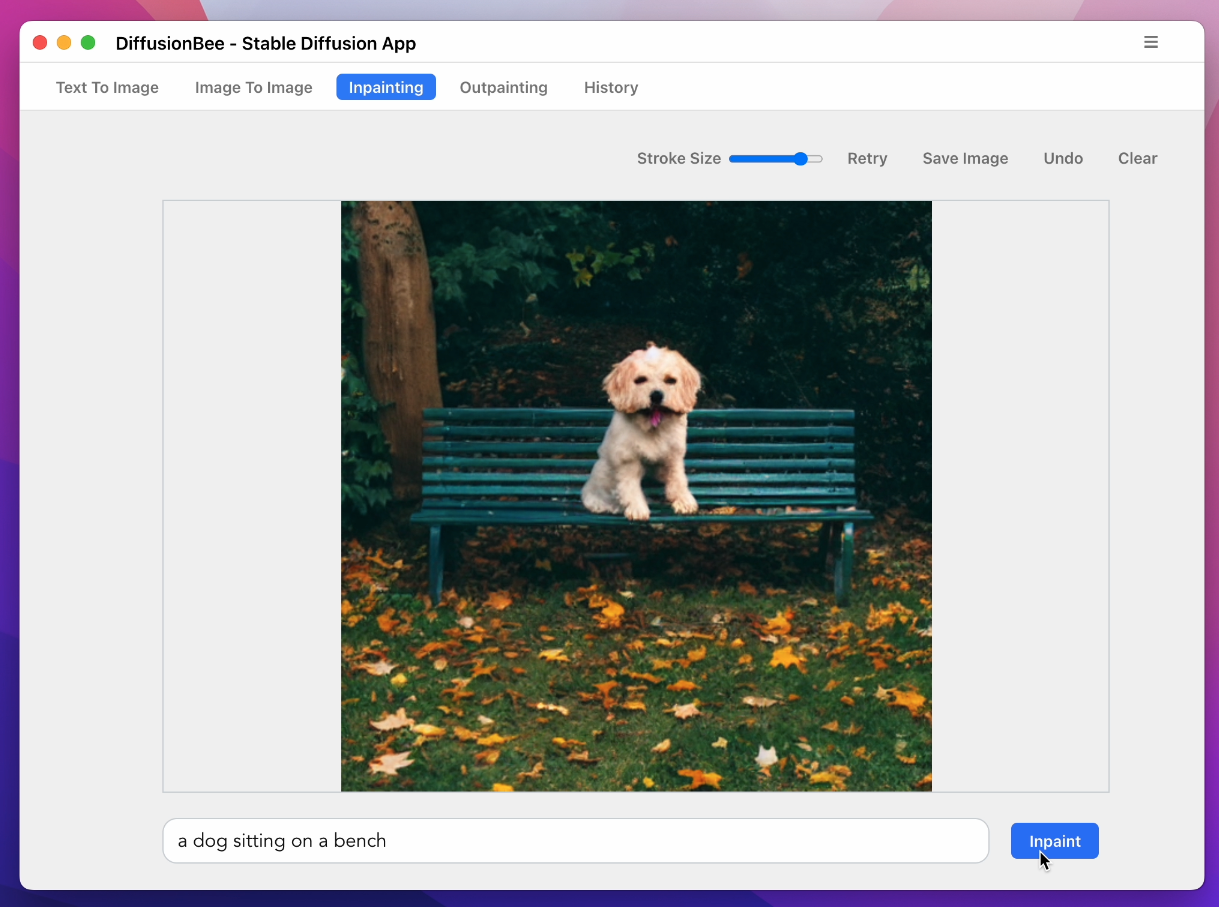

- In-painting

- Out-painting

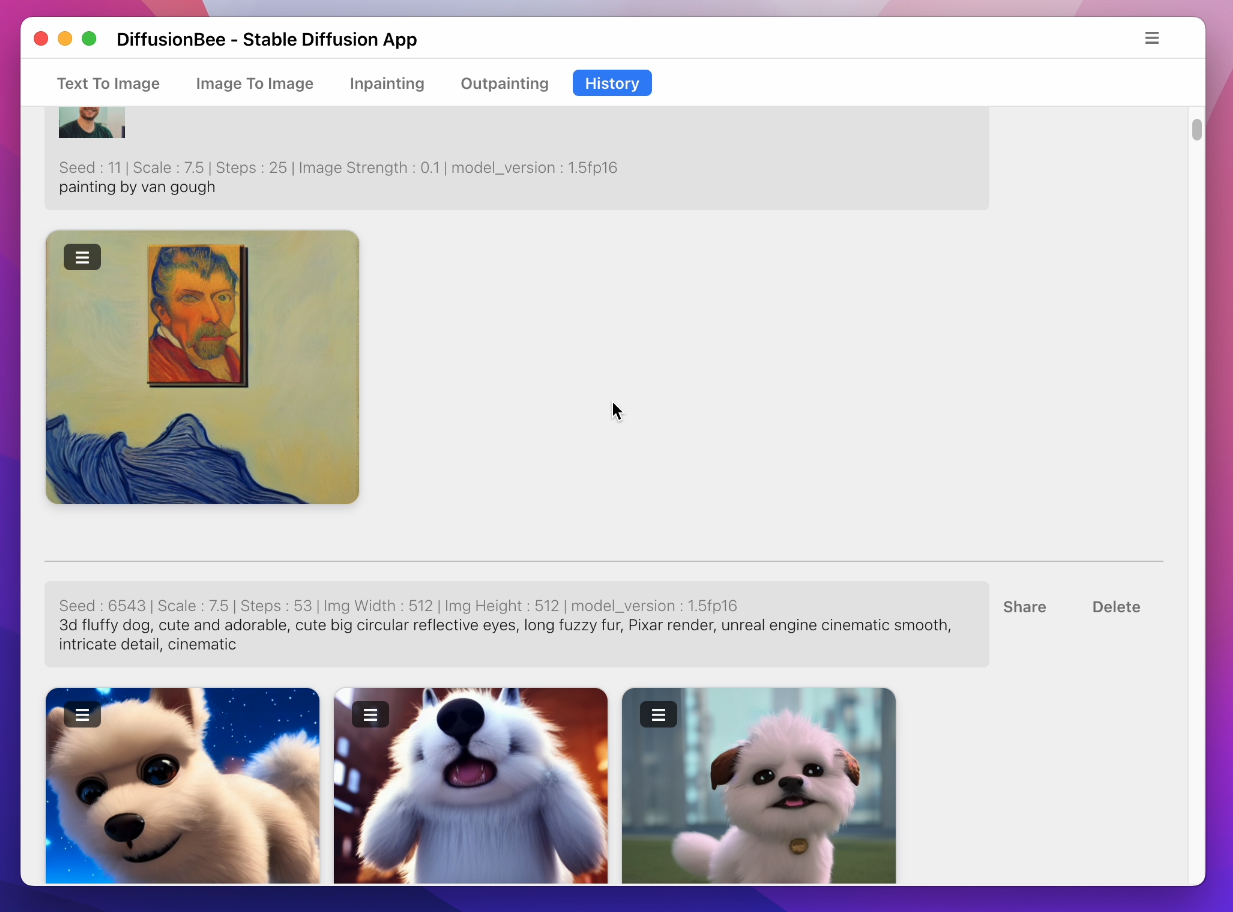

- Generation history

- Upscaling images

- Multiple image sizes

- Optimized for M1/M2 Chips

- Runs locally on your computer

- Negative prompts

- Advanced prompt options

- ControlNet

How to use

- Download and start the application

- Enter a prompt and click generate

Screenshots: Text to image, Image to image, Multiple Apps, Image to image with mask, Inpainting, Advanced AI Canvas, ControlNet, Download Models, History.

To learn more, visit the documentation.

Requirements

- Mac with Intel or M1/M2 CPU

- For Intel: macOS 12.3.1 or later

- For M1/M2: macOS 11.0.0 or later
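The requirements above can be checked programmatically. The following is a minimal sketch using Python's standard `platform` module. It assumes `platform.machine()` reports `"x86_64"` on Intel Macs and `"arm64"` on Apple Silicon Macs, and the helper names `parse_version` and `meets_requirements` are hypothetical.

```python
import platform

# Minimum macOS versions taken from the Requirements list above.
MIN_MACOS = {"x86_64": (12, 3, 1), "arm64": (11, 0, 0)}


def parse_version(text: str) -> tuple:
    """Turn '12.3' or '12.3.1' into a 3-tuple, padding missing parts with 0."""
    parts = [int(p) for p in text.split(".")[:3]]
    return tuple(parts + [0] * (3 - len(parts)))


def meets_requirements(machine: str, macos_version: str) -> bool:
    """Check an architecture string and macOS version against MIN_MACOS."""
    minimum = MIN_MACOS.get(machine)
    return minimum is not None and parse_version(macos_version) >= minimum


if __name__ == "__main__":
    # platform.mac_ver()[0] is an empty string on non-macOS systems.
    version = platform.mac_ver()[0]
    if version:
        print(meets_requirements(platform.machine(), version))
    else:
        print("Not running on macOS")
```

For example, `meets_requirements("x86_64", "12.3")` is false because Intel Macs need at least 12.3.1, while `meets_requirements("arm64", "11.0")` is true.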

License: Stable Diffusion is released under the CreativeML OpenRAIL M license: https://github.com/CompVis/stable-diffusion/blob/main/LICENSE. Diffusion Bee is just a GUI wrapper on top of Stable Diffusion, so all the terms of the Stable Diffusion license apply to its outputs.
