libmdbx

A potentia Ad Actum ★ Fullfast transactional key-value memory-mapped B-Tree storage engine without WAL ★ Surpasses the legendary LMDB in terms of reliability, features and performance

Top Related Projects

- FASTER: Fast persistent recoverable log and key-value store + cache, in C# and C++.

- RocksDB: A library that provides an embeddable, persistent key-value store for fast storage.

- LevelDB: LevelDB is a fast key-value storage library written at Google that provides an ordered mapping from string keys to string values.

- CouchDB: Seamless multi-primary syncing database with an intuitive HTTP/JSON API, designed for reliability

- FoundationDB: FoundationDB - the open source, distributed, transactional key-value store

Quick Overview

libmdbx is a robust, compact, and high-performance key-value database library. It's an improved and extended version of LMDB, offering enhanced features, better performance, and increased reliability. libmdbx is designed for embedded use and can be easily integrated into various applications.

Pros

- Extremely fast and efficient, with minimal memory footprint

- ACID-compliant with full CRUD functionality

- Supports multiple processes and threads concurrently

- Offers advanced features like range lookups and multi-versioning

Cons

- Limited documentation compared to more mainstream databases

- Steeper learning curve for developers unfamiliar with key-value stores

- May require more manual management compared to higher-level database solutions

- Not as widely adopted as some other database options

Code Examples

- Opening a database and performing a simple put operation:

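#include "mdbx.h"

MDBX_env *env;
MDBX_dbi dbi;
MDBX_txn *txn;
MDBX_val key, data;

/* Create the environment and open a single-file database (error checks omitted for brevity). */
mdbx_env_create(&env);
mdbx_env_open(env, "./mydb", MDBX_NOSUBDIR, 0664);

/* Begin a read-write transaction and open the default (unnamed) table. */
mdbx_txn_begin(env, NULL, MDBX_TXN_READWRITE, &txn);
mdbx_dbi_open(txn, NULL, MDBX_DB_DEFAULTS, &dbi);

key.iov_base = "mykey";
key.iov_len = 5;
data.iov_base = "myvalue";
data.iov_len = 7;

/* Insert or overwrite the record, then make the change durable. */
mdbx_put(txn, dbi, &key, &data, MDBX_UPSERT);
mdbx_txn_commit(txn);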

- Retrieving data from the database:

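MDBX_txn *txn;
MDBX_val key, data;

/* A read-only transaction works on a consistent snapshot and does not block writers. */
mdbx_txn_begin(env, NULL, MDBX_TXN_RDONLY, &txn);

key.iov_base = "mykey";
key.iov_len = 5;

/* On success, data points directly into the memory-mapped database (zero-copy). */
if (mdbx_get(txn, dbi, &key, &data) == MDBX_SUCCESS) {
    printf("Value: %.*s\n", (int)data.iov_len, (char *)data.iov_base);
}

/* Aborting is the usual way to finish a read-only transaction. */
mdbx_txn_abort(txn);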

- Iterating through database entries:

MDBX_txn *txn;
MDBX_cursor *cursor;
MDBX_val key, data;

mdbx_txn_begin(env, NULL, MDBX_TXN_RDONLY, &txn);
mdbx_cursor_open(txn, dbi, &cursor);

/* MDBX_NEXT on a fresh cursor starts at the first record; keys come back in sorted order. */
while (mdbx_cursor_get(cursor, &key, &data, MDBX_NEXT) == MDBX_SUCCESS) {
    printf("Key: %.*s, Value: %.*s\n",
           (int)key.iov_len, (char *)key.iov_base,
           (int)data.iov_len, (char *)data.iov_base);
}

/* Cursors must be closed explicitly. */
mdbx_cursor_close(cursor);
mdbx_txn_abort(txn);

Getting Started

To use libmdbx in your project:

- Clone the repository:

git clone https://github.com/erthink/libmdbx.git

- Build the library:

cd libmdbx
mkdir build && cd build
cmake ..
make

- Include the header in your C/C++ project:

#include <mdbx.h>

- Link against the built library when compiling your project.

For more detailed usage, refer to the examples in the repository and the API documentation.

Competitor Comparisons

Fast persistent recoverable log and key-value store + cache, in C# and C++.

Pros of FASTER

- Designed for high-performance concurrent operations, particularly suited for large-scale systems

- Supports both key-value and log storage, offering versatility for different use cases

- Provides hybrid log and in-memory index for efficient data access and management

Cons of FASTER

- More complex to set up and use compared to libmdbx's simpler API

- Primarily optimized for C# and .NET environments, which may limit its applicability in some scenarios

- Requires more careful tuning and configuration to achieve optimal performance

Code Comparison

FASTER (C#):

using (var session = store.NewSession(new SimpleFunctions<long, long>()))

{

session.Upsert(1, 1);

session.Read(1, out long value);

}

libmdbx (C):

MDBX_txn *txn;
mdbx_txn_begin(env, NULL, MDBX_TXN_READWRITE, &txn);
mdbx_put(txn, dbi, &key, &data, 0);
mdbx_get(txn, dbi, &key, &data);
mdbx_txn_commit(txn);

Both libraries offer high-performance key-value storage, but FASTER focuses on concurrent operations and hybrid storage, while libmdbx provides a simpler, lightweight solution with ACID compliance. FASTER may be preferred for large-scale .NET applications, while libmdbx is more suitable for embedded systems and cross-platform projects requiring a straightforward key-value store.

A library that provides an embeddable, persistent key-value store for fast storage.

Pros of RocksDB

- Highly optimized for SSDs and fast storage, offering excellent write performance

- Supports advanced features like column families and transactions

- Extensive documentation and wide industry adoption

Cons of RocksDB

- Higher memory usage compared to MDBX

- More complex configuration and tuning required

- Larger codebase and potentially steeper learning curve

Code Comparison

RocksDB example:

#include <rocksdb/db.h>

rocksdb::DB* db;

rocksdb::Options options;

options.create_if_missing = true;

rocksdb::Status status = rocksdb::DB::Open(options, "/path/to/db", &db);

MDBX example:

#include <mdbx.h>

MDBX_env *env;

mdbx_env_create(&env);

mdbx_env_open(env, "/path/to/db", MDBX_NOSUBDIR, 0664);

RocksDB offers a more feature-rich API with additional options, while MDBX provides a simpler, more straightforward interface. RocksDB is generally better suited for high-performance write-intensive workloads on SSDs, whereas MDBX excels in read-heavy scenarios and offers better memory efficiency. The choice between the two depends on specific use cases and performance requirements.

LevelDB is a fast key-value storage library written at Google that provides an ordered mapping from string keys to string values.

Pros of LevelDB

- Widely adopted and battle-tested in production environments

- Supports efficient range queries and iterators

- Offers good write performance, especially for bulk inserts

Cons of LevelDB

- Limited to single-process access, lacking multi-process support

- No built-in transactions or ACID compliance

- Requires manual compaction management for optimal performance

Code Comparison

LevelDB:

leveldb::DB* db;

leveldb::Options options;

options.create_if_missing = true;

leveldb::Status status = leveldb::DB::Open(options, "/tmp/testdb", &db);

MDBX:

MDBX_env *env;

mdbx_env_create(&env);

mdbx_env_open(env, "/tmp/testdb", MDBX_NOSUBDIR, 0664);

LevelDB uses a more C++-style API with classes and methods, while MDBX follows a C-style API with functions and pointers. LevelDB's API is generally more verbose but may be more familiar to C++ developers. MDBX's API is more concise and closer to the underlying database operations.

Both libraries offer key-value storage capabilities, but MDBX provides additional features like multi-process support, ACID transactions, and zero-copy reads. LevelDB, on the other hand, has a larger ecosystem and more language bindings available.

Seamless multi-primary syncing database with an intuitive HTTP/JSON API, designed for reliability

Pros of CouchDB

- Mature, widely-used document-oriented database with a large community

- Built-in web interface for easy management and data visualization

- Supports multi-master replication for distributed systems

Cons of CouchDB

- Higher resource usage compared to lightweight key-value stores

- Slower performance for simple key-value operations

- More complex setup and configuration for basic use cases

Code Comparison

CouchDB (JavaScript):

function(doc) {

if (doc.type === 'user') {

emit(doc._id, doc.name);

}

}

libmdbx (C):

MDBX_env *env;

mdbx_env_create(&env);

mdbx_env_open(env, "mydb", MDBX_NOSUBDIR, 0664);

Key Differences

- CouchDB is a full-featured document database, while libmdbx is a lightweight key-value store

- CouchDB offers a RESTful HTTP API, whereas libmdbx is a low-level embedded database library

- CouchDB supports complex queries and indexing, while libmdbx focuses on raw performance for simple operations

Use Cases

- CouchDB: Web applications, content management systems, real-time data synchronization

- libmdbx: Embedded systems, high-performance key-value storage, caching layers

FoundationDB - the open source, distributed, transactional key-value store

Pros of FoundationDB

- Distributed architecture, supporting high scalability and fault tolerance

- Multi-model database supporting key-value, document, and relational models

- Strong ACID guarantees with serializable isolation

Cons of FoundationDB

- More complex setup and maintenance due to distributed nature

- Steeper learning curve compared to simpler key-value stores

- Limited language bindings compared to some other databases

Code Comparison

FoundationDB (Python):

@fdb.transactional
def add_user(tr, user_id, name):
    tr[f'users/{user_id}/name'] = name

db = fdb.open()
add_user(db, '123', 'John Doe')

libmdbx (C):

MDBX_env *env;
mdbx_env_create(&env);
mdbx_env_open(env, "mydb", MDBX_NOSUBDIR, 0664);

MDBX_txn *txn;
MDBX_dbi dbi;
mdbx_txn_begin(env, NULL, MDBX_TXN_READWRITE, &txn);
/* Open the default (unnamed) table before writing to it. */
mdbx_dbi_open(txn, NULL, MDBX_DB_DEFAULTS, &dbi);

MDBX_val key = {.iov_base = "users/123/name", .iov_len = 14};
MDBX_val data = {.iov_base = "John Doe", .iov_len = 8};
mdbx_put(txn, dbi, &key, &data, 0);
mdbx_txn_commit(txn);

FoundationDB offers a higher-level abstraction with built-in transaction support, while libmdbx provides lower-level control over database operations. FoundationDB's distributed nature allows for greater scalability, but libmdbx may offer better performance for single-node deployments.

README

libmdbx

libmdbx is an extremely fast, compact, powerful, embedded, transactional key-value database, with Apache 2.0 license. libmdbx has a specific set of properties and capabilities, focused on creating unique lightweight solutions.

- Allows a swarm of multi-threaded processes to ACIDly read and update several key-value maps and multimaps in a locally-shared database.

- Provides extraordinary performance and minimal overhead through memory-mapping, with O(log N) operation costs by virtue of the B+ tree.

- Requires no maintenance and no crash recovery since it doesn't use WAL, but that might be a caveat for write-intensive workloads with durability requirements.

- Enforces serializability for writers with just a single mutex and affords wait-free operation for parallel readers without atomic/interlocked operations, while writing and reading transactions do not block each other.

- Guarantees data integrity after a crash unless this was explicitly neglected in favour of write performance.

- Supports Linux, Windows, MacOS, Android, iOS, FreeBSD, DragonFly, Solaris, OpenSolaris, OpenIndiana, NetBSD, OpenBSD and other systems compliant with POSIX.1-2008.

- Compact and friendly for fully embedding. Only ≈25KLOC of C11, ≈64K x86 binary code of core, no internal threads nor server process(es), but implements a simplified variant of the Berkeley DB and dbm API.

Historically, libmdbx is a deeply revised and extended descendant of the legendary Lightning Memory-Mapped Database. libmdbx inherits all benefits from LMDB, but resolves some issues and adds a set of improvements.

Please refer to the online documentation with the C API description and pay attention to the C++ API. Donations are welcome to the Ethereum/ERC-20 address 0xD104d8f8B2dC312aaD74899F83EBf3EEBDC1EA3A. Everything will be fine!

Telegram Group archive: 1, 2, 3, 4, 5, 6, 7.

Github

In the spring of 2022, without any warnings or explanations, the Github administration deleted my account and all projects. A few months later, without any involvement or notification from me, the projects were restored/opened in the "public read-only archive" status from some kind of incomplete backup. I regard these actions of Github as malicious sabotage, and I consider the Github service itself to have lost any trust forever.

As a result of what has happened, I will never, under any circumstances, post the primary sources (aka origins) of my projects on Github, or rely in any way on the Github infrastructure.

Nevertheless, realizing that it is more convenient for users of my projects to access them on Github, I do not want to restrict their freedom or create inconvenience, and therefore I place mirrors of my project repositories on Github. At the same time, I would like to emphasize once again that these are only mirrors that can be frozen, blocked or deleted at any time, as was the case in 2022.

MithrilDB and Future

The next version is under non-public development from scratch and will be released as MithrilDB and libmithrildb for libraries & packages. Admittedly mythical Mithril is resembling silver but being stronger and lighter than steel. Therefore MithrilDB is a rightly relevant name.

MithrilDB is radically different from libmdbx by the new database format and API based on C++20. The goal of this revolution is to provide a clearer and robust API, add more features and new valuable properties of the database. All fundamental architectural problems of libmdbx/LMDB have been solved there, but now the active development has been suspended for top-three reasons:

- For now libmdbx is mostly enough, and I'm busy with scalability.

- Waiting for a fresh Elbrus CPU of the e2k architecture, especially with hardware acceleration of Streebog and Kuznyechik, which are required for Merkle trees, etc.

- The expectation of needs and opportunities due to the wide use of NVDIMM (aka persistent memory), modern NVMe and Angara.

However, MithrilDB will not be available for countries unfriendly to Russia (i.e. acceded the sanctions, devil adepts and/or NATO). But it is not yet known whether such restriction will be implemented only through a license and support, either the source code will not be open at all. Basically I am not inclined to allow my work to contribute to the profit that goes to weapons that kill my relatives and friends. NO OPTIONS.

Nonetheless, I try not to make any promises regarding MithrilDB until release.

Contrary to MithrilDB, libmdbx will be forever free and open source, with high-quality support whenever possible. Tu deviens responsable pour toujours de ce que tu as apprivoisé. So I will continue to comply with the original open license and the principles of constructive cooperation, in spite of outright Github sabotage and sanctions. I will also try to keep (not drop) Windows support, even though it is an unused obsolete technology for us.

$ objdump -f -h -j .text libmdbx.so

libmdbx.so: file format elf64-e2k
architecture: elbrus-v6:64, flags 0x00000150:
HAS_SYMS, DYNAMIC, D_PAGED
start address 0x00000000??????00

Sections:
Idx Name Size VMA LMA File off Algn Flags
10 .text 000e7460 0000000000025c00 0000000000025c00 00025c00 2**10 CONTENTS, ALLOC, LOAD, READONLY, CODE

$ cc --version
lcc:1.27.14:Jan-31-2024:e2k-v6-linux
gcc (GCC) 9.3.0 compatible

Characteristics

Features

- Key-value data model, keys are always sorted.

- Multiple key-value tables/sub-databases within a single datafile.

- Range lookups, including range query estimation.

- Efficient support for short fixed length keys, including native 32/64-bit integers.

- Ultra-efficient support for multimaps. Multi-values sorted, searchable and iterable. Keys stored without duplication.

- Data is memory-mapped and accessible directly/zero-copy. Traversal of database records is extremely fast.

- Transactions for readers and writers, ones do not block others.

- Writes are strongly serialized. No transaction conflicts nor deadlocks.

- Readers are non-blocking, notwithstanding snapshot isolation.

- Nested write transactions.

- Reads scale linearly across CPUs.

- Continuous zero-overhead database compactification.

- Automatic on-the-fly database size adjustment.

- Customizable database page size.

- O(log N) cost of lookup, insert, update, and delete operations by virtue of B+ tree characteristics.

- Online hot backup.

- Append operation for efficient bulk insertion of pre-sorted data.

- No WAL nor any transaction journal. No crash recovery needed. No maintenance is required.

- No internal cache and/or memory management, all done by basic OS services.
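The O(log N) claim in the list above can be made concrete with a rough sketch. The function below is not part of libmdbx; it assumes a hypothetical constant fanout (entries per page), whereas real page occupancy varies with key and value sizes. It only shows how slowly the number of tree levels, and hence page visits per lookup, grows with the number of records.

```c
#include <stdint.h>

/* Illustrative only: the number of B+ tree levels needed to index `items`
 * records when every page holds up to `fanout` entries. `fanout` is a
 * hypothetical constant here, not a value reported by libmdbx.
 * Returns 0 for an invalid fanout (< 2). */
unsigned btree_height(uint64_t items, uint64_t fanout) {
    if (fanout < 2)
        return 0;
    unsigned height = 1;        /* a single leaf page is always present */
    uint64_t capacity = fanout; /* records reachable with `height` levels */
    while (capacity < items) {
        /* The next level would exceed 64 bits, so it certainly covers `items`. */
        if (capacity > UINT64_MAX / fanout)
            return height + 1;
        capacity *= fanout;
        ++height;
    }
    return height;
}
```

Under these assumptions, a billion records with 100 entries per page need only five levels, so a point lookup touches about five pages.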

Limitations

- Page size: a power of 2, minimum 256 (mostly for testing), maximum 65536 bytes, default 4096 bytes.

- Key size: minimum 0, maximum ≈½ pagesize (2022 bytes for default 4K pagesize, 32742 bytes for 64K pagesize).

- Value size: minimum 0, maximum 2146435072 (0x7FF00000) bytes for maps, ≈½ pagesize for multimaps (2022 bytes for default 4K pagesize, 32742 bytes for 64K pagesize).

- Write transaction size: up to 1327217884 pages (4.944272 TiB for default 4K pagesize, 79.108351 TiB for 64K pagesize).

- Database size: up to 2147483648 pages (≈8.0 TiB for default 4K pagesize, ≈128.0 TiB for 64K pagesize).

- Maximum tables/sub-databases: 32765.
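The TiB figures in the limits above follow directly from multiplying the page count by the page size. The helper below is a small illustrative sketch (the name pages_to_tib is made up for this example and is not a libmdbx function) that reproduces that arithmetic.

```c
#include <stdint.h>

/* Bytes in one tebibyte (2^40). */
#define TIB_BYTES (1024.0 * 1024.0 * 1024.0 * 1024.0)

/* Illustrative helper, not a libmdbx API: converts a page count and a
 * page size in bytes into tebibytes. */
double pages_to_tib(uint64_t pages, uint32_t pagesize) {
    return (double)pages * (double)pagesize / TIB_BYTES;
}
```

For example, 2147483648 pages of 4096 bytes give exactly 8 TiB, and the same page count with 65536-byte pages gives 128 TiB, matching the database size limits quoted above.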

Gotchas

- There cannot be more than one writer at a time, i.e. no more than one write transaction at a time.

- libmdbx is based on B+ tree, so access to database pages is mostly random. Thus SSDs provide a significant performance boost over spinning disks for large databases.

- libmdbx uses shadow paging instead of WAL. Thus syncing data to disk might be a bottleneck for write-intensive workloads.

- libmdbx uses copy-on-write for snapshot isolation during updates, but read transactions prevent recycling of old retired/freed pages, since they are still reading them. Thus altering data during a parallel long-lived read operation will increase the process working set, may exhaust the entire free database space, can make the database grow quickly, and results in performance degradation. Try to avoid long-running read transactions; otherwise use transaction parking and/or the Handle-Slow-Readers callback.

- libmdbx is extraordinarily fast and provides minimal overhead for data access, so you should reconsider using brute force techniques and double check your code. On the one hand, in the case of libmdbx, a simple linear search may be more profitable than complex indexes. On the other hand, if you do something suboptimally, you may notice the detrimental effect only on sufficiently large data.
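The long-lived reader gotcha above can be illustrated with a deliberately simplified model. The struct and function below are hypothetical and do not reflect libmdbx's actual bookkeeping; the only assumption is that a batch of pages retired by a write transaction becomes reusable once no active reader holds a snapshot older than that transaction.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical model: one batch of pages retired (replaced via
 * copy-on-write) by a single write transaction. */
struct retired_batch {
    uint64_t retired_by_txn; /* id of the write transaction that retired them */
    size_t page_count;       /* how many pages it retired */
};

/* Counts pages that may be recycled in this simplified model: a batch is
 * reusable only if the oldest active reader's snapshot is not older than
 * the transaction that retired the batch. */
size_t reclaimable_pages(const struct retired_batch *batches, size_t n,
                         uint64_t oldest_reader_snapshot) {
    size_t total = 0;
    for (size_t i = 0; i < n; ++i) {
        if (batches[i].retired_by_txn <= oldest_reader_snapshot)
            total += batches[i].page_count;
    }
    return total;
}
```

In this model, a reader stuck on snapshot 9 while writers 10, 11 and 12 retire pages keeps every one of those pages pinned, so the writer has to extend the database instead of reusing space. Once the reader moves to snapshot 12, all of them become reclaimable.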

Comparison with other databases

For now please refer to the chapter "BoltDB comparison with other databases", which is also (mostly) applicable to libmdbx with minor clarifications:

- a database can be shared by multiple processes, i.e. no multi-process issues;

- no issues with moving a cursor(s) after the deletion;

- libmdbx provides zero-overhead database compactification, so a database file can be shrunk/truncated in particular cases;

- excluding disk I/O time libmdbx could be ≈3 times faster than BoltDB and up to 10-100K times faster than both BoltDB and LMDB in particular extreme cases;

- libmdbx provides more features compared to BoltDB and/or LMDB.

Improvements beyond LMDB

libmdbx is superior to the legendary LMDB in terms of features and reliability, and not inferior in performance. In comparison to LMDB, libmdbx makes things "just work" perfectly and out-of-the-box, rather than silently and catastrophically break down. The list below is pruned down to the improvements most notable and obvious from the user's point of view.

Some Added Features

- Keys could be more than 2 times longer than LMDB.

For a DB with the default page size libmdbx supports keys up to 2022 bytes, and up to 32742 bytes for 64K page size. LMDB allows key sizes up to 511 bytes and may silently lose data with large values.

- Up to 30% faster than LMDB in CRUD benchmarks.

Benchmarks of the in-tmpfs scenarios, which test the speed of the engine itself, showed that libmdbx is 10-20% faster than LMDB, and up to 30% faster when libmdbx is compiled with specific build options which downgrade several runtime checks to match LMDB's behaviour.

However, libmdbx may be slower than LMDB on Windows, since it uses the native file locking API. These locks are really slow, but they prevent an inconsistent backup from being obtained by copying the DB file during an ongoing write transaction. So I think this is the right decision, and for speed, it's better to use Linux, or ask Microsoft to fix up file locks.

The results noted above and others can be easily reproduced with ioArena just by the make bench-quartet command, including comparisons with RocksDB and WiredTiger.

- Automatic on-the-fly database size adjustment, both increment and reduction.

libmdbx manages the database size according to parameters specified by the mdbx_env_set_geometry() function, which include the growth step and the truncation threshold.

Unfortunately, on-the-fly database size adjustment doesn't work under Wine due to its internal limitations and unimplemented functions, i.e. the MDBX_UNABLE_EXTEND_MAPSIZE error will be returned.

- Automatic continuous zero-overhead database compactification.

During each commit libmdbx merges freed pages which are adjacent to the unallocated area at the end of the file, and then truncates the unused space when there is enough of it.

- The same database format for 32- and 64-bit builds.

libmdbx database format depends only on the endianness but not on the bitness.

- The "Big Foot" feature that solves specific performance issues with huge transactions and extra-large page-number-lists.

- LIFO policy for Garbage Collection recycling. This can increase write performance by up to several times in the best case, thanks to the write-back disk cache.

LIFO means that the pages which became unused most recently are taken for reuse first. Therefore the loop of database pages circulation becomes as short as possible. In other words, the set of pages that are (over)written in memory and on disk during a series of write transactions will be as small as possible. This creates ideal conditions for the efficiency of a battery-backed or flash-backed disk cache.

- Parking of read transactions with ousting and auto-restart, and the Handle-Slow-Readers callback to resolve issues caused by long-lived read transactions.

- Fast estimation of range query result volume, i.e. how many items can be found between a KEY1 and a KEY2. This is a prerequisite for building and/or optimizing query execution plans.

libmdbx performs a rough estimate based on common B-tree pages of the paths from root to corresponding keys.

- Database integrity check API together with the standalone mdbx_chk utility.

- Support for opening databases in the exclusive mode, including on a network share.

- Extended information of whole-database, tables/sub-databases, transactions, readers enumeration.

libmdbx provides a lot of information, including dirty and leftover pages for a write transaction, reading lag and holdover space for read transactions.

- Support of zero-length keys and values.

- Useful runtime options for tuning the engine to the application's requirements and use-case specifics.

- Automated steady sync-to-disk upon several thresholds and/or timeout via cheap polling.

- Ability to determine whether the particular data is on a dirty page or not, which allows avoiding copy-out before updates.

- Extended update and delete operations.

libmdbx allows performing an update or delete at once with getting the previous value and addressing a particular item from a multi-value with the same key.

- Sequence generation and three persistent 64-bit vector-clock like markers.
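The effect of the LIFO recycling policy described in the list above can be sketched with a toy simulation. This is not libmdbx code: the pool size, the pages-per-transaction constant and the function name are all assumptions chosen for illustration. Each simulated write transaction takes a few free pages, writes them, and retires the pages written by the previous transaction. The function counts how many distinct pages get written overall.

```c
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

#define POOL_PAGES 100u   /* hypothetical number of pages in the file */
#define PAGES_PER_TXN 4u  /* hypothetical pages rewritten per transaction */

/* Toy model: returns how many distinct pages are written by `txn_count`
 * write transactions when free pages are reused in LIFO or FIFO order. */
size_t distinct_pages_written(size_t txn_count, bool lifo) {
    unsigned free_list[POOL_PAGES]; /* free pages, oldest release first */
    size_t free_len = POOL_PAGES;
    bool written[POOL_PAGES] = {false};
    unsigned live[PAGES_PER_TXN];   /* pages written by the previous txn */
    size_t live_len = 0;

    for (unsigned p = 0; p < POOL_PAGES; ++p)
        free_list[p] = p;

    for (size_t t = 0; t < txn_count; ++t) {
        unsigned fresh[PAGES_PER_TXN];
        for (size_t i = 0; i < PAGES_PER_TXN; ++i) {
            if (lifo) {
                /* Take the most recently released page. */
                fresh[i] = free_list[--free_len];
            } else {
                /* Take the page that has been free the longest. */
                fresh[i] = free_list[0];
                --free_len;
                memmove(free_list, free_list + 1,
                        free_len * sizeof free_list[0]);
            }
            written[fresh[i]] = true;
        }
        /* Copy-on-write: the previous version's pages are retired now. */
        for (size_t i = 0; i < live_len; ++i)
            free_list[free_len++] = live[i];
        memcpy(live, fresh, sizeof fresh);
        live_len = PAGES_PER_TXN;
    }

    size_t distinct = 0;
    for (unsigned p = 0; p < POOL_PAGES; ++p)
        distinct += written[p] ? 1u : 0u;
    return distinct;
}
```

In this model, 50 transactions under LIFO keep rewriting the same 8 pages, while FIFO eventually cycles through all 100. A small, stable set of hot pages is what lets a write-back disk cache absorb most of the writes.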

Other fixes and specifics

- Fixed more than 10 significant errors, in particular: page leaks, wrong table/sub-database statistics, segfault in several conditions, nonoptimal page merge strategy, updating an existing record with a change in data size (including for multimap), etc.

- All cursors can be reused and should be closed explicitly, regardless of whether they were opened within a write or read transaction.

- Opening database handles is free from race conditions, and pre-opening is not needed.

- Returning the MDBX_EMULTIVAL error in case of an ambiguous update or delete.

- Guarantee of database integrity even in asynchronous unordered write-to-disk mode.

libmdbx proposes an additional trade-off by MDBX_SAFE_NOSYNC with an append-like manner for updates, which avoids database corruption after a system crash, contrary to LMDB. Nevertheless, the MDBX_UTTERLY_NOSYNC mode is available to match LMDB's behaviour for MDB_NOSYNC.

- On MacOS & iOS the fcntl(F_FULLFSYNC) syscall is used by default to synchronize data with the disk, as this is the only way to guarantee data durability in case of power failure. Unfortunately, in scenarios with high write intensity, the use of F_FULLFSYNC significantly degrades performance compared to LMDB, where the fsync() syscall is used. Therefore, libmdbx allows you to override this behavior by defining the MDBX_OSX_SPEED_INSTEADOF_DURABILITY=1 option while building the library.

- On Windows the LockFileEx() syscall is used for locking, since it allows placing the database on network drives, and provides protection against incompetent user actions (aka poka-yoke). Therefore libmdbx may lag a little behind LMDB in performance tests, where named mutexes are used.

History

Historically, libmdbx is a deeply revised and extended descendant of the Lightning Memory-Mapped Database. At first the development was carried out within the ReOpenLDAP project. About a year later libmdbx was separated into a standalone project, which was presented at Highload++ 2015 conference.

Since 2017 libmdbx has been used in Fast Positive Tables, and until 2025 development was funded by Positive Technologies. Since 2020 libmdbx has been used in Ethereum: Erigon, Akula, Silkworm, Reth, etc.

On 2022-04-15 the Github administration, without any warning or explanation, deleted libmdbx along with a lot of other projects, simultaneously blocking access for many developers. Therefore on 2022-04-21 I migrated to a reliable trusted infrastructure. The origin for now is at GitFlic with backup at ABF by ROSA Lab. For the same reason Github is blacklisted forever.

Since May 2024 and version 0.13 libmdbx has been re-licensed under the Apache-2.0 license. Please refer to the COPYRIGHT file for license change explanations.

Acknowledgments

Howard Chu hyc@openldap.org and Hallvard Furuseth hallvard@openldap.org are the authors of LMDB, from which libmdbx was forked in 2015.

Martin Hedenfalk martin@bzero.se is the author of btree.c code, which was used to begin development of LMDB.

Usage

Currently, libmdbx is only available in source code form. Package support for common Linux distributions is planned for the future, after the release of version 1.0.

Source code embedding

libmdbx provides three official ways for integration in source code form:

- Using an amalgamated source code which is available in the releases section on GitFlic.

An amalgamated source code includes all files required to build and use libmdbx, but not for testing libmdbx itself. Besides the releases, amalgamated sources can be created at any time from the original clone of the git repository on Linux by executing make dist. As a result, the desired set of files will be formed in the dist subdirectory.

- Using Conan Package Manager:

  - optional: Set up your own conan-server;

  - Create the conan-package by running conan create . inside the libmdbx repo subdirectory;

  - optional: Upload the created recipe and/or package to the conan-server by running conan upload -r SERVER 'mdbx/*';

  - Consume the libmdbx-package from the local conan-cache or from the conan-server in accordance with the Conan tutorial.

- Adding the complete source code as a git submodule from the origin git repository on GitFlic.

This allows you to build both libmdbx and the testing tool. On the other hand, this way requires you to pull git tags, and to use a C++11 compiler for the test tool.

Please, avoid using any other techniques. Otherwise, at least don't ask for support and don't name such chimeras libmdbx.

Building and Testing

Both the amalgamated and the original source code can be built with CMake or with GNU Make and bash.

All build methods are completely traditional and have minimal prerequisites like build-essential, i.e. a non-obsolete C/C++ compiler and an SDK for the target platform. Obviously you need the build tools themselves, i.e. git, cmake or GNU make with bash. For your convenience, make help and make options are also available for listing existing targets and build options respectively.

The only significant specificity is that git tags are required to build from the complete (not amalgamated) source code. Executing git fetch --tags --force --prune is enough to get them, and --unshallow or --update-shallow is required for a shallow clone.

So just use CMake or GNU Make in your habitual manner, and feel free to file an issue or make a pull request in case something is unexpected or broken.

Testing

The amalgamated source code does not contain any tests, for several reasons. Please read the explanation and don't ask to alter this. So for testing libmdbx itself you need the full source code, i.e. a clone of the git repository; there is no other option.

The full source code of libmdbx has a test subdirectory with a minimalistic test "framework". Actually it is the source code of mdbx_test, a console utility with a set of command-line options that allow constructing and running reasonable enough test scenarios. This test utility is intended for libmdbx's developers for testing the library itself, but not for use by users. Therefore, only basic information is provided:

- There are a few CRUD-based test cases (hill, TTL, nested, append, jitter, etc), which can be combined to test concurrent operations within a shared database in a multi-process environment. This is the basic test scenario.

- The Makefile provides several self-described targets for testing: smoke, test, check, memcheck, test-valgrind, test-asan, test-leak, test-ubsan, cross-gcc, cross-qemu, gcc-analyzer, smoke-fault, smoke-singleprocess, test-singleprocess, long-test. Please run make --help if in doubt.

- In addition to the mdbx_test utility, there is the script stochastic.sh, which calls mdbx_test by going through a set of modes and options, gradually increasing the number of operations and the size of transactions. This script is used for most of the automatic testing, including Makefile targets and Continuous Integration.

- Brief information on available command-line options is available via --help. However, you should dive into the source code to get all the details; there is no other option.

Anyway, no matter how thoroughly libmdbx is tested, you should rely only on your own tests for a few reasons:

- Most use cases are unique. So there is no warranty that your use case was properly tested, even though libmdbx's tests use a stochastic approach.

- If there are problems, then your test on the one hand will help to verify whether you are using libmdbx correctly, and on the other hand it will allow you to reproduce the problem and insure against regression in the future.

- In the end, you should rely on what you have checked yourself, or take a risk.

Common important details

Build reproducibility

By default libmdbx tracks the build time via the MDBX_BUILD_TIMESTAMP build option and macro.

So for reproducible builds you should predefine/override it to a known fixed string value.

For instance:

- for a reproducible build with make: make MDBX_BUILD_TIMESTAMP=unknown ...

- or during configure by CMake: cmake -DMDBX_BUILD_TIMESTAMP:STRING=unknown ...

Of course, in addition to this, your toolchain must ensure the reproducibility of builds. For more information please refer to reproducible-builds.org.
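To see why a build-time stamp defeats reproducibility, here is a minimal sketch. It assumes the stamp falls back to the compiler's __DATE__ and __TIME__ when nothing is predefined; this is an illustration only and not necessarily how libmdbx derives its default. DEMO_BUILD_TIMESTAMP and demo_build_timestamp() are hypothetical names.

```c
#include <assert.h>

/* DEMO_BUILD_TIMESTAMP is a hypothetical stand-in for a build option such as
 * MDBX_BUILD_TIMESTAMP. When it is not predefined, the fallback embeds the
 * compile date and time, so two builds of identical sources differ. */
#ifndef DEMO_BUILD_TIMESTAMP
#define DEMO_BUILD_TIMESTAMP __DATE__ " " __TIME__
#endif

/* Returns the string that ends up inside the binary. */
static const char *demo_build_timestamp(void) {
  static const char stamp[] = DEMO_BUILD_TIMESTAMP;
  assert(stamp[0] != '\0'); /* the stamp is never empty */
  return stamp;
}
```

Compiling this with -DDEMO_BUILD_TIMESTAMP='"unknown"' pins the embedded string, which is the same idea as overriding MDBX_BUILD_TIMESTAMP above.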

Containers

There are no special traits or quirks if you use libmdbx ONLY inside a single container. But in cross-container or host-container interoperability cases, three major things MUST be guaranteed:

- Coherence of memory mapping content and a unified page cache inside the OS kernel for the host and all container(s) operating on a DB. Basically this means there must be only a single physical copy of each memory-mapped DB page in system memory.

- Uniqueness of PID values and/or a common space for them:

  - for POSIX systems: PID uniqueness for all processes operating on a DB. I.e. --pid=host is required to run DB-aware processes inside Docker, or, without host interaction, --pid=container:<name|id> with the same name/id.

  - for non-POSIX (i.e. Windows) systems: inter-visibility of process handles. I.e. OpenProcess(SYNCHRONIZE, ..., PID) must return a reasonable error, including ERROR_ACCESS_DENIED, but not ERROR_INVALID_PARAMETER as for an invalid/non-existent PID.

- The versions/builds of libmdbx and libc/pthreads (glibc, musl, etc) must be compatible.

  - Basically, the options: string in the output of mdbx_chk -V must be the same for host and container(s). See MDBX_LOCKING, MDBX_USE_OFDLOCKS and other build options for details.

  - Avoid using different versions of libc, especially mixing different implementations, i.e. glibc with musl, etc. Prefer to use the same LTS version, or switch to full virtualization/isolation if in doubt.
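The PID requirement is easier to see with a small POSIX sketch of a liveness probe. It is not libmdbx's own code: pid_is_visible() and current_process_is_visible() are hypothetical helpers that only show how a process in a separate PID namespace would look like a non-existent one.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <errno.h>
#include <signal.h>
#include <stdbool.h>
#include <sys/types.h>
#include <unistd.h>

/* Hypothetical helper, not part of the libmdbx API.
 * Returns true if a process with this PID is visible from the caller's PID
 * namespace, false if no such process can be found there. */
static bool pid_is_visible(pid_t pid) {
  assert(pid > 0); /* zero and negative values address process groups */
  if (kill(pid, 0) == 0)
    return true; /* signal 0 only performs the existence/permission check */
  if (errno == EPERM)
    return true; /* the process exists but belongs to another user */
  return false;  /* ESRCH: nothing with this PID in our namespace */
}

/* The calling process must always be able to see itself. */
static bool current_process_is_visible(void) {
  return pid_is_visible(getpid());
}
```

With isolated PID namespaces, a probe like this run in one container can report a live process from another container as missing, or match an unrelated process that happens to have the same number, which is why a shared PID space is required.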

DSO/DLL unloading and destructors of Thread-Local-Storage objects

When building libmdbx as a shared library, or using a static libmdbx as part of another dynamic library, it is advisable to make sure that your system correctly calls the destructors of Thread-Local-Storage objects when unloading dynamic libraries.

If this is not the case, then unloading a dynamic-link library with libmdbx code inside can result in either a resource leak or a crash due to calling destructors from an already unloaded DSO/DLL object. The problem can only manifest in a multithreaded application that unloads shared dynamic libraries with libmdbx code inside after using libmdbx. It is known that TLS-destructors are properly maintained in the following cases:

- On all modern versions of Windows (Windows 7 and later).

- On systems with the __cxa_thread_atexit_impl() function in the standard C library, including systems with GNU libc version 2.18 and later.

- On systems with libpthread/nptl from GNU libc with bug fixes #21031 and #21032, or where there are no similar bugs in the pthreads implementation.
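For readers unfamiliar with the mechanism, here is a minimal pthreads sketch of a TLS destructor. It is a generic illustration and not libmdbx's implementation; demo_key, demo_destructor() and run_tls_demo() are hypothetical names, and no library is actually unloaded here.

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>

static pthread_key_t demo_key;
static int destructor_calls;

/* Called by the threading runtime when a thread that set a non-NULL value
 * for demo_key exits. The runtime keeps only a pointer to this function. */
static void demo_destructor(void *value) {
  assert(value != NULL);
  destructor_calls++;
}

static void *demo_thread(void *arg) {
  static int slot;
  (void)arg;
  pthread_setspecific(demo_key, &slot); /* attach per-thread data */
  return NULL;                          /* thread exit triggers the destructor */
}

/* Returns how many times the destructor ran, or -1 on a setup error. */
static int run_tls_demo(void) {
  pthread_t thread;
  destructor_calls = 0;
  if (pthread_key_create(&demo_key, demo_destructor) != 0)
    return -1;
  if (pthread_create(&thread, NULL, demo_thread, NULL) != 0) {
    pthread_key_delete(demo_key);
    return -1;
  }
  pthread_join(thread, NULL); /* join orders the counter update before the read */
  pthread_key_delete(demo_key);
  return destructor_calls;
}
```

If demo_destructor() lived in a shared library that was unloaded before the thread exited, the runtime would be left holding a pointer into unmapped code, which is the crash scenario described above.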

Linux and other platforms with GNU Make

To build the library it is enough to execute make all in the directory of source code, and make check to execute the basic tests.

If the make installed on the system is not GNU Make, there will be a lot of errors from make when trying to build. In this case, perhaps you should use gmake instead of make, or even gnu-make, etc.

FreeBSD and related platforms

As a rule, on BSD and its derivatives the default is to use Berkeley Make, and Bash is not installed.

So you need to install the required components: GNU Make, Bash, C and C++ compilers compatible with GCC or CLANG. After that, to build the library, it is enough to execute gmake all (or make all) in the directory with source code, and gmake check (or make check) to run the basic tests.

Windows

To build libmdbx on Windows, the original CMake and Microsoft Visual Studio 2019 are recommended. Please use recent versions of CMake, Visual Studio and the Windows SDK to avoid trouble with C11 support and the alignas() feature.

To build with MinGW, version 10.2 or later coupled with a modern CMake is required. So it is recommended to use chocolatey to install and/or update them.

Other ways to build are potentially possible, but they are not supported and will not be. The CMakeLists.txt or GNUMakefile scripts will probably need to be modified accordingly. When using other methods, do not forget to add ntdll.lib to the linking.

It should be noted that libmdbx makes an effort to avoid runtime dependencies on the CRT and other MSVC libraries. For this it is enough to pass the -DMDBX_WITHOUT_MSVC_CRT:BOOL=ON option during configure by CMake.

To run the long stochastic test scenario, bash is required, and it is recommended to place the test data on a RAM disk for such testing.

Windows Subsystem for Linux

libmdbx can be used in a WSL2 environment but NOT in WSL1. This is a consequence of the fundamental shortcomings of WSL1 and cannot be fixed.

To avoid data loss, libmdbx returns the ENOLCK (37, "No record locks available") error when opening the database in a WSL1 environment.
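An application may want to turn this error into a clearer message for its users. The sketch below assumes the application already holds the integer code returned by the failed open; explain_open_error() and demo_explain_open_error() are hypothetical helpers, not libmdbx functions, and the hint text is only a suggestion.

```c
#include <assert.h>
#include <errno.h>
#include <string.h>

/* Hypothetical helper: `rc` stands for the error code returned when opening
 * the database. Only the ENOLCK case comes from the text above; everything
 * else falls back to the system description. */
static const char *explain_open_error(int rc) {
  if (rc == 0)
    return "success";
  if (rc == ENOLCK)
    return "ENOLCK: no record locks available; this is what a WSL1 "
           "environment produces, consider switching to WSL2";
  return strerror(rc);
}

/* Small self-check showing the intended use. */
static void demo_explain_open_error(void) {
  assert(strcmp(explain_open_error(0), "success") == 0);
  assert(strstr(explain_open_error(ENOLCK), "WSL1") != NULL);
}
```

Note that ENOLCK can also come from other locking failures, so the message is worded as a hint and not as a diagnosis.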

MacOS

Current native build tools for MacOS include GNU Make, CLANG and an outdated version of Bash. However, the build script uses the GNU flavor of sed and tar. So the easiest way to install all prerequisites is to use Homebrew, just by brew install bash make cmake ninja gnu-sed gnu-tar --with-default-names.

Next, to build the library, it is enough to run make all in the directory with source code, and run make check to execute the basic tests. If something goes wrong, it is recommended to install Homebrew and try again.

To run the long stochastic test scenario, you will need to install the current (not outdated) version of Bash. Just install it as noted above.

Android

I recommend using CMake to build libmdbx for Android. Please refer to the official guide.

iOS

To build libmdbx for iOS, I recommend using CMake with the "toolchain file" from the ios-cmake project.

API description

Please refer to the online libmdbx API reference and/or see the mdbx.h++ and mdbx.h headers.

Bindings

| Runtime | Repo | Author |
|---|---|---|
| Rust | libmdbx-rs | Artem Vorotnikov |
| Python | PyPi/libmdbx | Lazymio |
| Java | mdbxjni | Castor Technologies |
| Go | mdbx-go | Alex Sharov |
| Ruby | ruby-mdbx | Mahlon E. Smith |
| Zig | mdbx-zig | Sayan J. Das |

Obsolete/Outdated/Unsupported:

| Runtime | Repo | Author |
|---|---|---|
| .NET | mdbx.NET | Jerry Wang |
| Scala | mdbx4s | David Bouyssié |
| Rust | mdbx | gcxfd |
| Haskell | libmdbx-hs | Francisco Vallarino |
| Lua | lua-libmdbx | Masatoshi Fukunaga |
| NodeJS, Deno | lmdbx-js | Kris Zyp |
| NodeJS | node-mdbx | Сергей Федоров |
| Nim | NimDBX | Jens Alfke |

Performance comparison

Over the past 10 years, libmdbx has had a lot of significant improvements and innovations. libmdbx has become slightly faster in simple cases and many times faster in complex scenarios, especially with huge transactions in gigantic databases. Therefore, on the one hand, the results below are outdated. On the other hand, these simple benchmarks are clear, easy to reproduce, and close to the most common use cases.

All of the following benchmark results were obtained in 2015 with IOArena and multiple script runs on my laptop (i7-4600U 2.1 GHz, SSD MZNTD512HAGL-000L1).

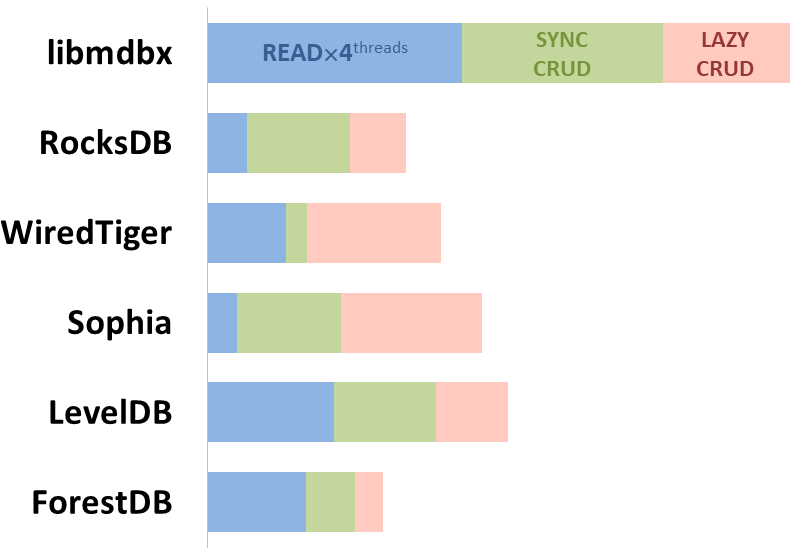

Integral performance

Shown here is the sum of performance metrics in 3 benchmarks:

- Read/Search on the machine with 4 logical CPUs in HyperThreading mode (i.e. actually 2 physical CPU cores);

- Transactions with CRUD operations in sync-write mode (fdatasync is called after each transaction);

- Transactions with CRUD operations in lazy-write mode (the moment to sync data to persistent storage is decided by the OS).

Reasons why asynchronous mode isn't benchmarked here:

- It doesn't make sense, as it would have to be done with DB engines oriented toward keeping data in memory (e.g. Tarantool, Redis, etc).

- The performance gap is too high to compare in any meaningful way.

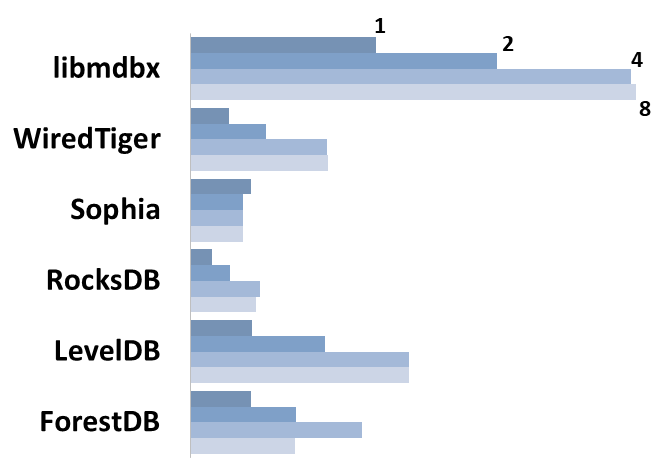

Read Scalability

Summary performance with concurrent read/search queries in 1-2-4-8 threads on the machine with 4 logical CPUs in HyperThreading mode (i.e. actually 2 physical CPU cores).

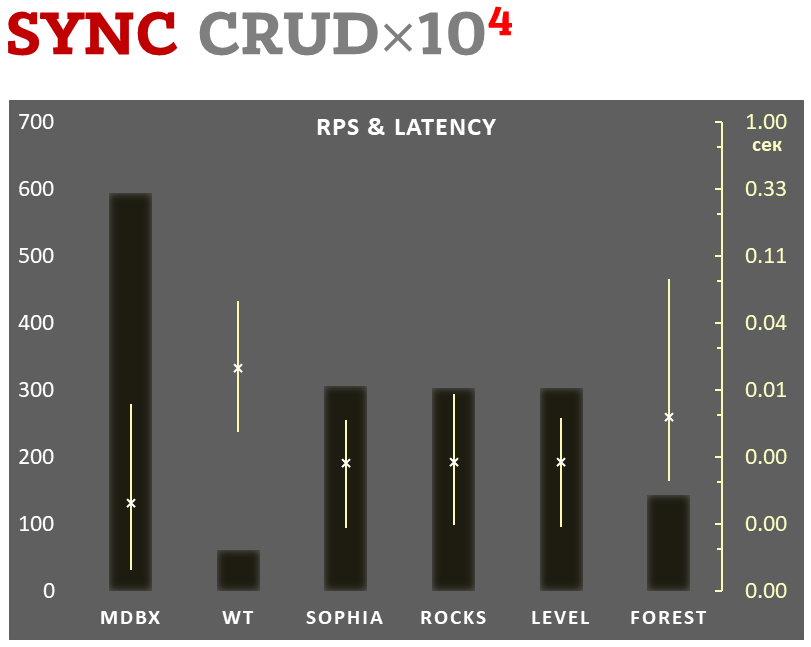

Sync-write mode

-

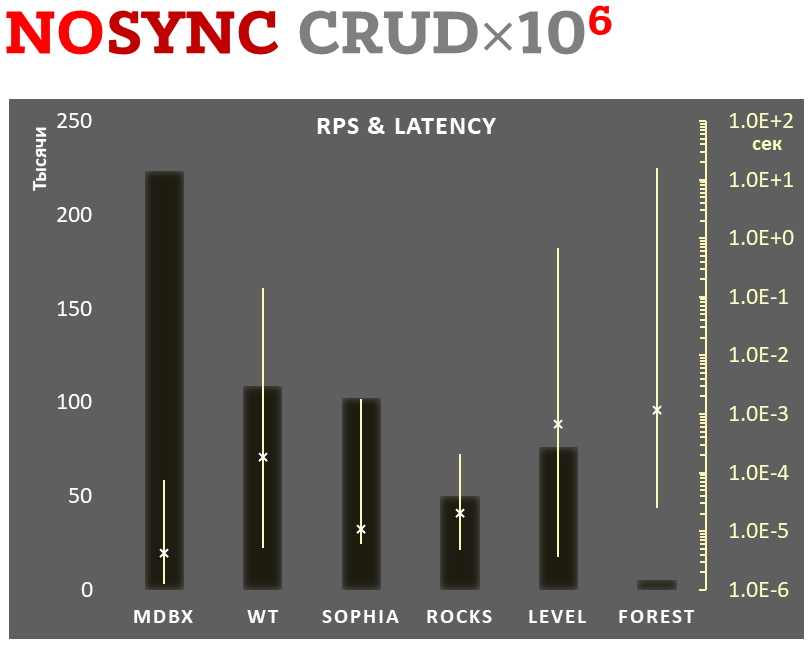

Linear scale on left and dark rectangles mean arithmetic mean transactions per second;

-

Logarithmic scale on right is in seconds and yellow intervals mean execution time of transactions. Each interval shows minimal and maximum execution time, cross marks standard deviation.

10,000 transactions in sync-write mode. In case of a crash all data is consistent and conforms to the last successful transaction. The fdatasync syscall is used after each write transaction in this mode.

In the benchmark each transaction contains combined CRUD operations (2 inserts, 1 read, 1 update, 1 delete). Benchmark starts on an empty database and after full run the database contains 10,000 small key-value records.
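The cost of this mode comes from forcing data to storage after every commit. The following POSIX sketch shows that pattern on a plain file. It is not how libmdbx or the benchmark is implemented; write_durably() and demo_sync_write() are hypothetical helpers, and the temporary file path is an assumption.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <errno.h>
#include <stddef.h>
#include <stdlib.h>
#include <sys/types.h>
#include <unistd.h>

/* Write the whole buffer, then block until the data has reached storage.
 * Returns 0 on success, -1 on error. */
static int write_durably(int fd, const void *buf, size_t len) {
  const char *cursor = buf;
  assert(buf != NULL);
  while (len > 0) {
    ssize_t written = write(fd, cursor, len);
    if (written < 0) {
      if (errno == EINTR)
        continue; /* interrupted by a signal, retry */
      return -1;
    }
    cursor += written;
    len -= (size_t)written;
  }
  return fdatasync(fd); /* the per-transaction sync that dominates latency */
}

/* Runs one "transaction" against a temporary file. Returns 0 on success. */
static int demo_sync_write(void) {
  char path[] = "/tmp/sync_write_demo_XXXXXX";
  int fd = mkstemp(path);
  if (fd < 0)
    return -1;
  int rc = write_durably(fd, "record", 6);
  close(fd);
  unlink(path);
  return rc;
}
```

Each call returns only after the device acknowledges the data, so throughput in this mode is bounded by storage sync latency and not by CPU.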

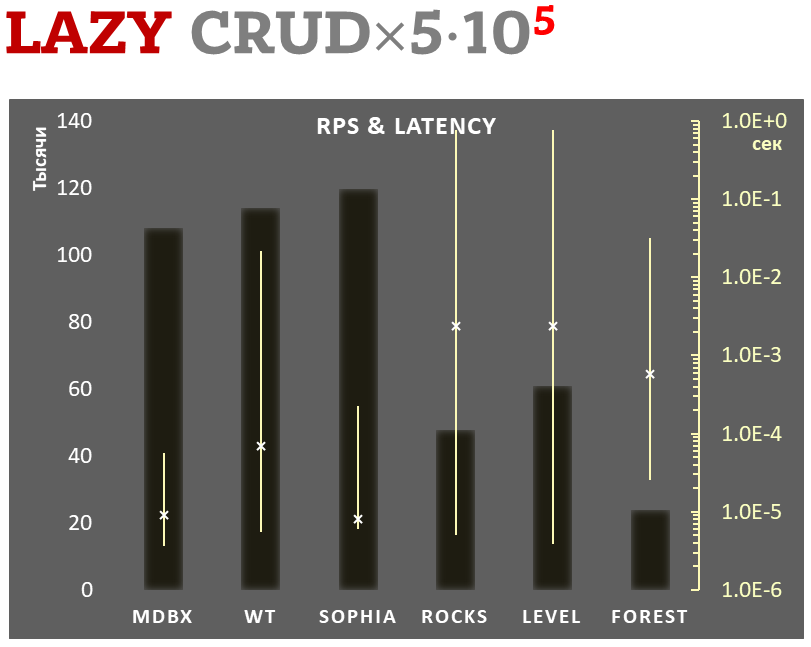

Lazy-write mode

- The linear scale on the left and the dark rectangles show the arithmetic mean of thousands of transactions per second;

- The logarithmic scale on the right is in seconds, and the yellow intervals show the execution time of transactions. Each interval shows the minimum and maximum execution time; the cross marks the standard deviation.

100,000 transactions in lazy-write mode. In case of a crash all data is consistent and conforms to one of the last successful transactions, but the transactions after it will be lost. Other DB engines use a WAL or transaction journal for that, which in turn depends on the order of operations in the journaled filesystem. libmdbx doesn't use a WAL and hands I/O operations to the filesystem and OS kernel (mmap).

In the benchmark each transaction contains combined CRUD operations (2 inserts, 1 read, 1 update, 1 delete). Benchmark starts on an empty database and after full run the database contains 100,000 small key-value records.

Async-write mode

- The linear scale on the left and the dark rectangles show the arithmetic mean of thousands of transactions per second;

- The logarithmic scale on the right is in seconds, and the yellow intervals show the execution time of transactions. Each interval shows the minimum and maximum execution time; the cross marks the standard deviation.

1,000,000 transactions in async-write mode. In case of a crash all data is consistent and conforms to one of the last successful transactions, but the number of lost transactions is much higher than in lazy-write mode. All DB engines in this mode do as few writes as possible to persistent storage. libmdbx uses msync(MS_ASYNC) in this mode.

In the benchmark each transaction contains combined CRUD operations (2 inserts, 1 read, 1 update, 1 delete). Benchmark starts on an empty database and after full run the database contains 1,000,000 small key-value records.
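For reference, this is what an msync(MS_ASYNC) call looks like on a shared file mapping. The sketch is a generic POSIX illustration and not libmdbx's code; demo_async_flush(), the 4096-byte size and the temporary file path are assumptions.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <stdlib.h>
#include <string.h>
#include <sys/mman.h>
#include <sys/types.h>
#include <unistd.h>

/* Maps a temporary file, modifies it through memory and schedules
 * write-back without waiting for it. Returns 0 on success, -1 on error. */
static int demo_async_flush(void) {
  char path[] = "/tmp/msync_demo_XXXXXX";
  const size_t size = 4096; /* assumed size, one typical page */
  int rc = -1;
  int fd = mkstemp(path);
  if (fd < 0)
    return -1;
  if (ftruncate(fd, (off_t)size) == 0) {
    char *map = mmap(NULL, size, PROT_READ | PROT_WRITE, MAP_SHARED, fd, 0);
    if (map != MAP_FAILED) {
      memcpy(map, "record", 6);        /* the "write" is a plain memory store */
      rc = msync(map, size, MS_ASYNC); /* schedule the flush, do not block */
      assert(rc == 0 || rc == -1);
      munmap(map, size);
    }
  }
  close(fd);
  unlink(path);
  return rc;
}
```

Because MS_ASYNC returns before the data is on storage, a crash can lose many recent transactions, which matches the trade-off described above.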

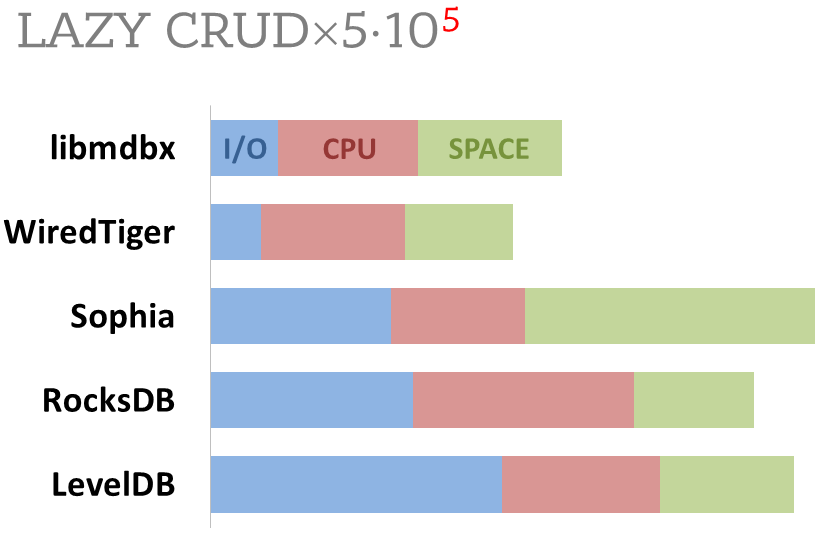

Cost comparison

Summary of used resources during lazy-write mode benchmarks:

- Read and write IOPs;

- Sum of user CPU time and sys CPU time;

- Used space on persistent storage after the test and closed DB, but not waiting for the end of all internal housekeeping operations (LSM compaction, etc).

ForestDB is excluded because the benchmark showed that its consumption of each resource (CPU, IOPs) is much higher than that of the other engines, which prevents a meaningful comparison with them.

All benchmark data is gathered by the getrusage() syscall and by scanning the data directory.
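The CPU-time metric can be reproduced with a few lines of C. This is a minimal sketch of reading user plus sys CPU time through getrusage(), not the benchmark's own code; cpu_seconds(), burn_and_measure() and the loop count are hypothetical.

```c
#include <assert.h>
#include <sys/resource.h>
#include <sys/time.h>

/* Converts a struct timeval to seconds as a double. */
static double timeval_seconds(struct timeval t) {
  return (double)t.tv_sec + (double)t.tv_usec / 1e6;
}

/* Sum of user and sys CPU time consumed so far by this process. */
static double cpu_seconds(void) {
  struct rusage usage;
  int rc = getrusage(RUSAGE_SELF, &usage);
  assert(rc == 0);
  (void)rc; /* keeps the build warning-free when NDEBUG is defined */
  return timeval_seconds(usage.ru_utime) + timeval_seconds(usage.ru_stime);
}

/* Measures the CPU time spent by a busy loop standing in for a workload. */
static double burn_and_measure(void) {
  double before = cpu_seconds();
  volatile unsigned long sink = 0;
  for (unsigned long i = 0; i < 20000000UL; i++)
    sink += i;
  return cpu_seconds() - before;
}
```

The same struct rusage also carries block I/O counters (ru_inblock, ru_oublock) on many systems, though whether the benchmark used them for its IOPs figures is not stated here.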
