Top Related Projects

- Cartographer: a system that provides real-time simultaneous localization and mapping (SLAM) in 2D and 3D across multiple platforms and sensor configurations.

- ORB_SLAM2: real-time SLAM for monocular, stereo and RGB-D cameras, with loop detection and relocalization capabilities.

- GTSAM: a library of C++ classes that implement smoothing and mapping (SAM) in robotics and vision, using factor graphs and Bayes networks as the underlying computing paradigm rather than sparse matrices.

- Kimera-VIO: visual-inertial odometry with SLAM capabilities and 3D mesh generation.

- rpg_svo: semi-direct visual odometry.

Quick Overview

Maplab is an open-source research platform for visual-inertial mapping, developed by the Autonomous Systems Lab at ETH Zurich. It provides a collection of tools for processing and manipulating visual-inertial data, with a focus on creating and refining multi-session maps for robotic applications.

Pros

- Comprehensive suite of tools for visual-inertial mapping and localization

- Supports multi-session mapping and map merging

- Highly modular architecture allowing for easy extension and customization

- Active development and maintenance by a reputable research institution

Cons

- Steep learning curve due to the complexity of the system

- Limited documentation for some advanced features

- Requires specific sensor setups and data formats, which may not be compatible with all robotic platforms

- Performance can be computationally intensive for large-scale maps

Code Examples

- Initializing a map:

      vi_map::VIMap map;
      map.setMapHeader(map_header);
      map.addNewMissionWithBaseframe(mission_id, T_G_M, base_frame_id);

- Adding a vertex to the map:

      vi_map::Vertex::UniquePtr vertex_ptr(new vi_map::Vertex(vertex_id));
      vertex_ptr->setMissionId(mission_id);
      vertex_ptr->set_p_M_I(position);
      vertex_ptr->set_q_M_I(orientation);
      map.addVertex(std::move(vertex_ptr));

- Performing loop closure:

      loop_closure::LoopDetector loop_detector;
      loop_closure::LoopClosureConstraint constraint;
      bool success = loop_detector.detectLoopClosures(map, &constraint);
      if (success) {
        map.addLoopClosureEdge(constraint);
      }
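The snippets above use frame-suffixed names such as `T_G_M`, `p_M_I` and `q_M_I`. Reading the suffixes as frames (G for global, M for mission, I for IMU) is an assumption based on the variable names. Under that assumption, a vertex position stored in a mission frame can be expressed in the global frame by applying `T_G_M`. The sketch below illustrates this with hypothetical minimal types (`Vec3`, `Quat`, `Transform`); none of these are maplab types.

```cpp
#include <cmath>

// Hypothetical minimal types for illustration only; they are not maplab's types.
struct Vec3 {
  double x, y, z;
};

struct Quat {  // unit quaternion (w, x, y, z)
  double w, x, y, z;
};

// T_A_B maps a point expressed in frame B into frame A.
struct Transform {
  Quat q_A_B;  // rotation from B to A
  Vec3 p_A_B;  // origin of B expressed in A
};

// Rotates v by the unit quaternion q using v' = v + w*t + u x t, with t = 2 (u x v).
Vec3 rotate(const Quat& q, const Vec3& v) {
  const Vec3 t{2.0 * (q.y * v.z - q.z * v.y),
               2.0 * (q.z * v.x - q.x * v.z),
               2.0 * (q.x * v.y - q.y * v.x)};
  return {v.x + q.w * t.x + (q.y * t.z - q.z * t.y),
          v.y + q.w * t.y + (q.z * t.x - q.x * t.z),
          v.z + q.w * t.z + (q.x * t.y - q.y * t.x)};
}

// p_A = R_A_B * p_B + p_A_B
Vec3 transformPoint(const Transform& T_A_B, const Vec3& p_B) {
  const Vec3 r = rotate(T_A_B.q_A_B, p_B);
  return {r.x + T_A_B.p_A_B.x, r.y + T_A_B.p_A_B.y, r.z + T_A_B.p_A_B.z};
}

// Example mission frame: rotated 90 degrees about z and shifted 10 m along x
// in the global frame.
Transform exampleT_G_M() {
  const double half = std::sqrt(0.5);  // cos(45 deg) = sin(45 deg)
  return {{half, 0.0, 0.0, half}, {10.0, 0.0, 0.0}};
}
```

With `exampleT_G_M()`, a point at (1, 0, 0) in the mission frame maps to (10, 1, 0) in the global frame.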

Getting Started

- Clone the repository:

      git clone https://github.com/ethz-asl/maplab.git

- Install dependencies:

      cd maplab
      ./dependencies/internal/install_dependencies.sh

- Build the project:

      catkin build maplab

- Run the maplab console:

      rosrun maplab_console maplab_console

Competitor Comparisons

Cartographer is a system that provides real-time simultaneous localization and mapping (SLAM) in 2D and 3D across multiple platforms and sensor configurations.

Pros of Cartographer

- More active development with frequent updates and contributions

- Better documentation and examples for easier integration

- Supports real-time SLAM for both 2D and 3D environments

Cons of Cartographer

- Higher computational requirements, especially for large-scale mapping

- Centered on lidar-based SLAM, with no visual-inertial pipeline comparable to maplab's

- Steeper learning curve for customization and advanced usage

Code Comparison

maplab (C++):

    vi_map::VIMap map;
    map_builder::MapBuilder map_builder;
    map_builder.buildMapFromMissions(missions, &map);

Cartographer (C++):

    cartographer::mapping::MapBuilder map_builder(options);
    map_builder.AddTrajectoryBuilder(options, trajectory_id);
    map_builder.FinishTrajectory(trajectory_id);

Both projects are written in C++ and expose a map-builder style API. maplab focuses on visual-inertial mapping, while Cartographer targets real-time 2D and 3D SLAM across multiple platforms and sensor configurations. In these snippets, Cartographer's builder is configured through an options object and operates on trajectories, whereas maplab assembles a single VIMap from a set of missions.

ORB_SLAM2: Real-Time SLAM for Monocular, Stereo and RGB-D Cameras, with Loop Detection and Relocalization Capabilities

Pros of ORB_SLAM2

- Lightweight and efficient implementation, suitable for real-time applications

- Well-documented and easy to understand for beginners

- Supports monocular, stereo, and RGB-D cameras

Cons of ORB_SLAM2

- Limited to visual SLAM, lacking multi-sensor fusion capabilities

- Loop closing and relocalization are included, but there is no multi-session map merging comparable to maplab's

- Less scalable for large-scale mapping scenarios

Code Comparison

ORB_SLAM2 (feature extraction):

    void Frame::ExtractORB(int flag, const cv::Mat &im)
    {
        if(flag==0)
            (*mpORBextractorLeft)(im,cv::Mat(),mvKeys,mDescriptors);
        else
            (*mpORBextractorRight)(im,cv::Mat(),mvKeysRight,mDescriptorsRight);
    }

maplab (feature extraction):

    void VisualNFrameDetector::detectAndDescribeFeatures(
        const cv::Mat& image, const cv::Mat& mask,
        aslam::VisualFrame* frame) const {
      detector_->detectAndComputeDescriptors(image, mask, &frame->getKeypointsMutable(),
                                             &frame->getDescriptorsMutable());
    }

Both repositories implement feature extraction, but maplab's approach is more modular and flexible, allowing for easier integration of different feature detectors and descriptors.
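One common way to get this kind of modularity is to hide the detector behind an abstract interface and inject it into the frontend. The following is a minimal sketch of that pattern, not code from either repository: `Image`, `Keypoint`, `FeatureDetector`, `BrightPixelDetector`, `LocalMaximumDetector` and `Frontend` are all hypothetical names, and the two toy detectors stand in for real ones such as ORB.

```cpp
#include <cstddef>
#include <cstdint>
#include <memory>
#include <utility>
#include <vector>

// Hypothetical types for illustration; neither maplab nor ORB_SLAM2 defines them.
struct Image {
  int width;
  int height;
  std::vector<std::uint8_t> pixels;  // row-major grayscale
};

struct Keypoint {
  int x;
  int y;
};

// The frontend depends only on this interface, so detectors can be swapped.
class FeatureDetector {
 public:
  virtual ~FeatureDetector() = default;
  virtual std::vector<Keypoint> detect(const Image& image) const = 0;
};

// Toy detector: every pixel brighter than a threshold is a keypoint.
class BrightPixelDetector : public FeatureDetector {
 public:
  explicit BrightPixelDetector(std::uint8_t threshold) : threshold_(threshold) {}

  std::vector<Keypoint> detect(const Image& image) const override {
    std::vector<Keypoint> keypoints;
    for (int y = 0; y < image.height; ++y) {
      for (int x = 0; x < image.width; ++x) {
        if (image.pixels[y * image.width + x] > threshold_) keypoints.push_back({x, y});
      }
    }
    return keypoints;
  }

 private:
  std::uint8_t threshold_;
};

// Toy detector: interior pixels strictly brighter than their four neighbours.
class LocalMaximumDetector : public FeatureDetector {
 public:
  std::vector<Keypoint> detect(const Image& image) const override {
    std::vector<Keypoint> keypoints;
    const int w = image.width;
    for (int y = 1; y + 1 < image.height; ++y) {
      for (int x = 1; x + 1 < w; ++x) {
        const std::uint8_t c = image.pixels[y * w + x];
        if (c > image.pixels[y * w + x - 1] && c > image.pixels[y * w + x + 1] &&
            c > image.pixels[(y - 1) * w + x] && c > image.pixels[(y + 1) * w + x]) {
          keypoints.push_back({x, y});
        }
      }
    }
    return keypoints;
  }
};

// The frontend owns whichever detector it is given.
class Frontend {
 public:
  explicit Frontend(std::unique_ptr<FeatureDetector> detector)
      : detector_(std::move(detector)) {}

  std::size_t countFeatures(const Image& image) const {
    return detector_->detect(image).size();
  }

 private:
  std::unique_ptr<FeatureDetector> detector_;
};
```

Switching detectors then only changes the construction site, for example `Frontend(std::make_unique<LocalMaximumDetector>())` instead of `Frontend(std::make_unique<BrightPixelDetector>(100))`.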

GTSAM is a library of C++ classes that implement smoothing and mapping (SAM) in robotics and vision, using factor graphs and Bayes networks as the underlying computing paradigm rather than sparse matrices.

Pros of GTSAM

- More versatile, supporting a wider range of applications beyond mapping

- Better documentation and extensive tutorials

- Actively maintained with frequent updates and contributions

Cons of GTSAM

- Steeper learning curve due to its broader scope

- May be overkill for projects focused solely on mapping

Code Comparison

GTSAM example (factor graph creation):

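    #include <gtsam/geometry/Pose3.h>
    #include <gtsam/inference/Symbol.h>
    #include <gtsam/nonlinear/NonlinearFactorGraph.h>
    #include <gtsam/nonlinear/Values.h>
    #include <gtsam/slam/PriorFactor.h>  // <gtsam/nonlinear/PriorFactor.h> in newer GTSAM releases

    using namespace gtsam;

    NonlinearFactorGraph graph;
    Values initialEstimate;
    // Prior noise on the first pose: rotation sigmas (rad), then translation sigmas (m).
    auto priorNoise = noiseModel::Diagonal::Sigmas(
        (Vector(6) << 0.1, 0.1, 0.1, 0.3, 0.3, 0.3).finished());
    graph.add(PriorFactor<Pose3>(Symbol('x', 1), Pose3(), priorNoise));
    initialEstimate.insert(Symbol('x', 1), Pose3());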

maplab example (map creation):

    #include <maplab-common/pose_types.h>
    #include <vi-map/vi-map.h>

    vi_map::VIMap map;
    pose_graph::VertexId vertex_id;
    // The first argument identifies the mission, matching the call in the Code Examples section.
    map.addNewMissionWithBaseframe(mission_id, T_G_M, base_frame_id);
    map.addVertex(vertex_id, timestamp, T_M_I, imu_data);

GTSAM offers a more general-purpose approach to factor graphs and optimization, while maplab is specifically tailored for visual-inertial mapping. GTSAM's code is more abstract, allowing for various problem formulations, whereas maplab's code is more directly related to mapping concepts.
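For readers unfamiliar with the factor-graph view, the standard formulation can be written as follows. This is the generic textbook form rather than anything specific to either code base, and the symbols ($X$ for the unknown variables, $\phi_i$ for the factors, $h_i$ for the measurement models, $z_i$ for the measurements, $\Sigma_i$ for their covariances) are notation introduced here. With Gaussian measurement noise, maximizing the product of factors is equivalent to a nonlinear least-squares problem:

```latex
\begin{align}
  p(X \mid Z) &\propto \prod_i \phi_i(X_i),
  \qquad
  \phi_i(X_i) \propto \exp\!\left(-\tfrac{1}{2}\,
      \lVert h_i(X_i) - z_i \rVert^2_{\Sigma_i}\right), \\
  X^\ast &= \arg\max_X \prod_i \phi_i(X_i)
          = \arg\min_X \sum_i \lVert h_i(X_i) - z_i \rVert^2_{\Sigma_i}.
\end{align}
```

In the GTSAM snippet above, the prior factor on `Symbol('x', 1)` contributes one such term, with `priorNoise` playing the role of $\Sigma_i$.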

Kimera-VIO: Visual Inertial Odometry with SLAM capabilities and 3D Mesh generation.

Pros of Kimera-VIO

- Real-time performance with low latency

- Modular architecture allowing easy integration of new components

- Supports both monocular and stereo vision

Cons of Kimera-VIO

- Less extensive mapping capabilities compared to maplab

- Smaller community and fewer available datasets

- Limited to visual-inertial odometry, while maplab offers more diverse functionalities

Code Comparison

Kimera-VIO (C++):

    // Initialize VIO pipeline
    VioBackEnd vio_backend(FLAGS_params_folder + "/BackendParams.yaml");
    VioFrontEnd vio_frontend(FLAGS_params_folder + "/FrontendParams.yaml");

maplab (C++):

    // Initialize VIO pipeline
    vi_map::VIMap vi_map;
    vio::MapInitializer map_initializer;
    map_initializer.initializeMap(sensor_manager, &vi_map);

Both projects use C++ and have similar initialization patterns, but Kimera-VIO's modular approach is evident in its separate frontend and backend components.

rpg_svo: Semi-direct Visual Odometry

Pros of rpg_svo

- Lightweight and efficient, suitable for resource-constrained systems

- Fast execution, capable of real-time performance on embedded platforms

- Simple setup and easy to integrate into existing projects

Cons of rpg_svo

- Limited to monocular visual odometry, lacking multi-sensor fusion capabilities

- No loop closure or global optimization, potentially leading to drift over time

- Less comprehensive feature set compared to maplab's full SLAM framework
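To make the drift point concrete, here is a toy dead-reckoning sketch. It is not how rpg_svo works internally; it only shows how a small, uncorrected per-step heading error compounds into a growing position error when nothing (such as a loop closure) ties the estimate back to earlier poses. `Pose2`, `integrateOdometry` and `driftAfter` are hypothetical names, and the motion model (straight-line motion with a constant heading bias) is an assumption chosen for simplicity.

```cpp
#include <cmath>

// Hypothetical 2D pose for illustration.
struct Pose2 {
  double x;
  double y;
  double heading;  // radians
};

// Dead-reckons `steps` forward motions of `step_length` metres. The true motion
// is a straight line along x; `heading_bias` is an erroneous rotation (radians)
// added to the estimate after every step.
Pose2 integrateOdometry(int steps, double step_length, double heading_bias) {
  Pose2 pose{0.0, 0.0, 0.0};
  for (int i = 0; i < steps; ++i) {
    pose.x += step_length * std::cos(pose.heading);
    pose.y += step_length * std::sin(pose.heading);
    pose.heading += heading_bias;  // pure error: the true heading stays at zero
  }
  return pose;
}

// Distance between the dead-reckoned end point and the true end point
// (steps * step_length, 0).
double driftAfter(int steps, double step_length, double heading_bias) {
  const Pose2 estimate = integrateOdometry(steps, step_length, heading_bias);
  return std::hypot(estimate.x - steps * step_length, estimate.y);
}
```

Comparing `driftAfter(10, 1.0, 0.01)` with `driftAfter(100, 1.0, 0.01)` shows the error growing with trajectory length, which is the behaviour that loop closure and global optimization are meant to bound.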

Code Comparison

rpg_svo:

    FrameHandlerMono::FrameHandlerMono(vk::AbstractCamera* cam) :
      FrameHandlerBase(),
      cam_(cam),
      reprojector_(cam_, map_),
      depth_filter_(NULL)
    {
      initialize();
    }

maplab:

    class VIMapManager {
     public:
      VIMapManager();
      virtual ~VIMapManager() = default;

      void addNewMission(const vi_map::MissionId& mission_id);
      bool hasMission(const vi_map::MissionId& mission_id) const;
    };

The code snippets show that rpg_svo focuses on frame handling and camera-based operations, while maplab deals with mission management and map representation, reflecting their different scopes and functionalities.

README

News

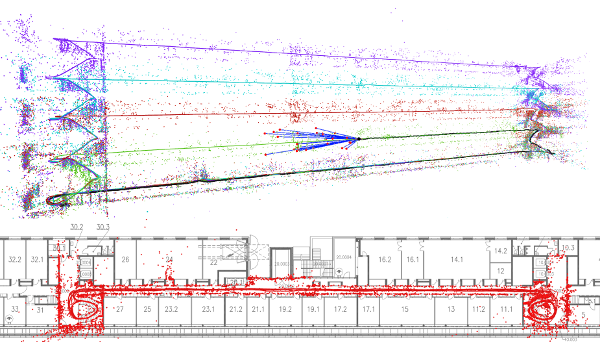

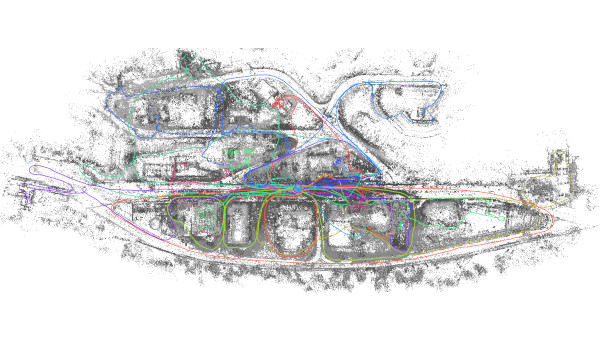

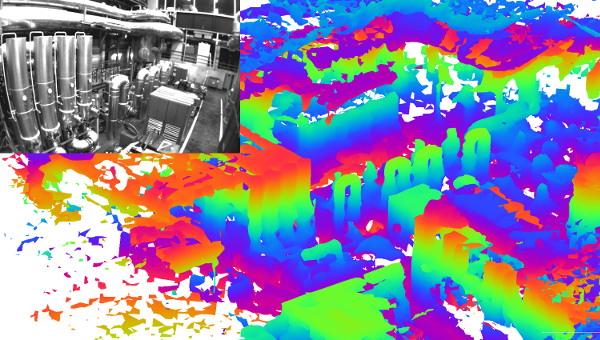

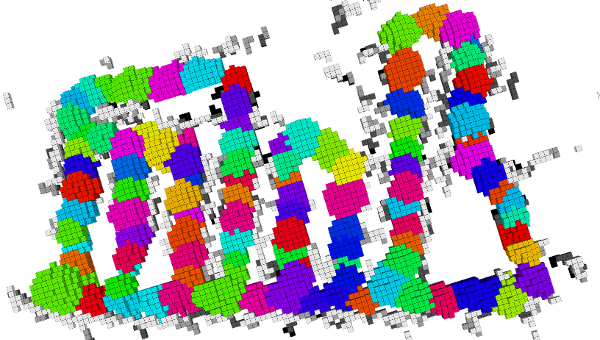

- November 2022: maplab 2.0 initial release with new features and sensors. Paper.

- July 2018: Check out our release candidate with improved localization and lots of new features! Release 1.3.

- May 2018: maplab was presented at ICRA in Brisbane. Paper / Initial Release.

Description

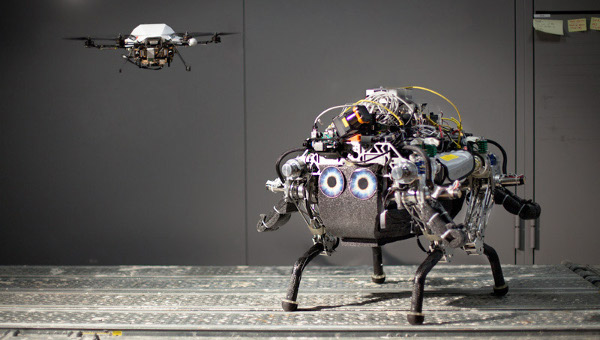

This repository contains maplab 2.0, an open research-oriented mapping framework, written in C++, for multi-session and multi-robot mapping. The source code and documentation of the original 2018 maplab release remain available.

For documentation, tutorials and datasets, please visit the wiki.

Features

- Robust visual-inertial odometry with localization

- Large-scale multisession mapping and optimization

- Multi-robot mapping and online operation

- Dense reconstruction

- A research platform extensively tested on real robots

Installation and getting started

The following articles help you with getting started with maplab and ROVIOLI:

- Installation on Ubuntu 18.04 or 20.04

- Introduction to the maplab framework

- Running ROVIOLI in VIO mode

- Basic console usage

- Console map management

More detailed information can be found in the wiki pages.

Research Results

The maplab framework has been used as an experimental platform for numerous scientific publications. For a complete list of publications please refer to Research based on maplab.

Citing

Please cite the following papers, maplab and maplab 2.0, when using our framework in your research:

    @article{schneider2018maplab,
      title={{maplab: An Open Framework for Research in Visual-inertial Mapping and Localization}},
      author={T. Schneider and M. T. Dymczyk and M. Fehr and K. Egger and S. Lynen and I. Gilitschenski and R. Siegwart},
      journal={IEEE Robotics and Automation Letters},
      volume={3},
      number={3},
      pages={1418--1425},
      year={2018},
      doi={10.1109/LRA.2018.2800113}
    }

    @article{cramariuc2022maplab,
      title={{maplab 2.0 -- A Modular and Multi-Modal Mapping Framework}},
      author={A. Cramariuc and L. Bernreiter and F. Tschopp and M. Fehr and V. Reijgwart and J. Nieto and R. Siegwart and C. Cadena},
      journal={IEEE Robotics and Automation Letters},
      volume={8},
      number={2},
      pages={520--527},
      year={2023},
      doi={10.1109/LRA.2022.3227865}
    }

Additional Citations

Certain components of maplab are directly based on other publications.

Credits

- Thomas Schneider

- Marcin Dymczyk

- Marius Fehr

- Kevin Egger

- Simon Lynen

- Mathias Bürki

- Titus Cieslewski

- Timo Hinzmann

- Mathias Gehrig

- Florian Tschopp

- Andrei Cramariuc

- Lukas Bernreiter

For a complete list of contributors, have a look at CONTRIBUTORS.md
