ParlAI


A framework for training and evaluating AI models on a variety of openly available dialogue datasets.

Top Related Projects

🤗 Transformers: the model-definition framework for state-of-the-art machine learning models in text, vision, audio, and multimodal models, for both inference and training.

DeepSpeed is a deep learning optimization library that makes distributed training and inference easy, efficient, and effective.

fairseq: Facebook AI Research Sequence-to-Sequence Toolkit written in Python.

openai-python: The official Python library for the OpenAI API

BERT: TensorFlow code and pre-trained models for BERT

spaCy: 💫 Industrial-strength Natural Language Processing (NLP) in Python

Quick Overview

ParlAI (pronounced "par-lay") is an open-source platform for training and evaluating AI models on a wide range of conversational tasks. Developed by Facebook AI Research, it provides a unified framework for dialogue research, including a suite of tasks, agents, and evaluation metrics.
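The overview mentions built-in evaluation metrics. As an illustrative sketch only, here is a SQuAD-style unigram F1 (word overlap between a model response and a reference) in plain Python. ParlAI reports a metric named `f1` in a similar spirit, but the normalization and code below are assumptions, not ParlAI's implementation.

```python
import re
import string
from collections import Counter
from typing import List

PUNCTUATION = set(string.punctuation)


def normalize(text: str) -> List[str]:
    """Lowercase, drop punctuation and the articles a/an/the, then split on whitespace."""
    text = "".join(ch for ch in text.lower() if ch not in PUNCTUATION)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return text.split()


def unigram_f1(guess: str, reference: str) -> float:
    """Token-overlap F1 between a model response and a single reference response."""
    guess_tokens, ref_tokens = normalize(guess), normalize(reference)
    common = Counter(guess_tokens) & Counter(ref_tokens)  # multiset intersection
    overlap = sum(common.values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(guess_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


print(round(unigram_f1("I am fine, thanks!", "I'm fine thanks"), 3))  # 0.571
```

Counting overlap with a `Counter` intersection means a word repeated in the guess only earns credit as many times as it appears in the reference.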

Pros

- Extensive collection of dialogue datasets and tasks

- Modular design allowing easy integration of new models and tasks

- Built on PyTorch

- Active community and regular updates

Cons

- Steep learning curve for beginners

- Documentation can be overwhelming due to the large number of features

- Some advanced features may require significant computational resources

- Occasional breaking changes in API between versions

Code Examples

- Creating a simple chatbot:

from parlai.scripts.interactive import Interactive

Interactive.main(model_file='zoo:blender/blender_90M/model')

- Training a model on a specific task:

from parlai.scripts.train_model import TrainModel

TrainModel.main(
    task='convai2',
    model='transformer/generator',
    model_file='/tmp/model_convai2',
    num_epochs=10,
    batchsize=32,
)

- Evaluating a model:

from parlai.scripts.eval_model import EvalModel

EvalModel.main(
    task='wizard_of_wikipedia',
    model_file='zoo:wizard_of_wikipedia/full_dialogue_retrieval_model/model',
    datatype='valid',
)
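The keyword arguments passed to `TrainModel.main` and `EvalModel.main` correspond to flags of the `parlai` command-line tool (for example `model_file` to `--model-file`). The helper below is a hypothetical, simplified sketch of that mapping, not ParlAI's own code; it only illustrates how the Python calls above translate into shell commands.

```python
from typing import Any, List


def kwargs_to_cli(script: str, **kwargs: Any) -> List[str]:
    """Hypothetical helper: turn Python-style options into a `parlai` command line.

    Underscores become dashes (model_file -> --model-file) and every value
    is converted with str().
    """
    args = ["parlai", script]
    for key, value in kwargs.items():
        args.append("--" + key.replace("_", "-"))
        args.append(str(value))
    return args


cmd = kwargs_to_cli(
    "train_model",
    task="convai2",
    model="transformer/generator",
    model_file="/tmp/model_convai2",
    num_epochs=10,
    batchsize=32,
)
print(" ".join(cmd))
# parlai train_model --task convai2 --model transformer/generator --model-file /tmp/model_convai2 --num-epochs 10 --batchsize 32
```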

Getting Started

To get started with ParlAI:

- Install ParlAI:

pip install parlai

- Run a quick interactive session with a pre-trained model:

from parlai.scripts.interactive import Interactive

Interactive.main(model_file='zoo:blender/blender_90M/model')

- For more advanced usage, refer to the official documentation and examples in the GitHub repository.

Competitor Comparisons

🤗 Transformers: the model-definition framework for state-of-the-art machine learning models in text, vision, audio, and multimodal models, for both inference and training.

Pros of Transformers

- Broader scope, covering a wide range of NLP tasks and models

- More extensive documentation and community support

- Faster adoption of new models and techniques

Cons of Transformers

- Steeper learning curve for beginners

- Less focus on dialogue-specific tasks and metrics

- May require more setup and configuration for dialogue systems

Code Comparison

ParlAI example:

from parlai.core.agents import Agent

class MyAgent(Agent):
    def act(self):
        # Always reply with the same greeting, using ParlAI's message-dict format
        return {'text': 'Hello, how are you?'}

Transformers example:

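from transformers import AutoModelForCausalLM, AutoTokenizer

model = AutoModelForCausalLM.from_pretrained("gpt2")
tokenizer = AutoTokenizer.from_pretrained("gpt2")
input_text = "Hello, how are you?"
# Tokenize the prompt, generate a continuation, and decode it back to text
inputs = tokenizer(input_text, return_tensors="pt")
outputs = model.generate(**inputs, max_new_tokens=20)
print(tokenizer.decode(outputs[0], skip_special_tokens=True))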

Both libraries offer powerful tools for NLP tasks, but ParlAI is more specialized for dialogue systems, while Transformers provides a broader range of models and applications. ParlAI's simplicity in dialogue tasks contrasts with Transformers' flexibility across various NLP domains.

DeepSpeed is a deep learning optimization library that makes distributed training and inference easy, efficient, and effective.

Pros of DeepSpeed

- Focuses on optimizing large-scale model training and inference

- Offers advanced distributed training techniques like ZeRO and 3D parallelism

- Provides significant memory and computational efficiency improvements

Cons of DeepSpeed

- Steeper learning curve for implementation compared to ParlAI

- Less focus on dialogue systems and natural language processing tasks

- May require more manual configuration for specific use cases

Code Comparison

DeepSpeed:

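import deepspeed

# args: an argparse namespace extended with deepspeed.add_config_arguments() (e.g. --deepspeed_config)
# model: a torch.nn.Module; params: the parameters to optimize, e.g. model.parameters()
model_engine, optimizer, _, _ = deepspeed.initialize(args=args,
                                                     model=model,
                                                     model_parameters=params)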

ParlAI:

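from parlai.core.params import ParlaiParser
from parlai.core.agents import create_agent
from parlai.core.worlds import create_task

# Build a complete option dict (opt) from command-line style arguments
opt = ParlaiParser(True, True).parse_args(['--task', 'convai2', '--model-file', 'zoo:blender/blender_90M/model'])
agent = create_agent(opt)
world = create_task(opt, agent)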

DeepSpeed is more focused on optimizing model training, while ParlAI provides a higher-level interface for dialogue tasks. DeepSpeed requires more low-level configuration, whereas ParlAI offers pre-built agents and tasks for quicker setup in conversational AI scenarios.

fairseq: Facebook AI Research Sequence-to-Sequence Toolkit written in Python.

Pros of fairseq

- More focused on sequence-to-sequence models and machine translation tasks

- Offers a wider range of pre-trained models and architectures

- Better suited for low-level research and custom model development

Cons of fairseq

- Steeper learning curve for beginners

- Less emphasis on dialogue systems and conversational AI

- Requires more manual setup and configuration for specific tasks

Code Comparison

fairseq:

from fairseq.models.transformer import TransformerModel

en2de = TransformerModel.from_pretrained('/path/to/model', checkpoint_file='model.pt')

en2de.translate('Hello world!')

ParlAI:

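from parlai.core.params import ParlaiParser
from parlai.core.agents import create_agent
from parlai.core.worlds import create_task

opt = ParlaiParser(True, True).parse_args(['--task', 'convai2', '--model-file', 'zoo:blender/blender_90M/model'])
agent = create_agent(opt)
world = create_task(opt, [agent])
world.parley()  # run one exchange between the task's teacher and the agent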

Both repositories offer powerful tools for natural language processing tasks, but they cater to different use cases. fairseq is more suitable for researchers working on sequence-to-sequence models and machine translation, while ParlAI is better for developing and evaluating dialogue systems. The code examples demonstrate the different approaches: fairseq focuses on direct model usage, while ParlAI emphasizes task-oriented interactions.

openai-python: The official Python library for the OpenAI API

Pros of openai-python

- Focused specifically on OpenAI's API, providing streamlined access to their models and services

- Lightweight and easy to integrate into existing Python projects

- Regular updates to support the latest OpenAI API features and models

Cons of openai-python

- Limited to OpenAI's services, lacking the versatility of ParlAI for other AI tasks

- Less comprehensive documentation and community support compared to ParlAI

- Fewer built-in tools for data preprocessing and evaluation

Code Comparison

ParlAI example:

from parlai.core.agents import Agent

class MyAgent(Agent):
    def act(self):
        # Always reply with the same greeting, using ParlAI's message-dict format
        return {'text': 'Hello, how are you?'}

openai-python example:

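from openai import OpenAI

client = OpenAI(api_key='your-api-key')  # or set the OPENAI_API_KEY environment variable
response = client.chat.completions.create(
    model="gpt-4o-mini",  # example model name; use any chat model available to your account
    messages=[{"role": "user", "content": "Hello, how are you?"}],
)
print(response.choices[0].message.content)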

BERT: TensorFlow code and pre-trained models for BERT

Pros of BERT

- Focused on pre-trained language representations, offering state-of-the-art performance on various NLP tasks

- Highly influential in the NLP community, with numerous extensions and adaptations

- Simpler to use for specific NLP tasks like text classification or named entity recognition

Cons of BERT

- Limited to text-based tasks, lacking support for multi-modal or dialogue-based applications

- Less flexible for creating custom AI agents or chatbots

- Requires more domain expertise to fine-tune and adapt to specific use cases

Code Comparison

BERT example (loading the model through 🤗 Transformers rather than the original TensorFlow repository):

from transformers import BertTokenizer, BertForSequenceClassification

tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')

model = BertForSequenceClassification.from_pretrained('bert-base-uncased')

ParlAI example:

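from parlai.core.agents import Agent
from parlai.core.params import ParlaiParser
from parlai.core.worlds import DialogPartnerWorld

opt = ParlaiParser().parse_args([])
# DialogPartnerWorld expects exactly two agents that take turns speaking
agents = [Agent(opt), Agent(opt)]
world = DialogPartnerWorld(opt, agents)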

BERT focuses on text processing and classification, while ParlAI provides a framework for building dialogue agents and running interactive conversations. BERT is specialized for text-understanding tasks, whereas ParlAI offers a broader platform for developing conversational AI applications.

spaCy: 💫 Industrial-strength Natural Language Processing (NLP) in Python

Pros of spaCy

- Focused on industrial-strength natural language processing

- Offers pre-trained models for various languages

- Highly optimized for speed and efficiency

Cons of spaCy

- Limited to NLP tasks, not suitable for general dialogue systems

- Steeper learning curve for beginners

- Less flexibility for custom model architectures

Code Comparison

spaCy:

import spacy

# Requires the model to be downloaded first: python -m spacy download en_core_web_sm
nlp = spacy.load("en_core_web_sm")
doc = nlp("Apple is looking at buying U.K. startup for $1 billion")
for ent in doc.ents:
    print(ent.text, ent.label_)

ParlAI:

from parlai.scripts.interactive import Interactive

# Chat in the terminal with a pretrained model from the model zoo
Interactive.main(model_file='zoo:blender/blender_90M/model')

Summary

spaCy excels in industrial NLP tasks with pre-trained models and optimized performance, while ParlAI offers a more flexible platform for developing and evaluating dialogue systems. spaCy is better suited for specific NLP tasks, whereas ParlAI provides a broader framework for conversational AI research and development.

README

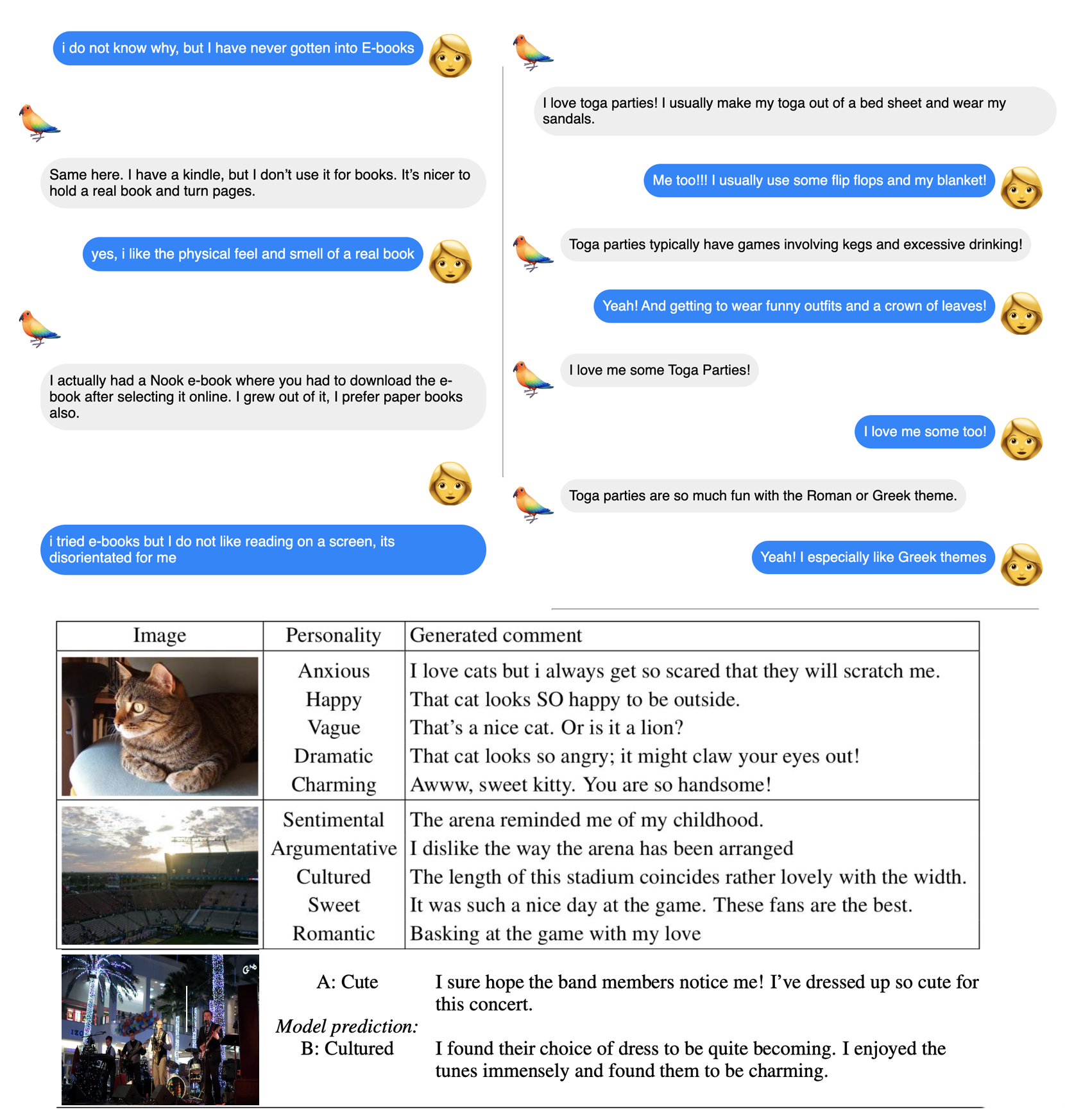

ParlAI (pronounced "par-lay") is a Python framework for sharing, training and testing dialogue models, from open-domain chitchat, to task-oriented dialogue, to visual question answering.

Its goal is to provide researchers:

- 100+ popular datasets available all in one place, with the same API, among them PersonaChat, DailyDialog, Wizard of Wikipedia, Empathetic Dialogues, SQuAD, MS MARCO, QuAC, HotpotQA, QACNN & QADailyMail, CBT, BookTest, bAbI Dialogue tasks, Ubuntu Dialogue, OpenSubtitles, Image Chat, VQA, VisDial and CLEVR. See the complete list here.

- a wide set of reference models -- from retrieval baselines to Transformers.

- a large zoo of pretrained models ready to use off-the-shelf

- seamless integration of Amazon Mechanical Turk for data collection and human evaluation

- integration with Facebook Messenger to connect agents with humans in a chat interface

- a large range of helpers to create your own agents and train on several tasks with multitasking

- multimodality: some tasks use both text and images

ParlAI is described in the following paper: "ParlAI: A Dialog Research Software Platform", arXiv:1705.06476, or see these more up-to-date slides.

Follow us on Twitter and check out our Release notes to see the latest information about new features & updates, and the website http://parl.ai for further docs. For an archived list of updates, check out NEWS.md.

Interactive Tutorial

For those who want to start with ParlAI now, you can try our Colab Tutorial.

Installing ParlAI

Operating System

ParlAI should work as intended under Linux or macOS. We do not support Windows at this time, but many users report success on Windows using Python 3.8 and issues with Python 3.9. We are happy to accept patches that improve Windows support.

Python Interpreter

ParlAI currently requires Python 3.8+.

Requirements

ParlAI supports PyTorch 1.6 or higher.

All requirements of the core modules are listed in requirements.txt. However, some models included (in parlai/agents) have additional requirements.
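To check an environment against the Python and PyTorch version requirements above, a small stdlib sketch can compare version strings numerically. The helpers `parse_version` and `meets_minimum` are hypothetical; in a real environment you would pass `torch.__version__` instead of the hard-coded example string.

```python
import re
import sys
from typing import Tuple


def parse_version(version: str) -> Tuple[int, ...]:
    """Extract the leading numeric components, e.g. '2.0.0+cu118' -> (2, 0, 0)."""
    match = re.match(r"(\d+(?:\.\d+)*)", version)
    if match is None:
        raise ValueError(f"unrecognised version string: {version!r}")
    return tuple(int(part) for part in match.group(1).split("."))


def meets_minimum(version: str, minimum: Tuple[int, ...]) -> bool:
    """True if `version` is at least `minimum`; tuples compare element by element."""
    return parse_version(version) >= minimum


# Python 3.8+ is required
print("Python OK:", sys.version_info[:2] >= (3, 8))

# PyTorch 1.6+ is supported; replace the example string with torch.__version__
print("PyTorch OK:", meets_minimum("2.0.0+cu118", (1, 6)))
```

Comparing integer tuples avoids the classic string-comparison bug where "1.10" would sort before "1.6".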

Virtual Environment

We strongly recommend you install ParlAI in a virtual environment using venv or conda.

End User Installation

If you want to use ParlAI without modifications, you can install it with:

cd /path/to/your/parlai-app

python3.8 -m venv venv

venv/bin/pip install --upgrade pip setuptools wheel

venv/bin/pip install parlai

Developer Installation

Many users will want to modify some parts of ParlAI. To set up a development environment, run the following commands to clone the repository and install ParlAI:

git clone https://github.com/facebookresearch/ParlAI.git ~/ParlAI

cd ~/ParlAI

python3.8 -m venv venv

venv/bin/pip install --upgrade pip setuptools wheel

venv/bin/python setup.py develop

Note: Sometimes installing from source may not work because of dependency issues (especially with PyTorch-related packages). In that case, try building a fresh conda environment and running something similar to the following:

conda install pytorch==2.0.0 torchvision torchaudio torchtext pytorch-cuda=11.8 -c pytorch -c nvidia

Check the PyTorch setup documentation for your CUDA and OS versions.

All needed data will be downloaded to ~/ParlAI/data. If you need to clear out the space used by these files, you can safely delete these directories; any files needed will be downloaded again.
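Before deleting anything, it can help to see which downloaded datasets take up the most space. This is a minimal stdlib sketch assuming the `~/ParlAI/data` location from the developer installation above (other installs may store data elsewhere); the helper names are hypothetical, and the code only reports sizes, it deletes nothing.

```python
import os
from pathlib import Path


def dir_size_bytes(root: Path) -> int:
    """Total size of all regular files under `root` (symlinks are not followed)."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = Path(dirpath) / name
            if path.is_file() and not path.is_symlink():
                total += path.stat().st_size
    return total


def report_subdirs(root: Path) -> dict:
    """Size in bytes of each top-level subdirectory, e.g. one per downloaded dataset."""
    return {child.name: dir_size_bytes(child) for child in sorted(root.iterdir()) if child.is_dir()}


data_dir = Path.home() / "ParlAI" / "data"  # assumed default location
if data_dir.is_dir():
    for name, size in report_subdirs(data_dir).items():
        print(f"{name:30s} {size / 1e6:10.1f} MB")
```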

Documentation

- Quick Start

- Basics: world, agents, teachers, action and observations

- Creating a new dataset/task

- List of available tasks/datasets

- Creating a seq2seq agent

- List of available agents

- Model zoo (list pretrained models)

- Running crowdsourcing tasks

- Plug into Facebook Messenger
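The Basics page listed above describes worlds, agents and teachers exchanging actions and observations. The following plain-Python sketch mimics that observe/act loop with hypothetical `ToyTeacher`, `EchoAgent` and `parley` stand-ins; it does not import ParlAI. The message keys `id`, `text`, `labels` and `episode_done` follow ParlAI's message-dict convention, but everything else is simplified.

```python
from typing import Dict, List, Optional

Message = Dict[str, object]


class ToyTeacher:
    """Hypothetical teacher: serves (question, answer) pairs and scores replies."""

    def __init__(self, examples: List[tuple]):
        self.examples = examples
        self.index = 0
        self.correct = 0
        self.last_labels: List[str] = []

    def act(self) -> Message:
        text, label = self.examples[self.index]
        self.index += 1
        self.last_labels = [label]
        return {"id": "teacher", "text": text, "labels": [label], "episode_done": True}

    def observe(self, reply: Message) -> None:
        # Compare the agent's reply with the label of the example just sent
        if reply.get("text") in self.last_labels:
            self.correct += 1

    def epoch_done(self) -> bool:
        return self.index >= len(self.examples)


class EchoAgent:
    """Hypothetical model: stores the last observation and repeats its text."""

    def __init__(self):
        self.observation: Optional[Message] = None

    def observe(self, message: Message) -> None:
        self.observation = message

    def act(self) -> Message:
        text = self.observation["text"] if self.observation else ""
        return {"id": "echo", "text": text, "episode_done": False}


def parley(teacher: ToyTeacher, agent: EchoAgent) -> None:
    """One exchange: teacher acts, agent observes and replies, teacher observes the reply."""
    agent.observe(teacher.act())
    teacher.observe(agent.act())


teacher = ToyTeacher([("hello", "hello"), ("2+2?", "4")])
agent = EchoAgent()
while not teacher.epoch_done():
    parley(teacher, agent)
print(f"accuracy: {teacher.correct}/{len(teacher.examples)}")  # accuracy: 1/2
```

Keeping both sides to the same two methods (`observe` and `act`) is what lets any model be paired with any task in this style of design.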

Examples

A large set of scripts can be found in parlai/scripts. Here are a few of them.

Note: If any of these examples fail, check the installation section to see if you have missed something.

Display 10 random examples from the SQuAD task:

parlai display_data -t squad

Evaluate an IR baseline model on the validation set of the PersonaChat task:

parlai eval_model -m ir_baseline -t personachat -dt valid
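The `ir_baseline` model is a retrieval model: it picks the candidate response that best matches the dialogue context. As a rough illustration only, the sketch below ranks candidates with a simplified TF-IDF-weighted word overlap; the scoring details and helper names are assumptions, not ParlAI's actual implementation.

```python
import math
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z0-9']+", text.lower())


def idf_table(corpus: List[str]) -> dict:
    """Inverse document frequency: rare words get more weight than common ones."""
    doc_freq = Counter()
    for doc in corpus:
        doc_freq.update(set(tokenize(doc)))
    n = len(corpus)
    return {word: math.log((1 + n) / (1 + df)) + 1 for word, df in doc_freq.items()}


def score(context: str, candidate: str, idf: dict) -> float:
    """Sum the IDF weights of the words shared by the context and the candidate."""
    shared = set(tokenize(context)) & set(tokenize(candidate))
    return sum(idf.get(word, 0.0) for word in shared)


def rank(context: str, candidates: List[str], idf: dict) -> List[str]:
    return sorted(candidates, key=lambda c: score(context, c, idf), reverse=True)


candidates = [
    "i love to go hiking on weekends",
    "my favorite food is pizza",
    "i have two dogs and a cat",
]
idf = idf_table(candidates)
print(rank("do you have any pets like a dog or cat?", candidates, idf)[0])
# i have two dogs and a cat
```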

Train a single-layer transformer ranker on PersonaChat (requires PyTorch and torchtext). Details: embedding size 300, 4 attention heads, 2 epochs with batch size 64; word vectors are initialized with fastText, and the other elements of the batch are used as negatives during training.

parlai train_model -t personachat -m transformer/ranker -mf /tmp/model_tr6 --n-layers 1 --embedding-size 300 --ffn-size 600 --n-heads 4 --num-epochs 2 -veps 0.25 -bs 64 -lr 0.001 --dropout 0.1 --embedding-type fasttext_cc --candidates batch
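With `--candidates batch`, each example's true response is scored against the other responses in the same batch, which act as negatives. The sketch below shows that technique as a softmax cross-entropy over dot-product scores, in plain Python with toy 2-D vectors standing in for the transformer's encodings; it illustrates in-batch negatives in general and is not ParlAI's `transformer/ranker` code.

```python
import math
from typing import List

Vector = List[float]


def dot(u: Vector, v: Vector) -> float:
    return sum(a * b for a, b in zip(u, v))


def in_batch_negative_loss(contexts: List[Vector], responses: List[Vector]) -> float:
    """Mean cross-entropy where row i's positive is responses[i] and the rest are negatives.

    scores[i][j] = <context_i, response_j>; the correct "class" for row i is j == i.
    """
    assert len(contexts) == len(responses)
    total = 0.0
    for i, ctx in enumerate(contexts):
        scores = [dot(ctx, resp) for resp in responses]
        max_s = max(scores)  # subtract the max for a numerically stable log-sum-exp
        log_sum_exp = max_s + math.log(sum(math.exp(s - max_s) for s in scores))
        total += log_sum_exp - scores[i]
    return total / len(contexts)


contexts = [[1.0, 0.0], [0.0, 1.0]]
aligned = [[5.0, 0.0], [0.0, 5.0]]  # each context matches its own response
swapped = [[0.0, 5.0], [5.0, 0.0]]  # each context matches the other response
print(in_batch_negative_loss(contexts, aligned) < in_batch_negative_loss(contexts, swapped))  # True
```

Reusing the batch as the candidate set gives each example many negatives at no extra encoding cost, since those responses are already encoded for their own examples.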

Code Organization

The code is organized into several main directories:

- core: contains the primary code for the framework

- agents: contains agents which can interact with the different tasks (e.g. machine learning models)

- scripts: contains a number of useful scripts, like training, evaluating, interactive chatting, ...

- tasks: contains code for the different tasks available from within ParlAI

- mturk: contains code for setting up Mechanical Turk, as well as sample MTurk tasks

- messenger: contains code for interfacing with Facebook Messenger

- utils: contains a wide range of frequently used utility methods

- crowdsourcing: contains code for running crowdsourcing tasks, such as on Amazon Mechanical Turk

- chat_service: contains code for interfacing with services such as Facebook Messenger

- zoo: contains code to directly download and use pretrained models from our model zoo

Support

If you have any questions, bug reports or feature requests, please don't hesitate to post on our GitHub Issues page. You may also be interested in checking out our FAQ and our Tips n Tricks.

Please remember to follow our Code of Conduct.

Contributing

We welcome PRs from the community!

You can find information about contributing to ParlAI in our Contributing document.

The Team

ParlAI is currently maintained by Moya Chen, Emily Dinan, Dexter Ju, Mojtaba Komeili, Spencer Poff, Pratik Ringshia, Stephen Roller, Kurt Shuster, Eric Michael Smith, Megan Ung, Jack Urbanek, Jason Weston, Mary Williamson, and Jing Xu. Kurt Shuster is the current Tech Lead.

Former major contributors and maintainers include Alexander H. Miller, Margaret Li, Will Feng, Adam Fisch, Jiasen Lu, Antoine Bordes, Devi Parikh, Dhruv Batra, Filipe de Avila Belbute Peres, Chao Pan, and Vedant Puri.

Citation

Please cite the arXiv paper if you use ParlAI in your work:

@article{miller2017parlai,
  title={ParlAI: A Dialog Research Software Platform},
  author={{Miller}, A.~H. and {Feng}, W. and {Fisch}, A. and {Lu}, J. and {Batra}, D. and {Bordes}, A. and {Parikh}, D. and {Weston}, J.},
  journal={arXiv preprint arXiv:{1705.06476}},
  year={2017}
}

License

ParlAI is MIT licensed. See the LICENSE file for details.
