Top Related Projects

jeelizFaceFilter: JavaScript/WebGL lightweight face tracking library designed for augmented reality webcam filters. Features: multiple face detection, rotation, mouth opening. Various integration examples are provided (Three.js, Babylon.js, FaceSwap, Canvas2D, CSS3D...).

AR.js: Image tracking, location-based AR and marker tracking, all on the web.

Quick Overview

MindAR (mind-ar-js) is an open-source web augmented reality library that provides image tracking and face tracking. It lets developers create AR experiences that run directly in web browsers, without users having to install an app.

Pros

- Easy to use and integrate into web projects

- Supports multiple image targets

- Works across various devices and browsers

- No app installation required for end-users

Cons

- Performance may vary depending on device capabilities

- Limited to image and face tracking (no marker, location-based, 3D object or environment tracking)

- Requires good lighting conditions for optimal performance

- May have higher latency compared to native AR solutions

Code Examples

- Basic AR scene setup:

<script src="https://cdn.jsdelivr.net/npm/mind-ar@1.2.1/dist/mindar-image.prod.js"></script>
<script src="https://aframe.io/releases/1.2.0/aframe.min.js"></script>
<script src="https://cdn.jsdelivr.net/npm/mind-ar@1.2.1/dist/mindar-image-aframe.prod.js"></script>

<a-scene mindar-image="imageTargetSrc: ./targets.mind;" color-space="sRGB" renderer="colorManagement: true, physicallyCorrectLights" vr-mode-ui="enabled: false" device-orientation-permission-ui="enabled: false">
  <a-camera position="0 0 0" look-controls="enabled: false"></a-camera>
  <!-- Children of this entity follow the first image (index 0) in targets.mind -->
  <a-entity mindar-image-target="targetIndex: 0">
    <a-plane color="blue" opacity="0.5" position="0 0 0" height="0.552" width="1" rotation="0 0 0"></a-plane>
  </a-entity>
</a-scene>

- Adding a 3D model to the AR scene:

<a-entity mindar-image-target="targetIndex: 0">
  <!-- animation-mixer comes from the aframe-extras library, which must be loaded separately -->
  <a-gltf-model rotation="0 0 0" position="0 0 0.1" scale="0.005 0.005 0.005" src="https://cdn.jsdelivr.net/gh/hiukim/mind-ar-js@1.2.1/examples/image-tracking/assets/card-example/softmind/scene.gltf" animation-mixer></a-gltf-model>
</a-entity>

- Handling events in AR:

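// Assumes the target entity was given an id, e.g.
// <a-entity id="example-target" mindar-image-target="targetIndex: 0">
const sceneEl = document.querySelector('a-scene');
const exampleTarget = sceneEl.querySelector('#example-target');

// Fired when MindAR starts tracking this image target
exampleTarget.addEventListener("targetFound", event => {
  console.log("target found");
});

// Fired when the target is no longer tracked
exampleTarget.addEventListener("targetLost", event => {
  console.log("target lost");
});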
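The same two events can drive state across several targets. Below is a minimal sketch of a hypothetical helper, `trackVisibility`, which is not part of MindAR. It keeps the set of currently visible targets and reports that set whenever a `targetFound` or `targetLost` event fires. The helper relies only on the standard `addEventListener` API, so it accepts the `<a-entity mindar-image-target>` elements in a page or any other `EventTarget`.

```javascript
// Hypothetical helper, not part of MindAR: keeps the set of currently
// visible image targets and reports it on every targetFound/targetLost.
// `targets` maps a label of your choice to an EventTarget (in the browser,
// an <a-entity mindar-image-target> element).
function trackVisibility(targets, onChange) {
  const visible = new Set();
  for (const [label, el] of Object.entries(targets)) {
    el.addEventListener("targetFound", () => {
      visible.add(label);
      onChange([...visible]);
    });
    el.addEventListener("targetLost", () => {
      visible.delete(label);
      onChange([...visible]);
    });
  }
  return visible; // live Set, updated as events arrive
}
```

In a page, you might call `trackVisibility({ example: document.querySelector('#example-target') }, names => console.log(names))`, assuming the entity carries that id.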

Getting Started

- Include the necessary scripts in your HTML file:

<script src="https://cdn.jsdelivr.net/npm/mind-ar@1.2.1/dist/mindar-image.prod.js"></script>
<script src="https://aframe.io/releases/1.2.0/aframe.min.js"></script>
<script src="https://cdn.jsdelivr.net/npm/mind-ar@1.2.1/dist/mindar-image-aframe.prod.js"></script>

- Create an AR scene with an image target:

<a-scene mindar-image="imageTargetSrc: ./targets.mind;" color-space="sRGB" renderer="colorManagement: true, physicallyCorrectLights" vr-mode-ui="enabled: false" device-orientation-permission-ui="enabled: false">
  <a-camera position="0 0 0" look-controls="enabled: false"></a-camera>
  <a-entity mindar-image-target="targetIndex: 0">
    <!-- Add your AR content here -->
  </a-entity>
</a-scene>

- Generate a .mind file for your image target using the MindAR image target compiler on the MindAR documentation site (https://hiukim.github.io/mind-ar-js-doc/tools/compile).

Competitor Comparisons

jeelizFaceFilter: JavaScript/WebGL lightweight face tracking library designed for augmented reality webcam filters. Features: multiple face detection, rotation, mouth opening. Various integration examples are provided (Three.js, Babylon.js, FaceSwap, Canvas2D, CSS3D...).

Pros of jeelizFaceFilter

- Specializes in face detection and tracking for AR filters

- Offers real-time performance with low latency

- Provides a wide range of pre-built face filters and effects

Cons of jeelizFaceFilter

- Limited to face-based AR applications

- May require more setup and configuration for custom filters

- Less suitable for marker-based or image tracking AR

Code Comparison

jeelizFaceFilter:

JEELIZFACEFILTER.init({
  canvasId: 'jeeFaceFilterCanvas',   // id of the <canvas> element to render into
  NNCPath: '../../../neuralNets/',    // location of the neural network model files
  callbackReady: function(errCode, spec) {
    if (errCode) return console.log('AN ERROR OCCURRED. SORRY BRO :( . ERR =', errCode);
    console.log('INFO : JEELIZFACEFILTER IS READY');
  },
  callbackTrack: function(detectState) {
    // Called on every tracking iteration; render your 3D scene here
  }
});

mind-ar-js:

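// Assumes three.js and the MindAR three.js build are loaded, and that this
// code runs in an ES module or async function (required for `await`).
const mindarThree = new window.MINDAR.IMAGE.MindARThree({
  container: document.body,
  imageTargetSrc: './targets.mind',
});
const {renderer, scene, camera} = mindarThree;

// Objects added to the anchor's group follow image target 0
const anchor = mindarThree.addAnchor(0);
anchor.group.add(myObject3D); // myObject3D: any THREE.Object3D you created

await mindarThree.start(); // starts the camera and tracking
renderer.setAnimationLoop(() => {
  renderer.render(scene, camera);
});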

Both libraries offer AR capabilities, but jeelizFaceFilter focuses on face tracking and filters, while mind-ar-js provides more general image-based AR tracking. The code examples show the initialization process for each library, highlighting their different approaches and use cases.

AR.js: Image tracking, location-based AR and marker tracking, all on the web.

Pros of AR.js

- Wider range of tracking options (image, marker, location-based)

- Better documentation and community support

- More mature project with longer development history

Cons of AR.js

- Larger file size and potentially slower performance

- Less focus on natural feature tracking

- May require more setup and configuration

Code Comparison

MindAR.js:

<script src="https://cdn.jsdelivr.net/npm/mind-ar@1.1.5/dist/mindar-image.prod.js"></script>
<script src="https://aframe.io/releases/1.2.0/aframe.min.js"></script>
<script src="https://cdn.jsdelivr.net/npm/mind-ar@1.1.5/dist/mindar-image-aframe.prod.js"></script>

<a-scene mindar-image="imageTargetSrc: ./targets.mind;" color-space="sRGB" renderer="colorManagement: true, physicallyCorrectLights" vr-mode-ui="enabled: false" device-orientation-permission-ui="enabled: false">
  <a-camera position="0 0 0" look-controls="enabled: false"></a-camera>
  <a-entity mindar-image-target="targetIndex: 0">
    <a-plane color="blue" opacity="0.5" position="0 0 0" height="0.552" width="1" rotation="0 0 0"></a-plane>
  </a-entity>
</a-scene>

AR.js:

<script src="https://aframe.io/releases/1.0.4/aframe.min.js"></script>
<script src="https://raw.githack.com/AR-js-org/AR.js/master/aframe/build/aframe-ar.js"></script>

<a-scene embedded arjs='sourceType: webcam; debugUIEnabled: false;'>
  <a-marker preset="hiro">
    <a-box position='0 0.5 0' material='color: yellow;'></a-box>
  </a-marker>
  <a-entity camera></a-entity>
</a-scene>

Both libraries build on A-Frame for 3D rendering, so in both cases the A-Frame script must be loaded alongside the library's own A-Frame integration. AR.js uses marker-based tracking in this example (the built-in "hiro" marker preset), while MindAR.js tracks a natural image that has been compiled into a .mind file.

README

MindAR

MindAR is a web augmented reality library. Highlighted features include:

:star: Supports image tracking and face tracking. For location-based or fiducial-marker tracking, check out AR.js

:star: Written in pure JavaScript, end to end, from the underlying computer vision engine to the frontend

:star: Uses the GPU (through WebGL) and web workers for performance

:star: Developer friendly and easy to set up. With the A-Frame extension, you can create an app with only 10 lines of code

Fundraising

MindAR is the only actively maintained web AR SDK that offers features comparable to commercial alternatives. I currently maintain this library as an individual developer. To raise funds for continuous development and to provide timely support and responses to issues, here is a list of related projects and services that you can support.

Unity WebAR FoundationWebAR Foundation is a unity package that allows Unity developer to build WebGL-platform AR applications. It acts as a Unity Plugin that wraps around popular Web SDK. If you are a Unity developer, check it out! https://github.com/hiukim/unity-webar-foundation

|

|

Web AR Development CourseI'm offering a WebAR development course in Udemy. It's a very comprehensive guide to Web AR development, not limited to MindAR. Check it out if you are interested: https://www.udemy.com/course/introduction-to-web-ar-development/?referralCode=D2565F4CA6D767F30D61 |

|

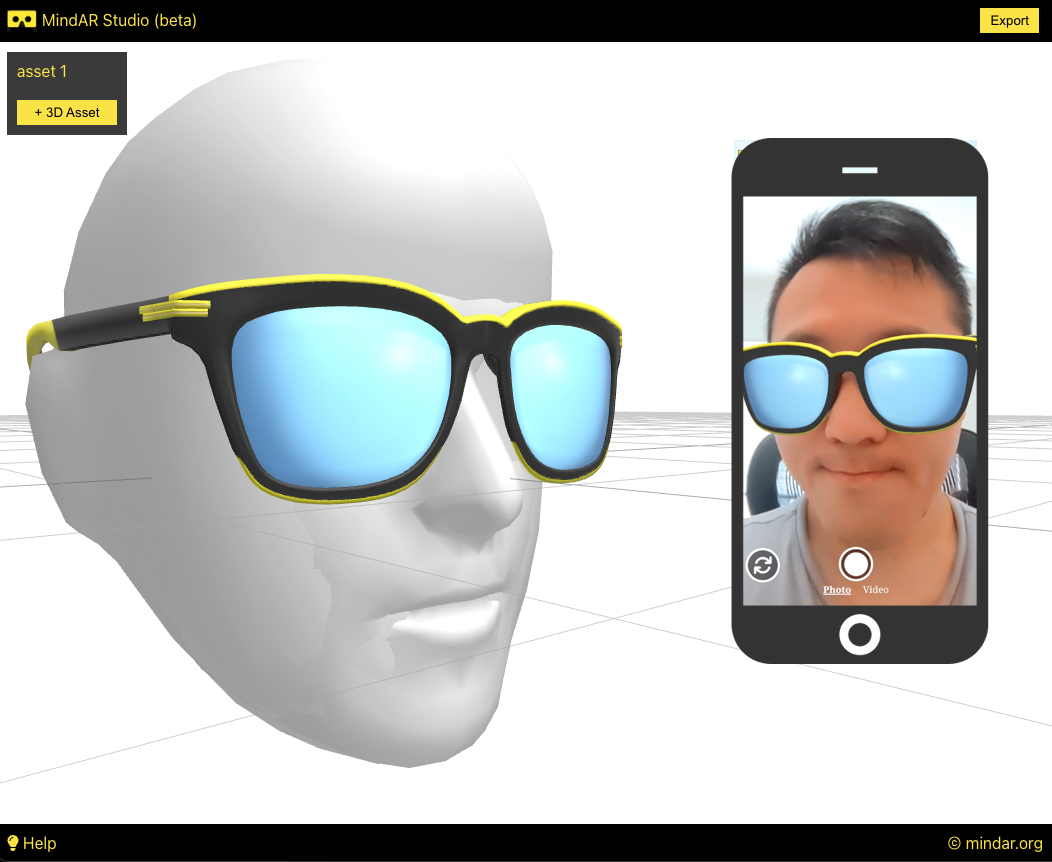

MindAR StudioMindAR Studio allows you to build Face Tracking AR without coding. You can build AR effects through a drag-n-drop editor and export static webpages for self-host. Free to use! Check it out if you are interested! https://studio.mindar.org |

|

PictarizePictarize is a hosted platform for creating and publishing Image Tracking AR applications. Free to use! Check it out if you are interested! https://pictarize.com |

|

Documentation

Official Documentation: https://hiukim.github.io/mind-ar-js-doc

Demo - Try it yourself

- Image Tracking - Basic Example
  Demo video: https://youtu.be/hgVB9HpQpqY
  Try it yourself: https://hiukim.github.io/mind-ar-js-doc/examples/basic/

- Image Tracking - Multiple Targets Example
  Try it yourself: https://hiukim.github.io/mind-ar-js-doc/examples/multi-tracks

- Image Tracking - Interactive Example
  Demo video: https://youtu.be/gm57gL1NGoQ
  Try it yourself: https://hiukim.github.io/mind-ar-js-doc/examples/interative

- Face Tracking - Virtual Try-On Example
  Try it yourself: https://hiukim.github.io/mind-ar-js-doc/face-tracking-examples/tryon

- Face Tracking - Face Mesh Effect
  Try it yourself: https://hiukim.github.io/mind-ar-js-doc/more-examples/threejs-face-facemesh

More examples

More examples can be found here: https://hiukim.github.io/mind-ar-js-doc/examples/summary

Quick Start

Learn how to build the Basic example above in 5 minutes with a plain text editor!

Quick Start Guide: https://hiukim.github.io/mind-ar-js-doc/quick-start/overview

To give you a quick idea, this is the complete source code for the Basic example. It's a static HTML page, so you can host it anywhere.

<html>
  <head>
    <meta name="viewport" content="width=device-width, initial-scale=1" />
    <script src="https://cdn.jsdelivr.net/gh/hiukim/mind-ar-js@1.1.4/dist/mindar-image.prod.js"></script>
    <script src="https://aframe.io/releases/1.2.0/aframe.min.js"></script>
    <script src="https://cdn.jsdelivr.net/gh/hiukim/mind-ar-js@1.1.4/dist/mindar-image-aframe.prod.js"></script>
  </head>
  <body>
    <a-scene mindar-image="imageTargetSrc: https://cdn.jsdelivr.net/gh/hiukim/mind-ar-js@1.1.4/examples/image-tracking/assets/card-example/card.mind;" color-space="sRGB" renderer="colorManagement: true, physicallyCorrectLights" vr-mode-ui="enabled: false" device-orientation-permission-ui="enabled: false">
      <a-assets>
        <img id="card" src="https://cdn.jsdelivr.net/gh/hiukim/mind-ar-js@1.1.4/examples/image-tracking/assets/card-example/card.png" />
        <a-asset-item id="avatarModel" src="https://cdn.jsdelivr.net/gh/hiukim/mind-ar-js@1.1.4/examples/image-tracking/assets/card-example/softmind/scene.gltf"></a-asset-item>
      </a-assets>

      <a-camera position="0 0 0" look-controls="enabled: false"></a-camera>
      <a-entity mindar-image-target="targetIndex: 0">
        <a-plane src="#card" position="0 0 0" height="0.552" width="1" rotation="0 0 0"></a-plane>
        <a-gltf-model rotation="0 0 0" position="0 0 0.1" scale="0.005 0.005 0.005" src="#avatarModel" animation="property: position; to: 0 0.1 0.1; dur: 1000; easing: easeInOutQuad; loop: true; dir: alternate"></a-gltf-model>
      </a-entity>
    </a-scene>
  </body>
</html>

Target Images Compiler

If you are not sure what a target image is, go through the Quick Start guide first. You can compile your own target images right in the browser with this compiler tool:

https://hiukim.github.io/mind-ar-js-doc/tools/compile

Roadmaps

- Support more augmented reality features, such as hand tracking, body tracking and plane tracking

- Research different state-of-the-art algorithms to improve tracking accuracy and performance

- More educational references

Contributions

I personally don't come from a strong computer vision background, and I'm having a hard time improving the tracking accuracy. I could really use some help from computer vision experts. Please reach out and discuss.

JavaScript experts are also welcome to help with the non-engine parts, such as improving the APIs.

If you are a graphic designer or 3D artist, you can contribute to the visuals. Even if you just use MindAR to develop some cool applications, please show us!

Any contribution you can think of is welcome. It's an open-source web AR framework for everyone!

Development Guide

Directories explained

- /src folder contains the majority of the source code

- /examples folder contains examples to test out during development

To create a production build

Run > npm run build. The build will be generated in the dist folder.

For development

To develop the three.js version, run > npm run watch. This watches for file changes in the src folder and continuously builds the artifacts into dist-dev.

To develop the A-Frame version, you need to run > npm run build-dev every time you make changes, because the --watch parameter currently fails to generate mindar-XXX-aframe.js automatically.

All the examples in the examples folder are configured to use this development build, so you can open them in a browser to start debugging or developing.

The examples run in a desktop browser, and since they are just HTML files, it's easy to start developing. However, because they require camera access, you need a webcam. You also need to serve the HTML files from a localhost web server; simply opening the files won't work.

For example, you can install this Chrome extension to start a local server: https://chrome.google.com/webstore/detail/web-server-for-chrome/ofhbbkphhbklhfoeikjpcbhemlocgigb?hl=en

You will most likely want to test on mobile devices as well. In that case, it helps to set up your development environment so that your mobile devices can reach your localhost web server. If that is difficult, perhaps because you are behind a firewall, you can use a tunnel such as ngrok (https://ngrok.com/). This is not an ideal solution, because the development build of MindAR is not small (>10 MB), and tunneling through the free version of ngrok can be slow.

WebGL backend

This library uses TensorFlow.js (https://github.com/tensorflow/tfjs) for its WebGL backend. Yes, TensorFlow is a machine learning library, but we don't use it for machine learning! :) TensorFlow.js has a very solid WebGL engine that lets us write general-purpose GPU applications (in this case, our AR application).

The core detection and tracking algorithms are written as custom operations in TensorFlow.js. These operations are similar to shader programs. They might look intimidating at first, but they're actually not that difficult to understand.
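The comparison to shader programs becomes clearer with a concrete model. Below is a plain-JavaScript illustration of that programming model; it is neither MindAR's code nor the TensorFlow.js custom-op API. A kernel computes one output element from that element's coordinates, and a runner invokes the kernel independently for every element. Because no invocation depends on another, a GPU can run them in parallel. The example kernel halves a grayscale image by averaging 2x2 blocks. It is a hypothetical stand-in for the kind of step a feature detector might use to build an image pyramid.

```javascript
// Plain-JavaScript illustration of the shader-style programming model:
// `kernel(x, y)` computes ONE output element, and runKernel invokes it
// independently for every output coordinate (a GPU would do this in parallel).
function runKernel(outWidth, outHeight, kernel) {
  const out = new Float32Array(outWidth * outHeight);
  for (let y = 0; y < outHeight; y++) {
    for (let x = 0; x < outWidth; x++) {
      out[y * outWidth + x] = kernel(x, y);
    }
  }
  return out;
}

// Hypothetical example kernel: halve a grayscale image (row-major pixel
// array) by averaging each 2x2 block of input pixels.
function downsample(pixels, width, height) {
  const outWidth = Math.floor(width / 2);
  const outHeight = Math.floor(height / 2);
  const at = (x, y) => pixels[y * width + x];
  const data = runKernel(outWidth, outHeight, (x, y) =>
    (at(2 * x, 2 * y) + at(2 * x + 1, 2 * y) +
     at(2 * x, 2 * y + 1) + at(2 * x + 1, 2 * y + 1)) / 4
  );
  return { data, width: outWidth, height: outHeight };
}
```

In an actual WebGL shader, the two loops in `runKernel` disappear. The GPU runs the kernel body once per output pixel, and the body obtains its own output coordinates from the runtime instead of receiving them as arguments.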

Credits

The computer vision idea is borrowed from ARToolKit (https://github.com/artoolkitx/artoolkit5). Unfortunately, that library no longer seems to be maintained.

Face tracking is based on the MediaPipe Face Mesh model (https://google.github.io/mediapipe/solutions/face_mesh.html).
