Top Related Projects

Video, Image and GIF upscale/enlarge(Super-Resolution) and Video frame interpolation. Achieved with Waifu2x, Real-ESRGAN, Real-CUGAN, RTX Video Super Resolution VSR, SRMD, RealSR, Anime4K, RIFE, IFRNet, CAIN, DAIN, and ACNet.

waifu2x converter ncnn version, runs fast on intel / amd / nvidia / apple-silicon GPU with vulkan

Caffe version of waifu2x

Quick Overview

Waifu2x is an image super-resolution tool that uses convolutional neural networks to upscale and denoise anime-style art and photographs. It's particularly effective for anime and manga images, but can also be used on real-world photographs. The project includes both a web-based interface and command-line tools.

Pros

- High-quality upscaling and noise reduction for anime-style images

- Supports both CPU and GPU processing

- Available as a web service, command-line tool, and GUI application

- Open-source and actively maintained

Cons

- Can be computationally intensive, especially for large images

- May produce artifacts or undesired smoothing on non-anime images

- Requires significant setup for local installation and use

- Limited customization options for advanced users

Code Examples

-- Load an image and upscale it by 2x

local image = require 'image'

local w2x = require 'waifu2x'

local input = image.load('input.png')

local output = w2x.scale(input, 2)

image.save('output.png', output)

-- Denoise an image without upscaling

local input = image.load('noisy.png')

local denoised = w2x.denoise(input, 1) -- noise level 1

image.save('denoised.png', denoised)

-- Upscale and denoise in one step

local input = image.load('small_noisy.png')

local result = w2x.scale_and_denoise(input, 2, 2) -- 2x scale, noise level 2

image.save('large_clean.png', result)

Getting Started

To use waifu2x locally:

- Clone the repository:

git clone https://github.com/nagadomi/waifu2x.git

- Install dependencies (CUDA, Torch, etc.) as per the README instructions.

- Run the waifu2x command:

th waifu2x.lua -m noise_scale -noise_level 1 -i input.jpg -o output.png

For web-based usage, visit waifu2x.udp.jp and upload your image.

Competitor Comparisons

Video, Image and GIF upscale/enlarge(Super-Resolution) and Video frame interpolation. Achieved with Waifu2x, Real-ESRGAN, Real-CUGAN, RTX Video Super Resolution VSR, SRMD, RealSR, Anime4K, RIFE, IFRNet, CAIN, DAIN, and ACNet.

Pros of Waifu2x-Extension-GUI

- User-friendly graphical interface for easier operation

- Supports multiple AI models and engines (waifu2x, Real-ESRGAN, Anime4K)

- Batch processing capabilities for multiple files

Cons of Waifu2x-Extension-GUI

- Larger file size and more system resources required

- May have slower processing speed due to additional features

- Limited to Windows operating system

Code Comparison

While a direct code comparison is not particularly relevant due to the different nature of these projects (one being a core algorithm and the other a GUI wrapper), we can look at how they handle image processing:

waifu2x:

local image = require 'image'

local t = require 'torch'

local iproc = require 'iproc'

Waifu2x-Extension-GUI:

from PyQt5.QtWidgets import QApplication, QMainWindow

from waifu2x_extension_gui import *

The waifu2x repository focuses on the core image processing algorithms using Lua and Torch, while Waifu2x-Extension-GUI uses Python and PyQt5 to create a graphical interface that wraps multiple upscaling engines.

waifu2x converter ncnn version, runs fast on intel / amd / nvidia / apple-silicon GPU with vulkan

Pros of waifu2x-ncnn-vulkan

- Utilizes GPU acceleration via Vulkan, resulting in faster processing

- Supports more platforms, including Windows, Linux, and Android

- Offers real-time video upscaling capabilities

Cons of waifu2x-ncnn-vulkan

- May produce slightly lower quality results in some cases

- Requires a Vulkan-compatible GPU for optimal performance

- Has a more complex setup process for some users

Code Comparison

waifu2x:

require 'cudnn'

require 'cunn'

local model = torch.load('models/anime_style_art_rgb_scale2.0x_model.t7')

waifu2x-ncnn-vulkan:

#include "gpu.h"

#include "waifu2x.h"

Waifu2x waifu2x;

waifu2x.load(opt.model);

The code snippets show the different approaches to loading models and utilizing GPU acceleration. waifu2x uses Torch and CUDA, while waifu2x-ncnn-vulkan employs C++ and Vulkan for GPU computations.

Caffe version of waifu2x

Pros of waifu2x-caffe

- Utilizes CUDA for faster GPU processing

- Supports multiple GPUs for parallel processing

- Includes a user-friendly GUI for easier operation

Cons of waifu2x-caffe

- Limited to Windows operating system

- Requires CUDA-compatible NVIDIA GPUs for optimal performance

- May have higher system requirements due to Caffe framework

Code Comparison

waifu2x:

function image_loader.load_rgb(file)

local img = image.load(file, 3, 'float')

return img

end

waifu2x-caffe:

cv::Mat imread_rgb_f32(const std::string &path) {

cv::Mat img = cv::imread(path, cv::IMREAD_COLOR);

img.convertTo(img, CV_32FC3, 1.0 / 255.0);

return img;

}

Both projects aim to upscale and denoise anime-style images, but they differ in implementation and target platforms. waifu2x-caffe focuses on Windows users with NVIDIA GPUs, offering potentially faster processing and a GUI. However, it sacrifices cross-platform compatibility and may have higher system requirements. The original waifu2x provides broader compatibility but may not achieve the same performance levels on supported hardware.

Pros of ailab

- More advanced AI models and techniques, potentially offering better image quality

- Broader scope beyond just image upscaling, including other AI-related tasks

- Active development and regular updates from a large company (Bilibili)

Cons of ailab

- Less focused on a single task, which may result in a more complex user experience

- Potentially higher system requirements due to more advanced AI models

- May have a steeper learning curve for users new to AI-powered image processing

Code Comparison

waifu2x:

function image_loader.load_rgb(path)

local x = image.load(path, 3, "float")

x = image.rgb2yuv(x)

return x

end

ailab:

def load_image(image_path):

img = cv2.imread(image_path)

img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)

return img / 255.0

Both repositories provide image loading functions, but ailab uses OpenCV and normalizes pixel values, while waifu2x uses a custom image library and converts to YUV color space.

README

waifu2x

Image Super-Resolution for Anime-style art using Deep Convolutional Neural Networks. Photos are also supported.

The demo application can be found at https://waifu2x.udp.jp/ (Cloud version) and https://unlimited.waifu2x.net/ (In-Browser version).

2023/02 PyTorch version

Development of waifu2x has moved to the PyTorch version's repository (nagadomi/nunif).

Summary

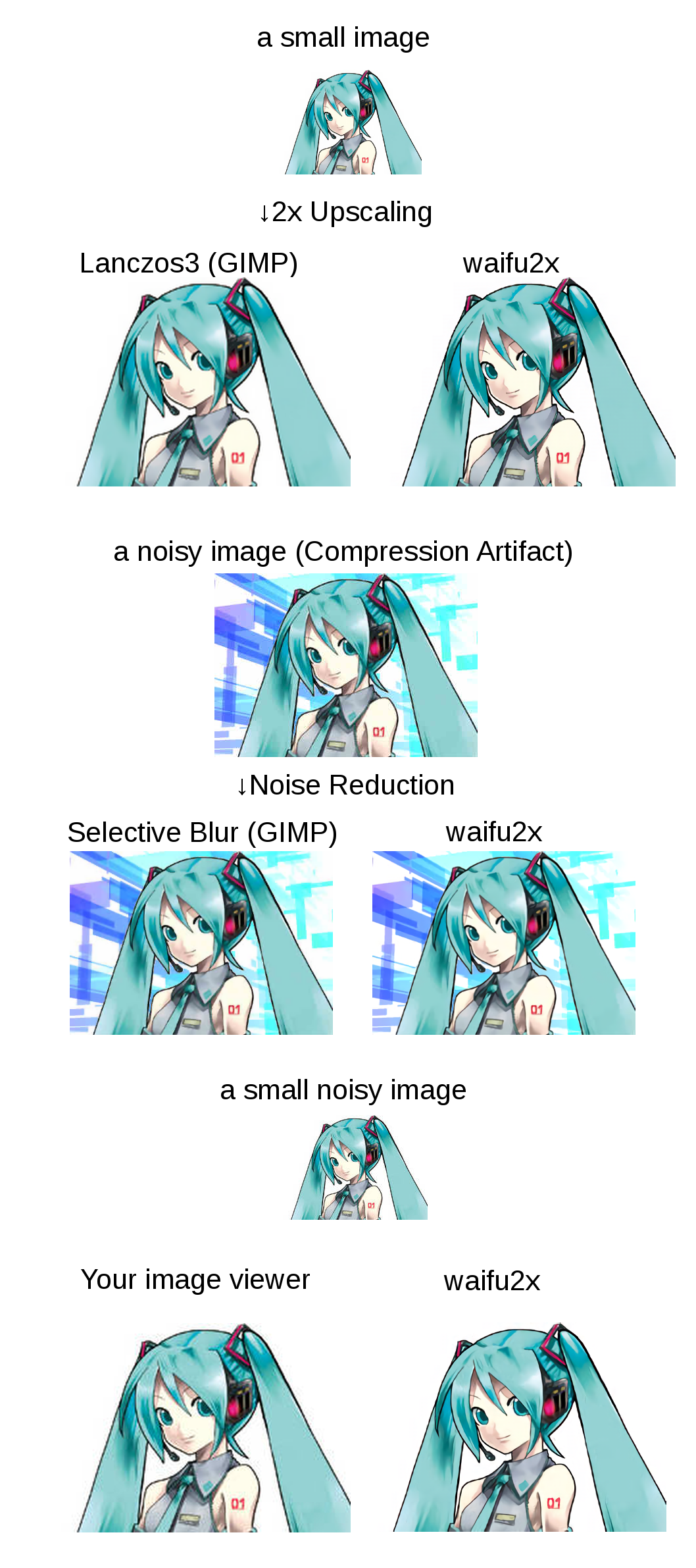

Click to see the slide show.

References

waifu2x is inspired by SRCNN [1]. 2D character picture (HatsuneMiku) is licensed under CC BY-NC by piapro [2].

- [1] Chao Dong, Chen Change Loy, Kaiming He, Xiaoou Tang, "Image Super-Resolution Using Deep Convolutional Networks", http://arxiv.org/abs/1501.00092

- [2] "For Creators", https://piapro.net/intl/en_for_creators.html

Public AMI

TODO

Third Party Software

If you are a Windows user, I recommend using waifu2x-caffe (just download it from the releases tab), waifu2x-ncnn-vulkan, or waifu2x-converter-cpp.

Dependencies

Hardware

- NVIDIA GPU

Platform

LuaRocks packages (excludes torch7's default packages)

- lua-csnappy

- md5

- uuid

- csvigo

- turbo

Installation

Setting Up the Command Line Tool Environment

(on Ubuntu 16.04)

Install CUDA

See: NVIDIA CUDA Getting Started Guide for Linux

Download CUDA

sudo dpkg -i cuda-repo-ubuntu1404_7.5-18_amd64.deb

sudo apt-get update

sudo apt-get install cuda

Install Package

sudo apt-get install libsnappy-dev

sudo apt-get install libgraphicsmagick1-dev

sudo apt-get install libssl1.0-dev # for web server

Note: waifu2x requires a GraphicsMagick build that is linked against little-cms2. If you use macOS with Homebrew, see #174.

Install Torch7

See: Getting started with Torch.

Getting waifu2x

git clone --depth 1 https://github.com/nagadomi/waifu2x.git

and install lua modules.

cd waifu2x

./install_lua_modules.sh

Validation

Testing the waifu2x command line tool.

th waifu2x.lua

Web Application

th web.lua

View at: http://localhost:8812/

Command line tools

Notes: If you have the cuDNN library, you can enable it with the -force_cudnn 1 option. cuDNN is much faster than the default kernel. If you get a GPU out-of-memory error, you can avoid it with the -crop_size option (e.g. -crop_size 128).
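The out-of-memory advice above can be automated with a small retry wrapper. This is only a sketch: `run_with_crop_fallback` and the `W2X_CMD` override are hypothetical names, the list of candidate sizes is an assumption, and the loop retries on any failure, not only on out-of-memory errors.

```shell
#!/bin/sh
# Retry a waifu2x invocation with progressively smaller -crop_size values.
# W2X_CMD is a hypothetical override so the loop can be exercised without
# a GPU; by default the real command is used.
run_with_crop_fallback() {
    for size in 128 96 64 32; do   # candidate sizes are an assumption
        # The command is intentionally unquoted so "th waifu2x.lua"
        # splits into a command and its first argument.
        if ${W2X_CMD:-th waifu2x.lua} "$@" -crop_size "$size"; then
            echo "succeeded with -crop_size $size"
            return 0
        fi
        echo "failed with -crop_size $size, retrying smaller" >&2
    done
    echo "all crop sizes failed" >&2
    return 1
}

# Usage (requires a working waifu2x install):
#   run_with_crop_fallback -m scale -i input_image.png -o output_image.png
```

Smaller crop sizes process the image in smaller patches, so the wrapper trades speed for lower peak GPU memory.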

Noise Reduction

th waifu2x.lua -m noise -noise_level 1 -i input_image.png -o output_image.png

th waifu2x.lua -m noise -noise_level 0 -i input_image.png -o output_image.png

th waifu2x.lua -m noise -noise_level 2 -i input_image.png -o output_image.png

th waifu2x.lua -m noise -noise_level 3 -i input_image.png -o output_image.png

2x Upscaling

th waifu2x.lua -m scale -i input_image.png -o output_image.png

Noise Reduction + 2x Upscaling

th waifu2x.lua -m noise_scale -noise_level 1 -i input_image.png -o output_image.png

th waifu2x.lua -m noise_scale -noise_level 0 -i input_image.png -o output_image.png

th waifu2x.lua -m noise_scale -noise_level 2 -i input_image.png -o output_image.png

th waifu2x.lua -m noise_scale -noise_level 3 -i input_image.png -o output_image.png
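To compare all four noise levels on the same image, the commands above can be generated in a loop. A minimal sketch: `print_noise_scale_commands` is a hypothetical helper that only prints the commands, and the `_n<level>` output naming is an assumption made for illustration.

```shell
#!/bin/sh
# Print one noise_scale command per noise level (0-3) for a given input.
# Nothing is executed here; the function only prints the command lines.
print_noise_scale_commands() {
    input=$1
    stem=${input%.*}   # input path without its extension
    for level in 0 1 2 3; do
        echo "th waifu2x.lua -m noise_scale -noise_level $level -i $input -o ${stem}_n${level}.png"
    done
}

print_noise_scale_commands input_image.png
```

Piping the output to `sh` runs the commands; this only works as written for paths without spaces or shell metacharacters.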

Batch conversion

find /path/to/imagedir -name "*.png" -o -name "*.jpg" > image_list.txt

th waifu2x.lua -m scale -l ./image_list.txt -o /path/to/outputdir/prefix_%d.png

The output format supports %s and %d (e.g. %06d). %s is replaced with the basename of the source filename, and %d is replaced with a sequence number.

For example, when the input filename is piyo.png, %s_%03d.png becomes piyo_001.png.
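The substitution rule can be illustrated in POSIX shell. This sketch mimics the documented behavior and is not waifu2x's own implementation; `expand_output_name` is a hypothetical helper, and it assumes the basename is taken without its extension, as in the piyo.png example.

```shell
#!/bin/sh
# Hypothetical helper: expand an output template such as "%s_%03d.png".
# Not part of waifu2x; for illustration only.
expand_output_name() {
    template=$1   # e.g. "%s_%03d.png"
    src=$2        # e.g. "/path/to/piyo.png"
    num=$3        # sequence number, e.g. 1

    # Basename without directory and without extension.
    base=$(basename "$src")
    base=${base%.*}

    # Replace every %s with the basename, then let printf format %d.
    # (Assumes the basename contains no '%', '&', '|' or backslash.)
    with_name=$(printf '%s\n' "$template" | sed "s|%s|$base|g")
    case $with_name in
        *%*) printf "$with_name\n" "$num" ;;
        *)   printf '%s\n' "$with_name" ;;
    esac
}

expand_output_name '%s_%03d.png' 'piyo.png' 1      # prints piyo_001.png
expand_output_name 'prefix_%d.png' 'a/b/c.jpg' 12  # prints prefix_12.png
```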

See also th waifu2x.lua -h.

Using photo model

To use the photo model, add -model_dir models/photo to the command line options.

For example,

th waifu2x.lua -model_dir models/photo -m scale -i input_image.png -o output_image.png

Video Encoding

* avconv is an alias of ffmpeg on Ubuntu 14.04.

Extracting images and audio from a video. (range: 00:09:00 ~ 00:12:00)

mkdir frames

avconv -i data/raw.avi -ss 00:09:00 -t 00:03:00 -r 24 -f image2 frames/%06d.png

avconv -i data/raw.avi -ss 00:09:00 -t 00:03:00 audio.mp3

Generating an image list.

find ./frames -name "*.png" |sort > data/frame.txt

waifu2x (for example, noise reduction)

mkdir new_frames

th waifu2x.lua -m noise -noise_level 1 -resume 1 -l data/frame.txt -o new_frames/%d.png

Generating a video from waifu2xed images and audio.

avconv -f image2 -framerate 24 -i new_frames/%d.png -i audio.mp3 -r 24 -vcodec libx264 -crf 16 video.mp4
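Before running the final encoding step, it can help to confirm that every extracted frame was processed, since an interrupted waifu2x run leaves new_frames with fewer images than frames. A minimal sketch; `count_png` and `check_frames` are hypothetical helpers, not part of waifu2x.

```shell
#!/bin/sh
# Count the PNG files under a directory.
count_png() {
    find "$1" -name '*.png' | wc -l | tr -d ' '
}

# Compare the number of extracted frames with the number of processed ones.
# Returns non-zero (and reports on stderr) when the counts differ.
check_frames() {
    src_count=$(count_png "$1")
    dst_count=$(count_png "$2")
    if [ "$src_count" -eq "$dst_count" ]; then
        echo "ok: $dst_count frames"
    else
        echo "mismatch: $src_count extracted, $dst_count processed" >&2
        return 1
    fi
}

# Usage, with the directory names from the steps above:
#   check_frames frames new_frames && echo "safe to encode"
```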

Train Your Own Model

Note 1: If you have the cuDNN library, you can use the cuDNN kernel with the -backend cudnn option. You can also convert a trained cudnn model to a cunn model with tools/rebuild.lua.

Note 2: The commands used to train waifu2x's pretrained models are available at appendix/train_upconv_7_art.sh and appendix/train_upconv_7_photo.sh. They may be helpful.

Data Preparation

Generating a file list.

find /path/to/image/dir -name "*.png" > data/image_list.txt

You should use noise-free images. In my case, waifu2x was trained with 6000 high-resolution, noise-free PNG images.

Converting training data.

th convert_data.lua

Train a Noise Reduction(level1) model

mkdir models/my_model

th train.lua -model_dir models/my_model -method noise -noise_level 1 -test images/miku_noisy.png

# usage

th waifu2x.lua -model_dir models/my_model -m noise -noise_level 1 -i images/miku_noisy.png -o output.png

You can check the performance of the model with models/my_model/noise1_best.png.

Train a Noise Reduction(level2) model

th train.lua -model_dir models/my_model -method noise -noise_level 2 -test images/miku_noisy.png

# usage

th waifu2x.lua -model_dir models/my_model -m noise -noise_level 2 -i images/miku_noisy.png -o output.png

You can check the performance of the model with models/my_model/noise2_best.png.

Train a 2x UpScaling model

th train.lua -model upconv_7 -model_dir models/my_model -method scale -scale 2 -test images/miku_small.png

# usage

th waifu2x.lua -model_dir models/my_model -m scale -scale 2 -i images/miku_small.png -o output.png

You can check the performance of the model with models/my_model/scale2.0x_best.png.

Train a 2x and noise reduction fusion model

th train.lua -model upconv_7 -model_dir models/my_model -method noise_scale -scale 2 -noise_level 1 -test images/miku_small.png

# usage

th waifu2x.lua -model_dir models/my_model -m noise_scale -scale 2 -noise_level 1 -i images/miku_small.png -o output.png

You can check the performance of the model with models/my_model/noise1_scale2.0x_best.png.

Docker

( Docker image is available at https://hub.docker.com/r/nagadomi/waifu2x )

Requires nvidia-docker.

docker build -t waifu2x .

docker run --gpus all -p 8812:8812 waifu2x th web.lua

docker run --gpus all -v `pwd`/images:/images waifu2x th waifu2x.lua -force_cudnn 1 -m scale -scale 2 -i /images/miku_small.png -o /images/output.png

Note that running waifu2x without JIT caching is very slow, which is what happens when you use Docker.

As a workaround, you can mount a host volume at the CUDA_CACHE_PATH, for instance:

docker run --gpus all -v $PWD/ComputeCache:/root/.nv/ComputeCache waifu2x th waifu2x.lua --help
