Promptify

Prompt Engineering | Prompt Versioning | Use GPT or other prompt based models to get structured output. Join our discord for Prompt-Engineering, LLMs and other latest research

Top Related Projects

🤗 Transformers: the model-definition framework for state-of-the-art machine learning models in text, vision, audio, and multimodal models, for both inference and training.

DeepSpeed is a deep learning optimization library that makes distributed training and inference easy, efficient, and effective.

An open-source NLP research library, built on PyTorch.

Facebook AI Research Sequence-to-Sequence Toolkit written in Python.

TensorFlow code and pre-trained models for BERT

💫 Industrial-strength Natural Language Processing (NLP) in Python

Quick Overview

Promptify is an open-source Python library designed to streamline prompt engineering workflows. It offers a range of tools for creating, managing, and optimizing prompts for large language models, making it easier for developers to work with AI-powered applications.

Pros

- Simplifies prompt engineering with a user-friendly API

- Supports multiple language models, including OpenAI's GPT models

- Offers various prompt templates and techniques for different use cases

- Includes tools for prompt versioning and management

Cons

- Limited documentation and examples for some advanced features

- May require additional setup for certain language models

- Still in active development, so some features might be unstable

- Learning curve for users new to prompt engineering concepts

Code Examples

- Basic prompt generation:

from promptify import Promptify

prompter = Promptify()

prompt = prompter.text_to_prompt("Summarize this article about climate change.")

print(prompt)

- Using a specific prompt template:

from promptify import Promptify, PromptTemplate

template = PromptTemplate("Translate the following text to {language}: {text}")

prompter = Promptify()

prompt = prompter.from_template(template, language="French", text="Hello, world!")

print(prompt)

- Generating a response using OpenAI's GPT-3:

from promptify import Promptify, OpenAILanguageModel

model = OpenAILanguageModel(api_key="your_api_key")

prompter = Promptify(model=model)

response = prompter.generate("What is the capital of France?")

print(response)
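The substitution step behind the template example above can be sketched with the standard library alone, independent of any Promptify class. The helper name `fill_template` is hypothetical and not part of Promptify. It only illustrates how named placeholders are replaced and how a missing value can be caught before a prompt is sent to a model.

```python
import string


def fill_template(template: str, **values: str) -> str:
    """Fill {named} placeholders, failing loudly if a value is missing.

    Hypothetical helper for illustration; not part of Promptify.
    """
    # Collect the placeholder names that the template actually uses.
    needed = {
        name for _, name, _, _ in string.Formatter().parse(template) if name
    }
    missing = needed - set(values)
    if missing:
        raise KeyError(f"missing template values: {sorted(missing)}")
    return template.format(**values)


prompt = fill_template(
    "Translate the following text to {language}: {text}",
    language="French",
    text="Hello, world!",
)
print(prompt)
```

Checking for missing values up front gives a clearer error than a bare `KeyError` from `str.format`, which helps when templates are edited separately from the code that fills them.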

Getting Started

To get started with Promptify, follow these steps:

- Install the library:

pip install promptify

- Import and initialize Promptify:

from promptify import Promptify

prompter = Promptify()

- Generate a simple prompt:

prompt = prompter.text_to_prompt("Write a short story about a robot.")

print(prompt)

For more advanced usage, refer to the Promptify documentation and examples in the GitHub repository.

Competitor Comparisons

🤗 Transformers: the model-definition framework for state-of-the-art machine learning models in text, vision, audio, and multimodal models, for both inference and training.

Pros of transformers

- Extensive library with support for a wide range of NLP tasks and models

- Well-documented and actively maintained by a large community

- Seamless integration with PyTorch and TensorFlow

Cons of transformers

- Steeper learning curve due to its comprehensive nature

- Can be resource-intensive for smaller projects or limited hardware

- May include unnecessary features for simple prompt engineering tasks

Code Comparison

transformers:

from transformers import pipeline

classifier = pipeline("sentiment-analysis")

result = classifier("I love this product!")[0]

print(f"Label: {result['label']}, Score: {result['score']:.4f}")

Promptify:

from promptify import Promptify

prompter = Promptify()

result = prompter.classify("I love this product!", ["positive", "negative"])

print(f"Label: {result['label']}, Score: {result['score']:.4f}")

Summary

While transformers offers a comprehensive suite of NLP tools and models, Promptify focuses specifically on prompt engineering and management. transformers is better suited for complex NLP tasks and research, while Promptify may be more appropriate for projects centered around prompt-based AI interactions. The choice between the two depends on the specific requirements of your project and the level of complexity you're willing to manage.

DeepSpeed is a deep learning optimization library that makes distributed training and inference easy, efficient, and effective.

Pros of DeepSpeed

- Focuses on optimizing deep learning training, offering significant speed improvements

- Supports a wide range of AI models and frameworks

- Provides advanced features like ZeRO optimizer and 3D parallelism

Cons of DeepSpeed

- Steeper learning curve due to its complexity and advanced features

- Primarily designed for large-scale AI training, may be overkill for smaller projects

Code Comparison

DeepSpeed:

import deepspeed

model_engine, optimizer, _, _ = deepspeed.initialize(args=args,
                                                     model=model,
                                                     model_parameters=params)

Promptify:

from promptify import Promptify

prompter = Promptify()

result = prompter.chat("Your prompt here", model="gpt-3.5-turbo")

Key Differences

- DeepSpeed is focused on optimizing deep learning training, while Promptify is designed for prompt engineering and management

- DeepSpeed offers more advanced features for large-scale AI training, whereas Promptify provides a simpler interface for working with language models

- DeepSpeed requires more setup and configuration, while Promptify aims for ease of use in prompt-based applications

An open-source NLP research library, built on PyTorch.

Pros of AllenNLP

- Comprehensive NLP toolkit with a wide range of pre-built models and components

- Extensive documentation and tutorials for ease of use

- Strong community support and regular updates

Cons of AllenNLP

- Steeper learning curve for beginners

- Primarily focused on research-oriented tasks

- Requires more setup and configuration for basic tasks

Code Comparison

AllenNLP:

from allennlp.predictors.predictor import Predictor

predictor = Predictor.from_path("https://storage.googleapis.com/allennlp-public-models/bert-base-srl-2020.03.24.tar.gz")

result = predictor.predict(sentence="Did Uriah honestly think he could beat the game in under three hours?")

Promptify:

from promptify import Promptify

prompt = Promptify()

result = prompt.ask("Summarize the following text: {text}", text="Your input text here")

AllenNLP provides a more structured approach for specific NLP tasks, while Promptify offers a simpler interface for prompt-based operations. AllenNLP requires more setup but offers greater flexibility for complex NLP tasks, whereas Promptify focuses on ease of use for prompt engineering and language model interactions.

Facebook AI Research Sequence-to-Sequence Toolkit written in Python.

Pros of fairseq

- Comprehensive toolkit for sequence modeling tasks

- Supports a wide range of architectures and models

- Highly optimized for performance and scalability

Cons of fairseq

- Steeper learning curve due to its complexity

- Requires more setup and configuration

- May be overkill for simpler NLP tasks

Code Comparison

fairseq:

from fairseq.models.transformer import TransformerModel

en2de = TransformerModel.from_pretrained(
    '/path/to/checkpoints',
    checkpoint_file='checkpoint_best.pt',
    data_name_or_path='data-bin/wmt16_en_de_bpe32k'
)

en2de.translate('Hello world!')

Promptify:

from promptify import Promptify

p = Promptify()

prompt = "Translate the following English text to French: 'Hello world!'"

response = p.generate(prompt)

print(response)

Summary

fairseq is a powerful and versatile toolkit for sequence modeling, offering a wide range of features and optimizations. However, it comes with a steeper learning curve and more complex setup. Promptify, on the other hand, provides a simpler interface for prompt-based tasks, making it easier to use for basic NLP operations but potentially lacking the advanced capabilities of fairseq.

TensorFlow code and pre-trained models for BERT

Pros of BERT

- Widely adopted and well-established pre-trained language model

- Extensive documentation and community support

- Proven performance on various NLP tasks

Cons of BERT

- Requires more computational resources for fine-tuning

- Less flexible for prompt engineering tasks

- Steeper learning curve for implementation

Code Comparison

BERT:

from transformers import BertTokenizer, BertModel

tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')

model = BertModel.from_pretrained('bert-base-uncased')

inputs = tokenizer("Hello, my dog is cute", return_tensors="pt")

outputs = model(**inputs)

Promptify:

from promptify import Promptify

p = Promptify()

prompt = "Summarize the following text: {text}"

result = p.fit(prompt, text="Your input text here")

BERT focuses on tokenization and model loading for general NLP tasks, while Promptify simplifies prompt engineering with a more straightforward API. BERT requires more setup but offers greater flexibility, whereas Promptify provides a more user-friendly approach for specific prompt-based tasks.

💫 Industrial-strength Natural Language Processing (NLP) in Python

Pros of spaCy

- Mature, well-established library with extensive documentation and community support

- Offers a wide range of NLP functionalities beyond text processing

- Highly optimized for performance, suitable for large-scale production environments

Cons of spaCy

- Steeper learning curve due to its comprehensive feature set

- Requires more system resources and has a larger footprint

- Less focused on prompt engineering and LLM-specific tasks

Code Comparison

spaCy:

import spacy

nlp = spacy.load("en_core_web_sm")

doc = nlp("Apple is looking at buying U.K. startup for $1 billion")

for ent in doc.ents:

    print(ent.text, ent.label_)

Promptify:

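from promptify import Prompter, OpenAI, Pipeline

model = OpenAI("your_api_key")  # placeholder: replace with your OpenAI API key

prompter = Prompter('ner.jinja')  # NER template, as used in the README

pipe = Pipeline(prompter, model)

text = "Apple is looking at buying U.K. startup for $1 billion"

result = pipe.fit(text, domain="business", labels=None)  # the domain value is an assumption

print(result)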

Key Differences

- spaCy is a comprehensive NLP library, while Promptify focuses on prompt engineering and LLM interactions

- Promptify offers a simpler API for quick implementation of NLP tasks

- spaCy provides more granular control over NLP pipelines and models

- Promptify is designed to work seamlessly with various LLMs and prompt-based tasks

README

Promptify

Prompt engineering: solve NLP problems with LLMs and easily generate prompts for different NLP tasks for popular generative models like GPT, PaLM, and more with Promptify.

Installation

With pip

This repository is tested on Python 3.7+, openai 0.25+.

Install Promptify with pip:

pip3 install promptify

or

pip3 install git+https://github.com/promptslab/Promptify.git
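After installing, the stated requirements (Python 3.7+, openai 0.25+) can be checked from Python. The snippet below is a minimal sketch using only the standard library. The helper name `installed_version` is hypothetical, and `importlib.metadata` itself requires Python 3.8 or newer.

```python
import sys
from importlib import metadata
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of a package, or None if it is absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


# The README states that the project is tested on Python 3.7+.
python_ok = sys.version_info >= (3, 7)
print("Python OK:", python_ok)

# Report what is installed; comparing against 0.25 is left to the reader.
for name in ("promptify", "openai"):
    print(name, "->", installed_version(name) or "not installed")
```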

Quick tour

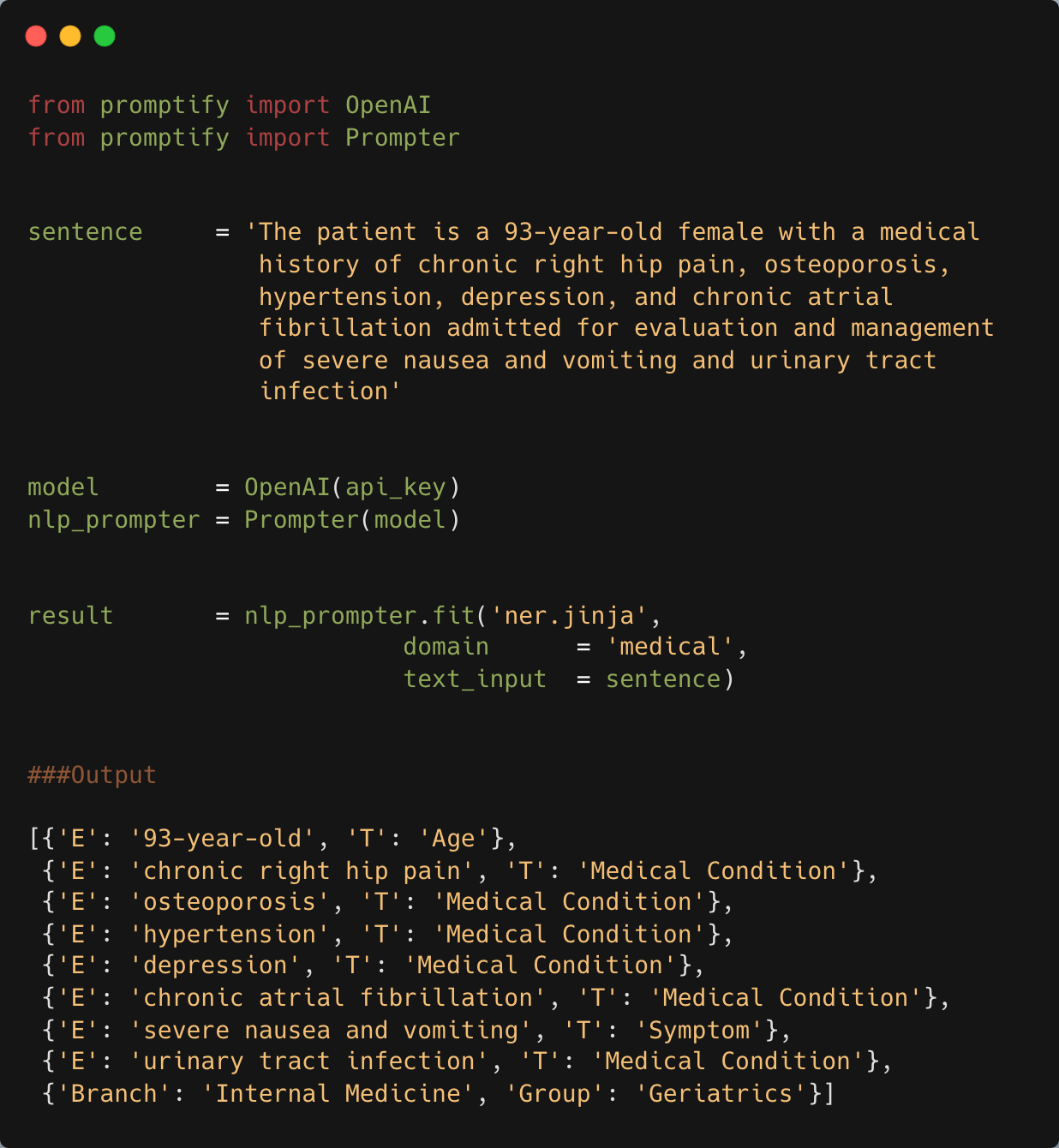

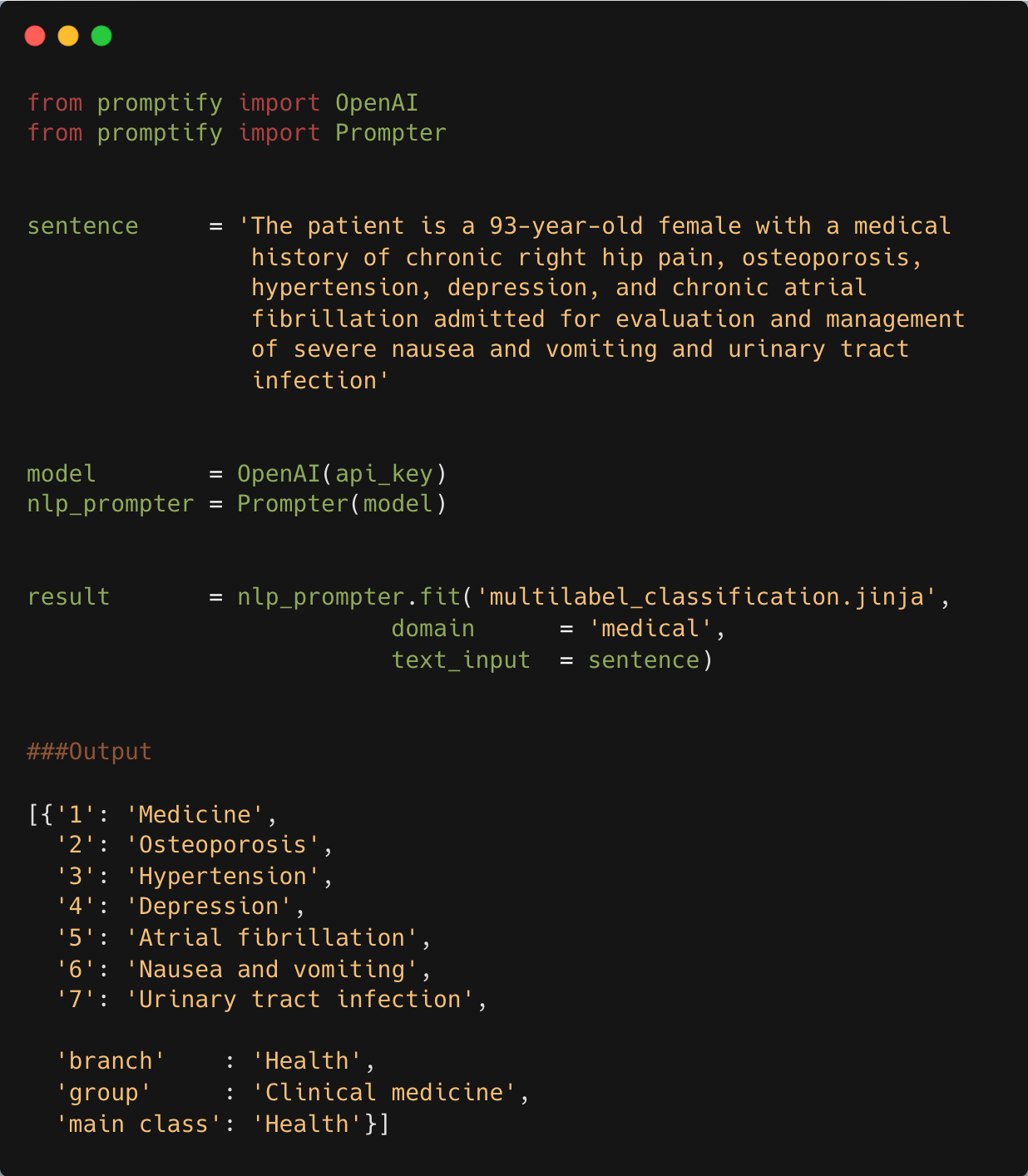

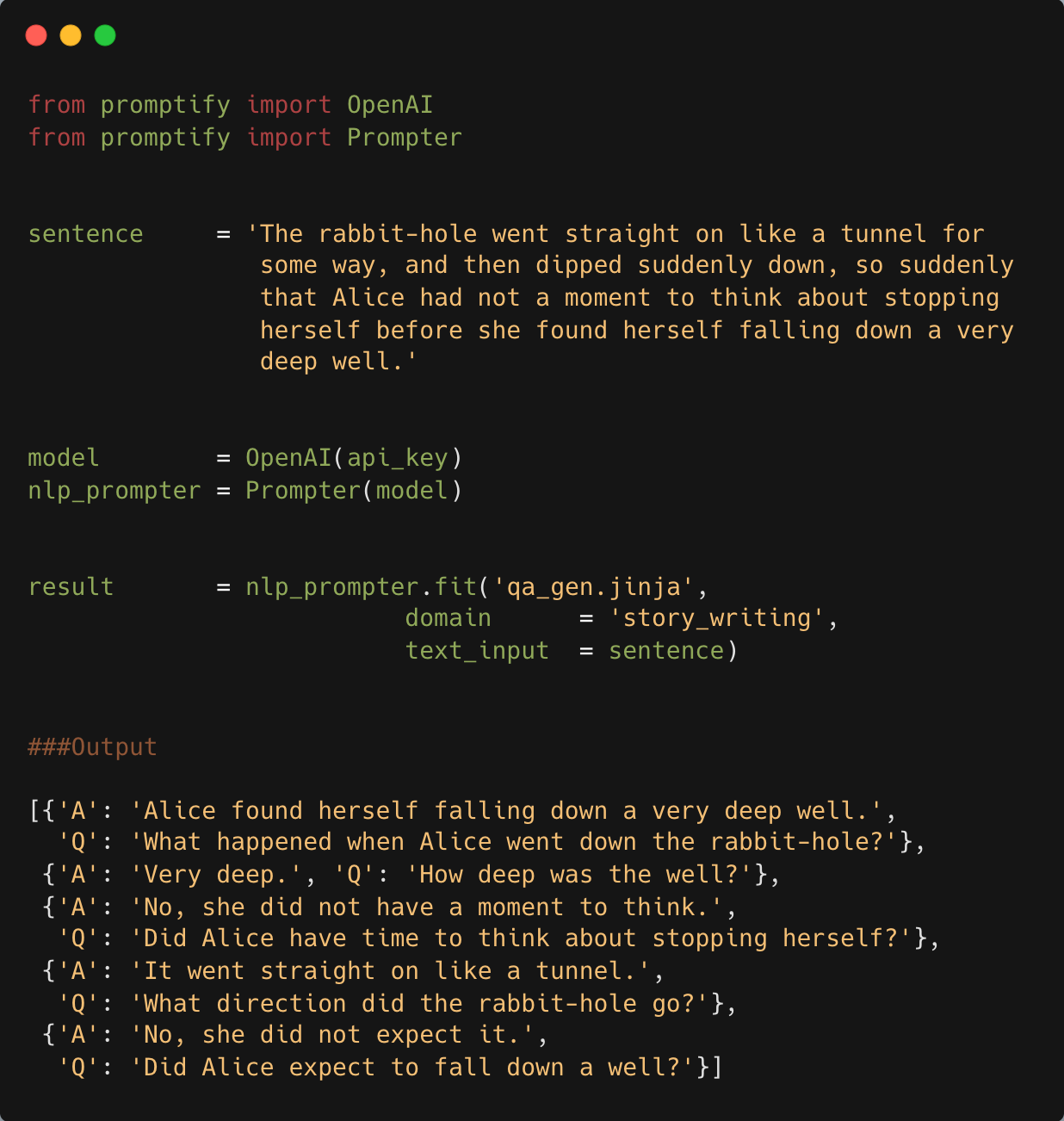

To use an LLM for your NLP task right away, we provide the Pipeline API.

from promptify import Prompter, OpenAI, Pipeline

sentence = """The patient is a 93-year-old female with a medical
history of chronic right hip pain, osteoporosis,
hypertension, depression, and chronic atrial
fibrillation admitted for evaluation and management
of severe nausea and vomiting and urinary tract
infection"""

api_key = "your_api_key"  # placeholder: replace with your OpenAI API key

model = OpenAI(api_key)  # or `HubModel()` for Huggingface-based inference, or 'Azure' etc.

prompter = Prompter('ner.jinja')  # select a template or provide a custom template

pipe = Pipeline(prompter, model)

result = pipe.fit(sentence, domain="medical", labels=None)

### Output

[

{"E": "93-year-old", "T": "Age"},

{"E": "chronic right hip pain", "T": "Medical Condition"},

{"E": "osteoporosis", "T": "Medical Condition"},

{"E": "hypertension", "T": "Medical Condition"},

{"E": "depression", "T": "Medical Condition"},

{"E": "chronic atrial fibrillation", "T": "Medical Condition"},

{"E": "severe nausea and vomiting", "T": "Symptom"},

{"E": "urinary tract infection", "T": "Medical Condition"},

{"Branch": "Internal Medicine", "Group": "Geriatrics"},

]
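Because the output is a list of dictionaries, it can be filtered with ordinary Python. The sketch below assumes the model's reply arrives as text in the format shown above and parses it defensively. The helper name `parse_entities` and the raw string are illustrative assumptions, not part of Promptify, whose actual return type may differ.

```python
import ast

# Raw text as a model might return it; entries are copied from the output above.
raw_output = """[
    {"E": "93-year-old", "T": "Age"},
    {"E": "osteoporosis", "T": "Medical Condition"},
    {"E": "severe nausea and vomiting", "T": "Symptom"},
    {"Branch": "Internal Medicine", "Group": "Geriatrics"},
]"""


def parse_entities(text):
    """Parse a Python-literal list and keep only well-formed entity dicts.

    Hypothetical helper for illustration; not part of Promptify.
    """
    try:
        items = ast.literal_eval(text)
    except (ValueError, SyntaxError):
        return []  # malformed or truncated model output
    if not isinstance(items, list):
        return []
    # Keep entries that carry both an entity ("E") and a type ("T").
    return [d for d in items if isinstance(d, dict) and {"E", "T"} <= set(d)]


entities = parse_entities(raw_output)
conditions = [e["E"] for e in entities if e["T"] == "Medical Condition"]
print(conditions)
```

`ast.literal_eval` only evaluates literals, so it is a safer choice than `eval` for text produced by a model.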

GPT-3 Example with NER, MultiLabel, Question Generation Task

Features 🎮

- Perform NLP tasks (such as NER and classification) in just 2 lines of code, with no training data required

- Easily add one shot, two shot, or few shot examples to the prompt

- Handles out-of-bounds predictions from LLMs (GPT, T5, etc.)

- Output is always provided as a Python object (e.g. list, dictionary) for easy parsing and filtering. This is a major advantage over raw LLM output, which is unstructured and therefore difficult to use in business or other applications.

- Custom examples and samples can be easily added to the prompt

- 🤗 Run inference on any model stored on the Huggingface Hub (see notebook guide).

- Optimized prompts to reduce OpenAI token costs (coming soon)
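The idea of adding one-shot or few-shot examples to a prompt can be sketched in plain Python. The function `build_few_shot_prompt` and the `Input:`/`Output:` layout are assumptions made for illustration. Promptify's own templates may format examples differently.

```python
from typing import List, Sequence, Tuple


def build_few_shot_prompt(
    instruction: str,
    examples: Sequence[Tuple[str, str]],
    query: str,
) -> str:
    """Assemble an instruction, zero or more worked examples, and the query.

    Hypothetical helper for illustration; not part of Promptify.
    """
    parts: List[str] = [instruction.strip(), ""]
    for text, answer in examples:
        parts += [f"Input: {text}", f"Output: {answer}", ""]
    # The final block is left open so the model completes the output.
    parts += [f"Input: {query}", "Output:"]
    return "\n".join(parts)


one_shot = build_few_shot_prompt(
    "Label the sentiment of each input as positive or negative.",
    [("I love this product!", "positive")],
    "The battery died after a day.",
)
print(one_shot)
```

Passing an empty list of examples yields a zero-shot prompt, and passing more pairs yields a few-shot prompt, so one function covers all three cases.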

Supports a wide range of prompt-based NLP tasks:

| Task Name | Colab Notebook | Status |
|---|---|---|
| Named Entity Recognition | NER Examples with GPT-3 | ✅ |
| Multi-Label Text Classification | Classification Examples with GPT-3 | ✅ |
| Multi-Class Text Classification | Classification Examples with GPT-3 | ✅ |
| Binary Text Classification | Classification Examples with GPT-3 | ✅ |
| Question-Answering | QA Task Examples with GPT-3 | ✅ |
| Question-Answer Generation | QA Task Examples with GPT-3 | ✅ |
| Relation-Extraction | Relation-Extraction Examples with GPT-3 | ✅ |
| Summarization | Summarization Task Examples with GPT-3 | ✅ |
| Explanation | Explanation Task Examples with GPT-3 | ✅ |
| SQL Writer | SQL Writer Example with GPT-3 | ✅ |
| Tabular Data | | |
| Image Data | | |
| More Prompts | | |

Docs

Community

@misc{Promptify2022,

title = {Promptify: Structured Output from LLMs},

author = {Pal, Ankit},

year = {2022},

howpublished = {\url{https://github.com/promptslab/Promptify}},

note = {Prompt-Engineering components for NLP tasks in Python}

}

Contributing

We welcome contributions to our open source project, including new features, improvements to infrastructure, and more comprehensive documentation. Please see the contributing guidelines.
