Top Related Projects

:robot: :speech_balloon: Deep learning for Text to Speech (Discussion forum: https://discourse.mozilla.org/c/tts)

Facebook AI Research Sequence-to-Sequence Toolkit written in Python.

Tacotron 2 - PyTorch implementation with faster-than-realtime inference

Clone a voice in 5 seconds to generate arbitrary speech in real-time

A TensorFlow Implementation of DC-TTS: yet another text-to-speech model

Quick Overview

Bark is a text-to-audio model developed by Suno AI. It can generate highly realistic multilingual speech, music, and other audio from text prompts. Bark is capable of producing various non-speech sounds like laughing, sighing, and crying, as well as generating background noises and simple music.

Pros

- Highly versatile, capable of generating speech, music, and sound effects

- Supports multiple languages and accents

- Can produce expressive and emotive audio, including non-speech sounds

- Open-source under the MIT License, which permits commercial use

Cons

- Computationally intensive, requiring significant GPU resources

- May produce inconsistent results or artifacts in some cases

- Output is limited to roughly 13-14 seconds per generation, and custom voice cloning is not supported

- Potential for misuse in creating deepfakes or misleading content

Code Examples

- Basic text-to-speech generation:

from bark import SAMPLE_RATE, generate_audio, preload_models

preload_models()

text_prompt = "Hello, world! This is a test of the Bark text-to-audio model."

audio_array = generate_audio(text_prompt)

- Generating speech with a specific speaker preset:

from bark import generate_audio, SAMPLE_RATE

text_prompt = "I'm speaking with a specific voice preset."

voice_preset = "v2/en_speaker_6"

audio_array = generate_audio(text_prompt, history_prompt=voice_preset)

- Generating non-speech sounds:

from bark import generate_audio, SAMPLE_RATE

text_prompt = "A dog barking in the distance: [bark bark]"

audio_array = generate_audio(text_prompt)

Getting Started

To get started with Bark, follow these steps:

- Install the library:

pip install git+https://github.com/suno-ai/bark.git

- Import and use Bark in your Python script:

from bark import SAMPLE_RATE, generate_audio, preload_models

preload_models()

text_prompt = "Hello, this is a test of the Bark text-to-audio model."

audio_array = generate_audio(text_prompt)

# Save the audio to a file

from scipy.io.wavfile import write as write_wav

write_wav("output.wav", SAMPLE_RATE, audio_array)

This will generate an audio file named "output.wav" with the synthesized speech from your text prompt.
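Some audio tools expect 16-bit PCM rather than floating-point WAV data. The following is a minimal sketch of a standard-library alternative for the save step, assuming `audio_array` is a one-dimensional sequence of floats in the range [-1.0, 1.0]. The helper names `float_to_pcm16` and `write_pcm16_wav` are hypothetical and not part of Bark.

```python
import struct
import wave


def float_to_pcm16(samples):
    """Clip float samples to [-1.0, 1.0] and scale them to 16-bit integers."""
    pcm = []
    for sample in samples:
        clipped = max(-1.0, min(1.0, float(sample)))
        pcm.append(int(round(clipped * 32767)))
    return pcm


def write_pcm16_wav(path, samples, sample_rate):
    """Write mono float samples to `path` as a 16-bit PCM WAV file."""
    pcm = float_to_pcm16(samples)
    with wave.open(path, "wb") as wav_file:
        wav_file.setnchannels(1)            # mono output
        wav_file.setsampwidth(2)            # 2 bytes = 16 bits per sample
        wav_file.setframerate(sample_rate)  # e.g. Bark's SAMPLE_RATE
        # Pack all samples as little-endian signed 16-bit integers
        wav_file.writeframes(struct.pack(f"<{len(pcm)}h", *pcm))
```

With Bark installed, this could be called as `write_pcm16_wav("output.wav", audio_array, SAMPLE_RATE)` in place of the `write_wav` call.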

Competitor Comparisons

:robot: :speech_balloon: Deep learning for Text to Speech (Discussion forum: https://discourse.mozilla.org/c/tts)

Pros of TTS

- More established project with a longer history and larger community

- Supports a wider range of TTS models and techniques

- Better documentation and examples for integration

Cons of TTS

- Generally slower inference times compared to Bark

- Less focus on multi-lingual and multi-speaker capabilities

- May require more setup and configuration for advanced use cases

Code Comparison

TTS example:

from TTS.api import TTS

tts = TTS(model_name="tts_models/en/ljspeech/tacotron2-DDC")

tts.tts_to_file(text="Hello world!", file_path="output.wav")

Bark example:

from bark import SAMPLE_RATE, generate_audio, preload_models

preload_models()

text_prompt = "Hello world!"

audio_array = generate_audio(text_prompt)

Both repositories offer text-to-speech capabilities, but they differ in their approach and focus. TTS provides a more comprehensive toolkit for various TTS models, while Bark aims for simplicity and quick results with its pre-trained model. TTS may be better suited for researchers and developers looking for flexibility, while Bark could be preferable for those seeking rapid prototyping or simple integration.

Facebook AI Research Sequence-to-Sequence Toolkit written in Python.

Pros of fairseq

- More comprehensive and versatile, supporting a wide range of NLP tasks

- Extensive documentation and examples for various use cases

- Larger community and more frequent updates

Cons of fairseq

- Steeper learning curve due to its complexity and broad scope

- Requires more computational resources for training and inference

- Less focused on specific text-to-speech applications

Code Comparison

fairseq:

from fairseq.models.transformer import TransformerModel

model = TransformerModel.from_pretrained('/path/to/model', 'checkpoint.pt')

# translate() takes a raw sentence and handles encoding and decoding internally

translation = model.translate('Hello world')

bark:

from bark import SAMPLE_RATE, generate_audio, preload_models

preload_models()

text_prompt = "Hello world"

audio_array = generate_audio(text_prompt)

Summary

fairseq is a more comprehensive NLP toolkit suitable for various tasks, while bark is specifically focused on text-to-speech generation. fairseq offers greater flexibility and a larger community, but comes with increased complexity and resource requirements. bark provides a simpler interface for audio generation but has a narrower scope. The choice between the two depends on the specific project requirements and the desired balance between versatility and ease of use.

Tacotron 2 - PyTorch implementation with faster-than-realtime inference

Pros of Tacotron2

- Well-established and widely used in the research community

- Supports fine-tuning on custom datasets

- Produces high-quality speech synthesis with natural-sounding prosody

Cons of Tacotron2

- Requires more computational resources and training time

- Limited to single-speaker models without additional modifications

- May struggle with out-of-domain text or unusual pronunciations

Code Comparison

Bark:

from bark import SAMPLE_RATE, generate_audio, preload_models

preload_models()

text = "Hello, I'm a text-to-speech model."

audio_array = generate_audio(text)

Tacotron2:

from tacotron2.hparams import create_hparams

from tacotron2.model import Tacotron2

from tacotron2.stft import STFT

hparams = create_hparams()

model = Tacotron2(hparams)

stft = STFT(hparams.filter_length, hparams.hop_length, hparams.win_length)

Bark is designed for ease of use and quick generation of diverse voices, while Tacotron2 offers more control over the speech synthesis process and is better suited for research and custom model development. Bark's simplicity makes it more accessible for beginners, whereas Tacotron2's flexibility is advantageous for advanced users and researchers in the field of speech synthesis.

Clone a voice in 5 seconds to generate arbitrary speech in real-time

Pros of Real-Time-Voice-Cloning

- Focuses on real-time voice cloning, allowing for immediate results

- Provides a user-friendly interface for easy interaction

- Supports custom dataset creation for personalized voice cloning

Cons of Real-Time-Voice-Cloning

- Centered on cloning a reference voice; does not generate music or non-speech sound effects

- May require more computational resources for real-time processing

- Less versatile in terms of output options compared to Bark

Code Comparison

Real-Time-Voice-Cloning:

encoder = VoiceEncoder()

embed = encoder.embed_utterance(wav)

specs = synthesizer.synthesize_spectrograms([text], [embed])

generated_wav = vocoder.invert_spectrogram(specs[0])

Bark:

from bark import SAMPLE_RATE, generate_audio, preload_models

preload_models()

text_prompt = "Hello, this is a test."

audio_array = generate_audio(text_prompt)

Real-Time-Voice-Cloning focuses on cloning specific voices in real-time, while Bark is a more general text-to-audio model with a broader range of capabilities. Real-Time-Voice-Cloning offers more control over the voice being cloned but requires more setup and processing. Bark, on the other hand, provides a simpler API for generating audio from text, making it easier to use for general text-to-speech tasks but with less customization for specific voices.

A TensorFlow Implementation of DC-TTS: yet another text-to-speech model

Pros of dc_tts

- Simpler architecture, potentially easier to understand and modify

- Faster inference time due to its lightweight design

- Focuses specifically on text-to-speech, which may be beneficial for targeted applications

Cons of dc_tts

- Limited in scope compared to Bark's multi-modal capabilities

- May produce lower quality audio output

- Less active development and community support

Code Comparison

dc_tts:

def text2mel(text):

    # Text to mel-spectrogram conversion

    return mel_spectrogram

def ssrn(mel):

    # Mel-spectrogram to waveform conversion

    return waveform

Bark:

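from bark.generation import generate_text_semantic, generate_coarse, generate_fine, codec_decode

def generate_audio(text):

    # Generate semantic tokens from the text

    semantic_tokens = generate_text_semantic(text)

    # Convert semantic tokens to coarse, then fine, audio codec tokens

    coarse_tokens = generate_coarse(semantic_tokens)

    fine_tokens = generate_fine(coarse_tokens)

    # Decode the codec tokens into a waveform

    audio_array = codec_decode(fine_tokens)

    return audio_array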

The code comparison highlights the difference in complexity between the two projects. dc_tts uses a simpler two-step process for text-to-speech conversion, while Bark employs a more sophisticated multi-stage approach that includes semantic token generation and multiple levels of audio synthesis.

README

Notice: Bark is Suno's open-source text-to-speech+ model. If you are looking for our text-to-music models, please visit us on our web page and join our community on Discord.

🐶 Bark


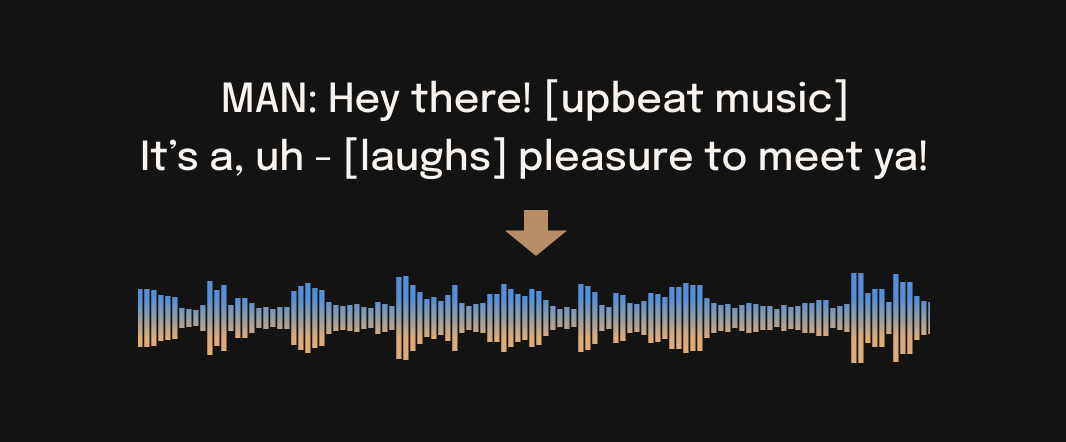

Bark is a transformer-based text-to-audio model created by Suno. Bark can generate highly realistic, multilingual speech as well as other audio - including music, background noise and simple sound effects. The model can also produce nonverbal communications like laughing, sighing and crying. To support the research community, we are providing access to pretrained model checkpoints, which are ready for inference and available for commercial use.

⚠ Disclaimer

Bark was developed for research purposes. It is not a conventional text-to-speech model but instead a fully generative text-to-audio model, which can deviate in unexpected ways from provided prompts. Suno does not take responsibility for any output generated. Use at your own risk, and please act responsibly.

📖 Quick Index

🎧 Demos

🚀 Updates

2023.05.01

- ©️ Bark is now licensed under the MIT License, meaning it's now available for commercial use!

- ⚡ 2x speed-up on GPU. 10x speed-up on CPU. We also added an option for a smaller version of Bark, which offers additional speed-up with the trade-off of slightly lower quality.

- 📕 Long-form generation, voice consistency enhancements and other examples are now documented in a new notebooks section.

- 👥 We created a voice prompt library. We hope this resource helps you find useful prompts for your use cases! You can also join us on Discord, where the community actively shares useful prompts in the #audio-prompts channel.

- 💬 Growing community support and access to new features here:

- 💾 You can now use Bark with GPUs that have low VRAM (<4GB).

2023.04.20

- 🐶 Bark release!

🐍 Usage in Python

🪑 Basics

from bark import SAMPLE_RATE, generate_audio, preload_models

from scipy.io.wavfile import write as write_wav

from IPython.display import Audio

# download and load all models

preload_models()

# generate audio from text

text_prompt = """

Hello, my name is Suno. And, uh — and I like pizza. [laughs]

But I also have other interests such as playing tic tac toe.

"""

audio_array = generate_audio(text_prompt)

# save audio to disk

write_wav("bark_generation.wav", SAMPLE_RATE, audio_array)

# play text in notebook

Audio(audio_array, rate=SAMPLE_RATE)

🌎 Foreign Language

Bark supports various languages out-of-the-box and automatically determines language from input text. When prompted with code-switched text, Bark will attempt to employ the native accent for the respective languages. English quality is best for the time being, and we expect other languages to further improve with scaling.

text_prompt = """

추석은 내가 가장 좋아하는 명절이다. 나는 며칠 동안 휴식을 취하고 친구 및 가족과 시간을 보낼 수 있습니다.

"""

audio_array = generate_audio(text_prompt)

Note: since Bark recognizes languages automatically from input text, it is possible to use, for example, a German history prompt with English text. This usually leads to English audio with a German accent.

text_prompt = """

Der Dreißigjährige Krieg (1618-1648) war ein verheerender Konflikt, der Europa stark geprägt hat.

This is a beginning of the history. If you want to hear more, please continue.

"""

audio_array = generate_audio(text_prompt)

🎶 Music

Bark can generate all types of audio, and, in principle, doesn't see a difference between speech and music. Sometimes Bark chooses to generate text as music, but you can help it out by adding music notes around your lyrics.

text_prompt = """

♪ In the jungle, the mighty jungle, the lion barks tonight ♪

"""

audio_array = generate_audio(text_prompt)

🎤 Voice Presets

Bark supports 100+ speaker presets across supported languages. You can browse the library of supported voice presets HERE, or in the code. The community also often shares presets in Discord.

Bark tries to match the tone, pitch, emotion and prosody of a given preset, but does not currently support custom voice cloning. The model also attempts to preserve music, ambient noise, etc.

text_prompt = """

I have a silky smooth voice, and today I will tell you about

the exercise regimen of the common sloth.

"""

audio_array = generate_audio(text_prompt, history_prompt="v2/en_speaker_1")
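Preset identifiers such as `v2/en_speaker_1` follow a regular pattern, so they can be built programmatically. The sketch below is illustrative only: the helper `preset_name` is hypothetical, the language codes come from the supported-languages table in this README, and the assumption of ten speakers per language (indexes 0 to 9) should be checked against the preset library.

```python
# Language codes listed in the README's supported-languages table
SUPPORTED_LANGUAGES = (
    "en", "de", "es", "fr", "hi", "it", "ja",
    "ko", "pl", "pt", "ru", "tr", "zh",
)


def preset_name(language, speaker_index, speakers_per_language=10):
    """Build a preset id such as 'v2/en_speaker_1'.

    Assumption: each language has `speakers_per_language` presets,
    numbered from 0. Verify this against the preset library.
    """
    if language not in SUPPORTED_LANGUAGES:
        raise ValueError(f"unsupported language code: {language!r}")
    if not 0 <= speaker_index < speakers_per_language:
        raise ValueError(f"speaker index out of range: {speaker_index}")
    return f"v2/{language}_speaker_{speaker_index}"
```

The result can be passed directly as `history_prompt`, for example `generate_audio(text_prompt, history_prompt=preset_name("de", 3))`.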

📃 Generating Longer Audio

By default, generate_audio works well with around 13 seconds of spoken text. For an example of how to do long-form generation, see the long-form generation notebook in the repository's notebooks section.
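One common way to work within the roughly 13-second window is to split a long script into short chunks, generate each chunk separately, and join the results with brief pauses. The following is a minimal sketch of that idea, not necessarily the method used in the notebook. All helper names are hypothetical, the sentence splitter is deliberately naive, and the 30-word limit is an assumed stand-in for "about 13 seconds of speech".

```python
import re


def split_sentences(text):
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [part for part in parts if part]


def group_into_chunks(sentences, max_words=30):
    """Greedily pack sentences into chunks of at most `max_words` words.

    A single sentence longer than `max_words` is kept as its own chunk.
    """
    chunks, current, count = [], [], 0
    for sentence in sentences:
        n_words = len(sentence.split())
        if current and count + n_words > max_words:
            chunks.append(" ".join(current))
            current, count = [], 0
        current.append(sentence)
        count += n_words
    if current:
        chunks.append(" ".join(current))
    return chunks


def generate_long_form(text, generate_fn, sample_rate,
                       pause_seconds=0.25, max_words=30):
    """Generate each chunk with `generate_fn` and join them with silence.

    `generate_fn` maps a text chunk to a sequence of float samples.
    Returns a flat list of samples.
    """
    silence = [0.0] * int(pause_seconds * sample_rate)
    samples = []
    for chunk in group_into_chunks(split_sentences(text), max_words):
        samples.extend(generate_fn(chunk))
        samples.extend(silence)
    return samples
```

With Bark, `generate_fn` could be `lambda chunk: generate_audio(chunk, history_prompt="v2/en_speaker_6")`, so that every chunk uses the same preset, and `sample_rate` would be `SAMPLE_RATE`. Converting the returned list to a NumPy float32 array before saving is left to the caller.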


Command line

python -m bark --text "Hello, my name is Suno." --output_filename "example.wav"

💻 Installation

‼️ CAUTION ‼️ Do NOT use pip install bark. It installs a different package, which is not managed by Suno.

pip install git+https://github.com/suno-ai/bark.git

or

git clone https://github.com/suno-ai/bark

cd bark && pip install .

🤗 Transformers Usage

Bark is available in the 🤗 Transformers library from version 4.31.0 onwards, requiring minimal dependencies and additional packages. Steps to get started:

- First install the 🤗 Transformers library from main:

pip install git+https://github.com/huggingface/transformers.git

- Run the following Python code to generate speech samples:

from transformers import AutoProcessor, BarkModel

processor = AutoProcessor.from_pretrained("suno/bark")

model = BarkModel.from_pretrained("suno/bark")

voice_preset = "v2/en_speaker_6"

inputs = processor("Hello, my dog is cute", voice_preset=voice_preset)

audio_array = model.generate(**inputs)

audio_array = audio_array.cpu().numpy().squeeze()

- Listen to the audio samples either in an ipynb notebook:

from IPython.display import Audio

sample_rate = model.generation_config.sample_rate

Audio(audio_array, rate=sample_rate)

Or save them as a .wav file using a third-party library, e.g. scipy:

import scipy.io.wavfile

sample_rate = model.generation_config.sample_rate

scipy.io.wavfile.write("bark_out.wav", rate=sample_rate, data=audio_array)

For more details on using the Bark model for inference using the 🤗 Transformers library, refer to the Bark docs or the hands-on Google Colab.

🛠️ Hardware and Inference Speed

Bark has been tested and works on both CPU and GPU (pytorch 2.0+, CUDA 11.7 and CUDA 12.0).

On enterprise GPUs and PyTorch nightly, Bark can generate audio in roughly real-time. On older GPUs, default Colab, or CPU, inference time might be significantly slower. For older GPUs or CPU you might want to consider using smaller models. Details can be found in our tutorial sections here.

The full version of Bark requires around 12GB of VRAM to hold everything on GPU at the same time.

To use a smaller version of the models, which should fit into 8GB VRAM, set the environment flag SUNO_USE_SMALL_MODELS=True.
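The memory figures above suggest a simple rule for choosing settings. The sketch below is one hedged way to encode it. The function names are hypothetical, the cutoffs are taken from the approximate figures quoted in this README (about 12GB for the full models, about 8GB for the small ones), and `SUNO_OFFLOAD_CPU` is the flag shown in the FAQ for low-VRAM cards. It assumes the flags are set before Bark is imported.

```python
import os


def bark_env_for_vram(vram_gb):
    """Suggest Bark environment flags for a GPU with `vram_gb` GB of memory.

    The thresholds are approximate and come from the README's own figures.
    """
    if vram_gb >= 12:
        return {}  # full models should fit on the GPU
    if vram_gb >= 8:
        return {"SUNO_USE_SMALL_MODELS": "True"}
    # Low-VRAM cards: use small models and offload idle models to the CPU
    return {"SUNO_USE_SMALL_MODELS": "True", "SUNO_OFFLOAD_CPU": "True"}


def apply_bark_env(vram_gb, environ=os.environ):
    """Write the suggested flags into `environ` and return them."""
    settings = bark_env_for_vram(vram_gb)
    environ.update(settings)
    return settings
```

A script would call `apply_bark_env(6)` at the very top and only then run `from bark import generate_audio, preload_models`.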

If you don't have hardware available or if you want to play with bigger versions of our models, you can also sign up for early access to our model playground here.

⚙️ Details

Bark is a fully generative text-to-audio model developed for research and demo purposes. It follows a GPT-style architecture similar to AudioLM and Vall-E and uses a quantized audio representation from EnCodec. It is not a conventional TTS model, but instead a fully generative text-to-audio model capable of deviating in unexpected ways from any given script. Unlike previous approaches, the input text prompt is converted directly to audio without the intermediate use of phonemes. It can therefore generalize to arbitrary instructions beyond speech such as music lyrics, sound effects or other non-speech sounds.

Below is a list of some known non-speech sounds, but we are finding more every day. Please let us know if you find patterns that work particularly well on Discord!

- [laughter]

- [laughs]

- [sighs]

- [music]

- [gasps]

- [clears throat]

- — or ... for hesitations

- ♪ for song lyrics

- CAPITALIZATION for emphasis of a word

- [MAN] and [WOMAN] to bias Bark toward male and female speakers, respectively
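The markers listed above are plain text, so prompts can be assembled with ordinary string helpers. The following is a small sketch with hypothetical helper names. It only builds prompt strings, and because Bark is generative there is no guarantee that a given marker produces the intended sound.

```python
import re

MUSIC_NOTE = "\u266a"  # the music-note character used around song lyrics


def emphasize(sentence, word):
    """Upper-case whole-word occurrences of `word` to request emphasis."""
    pattern = rf"\b{re.escape(word)}\b"
    return re.sub(pattern, lambda match: match.group(0).upper(), sentence)


def as_lyrics(text):
    """Wrap text in music notes to nudge Bark toward singing."""
    return f"{MUSIC_NOTE} {text.strip()} {MUSIC_NOTE}"


def with_speaker_bias(text, speaker):
    """Prefix [MAN] or [WOMAN] to bias the speaker's voice."""
    tags = {"man": "[MAN]", "woman": "[WOMAN]"}
    if speaker not in tags:
        raise ValueError("speaker must be 'man' or 'woman'")
    return f"{tags[speaker]} {text}"


def with_sound(text, sound):
    """Append a bracketed non-speech cue such as [laughs] or [sighs]."""
    return f"{text} [{sound}]"
```

For example, `with_sound(emphasize("I really like pizza.", "really"), "laughs")` yields a prompt string that can be passed to `generate_audio`.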

Supported Languages

| Language | Status |
|---|---|
| English (en) | ✅ |
| German (de) | ✅ |
| Spanish (es) | ✅ |
| French (fr) | ✅ |
| Hindi (hi) | ✅ |
| Italian (it) | ✅ |
| Japanese (ja) | ✅ |
| Korean (ko) | ✅ |
| Polish (pl) | ✅ |
| Portuguese (pt) | ✅ |
| Russian (ru) | ✅ |
| Turkish (tr) | ✅ |
| Chinese, simplified (zh) | ✅ |

Requests for future language support here or in the #forums channel on Discord.

🙏 Appreciation

- nanoGPT for a dead-simple and blazing fast implementation of GPT-style models

- EnCodec for a state-of-the-art implementation of a fantastic audio codec

- AudioLM for related training and inference code

- Vall-E, AudioLM and many other ground-breaking papers that enabled the development of Bark

© License

Bark is licensed under the MIT License.

📱 Community

🎧 Suno Studio (Early Access)

We're developing a playground for our models, including Bark.

If you are interested, you can sign up for early access here.

❓ FAQ

How do I specify where models are downloaded and cached?

- Bark uses Hugging Face to download and store models. You can find more info here.

Bark's generations sometimes differ from my prompts. What's happening?

- Bark is a GPT-style model. As such, it may take some creative liberties in its generations, resulting in higher-variance model outputs than traditional text-to-speech approaches.

What voices are supported by Bark?

- Bark supports 100+ speaker presets across supported languages. You can browse the library of speaker presets here. The community also shares presets in Discord. Bark also supports generating unique random voices that fit the input text. Bark does not currently support custom voice cloning.

Why is the output limited to ~13-14 seconds?

- Bark is a GPT-style model, and its architecture/context window is optimized to output generations with roughly this length.

How much VRAM do I need?

- The full version of Bark requires around 12GB of memory to hold everything on GPU at the same time. However, even smaller cards down to ~2GB work with some additional settings. Simply add the following code snippet before your generation:

import os

os.environ["SUNO_OFFLOAD_CPU"] = "True"

os.environ["SUNO_USE_SMALL_MODELS"] = "True"

My generated audio sounds like a 1980s phone call. What's happening?

- Bark generates audio from scratch. It is not meant to create only high-fidelity, studio-quality speech. Rather, outputs could be anything from perfect speech to multiple people arguing at a baseball game recorded with bad microphones.
