Top Related Projects

Machine Learning Pipelines for Kubeflow

Apache Airflow - A platform to programmatically author, schedule, and monitor workflows

The open source developer platform to build AI/LLM applications and models with confidence. Enhance your AI applications with end-to-end tracking, observability, and evaluations, all in one integrated platform.

The Open Source Feature Store for AI/ML

The easiest way to serve AI apps and models - Build Model Inference APIs, Job queues, LLM apps, Multi-model pipelines, and more!

Quick Overview

TensorFlow Extended (TFX) is an end-to-end platform for deploying production machine learning pipelines. It provides a set of components and tools that help data scientists and engineers build, test, and deploy robust ML systems.

Pros

- Scalable and Extensible: TFX is built on top of TensorFlow and scales to large datasets and models.

- Modular Design: TFX has a modular design, allowing users to easily integrate custom components or replace existing ones.

- Production-Ready: TFX is designed for production use, with features like monitoring, versioning, and deployment automation.

- Ecosystem Integration: TFX integrates with various tools and services in the ML ecosystem, such as Kubeflow, Apache Beam, and BigQuery.

Cons

- Steep Learning Curve: TFX has a relatively steep learning curve, especially for users new to the TensorFlow ecosystem.

- Limited Documentation: The documentation for TFX, while improving, can still be challenging to navigate for some users.

- Performance Overhead: The additional abstraction and tooling provided by TFX can introduce some performance overhead compared to a more lightweight approach.

- Vendor Lock-in: TFX is tightly integrated with the TensorFlow ecosystem, which may limit its adoption for users who prefer other ML frameworks.

Code Examples

# Define a simple TFX pipeline
from tfx.components.example_gen.csv_example_gen.component import CsvExampleGen
from tfx.components.trainer.component import Trainer
from tfx.dsl.components.base.executor_spec import ExecutorClassSpec
from tfx.extensions.google_cloud_ai_platform.trainer.executor import Executor as AITrainerExecutor
from tfx.orchestration.experimental.interactive.interactive_context import InteractiveContext

# Create an InteractiveContext (intended for notebook use)
context = InteractiveContext()

# Define the components
example_gen = CsvExampleGen(input_base='path/to/data')

trainer = Trainer(
    module_file='path/to/trainer_module.py',
    custom_executor_spec=ExecutorClassSpec(AITrainerExecutor),
    examples=example_gen.outputs['examples'])

# Run the components one at a time; InteractiveContext.run() takes a single component
context.run(example_gen)
context.run(trainer)

This code example demonstrates how to define a simple TFX pipeline with two components: CsvExampleGen and Trainer. CsvExampleGen reads the CSV files under input_base and generates examples, and Trainer trains a model on them using the Google Cloud AI Platform Trainer executor.

# Define a custom TFX component
from tfx.dsl.components.base import base_component, base_executor, executor_spec
from tfx.types import channel_utils, standard_artifacts
from tfx.types.component_spec import ChannelParameter, ComponentSpec

class MyCustomComponentSpec(ComponentSpec):
    # Declares the component's parameters, inputs, and outputs
    PARAMETERS = {}
    INPUTS = {'input_data': ChannelParameter(type=standard_artifacts.Examples)}
    OUTPUTS = {'output_data': ChannelParameter(type=standard_artifacts.Examples)}

class MyCustomComponentExecutor(base_executor.BaseExecutor):
    def Do(self, input_dict, output_dict, exec_properties):
        # Component logic goes here: read input_dict['input_data'] and
        # write results to output_dict['output_data']
        pass

class MyCustomComponent(base_component.BaseComponent):
    SPEC_CLASS = MyCustomComponentSpec
    EXECUTOR_SPEC = executor_spec.ExecutorClassSpec(MyCustomComponentExecutor)

    def __init__(self, input_data, output_data=None):
        if not output_data:
            output_data = channel_utils.as_channel([standard_artifacts.Examples()])
        spec = MyCustomComponentSpec(input_data=input_data, output_data=output_data)
        super().__init__(spec=spec)

# Use the custom component (example_gen and context come from the previous example)
my_custom_component = MyCustomComponent(input_data=example_gen.outputs['examples'])
context.run(my_custom_component)

This code example demonstrates how to define a custom TFX component and use it in a pipeline. A ComponentSpec subclass declares the inputs and outputs, an executor implements the logic in its Do method, and MyCustomComponent, which inherits from BaseComponent, ties the two together through SPEC_CLASS and EXECUTOR_SPEC.

Getting Started

To get started with TFX, you can follow these steps:

- Install the TFX library:

pip install tfx

- Create a new TFX pipeline:

from tfx.orchestration.experimental.interactive.interactive_
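After the install step, a quick sanity check is to ask Python which tfx version it can see. The sketch below uses only the standard library; the helper name `installed_version` is a hypothetical name chosen for illustration, not part of TFX.

```python
from importlib import metadata
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of `package`, or None if it is not installed."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    version = installed_version("tfx")
    if version is None:
        print("tfx is not installed; run `pip install tfx` first")
    else:
        print(f"tfx {version} is installed")
```

The reported version can then be compared against the compatibility table in the README before pinning the other dependencies.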

Competitor Comparisons

Machine Learning Pipelines for Kubeflow

Pros of Pipelines

- More flexible and language-agnostic, supporting multiple ML frameworks

- Better integration with Kubernetes ecosystem and cloud-native technologies

- Stronger focus on end-to-end ML workflows, including deployment and monitoring

Cons of Pipelines

- Steeper learning curve due to Kubernetes complexity

- Less seamless integration with TensorFlow-specific features

- Potentially more resource-intensive for smaller projects

Code Comparison

TFX example:

import tfx

from tfx.components import CsvExampleGen

example_gen = CsvExampleGen(input_base='/path/to/data')

Pipelines example:

from kfp import dsl  # KFP v1 SDK; dsl.ContainerOp was removed in KFP v2

@dsl.pipeline(name='My pipeline')
def my_pipeline():
    data_op = dsl.ContainerOp(
        name='Load Data',
        image='data-loader:latest',
        arguments=['--data-path', '/path/to/data']
    )

TFX is more tightly integrated with TensorFlow, offering a simpler setup for TensorFlow-based projects. Pipelines provides a more flexible, container-based approach that can accommodate various ML frameworks and tools, but requires more configuration and Kubernetes knowledge.

Apache Airflow - A platform to programmatically author, schedule, and monitor workflows

Pros of Airflow

- More general-purpose workflow orchestration, suitable for a wide range of data processing tasks

- Larger community and ecosystem with extensive plugins and integrations

- Easier to set up and use for non-ML-specific workflows

Cons of Airflow

- Less specialized for machine learning pipelines compared to TFX

- May require more custom code for ML-specific tasks

- Lacks built-in ML model versioning and metadata tracking

Code Comparison

Airflow DAG example:

from datetime import datetime, timedelta

from airflow import DAG
from airflow.operators.python import PythonOperator  # Airflow 2.x import path

def process_data():
    pass  # Data processing logic

default_args = {'start_date': datetime(2024, 1, 1)}  # illustrative start date
dag = DAG('data_pipeline', default_args=default_args, schedule_interval=timedelta(days=1))
process_task = PythonOperator(task_id='process_data', python_callable=process_data, dag=dag)

TFX pipeline example:

from tfx import components
from tfx.orchestration import pipeline

def create_pipeline(pipeline_name, pipeline_root, data_root):
    example_gen = components.CsvExampleGen(input_base=data_root)
    statistics_gen = components.StatisticsGen(examples=example_gen.outputs['examples'])
    schema_gen = components.SchemaGen(statistics=statistics_gen.outputs['statistics'])
    return pipeline.Pipeline(
        pipeline_name=pipeline_name,
        pipeline_root=pipeline_root,
        components=[example_gen, statistics_gen, schema_gen])

The open source developer platform to build AI/LLM applications and models with confidence. Enhance your AI applications with end-to-end tracking, observability, and evaluations, all in one integrated platform.

Pros of MLflow

- More lightweight and flexible, easier to integrate with various ML frameworks

- Better support for experiment tracking and model versioning

- Simpler setup and usage, with a lower learning curve

Cons of MLflow

- Less comprehensive end-to-end ML pipeline management

- Fewer built-in components for data validation and preprocessing

- Limited support for large-scale distributed training

Code Comparison

MLflow:

import mlflow

# value1 and value2 stand for your own parameter and metric values
with mlflow.start_run():
    mlflow.log_param("param1", value1)
    mlflow.log_metric("metric1", value2)

TFX:

from tfx import components

from tfx.orchestration import pipeline

example_gen = components.CsvExampleGen(input_base=data_root)

statistics_gen = components.StatisticsGen(examples=example_gen.outputs['examples'])

schema_gen = components.SchemaGen(statistics=statistics_gen.outputs['statistics'])

MLflow focuses on experiment tracking and model management, while TFX provides a more comprehensive pipeline for production ML workflows. MLflow is easier to adopt and use with various ML frameworks, but TFX offers more robust data validation and preprocessing capabilities, especially within the TensorFlow ecosystem. The code examples highlight MLflow's simplicity in logging experiments versus TFX's pipeline-based approach for data processing and model training.

The Open Source Feature Store for AI/ML

Pros of Feast

- Lightweight and focused specifically on feature management

- Easier to integrate with existing data infrastructure

- Supports multiple data sources and storage backends out-of-the-box

Cons of Feast

- Less comprehensive ML pipeline support compared to TFX

- Smaller community and ecosystem

- Limited built-in model training and serving capabilities

Code Comparison

Feast example:

from feast import FeatureStore

store = FeatureStore("feature_repo/")

features = store.get_online_features(
    features=["driver:rating", "driver:trips_today"],
    entity_rows=[{"driver_id": 1001}]
)

TFX example:

from tfx import components

from tfx.orchestration import pipeline

example_gen = components.CsvExampleGen(input_base="data/")

statistics_gen = components.StatisticsGen(examples=example_gen.outputs['examples'])

schema_gen = components.SchemaGen(statistics=statistics_gen.outputs['statistics'])

Feast focuses on feature retrieval and management, while TFX provides a more comprehensive pipeline for data processing, model training, and deployment. Feast is more suitable for teams looking to add feature management to existing ML workflows, while TFX offers an end-to-end solution for building production-ready ML pipelines.

The easiest way to serve AI apps and models - Build Model Inference APIs, Job queues, LLM apps, Multi-model pipelines, and more!

Pros of BentoML

- Lightweight and flexible, easier to get started with for smaller projects

- Supports a wider range of ML frameworks beyond TensorFlow

- Focuses on model serving and deployment, with built-in performance optimizations

Cons of BentoML

- Less comprehensive end-to-end ML pipeline support compared to TFX

- Smaller community and ecosystem than TensorFlow/TFX

- May require more manual configuration for complex production scenarios

Code Comparison

BentoML example:

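# Legacy BentoML 0.13-style service API
import bentoml
from bentoml.adapters import JsonInput, JsonOutput
from bentoml.frameworks.sklearn import SklearnModelArtifact

@bentoml.env(pip_packages=["scikit-learn"])
@bentoml.artifacts([SklearnModelArtifact('model')])
class SklearnIrisClassifier(bentoml.BentoService):
    @bentoml.api(input=JsonInput(), output=JsonOutput())
    def predict(self, input_data):
        return self.artifacts.model.predict(input_data)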

TFX example:

from tfx.components import Trainer
from tfx.proto import trainer_pb2

# module_file, transform, and schema_gen are defined earlier in the pipeline
trainer = Trainer(
    module_file=module_file,
    examples=transform.outputs['transformed_examples'],
    transform_graph=transform.outputs['transform_graph'],
    schema=schema_gen.outputs['schema'],
    train_args=trainer_pb2.TrainArgs(num_steps=10000),
    eval_args=trainer_pb2.EvalArgs(num_steps=5000)
)

README

TFX

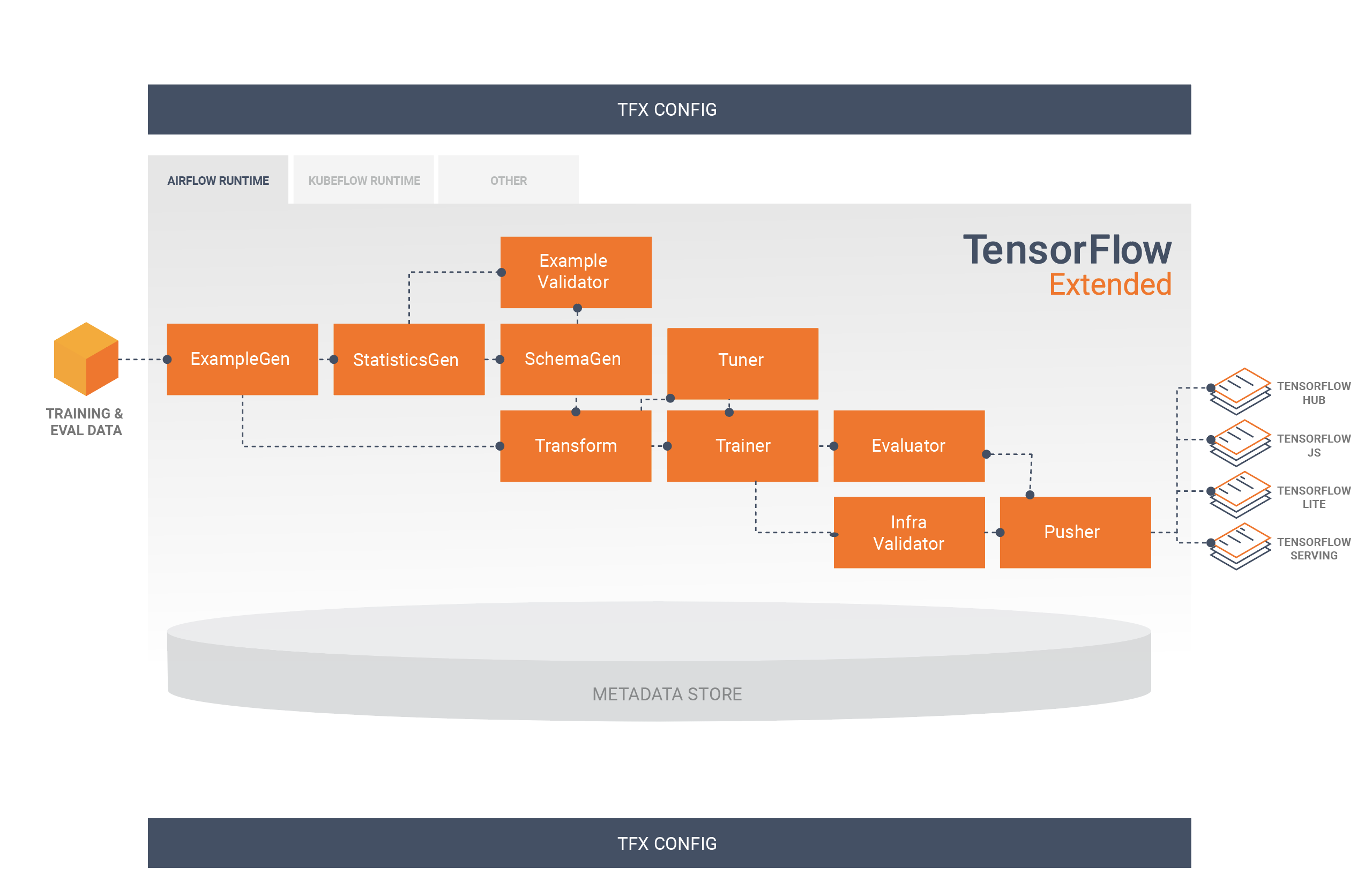

TensorFlow Extended (TFX) is a Google-production-scale machine learning platform based on TensorFlow. It provides a configuration framework to express ML pipelines consisting of TFX components. TFX pipelines can be orchestrated using Apache Airflow and Kubeflow Pipelines. Both the components and the integrations with orchestration systems can be extended.
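One way to picture how an orchestrator can derive a run order for such a pipeline: each component consumes artifacts produced by others, which implies a dependency graph. The sketch below is a toy model built on the standard library, not TFX's orchestration code; the component names and the `upstream` mapping are illustrative assumptions.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each component maps to the set of components
# whose outputs it consumes.
upstream = {
    "ExampleGen": set(),
    "StatisticsGen": {"ExampleGen"},
    "SchemaGen": {"StatisticsGen"},
    "Trainer": {"ExampleGen", "SchemaGen"},
}


def execution_order(dependencies):
    """Return a run order in which every component follows its upstream components."""
    return list(TopologicalSorter(dependencies).static_order())


order = execution_order(upstream)
print(order)  # ['ExampleGen', 'StatisticsGen', 'SchemaGen', 'Trainer']
```

Because the order comes from declared inputs and outputs rather than from the position of components in a list, the same pipeline definition can in principle be handed to different orchestrators.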

TFX components interact with an ML Metadata backend that keeps a record of component runs, input and output artifacts, and runtime configuration. This metadata backend enables advanced functionality like experiment tracking or warmstarting/resuming ML models from previous runs.
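To make the idea of recorded runs concrete, here is a minimal in-memory sketch of how a record of inputs, configuration, and outputs lets a later run reuse earlier results. It is a toy stand-in and does not use the ML Metadata API; `ToyMetadataStore`, `run_component`, and the example values are all hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class ToyMetadataStore:
    """In-memory stand-in for a metadata backend (not the ML Metadata API)."""
    # Maps a fingerprint of (component, inputs, config) to the recorded outputs.
    executions: Dict[str, dict] = field(default_factory=dict)

    @staticmethod
    def fingerprint(component: str, inputs: dict, config: dict) -> str:
        payload = json.dumps([component, inputs, config], sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def lookup(self, key: str) -> Optional[dict]:
        return self.executions.get(key)

    def record(self, key: str, outputs: dict) -> None:
        self.executions[key] = outputs


def run_component(store, component, inputs, config, fn):
    """Run `fn` unless an identical earlier run is recorded; return (outputs, reused)."""
    key = store.fingerprint(component, inputs, config)
    cached = store.lookup(key)
    if cached is not None:
        return cached, True
    outputs = fn(inputs, config)
    store.record(key, outputs)
    return outputs, False


def train(inputs, config):
    # Placeholder for real training: returns a descriptive model name.
    return {"model": f"model-from-{inputs['examples']}-lr{config['lr']}"}


store = ToyMetadataStore()
first, reused_first = run_component(store, "Trainer", {"examples": "data-v1"}, {"lr": 0.1}, train)
second, reused_second = run_component(store, "Trainer", {"examples": "data-v1"}, {"lr": 0.1}, train)
third, reused_third = run_component(store, "Trainer", {"examples": "data-v1"}, {"lr": 0.01}, train)
print(reused_first, reused_second, reused_third)  # False True False
```

The second call is served from the record because its inputs and configuration match the first; changing the configuration in the third call forces a fresh run.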

Documentation

User Documentation

Please see the TFX User Guide.

Development References

Roadmap

The TFX Roadmap, which is updated quarterly.

Release Details

For detailed previous and upcoming changes, please check here

Requests For Comment

TFX is an open-source project and we strongly encourage active participation by the ML community in helping to shape TFX to meet or exceed their needs. An important component of that effort is the RFC process. Please see the listing of current and past TFX RFCs. Please see the TensorFlow Request for Comments (TF-RFC) process page for information on how community members can contribute.

Examples

Compatible versions

The following table describes how the tfx package versions are compatible with its major dependency PyPI packages. This is determined by our testing framework, but other untested combinations may also work.

| tfx | Python | apache-beam[gcp] | ml-metadata | pyarrow | tensorflow | tensorflow-data-validation | tensorflow-metadata | tensorflow-model-analysis | tensorflow-serving-api | tensorflow-transform | tfx-bsl |

|---|---|---|---|---|---|---|---|---|---|---|---|

| GitHub master | >=3.9,<3.11 | 2.59.0 | 1.16.0 | 10.0.1 | nightly (2.x) | 1.16.1 | 1.16.1 | 0.47.0 | 2.16.1 | 1.16.0 | 1.16.1 |

| 1.16.0 | >=3.9,<3.11 | 2.59.0 | 1.16.0 | 10.0.1 | 2.16 | 1.16.1 | 1.16.1 | 0.47.0 | 2.16.1 | 1.16.0 | 1.16.1 |

| 1.15.0 | >=3.9,<3.11 | 2.47.0 | 1.15.0 | 10.0.0 | 2.15 | 1.15.1 | 1.15.0 | 0.46.0 | 2.15.1 | 1.15.0 | 1.15.1 |

| 1.14.0 | >=3.8,<3.11 | 2.47.0 | 1.14.0 | 10.0.0 | 2.13 | 1.14.0 | 1.14.0 | 0.45.0 | 2.9.0 | 1.14.0 | 1.14.0 |

| 1.13.0 | >=3.8,<3.10 | 2.40.0 | 1.13.1 | 6.0.0 | 2.12 | 1.13.0 | 1.13.1 | 0.44.0 | 2.9.0 | 1.13.0 | 1.13.0 |

| 1.12.0 | >=3.7,<3.10 | 2.40.0 | 1.12.0 | 6.0.0 | 2.11 | 1.12.0 | 1.12.0 | 0.43.0 | 2.9.0 | 1.12.0 | 1.12.0 |

| 1.11.0 | >=3.7,<3.10 | 2.40.0 | 1.11.0 | 6.0.0 | 1.15.5 / 2.10.0 | 1.11.0 | 1.11.0 | 0.42.0 | 2.9.0 | 1.11.0 | 1.11.0 |

| 1.10.0 | >=3.7,<3.10 | 2.40.0 | 1.10.0 | 6.0.0 | 1.15.5 / 2.9.0 | 1.10.0 | 1.10.0 | 0.41.0 | 2.9.0 | 1.10.0 | 1.10.0 |

| 1.9.0 | >=3.7,<3.10 | 2.38.0 | 1.9.0 | 5.0.0 | 1.15.5 / 2.9.0 | 1.9.0 | 1.9.0 | 0.40.0 | 2.9.0 | 1.9.0 | 1.9.0 |

| 1.8.0 | >=3.7,<3.10 | 2.38.0 | 1.8.0 | 5.0.0 | 1.15.5 / 2.8.0 | 1.8.0 | 1.8.0 | 0.39.0 | 2.8.0 | 1.8.0 | 1.8.0 |

| 1.7.0 | >=3.7,<3.9 | 2.36.0 | 1.7.0 | 5.0.0 | 1.15.5 / 2.8.0 | 1.7.0 | 1.7.0 | 0.38.0 | 2.8.0 | 1.7.0 | 1.7.0 |

| 1.6.2 | >=3.7,<3.9 | 2.35.0 | 1.6.0 | 5.0.0 | 1.15.5 / 2.8.0 | 1.6.0 | 1.6.0 | 0.37.0 | 2.7.0 | 1.6.0 | 1.6.0 |

| 1.6.0 | >=3.7,<3.9 | 2.35.0 | 1.6.0 | 5.0.0 | 1.15.5 / 2.7.0 | 1.6.0 | 1.6.0 | 0.37.0 | 2.7.0 | 1.6.0 | 1.6.0 |

| 1.5.0 | >=3.7,<3.9 | 2.34.0 | 1.5.0 | 5.0.0 | 1.15.2 / 2.7.0 | 1.5.0 | 1.5.0 | 0.36.0 | 2.7.0 | 1.5.0 | 1.5.0 |

| 1.4.0 | >=3.7,<3.9 | 2.33.0 | 1.4.0 | 5.0.0 | 1.15.0 / 2.6.0 | 1.4.0 | 1.4.0 | 0.35.0 | 2.6.0 | 1.4.0 | 1.4.0 |

| 1.3.4 | >=3.6,<3.9 | 2.32.0 | 1.3.0 | 2.0.0 | 1.15.0 / 2.6.0 | 1.3.0 | 1.2.0 | 0.34.1 | 2.6.0 | 1.3.0 | 1.3.0 |

| 1.3.3 | >=3.6,<3.9 | 2.32.0 | 1.3.0 | 2.0.0 | 1.15.0 / 2.6.0 | 1.3.0 | 1.2.0 | 0.34.1 | 2.6.0 | 1.3.0 | 1.3.0 |

| 1.3.2 | >=3.6,<3.9 | 2.32.0 | 1.3.0 | 2.0.0 | 1.15.0 / 2.6.0 | 1.3.0 | 1.2.0 | 0.34.1 | 2.6.0 | 1.3.0 | 1.3.0 |

| 1.3.1 | >=3.6,<3.9 | 2.32.0 | 1.3.0 | 2.0.0 | 1.15.0 / 2.6.0 | 1.3.0 | 1.2.0 | 0.34.1 | 2.6.0 | 1.3.0 | 1.3.0 |

| 1.3.0 | >=3.6,<3.9 | 2.32.0 | 1.3.0 | 2.0.0 | 1.15.0 / 2.6.0 | 1.3.0 | 1.2.0 | 0.34.1 | 2.6.0 | 1.3.0 | 1.3.0 |

| 1.2.1 | >=3.6,<3.9 | 2.31.0 | 1.2.0 | 2.0.0 | 1.15.0 / 2.5.0 | 1.2.0 | 1.2.0 | 0.33.0 | 2.5.1 | 1.2.0 | 1.2.0 |

| 1.2.0 | >=3.6,<3.9 | 2.31.0 | 1.2.0 | 2.0.0 | 1.15.0 / 2.5.0 | 1.2.0 | 1.2.0 | 0.33.0 | 2.5.1 | 1.2.0 | 1.2.0 |

| 1.0.0 | >=3.6,<3.9 | 2.29.0 | 1.0.0 | 2.0.0 | 1.15.0 / 2.5.0 | 1.0.0 | 1.0.0 | 0.31.0 | 2.5.1 | 1.0.0 | 1.0.0 |

| 0.30.0 | >=3.6,<3.9 | 2.28.0 | 0.30.0 | 2.0.0 | 1.15.0 / 2.4.0 | 0.30.0 | 0.30.0 | 0.30.0 | 2.4.0 | 0.30.0 | 0.30.0 |

| 0.29.0 | >=3.6,<3.9 | 2.28.0 | 0.29.0 | 2.0.0 | 1.15.0 / 2.4.0 | 0.29.0 | 0.29.0 | 0.29.0 | 2.4.0 | 0.29.0 | 0.29.0 |

| 0.28.0 | >=3.6,<3.9 | 2.28.0 | 0.28.0 | 2.0.0 | 1.15.0 / 2.4.0 | 0.28.0 | 0.28.0 | 0.28.0 | 2.4.0 | 0.28.0 | 0.28.1 |

| 0.27.0 | >=3.6,<3.9 | 2.27.0 | 0.27.0 | 2.0.0 | 1.15.0 / 2.4.0 | 0.27.0 | 0.27.0 | 0.27.0 | 2.4.0 | 0.27.0 | 0.27.0 |

| 0.26.4 | >=3.6,<3.9 | 2.28.0 | 0.26.0 | 0.17.0 | 1.15.0 / 2.3.0 | 0.26.1 | 0.26.0 | 0.26.0 | 2.3.0 | 0.26.0 | 0.26.0 |

| 0.26.3 | >=3.6,<3.9 | 2.25.0 | 0.26.0 | 0.17.0 | 1.15.0 / 2.3.0 | 0.26.0 | 0.26.0 | 0.26.0 | 2.3.0 | 0.26.0 | 0.26.0 |

| 0.26.1 | >=3.6,<3.9 | 2.25.0 | 0.26.0 | 0.17.0 | 1.15.0 / 2.3.0 | 0.26.0 | 0.26.0 | 0.26.0 | 2.3.0 | 0.26.0 | 0.26.0 |

| 0.26.0 | >=3.6,<3.9 | 2.25.0 | 0.26.0 | 0.17.0 | 1.15.0 / 2.3.0 | 0.26.0 | 0.26.0 | 0.26.0 | 2.3.0 | 0.26.0 | 0.26.0 |

| 0.25.0 | >=3.6,<3.9 | 2.25.0 | 0.24.0 | 0.17.0 | 1.15.0 / 2.3.0 | 0.25.0 | 0.25.0 | 0.25.0 | 2.3.0 | 0.25.0 | 0.25.0 |

| 0.24.1 | >=3.6,<3.9 | 2.24.0 | 0.24.0 | 0.17.0 | 1.15.0 / 2.3.0 | 0.24.1 | 0.24.0 | 0.24.3 | 2.3.0 | 0.24.1 | 0.24.1 |

| 0.24.0 | >=3.6,<3.9 | 2.24.0 | 0.24.0 | 0.17.0 | 1.15.0 / 2.3.0 | 0.24.1 | 0.24.0 | 0.24.3 | 2.3.0 | 0.24.1 | 0.24.1 |

| 0.23.1 | >=3.5,<4 | 2.24.0 | 0.23.0 | 0.17.0 | 1.15.0 / 2.3.0 | 0.23.1 | 0.23.0 | 0.23.0 | 2.3.0 | 0.23.0 | 0.23.0 |

| 0.23.0 | >=3.5,<4 | 2.23.0 | 0.23.0 | 0.17.0 | 1.15.0 / 2.3.0 | 0.23.0 | 0.23.0 | 0.23.0 | 2.3.0 | 0.23.0 | 0.23.0 |

| 0.22.2 | >=3.5,<4 | 2.21.0 | 0.22.1 | 0.16.0 | 1.15.0 / 2.2.0 | 0.22.2 | 0.22.2 | 0.22.2 | 2.2.0 | 0.22.0 | 0.22.1 |

| 0.22.1 | >=3.5,<4 | 2.21.0 | 0.22.1 | 0.16.0 | 1.15.0 / 2.2.0 | 0.22.2 | 0.22.2 | 0.22.2 | 2.2.0 | 0.22.0 | 0.22.1 |

| 0.22.0 | >=3.5,<4 | 2.21.0 | 0.22.0 | 0.16.0 | 1.15.0 / 2.2.0 | 0.22.0 | 0.22.0 | 0.22.1 | 2.2.0 | 0.22.0 | 0.22.0 |

| 0.21.5 | >=2.7,<3 or >=3.5,<4 | 2.17.0 | 0.21.2 | 0.15.0 | 1.15.0 / 2.1.0 | 0.21.5 | 0.21.1 | 0.21.5 | 2.1.0 | 0.21.2 | 0.21.4 |

| 0.21.4 | >=2.7,<3 or >=3.5,<4 | 2.17.0 | 0.21.2 | 0.15.0 | 1.15.0 / 2.1.0 | 0.21.5 | 0.21.1 | 0.21.5 | 2.1.0 | 0.21.2 | 0.21.4 |

| 0.21.3 | >=2.7,<3 or >=3.5,<4 | 2.17.0 | 0.21.2 | 0.15.0 | 1.15.0 / 2.1.0 | 0.21.5 | 0.21.1 | 0.21.5 | 2.1.0 | 0.21.2 | 0.21.4 |

| 0.21.2 | >=2.7,<3 or >=3.5,<4 | 2.17.0 | 0.21.2 | 0.15.0 | 1.15.0 / 2.1.0 | 0.21.5 | 0.21.1 | 0.21.5 | 2.1.0 | 0.21.2 | 0.21.4 |

| 0.21.1 | >=2.7,<3 or >=3.5,<4 | 2.17.0 | 0.21.2 | 0.15.0 | 1.15.0 / 2.1.0 | 0.21.4 | 0.21.1 | 0.21.4 | 2.1.0 | 0.21.2 | 0.21.3 |

| 0.21.0 | >=2.7,<3 or >=3.5,<4 | 2.17.0 | 0.21.0 | 0.15.0 | 1.15.0 / 2.1.0 | 0.21.0 | 0.21.0 | 0.21.1 | 2.1.0 | 0.21.0 | 0.21.0 |

| 0.15.0 | >=2.7,<3 or >=3.5,<4 | 2.16.0 | 0.15.0 | 0.15.0 | 1.15.0 | 0.15.0 | 0.15.0 | 0.15.2 | 1.15.0 | 0.15.0 | 0.15.1 |

| 0.14.0 | >=2.7,<3 or >=3.5,<4 | 2.14.0 | 0.14.0 | 0.14.0 | 1.14.0 | 0.14.1 | 0.14.0 | 0.14.0 | 1.14.0 | 0.14.0 | n/a |

| 0.13.0 | >=2.7,<3 or >=3.5,<4 | 2.12.0 | 0.13.2 | n/a | 1.13.1 | 0.13.1 | 0.13.0 | 0.13.2 | 1.13.0 | 0.13.0 | n/a |

| 0.12.0 | >=2.7,<3 | 2.10.0 | 0.13.2 | n/a | 1.12.0 | 0.12.0 | 0.12.1 | 0.12.1 | 1.12.0 | 0.12.0 | n/a |
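As a small illustration of how the Python column can be read programmatically, the sketch below copies four rows from the table above into a dictionary and checks an interpreter version against them. The names `PYTHON_RANGES` and `python_supported` are hypothetical helpers, not part of TFX, and only the rows shown are covered.

```python
import sys

# Rows copied from the table above:
# tfx version -> (minimum Python, inclusive; maximum Python, exclusive).
PYTHON_RANGES = {
    "1.16.0": ((3, 9), (3, 11)),
    "1.14.0": ((3, 8), (3, 11)),
    "1.12.0": ((3, 7), (3, 10)),
    "1.3.0": ((3, 6), (3, 9)),
}


def python_supported(tfx_version, python_version):
    """Return True if the (major, minor, ...) Python version is in the tested range."""
    low, high = PYTHON_RANGES[tfx_version]
    return low <= tuple(python_version[:2]) < high


if __name__ == "__main__":
    current = sys.version_info
    for tfx_version in PYTHON_RANGES:
        status = "tested" if python_supported(tfx_version, current) else "not tested"
        print(f"tfx {tfx_version} on Python {current.major}.{current.minor}: {status}")
```

A False result only means the combination is outside the tested range; as the text above notes, untested combinations may still work.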

Resources
