Top Related Projects

Pretrained models for TensorFlow.js

OpenPose: Real-time multi-person keypoint detection library for body, face, hands, and foot estimation

Code repo for realtime multi-person pose estimation in CVPR'17 (Oral)

A real-time approach for mapping all human pixels of 2D RGB images to a 3D surface-based model of the body

Human Pose estimation with TensorFlow framework

Quick Overview

Pose Animator is an open-source project that brings illustrations to life using PoseNet and FaceMesh machine learning models. It allows users to create animated characters that mimic human movements captured through a webcam, making it possible to generate real-time animations without traditional motion capture equipment.

Pros

- Easy to use and accessible for beginners in animation and machine learning

- Real-time animation capabilities using just a webcam

- Customizable character designs and animations

- Open-source, allowing for community contributions and modifications

Cons

- Limited to 2D animations

- Accuracy may vary depending on lighting conditions and webcam quality

- Requires a relatively powerful computer for smooth performance

- May raise privacy concerns because it relies on webcam input

Code Examples

// Initialize PoseNet

const net = await posenet.load();

// Detect poses in an image

const poses = await net.estimatePoses(imageElement);

// Get keypoints from the first detected pose

const keypoints = poses[0].keypoints;

// Initialize FaceMesh

const model = await facemesh.load();

// Detect facial landmarks

const predictions = await model.estimateFaces(imageElement);

// Get facial landmarks from the first detected face

const landmarks = predictions[0].scaledMesh;

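// Animate character based on detected pose and facial landmarks.

// `character` and `renderer` are placeholders for your own scene objects.

function animate(pose, face) {

  // PoseNet returns keypoints as an array, so look up the nose by part name

  const nose = pose.keypoints.find((kp) => kp.part === 'nose');

  // Update character's body position

  if (nose) character.body.position = nose.position;

  // Update character's facial expression

  character.face.updateExpression(face);

  // Render the animated character

  renderer.render(character);

}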
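The snippets above read keypoints by array index. A small helper that indexes them by part name can make the `animate` step easier to write. The sketch below assumes the PoseNet keypoint shape (`part`, `score`, `position`); the helper name `keypointsByPart` and the `minScore` default of 0.3 are illustrative choices, not part of Pose Animator or PoseNet.

```javascript
// Hypothetical helper: index PoseNet-style keypoints by part name,
// dropping any keypoint whose confidence score is below minScore.
function keypointsByPart(keypoints, minScore = 0.3) {
  const byPart = {};
  for (const kp of keypoints) {
    if (kp.score >= minScore) {
      byPart[kp.part] = kp.position;
    }
  }
  return byPart;
}

// Example with hand-written data in the same shape PoseNet returns.
const sampleKeypoints = [
  { part: 'nose', score: 0.98, position: { x: 120, y: 80 } },
  { part: 'leftEye', score: 0.12, position: { x: 110, y: 70 } },
];
const parts = keypointsByPart(sampleKeypoints);
console.log(parts.nose);          // { x: 120, y: 80 }
console.log('leftEye' in parts);  // false (score below threshold)
```

Filtering by score up front means later code can treat a missing part as "not visible in this frame" instead of acting on a low-confidence guess.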

Getting Started

- Clone the repository:

git clone https://github.com/yemount/pose-animator.git

- Install dependencies:

cd pose-animator

yarn

- Run the development server:

yarn watch

- Open your browser and navigate to http://localhost:1234 to see the Pose Animator in action.

Competitor Comparisons

Pretrained models for TensorFlow.js

Pros of tfjs-models

- Broader scope with multiple pre-trained models for various tasks

- Backed by TensorFlow, offering better integration with the TensorFlow ecosystem

- More active development and community support

Cons of tfjs-models

- May be more complex to use for specific pose animation tasks

- Larger package size due to inclusion of multiple models

- Potentially higher computational requirements

Code Comparison

pose-animator:

const pose = await poseDetector.estimatePoses(image);

const keypoints = pose[0].keypoints;

animator.animate(keypoints);

tfjs-models:

const net = await posenet.load();

const pose = await net.estimateSinglePose(image);

const keypoints = pose.keypoints;

// Additional processing required for animation

Summary

tfjs-models offers a more comprehensive set of pre-trained models and better integration with TensorFlow, but may be overkill for simple pose animation tasks. pose-animator is more focused on its specific use case, potentially offering a simpler implementation for pose-based animation. The choice between the two depends on the project's requirements and the developer's familiarity with TensorFlow.

OpenPose: Real-time multi-person keypoint detection library for body, face, hands, and foot estimation

Pros of OpenPose

- More comprehensive body pose estimation, including face and hand keypoints

- Higher accuracy and robustness in complex scenarios

- Supports multi-person detection and tracking

Cons of OpenPose

- Requires more computational resources and may be slower

- More complex setup and installation process

- Less suitable for real-time applications on low-end devices

Code Comparison

OpenPose (C++):

#include <openpose/pose/poseExtractor.hpp>

auto poseExtractor = op::PoseExtractorCaffe::getInstance(poseModel, netInputSize, outputSize, keypointScaleMode, num_gpu_start);

poseExtractor->forwardPass(netInputArray, imageSize, scaleInputToNetInputs);

Pose Animator (JavaScript):

import * as posenet from '@tensorflow-models/posenet';

const net = await posenet.load();

const pose = await net.estimateSinglePose(imageElement);

Summary

OpenPose offers more detailed pose estimation but requires more setup, while Pose Animator is simpler to use and better suited for lightweight applications. OpenPose is ideal for research and complex scenarios, whereas Pose Animator is more appropriate for web-based or mobile applications requiring basic pose estimation.

Code repo for realtime multi-person pose estimation in CVPR'17 (Oral)

Pros of Realtime_Multi-Person_Pose_Estimation

- Supports real-time multi-person pose estimation

- Utilizes advanced deep learning techniques for accurate pose detection

- Provides a more comprehensive solution for complex scenes with multiple subjects

Cons of Realtime_Multi-Person_Pose_Estimation

- Higher computational requirements due to complex algorithms

- May have a steeper learning curve for implementation and customization

- Less focused on animation and more on raw pose estimation

Code Comparison

Pose-animator:

const videoElement = document.getElementById('video');

const canvasElement = document.getElementById('canvas');

const ctx = canvasElement.getContext('2d');

poseNet.on('pose', (results) => {

ctx.clearRect(0, 0, canvasElement.width, canvasElement.height);

drawKeypoints(results);

});

Realtime_Multi-Person_Pose_Estimation:

import cv2

import numpy as np

from scipy.ndimage import gaussian_filter

def process(input_image, params, model_params):

    # Placeholder buffers: 19 heatmap channels and 38 part-affinity-field (PAF) channels

    heatmaps = np.zeros((input_image.shape[0], input_image.shape[1], 19))

    pafs = np.zeros((input_image.shape[0], input_image.shape[1], 38))

    return heatmaps, pafs

Summary

The code snippets highlight the different approaches: Pose-animator focuses on real-time animation using JavaScript, while Realtime_Multi-Person_Pose_Estimation uses Python for more complex image processing and pose estimation.

A real-time approach for mapping all human pixels of 2D RGB images to a 3D surface-based model of the body

Pros of DensePose

- More advanced and accurate 3D human body pose estimation

- Provides dense correspondence between 2D image pixels and 3D surface

- Backed by Facebook's research team, likely more robust and well-maintained

Cons of DensePose

- More complex to set up and use

- Requires more computational resources

- May be overkill for simpler animation projects

Code Comparison

Pose-animator (JavaScript):

const videoElement = document.getElementsByClassName('input_video')[0];

const canvasElement = document.getElementsByClassName('output_canvas')[0];

const canvasCtx = canvasElement.getContext('2d');

function onResults(results) {

// Process pose estimation results

}

DensePose (Python):

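from detectron2.config import get_cfg

from detectron2.engine import DefaultPredictor

from densepose import add_densepose_config

cfg = get_cfg()

# Register the DensePose-specific config keys before loading a DensePose YAML

add_densepose_config(cfg)

cfg.merge_from_file("path/to/densepose_rcnn_R_50_FPN_s1x.yaml")

predictor = DefaultPredictor(cfg)

outputs = predictor(image)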

Summary

Pose-animator is a simpler, JavaScript-based solution for 2D pose animation, while DensePose is a more advanced Python-based tool for dense 3D human body pose estimation. Pose-animator is easier to integrate into web projects, while DensePose offers more accurate and detailed results at the cost of increased complexity and resource requirements.

Human Pose estimation with TensorFlow framework

Pros of pose-tensorflow

- More comprehensive and flexible implementation of pose estimation

- Supports multiple models and architectures for different use cases

- Better suited for research and custom applications

Cons of pose-tensorflow

- More complex setup and usage compared to pose-animator

- Requires more computational resources and expertise to run effectively

- Less focus on real-time animation and visualization

Code Comparison

pose-tensorflow:

import tensorflow as tf

from pose_tensorflow.nnet import predict

from pose_tensorflow.config import load_config

cfg = load_config("path/to/config.yaml")

sess, inputs, outputs = predict.setup_pose_prediction(cfg)

pose-animator:

import * as posenet from '@tensorflow-models/posenet';

const net = await posenet.load();

const pose = await net.estimateSinglePose(imageElement);

Summary

pose-tensorflow offers a more robust and flexible framework for pose estimation, suitable for research and custom applications. However, it requires more setup and expertise. pose-animator, on the other hand, provides a simpler interface focused on real-time animation, making it more accessible for quick implementations and creative projects.

README

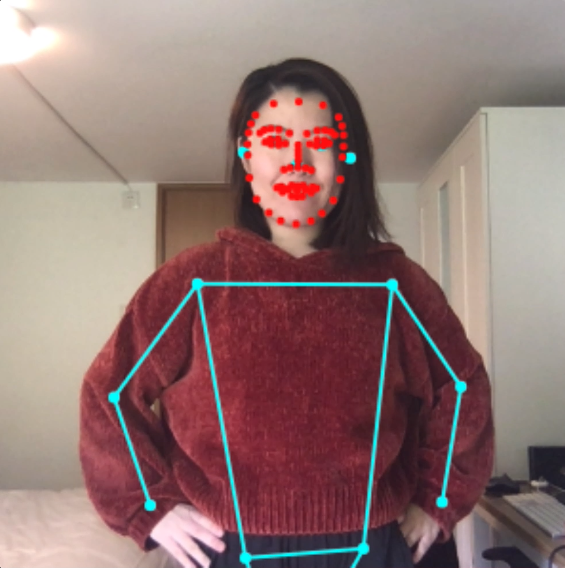

Pose Animator

Pose Animator takes a 2D vector illustration and animates its containing curves in real-time based on the recognition result from PoseNet and FaceMesh. It borrows the idea of skeleton-based animation from computer graphics and applies it to vector characters.

This is running in the browser in realtime using TensorFlow.js. Check out more cool TF.js demos here.

This is not an officially supported Google product.

In skeletal animation a character is represented in two parts:

- a surface used to draw the character, and

- a hierarchical set of interconnected bones used to animate the surface.

In Pose Animator, the surface is defined by the 2D vector paths in the input SVG files. For the bone structure, Pose Animator provides a predefined rig (bone hierarchy) representation, designed based on the keypoints from PoseNet and FaceMesh. This bone structure's initial pose is specified in the input SVG file, along with the character illustration, while the real-time bone positions are updated by the recognition result from ML models.
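To make the surface/bone split concrete, here is a minimal sketch of linear blend skinning, one common way to drive a surface from bones. It illustrates the general technique and is not taken from Pose Animator's code; the function names, the bone representation (rest and current endpoints), and the weights are all assumptions made for the example.

```javascript
// Express point p relative to a bone's rest segment, then rebuild it
// relative to the bone's current segment (translation, rotation, scale).
function transformByBone(p, bone) {
  const { restA, restB, curA, curB } = bone;
  const rx = restB.x - restA.x, ry = restB.y - restA.y;
  const cx = curB.x - curA.x, cy = curB.y - curA.y;
  const restLenSq = rx * rx + ry * ry;
  // Local coordinates: t along the bone, s along its perpendicular.
  const dx = p.x - restA.x, dy = p.y - restA.y;
  const t = (dx * rx + dy * ry) / restLenSq;
  const s = (-dx * ry + dy * rx) / restLenSq;
  return {
    x: curA.x + t * cx - s * cy,
    y: curA.y + t * cy + s * cx,
  };
}

// Linear blend skinning: weighted average of the per-bone results.
function skinPoint(p, influences) {
  let x = 0, y = 0, total = 0;
  for (const { bone, weight } of influences) {
    const q = transformByBone(p, bone);
    x += weight * q.x;
    y += weight * q.y;
    total += weight;
  }
  return { x: x / total, y: y / total };
}

// A bone that moved 10 units to the right without rotating.
const arm = {
  restA: { x: 0, y: 0 }, restB: { x: 10, y: 0 },
  curA: { x: 10, y: 0 }, curB: { x: 20, y: 0 },
};
const moved = skinPoint({ x: 5, y: 2 }, [{ bone: arm, weight: 1 }]);
console.log(moved); // { x: 15, y: 2 }
```

In this picture the rest endpoints would come from the skeleton drawn in the SVG, and the current endpoints from the keypoints detected in each frame.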

For more details on its technical design, please check out this blog post.

Demo 1: Camera feed

The camera demo animates a 2D avatar in real-time from a webcam video stream.
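Per-frame estimates from a webcam stream tend to jitter, which shows up directly in an animated avatar. The sketch below applies exponential smoothing to keypoint positions between frames. This is a generic technique offered as an assumption; the README does not say whether the demo does this, and the names `createSmoother` and `alpha` are hypothetical.

```javascript
// Hypothetical smoother: blends each new position with the previous
// smoothed one. alpha = 1 means no smoothing; smaller values smooth more.
function createSmoother(alpha = 0.5) {
  const previous = new Map(); // part name -> last smoothed position
  return function smooth(keypoints) {
    return keypoints.map((kp) => {
      const last = previous.get(kp.part);
      const position = last
        ? {
            x: alpha * kp.position.x + (1 - alpha) * last.x,
            y: alpha * kp.position.y + (1 - alpha) * last.y,
          }
        : { ...kp.position };
      previous.set(kp.part, position);
      return { ...kp, position };
    });
  };
}

// Two consecutive frames in which the nose moves 10 pixels to the right.
const smoothFrame = createSmoother(0.5);
smoothFrame([{ part: 'nose', score: 0.9, position: { x: 100, y: 50 } }]);
const second = smoothFrame([
  { part: 'nose', score: 0.9, position: { x: 110, y: 50 } },
]);
console.log(second[0].position); // { x: 105, y: 50 }
```

A lower `alpha` gives steadier motion at the cost of the avatar lagging behind fast movements.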

Demo 2: Static image

The static image demo shows the avatar positioned from a single image.

Build And Run

Install dependencies and prepare the build directory:

yarn

To watch files for changes, and launch a dev server:

yarn watch

Platform support

Demos are supported on Desktop Chrome and iOS Safari.

It should also run on Chrome on Android, and potentially on other Android mobile browsers, though support has not been tested yet.

Animate your own design

1. Download the sample skeleton SVG here.

2. Create a new file in your vector graphics editor of choice. Copy the group named "skeleton" from the above file into your working file. Note:

   - Do not add, remove or rename the joints (circles) in this group. Pose Animator relies on these named paths to read the skeleton's initial position. Missing joints will cause errors.

   - However, you can move the joints around to embed them into your illustration. See step 4.

3. Create a new group and name it "illustration", next to the "skeleton" group. This is the group where you can put all the paths for your illustration.

   - Flatten all subgroups so that "illustration" only contains path elements.

   - Composite paths are not supported at the moment.

   - The working file structure should look like this:

   [Layer 1]
   |---- skeleton
   |---- illustration
         |---- path 1
         |---- path 2
         |---- path 3

4. Embed the sample skeleton in the "skeleton" group into your illustration by moving the joints around.

5. Export the file as an SVG file.

6. Open the Pose Animator camera demo. Once everything loads, drop your SVG file into the browser tab. You should be able to see it come to life :D
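The layer rules in the steps above can be checked mechanically before exporting. The sketch below works on a plain object tree standing in for the editor's layer structure; that representation (`name`, `type`, `children`) and the function name `checkLayerStructure` are hypothetical, and the code does not parse real SVG files or verify joint names.

```javascript
// Hypothetical check of the layer layout described above: the root must
// contain a "skeleton" group and an "illustration" group, and the
// "illustration" group may only contain path elements (no subgroups).
function checkLayerStructure(root) {
  const problems = [];
  const children = root.children || [];
  const skeleton = children.find((c) => c.name === 'skeleton');
  const illustration = children.find((c) => c.name === 'illustration');
  if (!skeleton) problems.push('missing "skeleton" group');
  if (!illustration) {
    problems.push('missing "illustration" group');
  } else {
    for (const child of illustration.children || []) {
      if (child.type !== 'path') {
        problems.push(`"${child.name}" is a ${child.type}, expected a path`);
      }
    }
  }
  return problems;
}

// Example: "illustration" still contains an unflattened subgroup.
const layer = {
  name: 'Layer 1', type: 'group',
  children: [
    { name: 'skeleton', type: 'group', children: [] },
    { name: 'illustration', type: 'group', children: [
      { name: 'path 1', type: 'path' },
      { name: 'hair', type: 'group', children: [] },
    ] },
  ],
};
console.log(checkLayerStructure(layer));
// [ '"hair" is a group, expected a path' ]
```

An empty result means the layout matches the structure shown in step 3.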
