Top Related Projects

Code and data for paper "Deep Photo Style Transfer": https://arxiv.org/abs/1703.07511

Neural Style and MSG-Net

TensorFlow CNN for fast style transfer ⚡🖥🎨🖼

Feedforward style transfer

Arbitrary Style Transfer in Real-time with Adaptive Instance Normalization

Quick Overview

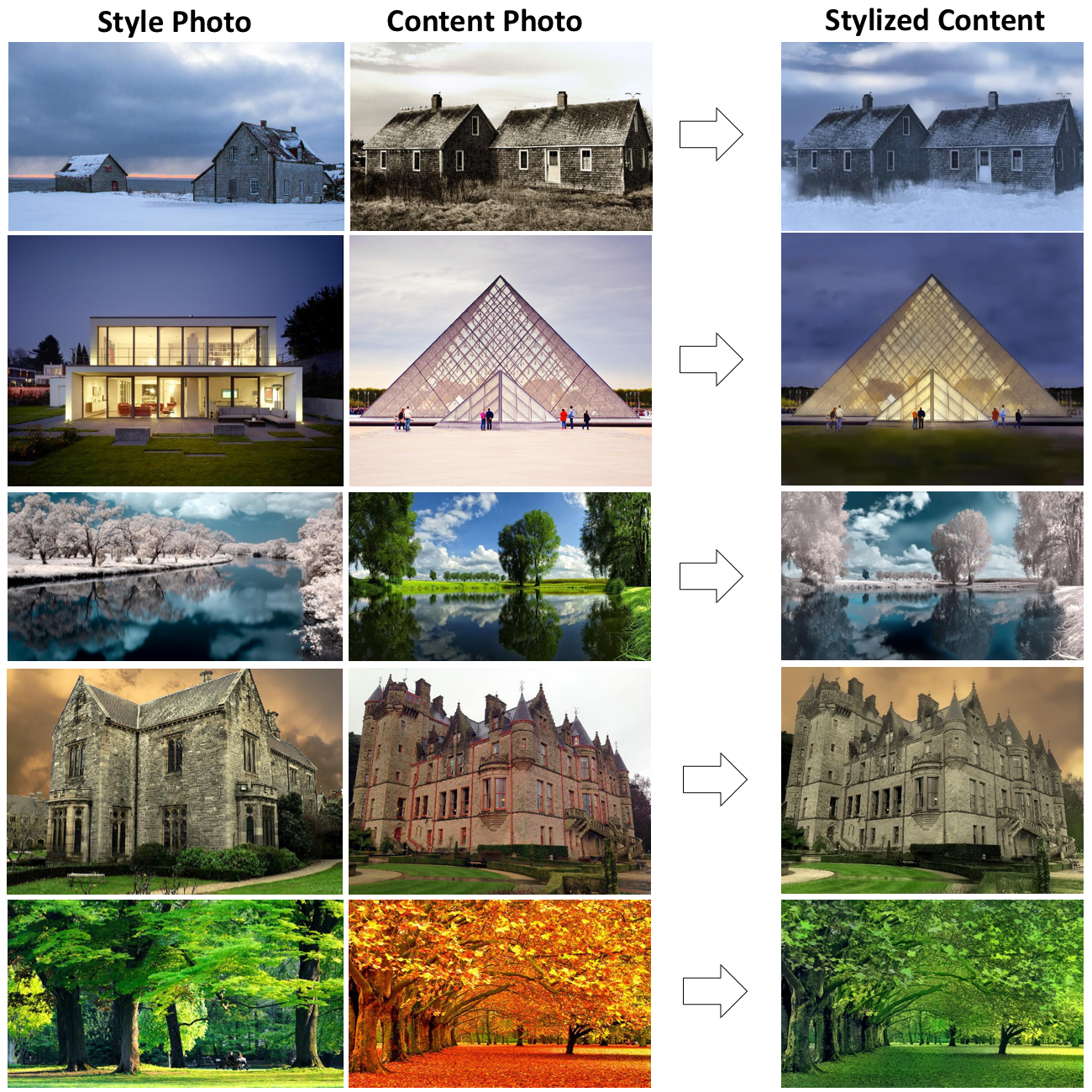

FastPhotoStyle is an NVIDIA project that implements a fast photorealistic style transfer algorithm. It transfers the style of one photo onto another while preserving photorealism, and it produces high-quality results in a fraction of the time needed by slower approaches such as deep-photo-styletransfer.

Pros

- Fast processing time, enabling real-time or near-real-time style transfer

- Produces high-quality, photorealistic results

- Supports both photo-to-photo and sketch-to-photo style transfer

- Implemented in PyTorch, allowing for easy integration with other deep learning projects

Cons

- Requires a powerful GPU for optimal performance

- Limited documentation and examples for advanced usage

- May struggle with extreme style differences or complex images

- Dependency on specific versions of libraries, which may cause compatibility issues

Code Examples

- Basic style transfer:

from photo_style import stylize

content_image = 'path/to/content.jpg'

style_image = 'path/to/style.jpg'

output_image = 'path/to/output.jpg'

stylize(content_image, style_image, output_image)

- Adjusting style strength:

from photo_style import stylize

stylize(content_image, style_image, output_image, style_strength=0.8)

- Using sketch-to-photo transfer:

from photo_style import sketch_to_photo

sketch_image = 'path/to/sketch.jpg'

style_image = 'path/to/style.jpg'

output_image = 'path/to/output.jpg'

sketch_to_photo(sketch_image, style_image, output_image)
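The `style_strength=0.8` argument in the second example is not explained on this page. One common way to expose such a knob is linear interpolation between the content image and the fully stylized result. The following is a minimal sketch under that assumption; the function name `blend_with_strength` and the blending rule are hypothetical and are not taken from the FastPhotoStyle code.

```python
import numpy as np

def blend_with_strength(content, stylized, style_strength=0.8):
    """Linearly interpolate between a content image and its stylized version.

    Assumption: this blending rule is illustrative only. It is not confirmed
    to be how FastPhotoStyle interprets a strength value.
    """
    if not 0.0 <= style_strength <= 1.0:
        raise ValueError("style_strength must be in [0, 1]")
    content = np.asarray(content, dtype=np.float64)
    stylized = np.asarray(stylized, dtype=np.float64)
    if content.shape != stylized.shape:
        raise ValueError("content and stylized must have the same shape")
    # 0.0 returns the content unchanged, 1.0 returns the stylized image.
    return (1.0 - style_strength) * content + style_strength * stylized
```

With this rule, a strength of 0 leaves the photo untouched and a strength of 1 applies the full stylization, so intermediate values trade style for fidelity to the original.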

Getting Started

- Clone the repository:

git clone https://github.com/NVIDIA/FastPhotoStyle.git

cd FastPhotoStyle

- Install dependencies:

pip install -r requirements.txt

- Download pre-trained models:

python download_models.py

- Run the style transfer:

from photo_style import stylize

stylize('content.jpg', 'style.jpg', 'output.jpg')

Competitor Comparisons

Code and data for paper "Deep Photo Style Transfer": https://arxiv.org/abs/1703.07511

Pros of deep-photo-styletransfer

- Produces high-quality results with detailed preservation of content structure

- Offers more control over the stylization process through various parameters

- Suitable for academic research and experimentation in photo-realistic style transfer

Cons of deep-photo-styletransfer

- Slower processing time compared to FastPhotoStyle

- Requires more computational resources and technical expertise to set up and use

- Less user-friendly for non-technical users or those seeking quick results

Code Comparison

deep-photo-styletransfer:

local content_image = image.load(params.content_image, 3)

local style_image = image.load(params.style_image, 3)

local content_layers = params.content_layers

local style_layers = params.style_layers

FastPhotoStyle:

from photo_style import stylize

content_image = load_image(args.content)

style_image = load_image(args.style)

output = stylize(content_image, style_image)

FastPhotoStyle offers a more streamlined API and easier integration, while deep-photo-styletransfer provides more granular control over the process. FastPhotoStyle is generally faster and more accessible for practical applications, whereas deep-photo-styletransfer may be preferred for research or when fine-tuning the style transfer process is required.

Neural Style and MSG-Net

Pros of PyTorch-Multi-Style-Transfer

- Supports multiple style transfer techniques, offering more versatility

- Implemented in PyTorch, which may be preferred by some developers

- Includes pre-trained models for quick experimentation

Cons of PyTorch-Multi-Style-Transfer

- May have slower processing times for large images

- Less focus on photorealistic results compared to FastPhotoStyle

- Potentially more complex to use due to multiple style transfer options

Code Comparison

FastPhotoStyle:

from photo_style import stylize

stylize(content='input.png', style='style.png', output='output.png')

PyTorch-Multi-Style-Transfer:

from net import Net

model = Net(ngf=128)

model.load_state_dict(torch.load('model.pth'))

output = model(content, style)

The FastPhotoStyle code appears more straightforward, while PyTorch-Multi-Style-Transfer offers more flexibility but may require additional setup.

TensorFlow CNN for fast style transfer ⚡🖥🎨🖼

Pros of fast-style-transfer

- Simpler implementation, making it easier for beginners to understand and use

- Faster training process, allowing for quicker experimentation with different styles

- Supports both image and video stylization

Cons of fast-style-transfer

- Generally produces lower quality results compared to FastPhotoStyle

- Less control over the stylization process and output

- Limited to a single style per model, requiring separate training for each style

Code Comparison

FastPhotoStyle:

from photo_wct import PhotoWCT

p_wct = PhotoWCT()

p_wct.load_state_dict(torch.load('models/photo_wct.pth'))

stylized = p_wct.transfer(content, style, csF=1.0)

fast-style-transfer:

import style

stylizer = style.StyleTransfer(model_path)

stylized = stylizer.transfer_style(content_image)

Both repositories focus on neural style transfer, but FastPhotoStyle offers more advanced features and generally produces higher quality results at the cost of increased complexity. fast-style-transfer is simpler to use and faster to train, making it more accessible for beginners or those looking for quick stylization results.

Feedforward style transfer

Pros of fast-neural-style

- Simpler implementation, making it easier to understand and modify

- Faster training and inference times for basic style transfer tasks

- Supports both training new models and using pre-trained models

Cons of fast-neural-style

- Limited to artistic style transfer, lacking photorealistic capabilities

- Fewer advanced features than FastPhotoStyle

- May produce lower quality results for complex style transfers

Code Comparison

FastPhotoStyle:

from photo_style import stylize

stylized = stylize(content, style, content_masks, style_masks)

fast-neural-style:

local style_img = image.load('examples/inputs/starry_night.jpg', 3)

local content_img = image.load('examples/inputs/stanford_quad.jpg', 3)

local output = net:forward({content_img, style_img})

FastPhotoStyle offers a more streamlined API with mask support, while fast-neural-style uses a Lua-based implementation with separate content and style inputs. FastPhotoStyle's approach is more suitable for photorealistic style transfer, whereas fast-neural-style is better for artistic style transfer.

Arbitrary Style Transfer in Real-time with Adaptive Instance Normalization

Pros of AdaIN-style

- Faster training and inference times due to simpler architecture

- More flexible in handling various artistic styles

- Produces smoother and more coherent stylized images

Cons of AdaIN-style

- Less photorealistic results compared to FastPhotoStyle

- May struggle with preserving fine details in complex scenes

- Limited control over specific style transfer parameters

Code Comparison

AdaIN-style:

def calc_mean_std(feat, eps=1e-5):
    size = feat.size()
    assert (len(size) == 4)
    N, C = size[:2]
    feat_var = feat.view(N, C, -1).var(dim=2) + eps
    feat_std = feat_var.sqrt().view(N, C, 1, 1)
    feat_mean = feat.view(N, C, -1).mean(dim=2).view(N, C, 1, 1)
    return feat_mean, feat_std
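The statistics helper above is the building block of adaptive instance normalization: content features are normalized per channel and then rescaled to the style's per-channel mean and standard deviation. A minimal NumPy sketch of that step follows. The name `adaptive_instance_norm` is chosen here for illustration, and NumPy's variance is the biased estimate, so values can differ slightly from a PyTorch version.

```python
import numpy as np

def calc_mean_std(feat, eps=1e-5):
    """Per-sample, per-channel mean and std of an (N, C, H, W) array."""
    assert feat.ndim == 4
    n, c = feat.shape[:2]
    flat = feat.reshape(n, c, -1)
    # eps keeps the square root (and the later division) numerically safe.
    std = np.sqrt(flat.var(axis=2) + eps).reshape(n, c, 1, 1)
    mean = flat.mean(axis=2).reshape(n, c, 1, 1)
    return mean, std

def adaptive_instance_norm(content_feat, style_feat, eps=1e-5):
    """Shift and scale content features to match the style channel statistics."""
    assert content_feat.shape[:2] == style_feat.shape[:2]
    c_mean, c_std = calc_mean_std(content_feat, eps)
    s_mean, s_std = calc_mean_std(style_feat, eps)
    normalized = (content_feat - c_mean) / c_std
    return normalized * s_std + s_mean
```

Because only first- and second-order channel statistics are matched, the operation is cheap, which is consistent with the speed advantage listed above.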

FastPhotoStyle:

void PhotoWCT::Whiten(const float* ContentIN, float* ContentOUT, const int N, const int C, const int H, const int W)

{

const int HW = H * W;

Eigen::MatrixXf Content = Eigen::Map<const Eigen::MatrixXf>(ContentIN, C, HW);

Eigen::JacobiSVD<Eigen::MatrixXf> svd(Content, Eigen::ComputeThinU | Eigen::ComputeThinV);

Eigen::MatrixXf WhitenContent = svd.matrixU() * Eigen::MatrixXf::Identity(C, HW);

Eigen::Map<Eigen::MatrixXf>(ContentOUT, C, HW) = WhitenContent;

}
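The `Whiten` snippet above names a whitening step. For reference, here is a minimal NumPy sketch of a generic whitening and coloring transform on a (C, H*W) feature matrix: center the content features, whiten them using the eigendecomposition of their covariance, then color them with the style covariance and add the style mean. This illustrates the general technique only. The function names and the `eps` regularizer are assumptions, and this is not the repository's implementation.

```python
import numpy as np

def _cov_power(centered, power, eps=1e-5):
    """Return cov**power for a centered (C, HW) matrix via eigendecomposition."""
    c, hw = centered.shape
    # eps * I keeps the covariance positive definite so negative powers are safe.
    cov = centered @ centered.T / (hw - 1) + eps * np.eye(c)
    eigvals, eigvecs = np.linalg.eigh(cov)
    # V @ diag(eigvals**power) @ V.T, written with broadcasting over columns.
    return (eigvecs * eigvals ** power) @ eigvecs.T

def whiten_and_color(content, style, eps=1e-5):
    """Give content features the mean and covariance of the style features."""
    c_mean = content.mean(axis=1, keepdims=True)
    s_mean = style.mean(axis=1, keepdims=True)
    content_c = content - c_mean
    style_c = style - s_mean
    # Whitening: the result has (approximately) identity covariance.
    whitened = _cov_power(content_c, -0.5, eps) @ content_c
    # Coloring: impose the style covariance, then restore the style mean.
    colored = _cov_power(style_c, 0.5, eps) @ whitened
    return colored + s_mean
```

Unlike the per-channel statistics used by AdaIN, this transform matches the full channel covariance, including correlations between channels.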

README

FastPhotoStyle

License

Copyright (C) 2018 NVIDIA Corporation. All rights reserved. Licensed under the CC BY-NC-SA 4.0 license (https://creativecommons.org/licenses/by-nc-sa/4.0/legalcode).

What's new

| Date | News |
|---|---|
| 2018-07-25 | Migrate to PyTorch 0.4.0. PyTorch 0.3.0 users should check out FastPhotoStyle for PyTorch 0.3.0. |
| | Add a tutorial showing 3 ways of using the FastPhotoStyle algorithm. |
| 2018-07-10 | Our paper is accepted by the ECCV 2018 conference!!! |

About

Given a content photo and a style photo, the code can transfer the style of the style photo to the content photo. The details of the algorithm behind the code are documented in our arXiv paper. Please cite the paper if this code repository is used in your publications.

A Closed-form Solution to Photorealistic Image Stylization

Yijun Li (UC Merced), Ming-Yu Liu (NVIDIA), Xueting Li (UC Merced), Ming-Hsuan Yang (NVIDIA, UC Merced), Jan Kautz (NVIDIA)

European Conference on Computer Vision (ECCV), 2018

Tutorial

Please check out the tutorial.
