Top Related Projects

Torch implementation of neural style algorithm

TensorFlow CNN for fast style transfer ⚡🖥🎨🖼

Code and data for paper "Deep Photo Style Transfer": https://arxiv.org/abs/1703.07511

Style transfer, deep learning, feature transform

Quick Overview

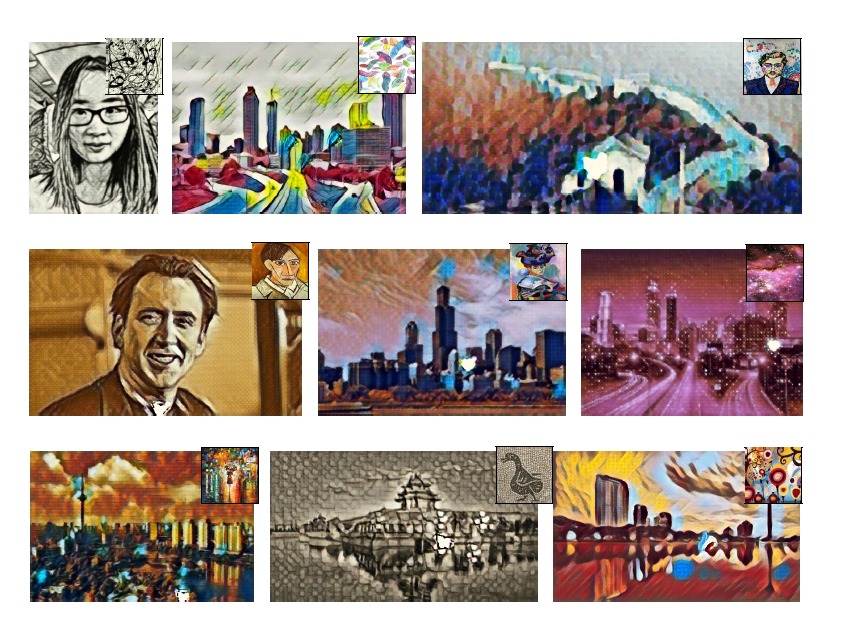

PyTorch-Multi-Style-Transfer is a GitHub repository that implements two style transfer methods in PyTorch: MSG-Net (Multi-style Generative Network) and Neural Style (Gatys et al.). It provides a pre-trained MSG-Net model and scripts for applying artistic styles to images, producing a stylized image from a content input and a style input.

Pros

- Implements two style transfer methods (MSG-Net and Neural Style) in one repository

- Uses PyTorch, which is known for its ease of use and dynamic computational graph

- Provides a pre-trained MSG-Net model (21styles.model) for quick style transfer

- Includes both training and evaluation scripts for customization

Cons

- Limited documentation and examples for some advanced features

- May require significant computational resources for training custom models

- Some dependencies might be outdated and require manual updates

- Limited support for video style transfer

Code Examples

- Basic style transfer using a pre-trained model:

import torch
from net import Net
from option import Options
from utils import styleTransfer

# Load pre-trained model
model = Net(ngf=128)
model.load_state_dict(torch.load('models/21styles.model'))
model.eval()  # switch to inference mode

# Perform style transfer
content_image = 'images/content.jpg'
style_image = 'images/style.jpg'
output = styleTransfer(model, content_image, style_image)

- Training a new style transfer model:

import torch
from net import Net
from train import train

# Initialize model and optimizer
model = Net(ngf=128)
optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)

# Train the model (content_dataset and style_dataset must be prepared beforehand)
train(model, optimizer, content_dataset, style_dataset, num_epochs=10)

- Applying multiple styles to a single content image:

from utils import multipleStyleTransfer

# Reuses `model` from the first example
content_image = 'images/content.jpg'
style_images = ['images/style1.jpg', 'images/style2.jpg', 'images/style3.jpg']
weights = [0.5, 0.3, 0.2]  # relative contribution of each style
output = multipleStyleTransfer(model, content_image, style_images, weights)
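The `weights` list in the last example implies a weighted mix of styles. How `multipleStyleTransfer` combines them is not shown here, so the following is only a minimal sketch of one common approach, assuming each style is summarized by a Gram matrix of CNN features: normalize the weights to sum to 1 and use the weighted sum of the per-style Gram matrices as a single blended target. `normalize_weights` and `blend_grams` are hypothetical helpers, not functions from the repository, and the toy matrices stand in for real feature statistics.

```python
# Hypothetical helpers (not part of PyTorch-Multi-Style-Transfer): blend several
# style targets by a normalized weighted sum of their Gram matrices.
# Gram matrices are plain nested lists here; a real pipeline would compute
# them from CNN feature maps.


def normalize_weights(weights):
    """Scale non-negative weights so they sum to 1."""
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must have a positive sum")
    return [w / total for w in weights]


def blend_grams(grams, weights):
    """Return the weighted element-wise sum of equally sized square matrices."""
    if len(grams) != len(weights):
        raise ValueError("need one weight per Gram matrix")
    w = normalize_weights(weights)
    size = len(grams[0])
    blended = [[0.0] * size for _ in range(size)]
    for gram, wi in zip(grams, w):
        for i in range(size):
            for j in range(size):
                blended[i][j] += wi * gram[i][j]
    return blended


if __name__ == "__main__":
    g1 = [[1.0, 0.0], [0.0, 1.0]]
    g2 = [[0.0, 2.0], [2.0, 0.0]]
    # Weights 3:1 mean the first style contributes 75% of the target.
    print(blend_grams([g1, g2], [3, 1]))
```

Normalizing first means callers can pass relative weights such as [5, 3, 2] and get the same blend as [0.5, 0.3, 0.2].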

Getting Started

- Clone the repository:

git clone https://github.com/zhanghang1989/PyTorch-Multi-Style-Transfer.git
cd PyTorch-Multi-Style-Transfer

- Install dependencies:

pip install -r requirements.txt

- Download the pre-trained model (the scripts live in the experiments directory):

cd experiments
bash models/download_model.sh

- Run style transfer:

python main.py eval --content-image images/content/venice-boat.jpg --style-image images/21styles/candy.jpg --model models/21styles.model

This will generate a stylized output image based on the content and style inputs using the pre-trained model.

Competitor Comparisons

Torch implementation of neural style algorithm

Pros of neural-style

- Implemented in Lua and Torch, which can be more efficient for certain deep learning tasks

- Provides detailed documentation and examples for various style transfer techniques

- Supports several pre-trained CNNs (such as VGG-19 and NIN) as feature extractors

Cons of neural-style

- Blends multiple styles only through slow per-image optimization; it has no feed-forward network for real-time multi-style transfer

- Requires Torch framework, which has a steeper learning curve compared to PyTorch

- Less active development and community support

Code Comparison

neural-style:

local cmd = torch.CmdLine()
cmd:option('-style_image', 'examples/inputs/seated-nude.jpg', 'Style target image')
cmd:option('-content_image', 'examples/inputs/tubingen.jpg', 'Content target image')
cmd:option('-output_image', 'out.png', 'Output image')

PyTorch-Multi-Style-Transfer:

import argparse

parser = argparse.ArgumentParser()
parser.add_argument("--content-image", type=str, default="images/content/venice-boat.jpg")
parser.add_argument("--style-image", type=str, default="images/9styles.jpg")
parser.add_argument("--output-image", type=str, default="output.jpg")
args = parser.parse_args()

PyTorch-Multi-Style-Transfer offers a more modern approach using Python and PyTorch, supporting multi-style transfer and providing easier integration with other deep learning projects. It benefits from PyTorch's dynamic computation graph and extensive ecosystem. However, neural-style may still be preferred for its established codebase and detailed documentation on various style transfer techniques.

TensorFlow CNN for fast style transfer ⚡🖥🎨🖼

Pros of fast-style-transfer

- Implemented in TensorFlow, which may be preferred by some users

- Includes pre-trained models for quick style transfer

- Supports video stylization

Cons of fast-style-transfer

- Limited to single-style transfer per model

- Less flexibility in customizing the style transfer process

- Older implementation (last updated in 2018)

Code Comparison

PyTorch-Multi-Style-Transfer:

style_model = Net(ngf=128)
style_model.load_state_dict(torch.load(args.model))
style_model.to(device)
style_model.eval()

fast-style-transfer:

import tensorflow as tf  # TensorFlow 1.x API (tf.Session, tf.placeholder)

with tf.Graph().as_default():
    soft_config = tf.ConfigProto(allow_soft_placement=True)
    soft_config.gpu_options.allow_growth = True
    with tf.Session(config=soft_config) as sess:
        batch_shape = (1,) + img.shape
        img_placeholder = tf.placeholder(tf.float32, shape=batch_shape, name='img_placeholder')

Summary

PyTorch-Multi-Style-Transfer offers more flexibility with multi-style transfer and a more recent implementation in PyTorch. fast-style-transfer, while older, provides pre-trained models and video stylization support in TensorFlow. The choice between them depends on the user's preferred framework, desired features, and the need for single or multi-style transfer capabilities.

Code and data for paper "Deep Photo Style Transfer": https://arxiv.org/abs/1703.07511

Pros of deep-photo-styletransfer

- Focuses on photorealistic style transfer, preserving the structure of the original image

- Implements a photorealistic regularization term for improved results

- Provides detailed instructions for setup and usage

Cons of deep-photo-styletransfer

- Implemented in Lua and requires Torch, which is less popular than PyTorch

- Limited to single-style transfer, unlike PyTorch-Multi-Style-Transfer

- Slower processing time compared to PyTorch-Multi-Style-Transfer

Code Comparison

deep-photo-styletransfer:

local content_image = image.load(params.content_image, 3)
local style_image = image.load(params.style_image, 3)
local content_layers = params.content_layers:split(",")
local style_layers = params.style_layers:split(",")

PyTorch-Multi-Style-Transfer:

content_image = utils.tensor_load_rgbimage(args.content_image, size=args.content_size)
style = utils.tensor_load_rgbimage(args.style_image, size=args.style_size)
content_image = content_image.unsqueeze(0)
style = style.unsqueeze(0)

Both repositories provide implementations for neural style transfer, but they differ in their approach and focus. PyTorch-Multi-Style-Transfer offers a more flexible, multi-style approach using PyTorch, while deep-photo-styletransfer specializes in photorealistic style transfer using Lua and Torch.

Style transfer, deep learning, feature transform

Pros of FastPhotoStyle

- Faster processing time for style transfer

- Better preservation of content structure and details

- Supports high-resolution image processing

Cons of FastPhotoStyle

- Limited to photorealistic style transfer

- Requires more computational resources

- Less flexibility in artistic style manipulation

Code Comparison

PyTorch-Multi-Style-Transfer:

style_model = Net(ngf=128)
style_model.load_state_dict(torch.load(args.model))
style_model.eval()
style_model.setTarget(style_image)  # MSG-Net receives the style target separately
output = style_model(content_image)

FastPhotoStyle:

from photo_style import stylize

output = stylize(content_image, style_image,
                 content_size=1024,
                 style_size=1024,
                 alpha=1.0)

The PyTorch-Multi-Style-Transfer code uses a custom neural network model for style transfer, while FastPhotoStyle employs a specialized function for photorealistic style transfer. FastPhotoStyle's approach is more streamlined but less customizable.

FastPhotoStyle is optimized for photorealistic results and faster processing, making it suitable for high-resolution images and applications requiring quick turnaround. However, it may not be ideal for more artistic or abstract style transfers.

PyTorch-Multi-Style-Transfer offers more flexibility in style manipulation and can handle a wider range of artistic styles, but it may be slower and less effective at preserving detailed content structure in photorealistic scenarios.

README

PyTorch-Style-Transfer

This repo provides a PyTorch implementation of MSG-Net (ours) and Neural Style (Gatys et al. CVPR 2016), which has been included by ModelDepot. We also provide Torch and MXNet implementations.

Table of Contents

MSG-Net

Multi-style Generative Network for Real-time Transfer [arXiv] [project]
Hang Zhang, Kristin Dana

@article{zhang2017multistyle,
  title={Multi-style Generative Network for Real-time Transfer},
  author={Zhang, Hang and Dana, Kristin},
  journal={arXiv preprint arXiv:1703.06953},
  year={2017}
}

Stylize Images Using Pre-trained MSG-Net

- Download the pre-trained model

git clone git@github.com:zhanghang1989/PyTorch-Style-Transfer.git
cd PyTorch-Style-Transfer/experiments
bash models/download_model.sh

- Camera Demo

python camera_demo.py demo --model models/21styles.model

- Test the model

python main.py eval --content-image images/content/venice-boat.jpg --style-image images/21styles/candy.jpg --model models/21styles.model --content-size 1024

- If you don't have a GPU, simply set --cuda=0. For a different style, set --style-image path/to/style. If you would like to stylize your own photo, set --content-image path/to/your/photo. More options:

--content-image: path to the content image you want to stylize.
--style-image: path to the style image (typically one of the styles used during training).
--model: path to the pre-trained model used for stylizing the image.
--output-image: path for saving the output image.
--content-size: the content image size to test on.
--cuda: set it to 1 for running on GPU, 0 for CPU.
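The flags above can also be assembled programmatically, for example when stylizing many photos in a batch. This is a minimal sketch, assuming it is run from the experiments directory and that `main.py eval` accepts exactly the flags listed above; `build_eval_command` is a hypothetical helper, and it only builds the argument list rather than running anything.

```python
import shlex

# Hypothetical helper: builds the argument list for `python main.py eval`
# from the flags documented in the README. It does not run the command.


def build_eval_command(content_image, style_image, model,
                       output_image=None, content_size=None, cuda=True):
    cmd = [
        "python", "main.py", "eval",
        "--content-image", content_image,
        "--style-image", style_image,
        "--model", model,
    ]
    # Optional flags are added only when a value is supplied.
    if output_image is not None:
        cmd += ["--output-image", output_image]
    if content_size is not None:
        cmd += ["--content-size", str(content_size)]
    # --cuda is 1 for GPU and 0 for CPU, written as in the README (--cuda=0).
    cmd.append(f"--cuda={1 if cuda else 0}")
    return cmd


if __name__ == "__main__":
    argv = build_eval_command(
        "images/content/venice-boat.jpg",
        "images/21styles/candy.jpg",
        "models/21styles.model",
        content_size=1024,
        cuda=False,
    )
    # Print a shell-ready version of the command for inspection.
    print(shlex.join(argv))
```

Passing the returned list to `subprocess.run(argv, check=True)` would launch the stylization; keeping the arguments as a list avoids shell-quoting problems with paths that contain spaces.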

Train Your Own MSG-Net Model

- Download the COCO dataset

bash dataset/download_dataset.sh

- Train the model

python main.py train --epochs 4

- If you would like to customize styles, set --style-folder path/to/your/styles. More options:

--style-folder: path to the folder of style images.
--vgg-model-dir: path to the folder where the VGG model will be downloaded.
--save-model-dir: path to the folder where the trained model will be saved.
--cuda: set it to 1 for running on GPU, 0 for CPU.
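Training with a custom --style-folder reads the style images from that folder, so it can be worth listing them before a long training run. A minimal sketch, assuming style images are .jpg, .jpeg, or .png files placed directly in the folder; the repository's own loader may filter or order files differently, and `list_style_images` is a hypothetical helper.

```python
from pathlib import Path

# Hypothetical helper for inspecting a --style-folder before training.
# Assumption: style images are .jpg/.jpeg/.png files directly in the folder.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def list_style_images(style_folder):
    """Return image files directly inside style_folder, sorted by path."""
    folder = Path(style_folder)
    if not folder.is_dir():
        raise NotADirectoryError(f"not a directory: {folder}")
    return sorted(
        p for p in folder.iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
    )


if __name__ == "__main__":
    import tempfile

    # Build a throwaway folder with two images and one non-image file.
    with tempfile.TemporaryDirectory() as tmp:
        for name in ("candy.jpg", "mosaic.PNG", "notes.txt"):
            (Path(tmp) / name).touch()
        print([p.name for p in list_style_images(tmp)])
```

Sorting gives a stable order between runs, which matters if you later refer to styles by index.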

Neural Style

Image Style Transfer Using Convolutional Neural Networks by Leon A. Gatys, Alexander S. Ecker, and Matthias Bethge.

python main.py optim --content-image images/content/venice-boat.jpg --style-image images/21styles/candy.jpg

--content-image: path to the content image.
--style-image: path to the style image.
--output-image: path for saving the output image.
--content-size: the content image size to test on.
--style-size: the style image size to test on.
--cuda: set it to 1 for running on GPU, 0 for CPU.
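Neural Style (Gatys et al.) represents an image's style by the correlations between CNN feature channels, collected in a Gram matrix, and the optim mode iteratively adjusts the output image to reduce a style loss together with a content loss. The sketch below shows only the style term, on tiny hand-made feature maps rather than VGG activations; the normalization (dividing by the number of spatial positions and averaging over matrix entries) is one choice among several and is not taken from the repository.

```python
# Sketch of a Gram-matrix style loss (not the repository's implementation).
# Feature maps are given as C channels, each flattened to N = H * W values.


def gram_matrix(features):
    """Return the C x C Gram matrix G[i][j] = sum_k F[i][k] * F[j][k] / N."""
    channels = len(features)
    n = len(features[0])
    return [
        [sum(a * b for a, b in zip(features[i], features[j])) / n
         for j in range(channels)]
        for i in range(channels)
    ]


def style_loss(generated, style):
    """Mean squared difference between the Gram matrices of two feature maps."""
    g_gen = gram_matrix(generated)
    g_sty = gram_matrix(style)
    c = len(g_gen)
    return sum(
        (g_gen[i][j] - g_sty[i][j]) ** 2 for i in range(c) for j in range(c)
    ) / (c * c)


if __name__ == "__main__":
    # Two channels, four spatial positions each (a 2 x 2 map, flattened).
    style_feats = [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0]]
    output_feats = [[1.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 1.0]]
    print(gram_matrix(style_feats))
    print(style_loss(output_feats, style_feats))
```

In the usage example the two feature maps place their activations at different positions, yet the style loss is zero: Gram matrices keep channel statistics and discard spatial layout, which is why a separate content loss is needed to preserve the scene structure.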

Acknowledgement

The code benefits from outstanding prior work and their implementations including:

- Texture Networks: Feed-forward Synthesis of Textures and Stylized Images by Ulyanov et al. ICML 2016 (code).

- Perceptual Losses for Real-Time Style Transfer and Super-Resolution by Johnson et al. ECCV 2016 (code) and its PyTorch implementation by Abhishek.

- Image Style Transfer Using Convolutional Neural Networks by Gatys et al. CVPR 2016 and its Torch implementation by Johnson.
