GFPGAN

GFPGAN aims at developing Practical Algorithms for Real-world Face Restoration.

Top Related Projects

Real-ESRGAN aims at developing Practical Algorithms for General Image/Video Restoration.

waifu2x converter ncnn version, runs fast on intel / amd / nvidia / apple-silicon GPU with vulkan

Image Super-Resolution for Anime-Style Art

ECCV18 Workshops - Enhanced SRGAN. Champion PIRM Challenge on Perceptual Super-Resolution. The training codes are in BasicSR.

Bringing Old Photo Back to Life (CVPR 2020 oral)

Quick Overview

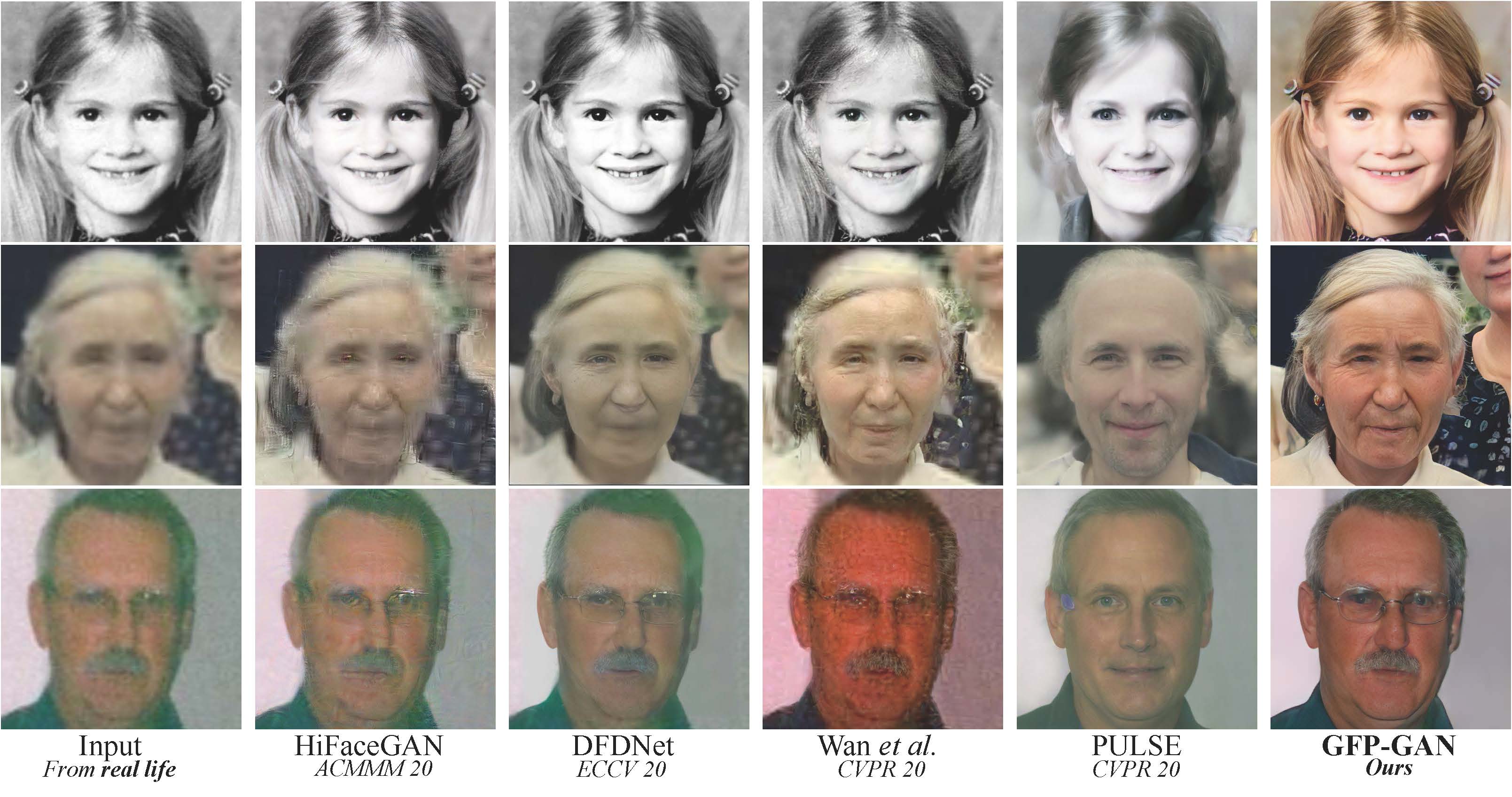

GFPGAN (Generative Facial Prior-based GAN) is an open-source project for real-world face restoration. It aims to restore severely degraded facial images, including those with low resolution, blur, and noise. GFPGAN leverages rich and diverse priors encapsulated in a pretrained face GAN for blind face restoration.

Pros

- High-quality face restoration, capable of handling severe degradations

- Incorporates both local and global facial priors for improved results

- Supports various input formats and resolutions

- Easy to use with pre-trained models and a user-friendly interface

Cons

- May struggle with extreme poses or non-frontal faces

- Computationally intensive, requiring significant GPU resources for optimal performance

- Limited to face restoration, not suitable for general image enhancement

- Potential privacy concerns when processing personal facial images

Code Examples

- Basic face restoration:

import cv2

import gfpgan

from gfpgan import GFPGANer

# Load the model

restorer = GFPGANer(model_path='experiments/pretrained_models/GFPGANv1.3.pth', upscale=2)

# Read an image

img = cv2.imread('inputs/whole_imgs/low_quality_face.jpg')

# Restore face

# enhance() returns (cropped_faces, restored_faces, restored_img)

_, _, restored_img = restorer.enhance(img, has_aligned=False, only_center_face=False, paste_back=True)

# Save the result

cv2.imwrite('results/restored_face.png', restored_img)

- Batch processing:

import os

import cv2

import gfpgan

from gfpgan import GFPGANer

restorer = GFPGANer(model_path='experiments/pretrained_models/GFPGANv1.3.pth', upscale=2)

input_folder = 'inputs/whole_imgs'

output_folder = 'results'

os.makedirs(output_folder, exist_ok=True)

for img_name in os.listdir(input_folder):

    img_path = os.path.join(input_folder, img_name)

    img = cv2.imread(img_path)

    if img is None:  # skip files OpenCV cannot read

        continue

    _, _, restored_img = restorer.enhance(img, has_aligned=False, only_center_face=False, paste_back=True)

    cv2.imwrite(os.path.join(output_folder, f'restored_{img_name}'), restored_img)
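An input folder often holds files that are not images, so it can help to filter the listing before handing anything to the restorer. The following is a minimal sketch of such a filter; `list_image_files` and the extension set are hypothetical helpers for illustration, not part of GFPGAN.

```python
from pathlib import Path

# Extensions treated as images here; this set is an assumption, extend as needed.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".bmp", ".webp"}


def list_image_files(folder):
    """Return sorted paths of regular files in `folder` with an image-like extension."""
    return sorted(
        p for p in Path(folder).iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_EXTENSIONS
    )
```

The batch loop could then iterate over `list_image_files(input_folder)` instead of `os.listdir(input_folder)`, passing `str(path)` to `cv2.imread`.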

- Custom model usage:

from gfpgan import GFPGANer

# Load a custom model

restorer = GFPGANer(model_path='path/to/custom_model.pth', upscale=2, arch='clean', channel_multiplier=2)

# Use the custom model for face restoration

# (`img` is a BGR image loaded with cv2.imread, as in the first example)

_, _, restored_img = restorer.enhance(img, has_aligned=False, only_center_face=False, paste_back=True)

Getting Started

- Install GFPGAN:

pip install gfpgan

- Download pre-trained models:

wget https://github.com/TencentARC/GFPGAN/releases/download/v1.3.0/GFPGANv1.3.pth -P experiments/pretrained_models

- Use GFPGAN in your Python script:

import cv2

from gfpgan import GFPGANer

restorer = GFPGANer(model_path='experiments/pretrained_models/GFPGANv1.3.pth', upscale=2)

img = cv2.imread('input_image.jpg')

_, _, restored_img = restorer.enhance(img, has_aligned=False, only_center_face=False, paste_back=True)

cv2.imwrite('restored_image.png', restored_img)
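If `wget` is not available, the download step can be approximated in Python with the standard library. This is a sketch under stated assumptions: `local_model_path` and `ensure_model` are hypothetical helper names, and the URL and target folder are the ones used in the download step above.

```python
import os
import urllib.request

# URL taken from the download step above.
MODEL_URL = "https://github.com/TencentARC/GFPGAN/releases/download/v1.3.0/GFPGANv1.3.pth"


def local_model_path(url=MODEL_URL, model_dir="experiments/pretrained_models"):
    """Map a release URL to the local path where the weights would be stored."""
    return os.path.join(model_dir, os.path.basename(url))


def ensure_model(url=MODEL_URL, model_dir="experiments/pretrained_models"):
    """Download the weights only if they are not already on disk (hypothetical helper)."""
    path = local_model_path(url, model_dir)
    if not os.path.isfile(path):
        os.makedirs(model_dir, exist_ok=True)
        urllib.request.urlretrieve(url, path)
    return path
```

The returned path can be passed as `model_path` when constructing `GFPGANer`.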

Competitor Comparisons

Real-ESRGAN aims at developing Practical Algorithms for General Image/Video Restoration.

Pros of Real-ESRGAN

- More versatile, capable of enhancing general images beyond just faces

- Offers better performance for upscaling and restoring heavily compressed images

- Provides pre-trained models for various tasks, including anime-style image enhancement

Cons of Real-ESRGAN

- May not perform as well specifically for face restoration and enhancement

- Lacks some of the advanced facial feature reconstruction capabilities of GFPGAN

Code Comparison

Real-ESRGAN:

from basicsr.archs.rrdbnet_arch import RRDBNet

from realesrgan import RealESRGANer

model = RRDBNet(num_in_ch=3, num_out_ch=3, num_feat=64, num_block=23, num_grow_ch=32)

upsampler = RealESRGANer(scale=4, model_path='weights/RealESRGAN_x4plus.pth', model=model)

GFPGAN:

from gfpgan import GFPGANer

restorer = GFPGANer(model_path='experiments/pretrained_models/GFPGANv1.3.pth', upscale=2)

_, _, restored_img = restorer.enhance(img, has_aligned=False, only_center_face=False, paste_back=True)

Both repositories offer powerful image enhancement capabilities, but Real-ESRGAN is more general-purpose, while GFPGAN specializes in face restoration and enhancement. The code examples demonstrate the different approaches, with Real-ESRGAN focusing on general upscaling and GFPGAN emphasizing face-specific enhancements.
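The two tools can also be combined: the GFPGAN README notes support for enhancing non-face (background) regions with Real-ESRGAN. A rough sketch of wiring them together follows. `build_restorer` is a hypothetical helper, and the `bg_upsampler` keyword reflects my understanding of the `GFPGANer` constructor, so check it against the installed version before relying on it.

```python
def build_restorer(gfpgan_weights, realesrgan_weights, upscale=2):
    """Create a GFPGANer that uses Real-ESRGAN for non-face regions (sketch)."""
    # Imported lazily so this function can be defined without the heavy dependencies.
    from basicsr.archs.rrdbnet_arch import RRDBNet
    from gfpgan import GFPGANer
    from realesrgan import RealESRGANer

    # Same RRDBNet configuration as the Real-ESRGAN snippet above (x4 model).
    model = RRDBNet(num_in_ch=3, num_out_ch=3, num_feat=64, num_block=23, num_grow_ch=32)
    bg_upsampler = RealESRGANer(scale=4, model_path=realesrgan_weights, model=model)

    # Assumption: GFPGANer accepts the background upsampler via `bg_upsampler`.
    return GFPGANer(model_path=gfpgan_weights, upscale=upscale, bg_upsampler=bg_upsampler)
```

Called with the weight paths from the snippets above, for example `build_restorer('experiments/pretrained_models/GFPGANv1.3.pth', 'weights/RealESRGAN_x4plus.pth')`, this returns a restorer that is used through `enhance` in the same way as before.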

waifu2x converter ncnn version, runs fast on intel / amd / nvidia / apple-silicon GPU with vulkan

Pros of waifu2x-ncnn-vulkan

- Faster processing speed due to GPU acceleration with Vulkan

- Lightweight and efficient, suitable for real-time applications

- Cross-platform support (Windows, Linux, macOS, Android)

Cons of waifu2x-ncnn-vulkan

- Limited to image super-resolution and denoising tasks

- Less advanced in face restoration compared to GFPGAN

- Smaller community and fewer updates

Code Comparison

waifu2x-ncnn-vulkan (illustrative C++ pseudocode):

ncnn::VulkanDevice* vkdev = ncnn::get_gpu_device();

waifu2x.load(vkdev, "models-cunet");

waifu2x.process(in_image, out_image);

GFPGAN:

from gfpgan import GFPGANer

restorer = GFPGANer(model_path='GFPGANv1.3.pth', upscale=2)

_, _, restored_img = restorer.enhance(img, has_aligned=False, only_center_face=False, paste_back=True)

waifu2x-ncnn-vulkan is a C++ implementation that uses Vulkan for efficient upscaling and denoising, while GFPGAN is a Python package built on PyTorch for face restoration. waifu2x-ncnn-vulkan is better suited to general image upscaling; GFPGAN is the better fit for face enhancement and restoration.

Image Super-Resolution for Anime-Style Art

Pros of waifu2x

- Specialized in anime-style image upscaling and noise reduction

- Lightweight and efficient, suitable for lower-end hardware

- Extensive language support and active community

Cons of waifu2x

- Limited to anime-style images and general upscaling tasks

- Less effective for complex facial restoration or enhancement

- Older technology compared to more recent AI-based solutions

Code Comparison

waifu2x (Lua):

require 'nn'

require 'image'

-- Use a name other than `image` so the image module is not shadowed

local input = image.load(input_path)

local model = torch.load(model_path)

local output = model:forward(input)

image.save(output_path, output)

GFPGAN (Python):

from gfpgan import GFPGANer

restorer = GFPGANer(model_path='GFPGANv1.3.pth', upscale=2)

_, _, restored_img = restorer.enhance(img, has_aligned=False, only_center_face=False, paste_back=True)

Summary

While waifu2x excels in anime-style image upscaling and noise reduction, GFPGAN offers more advanced facial restoration capabilities using modern AI techniques. waifu2x is lighter and more accessible, but GFPGAN provides superior results for complex image enhancement tasks, especially involving human faces.

ECCV18 Workshops - Enhanced SRGAN. Champion PIRM Challenge on Perceptual Super-Resolution. The training codes are in BasicSR.

Pros of ESRGAN

- Focuses specifically on image super-resolution, excelling in enhancing low-resolution images

- Offers flexibility with various model architectures and training options

- Provides pre-trained models for quick implementation and testing

Cons of ESRGAN

- Limited to super-resolution tasks, lacking face restoration capabilities

- May struggle with facial details and overall image coherence compared to GFPGAN

- Requires more manual tuning and parameter adjustment for optimal results

Code Comparison

ESRGAN:

import torch

import RRDBNet_arch as arch  # architecture module shipped in the ESRGAN repository

model = arch.RRDBNet(3, 3, 64, 23, gc=32)

model.load_state_dict(torch.load(model_path), strict=True)

model.eval()

output = model(input_image)

GFPGAN:

from gfpgan import GFPGANer

restorer = GFPGANer(model_path='GFPGANv1.3.pth', upscale=2)

_, _, restored_img = restorer.enhance(input_img, has_aligned=False, only_center_face=False, paste_back=True)

The code snippets demonstrate the simplicity of using pre-trained models in both repositories. ESRGAN focuses on general image super-resolution, while GFPGAN is tailored for face restoration and enhancement. GFPGAN's code shows additional options for face alignment and processing, reflecting its specialized nature in facial image improvement.

Pros of ailab

- More focused on real-time face animation and tracking

- Includes tools for live streaming and virtual avatars

- Offers a wider range of face-related AI technologies

Cons of ailab

- Less specialized in face restoration and enhancement

- Fewer pre-trained models available for immediate use

- Documentation may be less comprehensive for some features

Code Comparison

GFPGAN example:

from gfpgan import GFPGANer

restorer = GFPGANer(model_path='experiments/pretrained_models/GFPGANv1.3.pth', upscale=2)

_, _, restored_img = restorer.enhance(img, has_aligned=False, only_center_face=False, paste_back=True)

ailab example (illustrative pseudocode):

from face_landmarker import FaceLandmarker

landmarker = FaceLandmarker()

landmarks = landmarker.get_landmarks(image)

Summary

While GFPGAN focuses primarily on face restoration and enhancement, ailab offers a broader range of face-related AI technologies, including real-time animation and tracking. GFPGAN may be more suitable for tasks specifically related to improving image quality, while ailab provides tools for live streaming and virtual avatar applications. The choice between the two depends on the specific requirements of your project.

Bringing Old Photo Back to Life (CVPR 2020 oral)

Pros of Bringing-Old-Photos-Back-to-Life

- Specialized in restoring old and damaged photos

- Handles multiple types of degradation (scratches, blurriness, color fading)

- Provides a complete pipeline for photo restoration

Cons of Bringing-Old-Photos-Back-to-Life

- Less focused on face enhancement compared to GFPGAN

- May require more manual intervention for optimal results

- Limited to old photo restoration, less versatile for modern images

Code Comparison

GFPGAN:

from gfpgan import GFPGANer

restorer = GFPGANer(model_path='experiments/pretrained_models/GFPGANv1.3.pth', upscale=2)

_, _, restored_img = restorer.enhance(img, has_aligned=False, only_center_face=False, paste_back=True)

Bringing-Old-Photos-Back-to-Life (illustrative pseudocode):

from run_face_enhancement import run_face_enhancement

from run_global import run_global

global_output = run_global(input_image)

final_output = run_face_enhancement(global_output)

Both repositories offer image enhancement capabilities, but GFPGAN focuses on face restoration, while Bringing-Old-Photos-Back-to-Life specializes in restoring old, damaged photos. GFPGAN provides a simpler API for face enhancement, while Bringing-Old-Photos-Back-to-Life offers a more comprehensive pipeline for handling several types of degradation, such as scratches, blur, and color fading.

README

- :boom: Updated online demo. Here is the backup.

- :boom: Updated online demo

- Colab Demo for GFPGAN (another Colab Demo is available for the original paper model)

:rocket: Thanks for your interest in our work. You may also want to check our new updates on the tiny models for anime images and videos in Real-ESRGAN :blush:

GFPGAN aims at developing a Practical Algorithm for Real-world Face Restoration.

It leverages rich and diverse priors encapsulated in a pretrained face GAN (e.g., StyleGAN2) for blind face restoration.

:question: Frequently Asked Questions can be found in FAQ.md.

:triangular_flag_on_post: Updates

- :white_check_mark: Add RestoreFormer inference codes.

- :white_check_mark: Add V1.4 model, which produces slightly more details and better identity than V1.3.

- :white_check_mark: Add V1.3 model, which produces more natural restoration results, and better results on very low-quality / high-quality inputs. See more in Model zoo, Comparisons.md

- :white_check_mark: Integrated to Huggingface Spaces with Gradio. See Gradio Web Demo.

- :white_check_mark: Support enhancing non-face regions (background) with Real-ESRGAN.

- :white_check_mark: We provide a clean version of GFPGAN, which does not require CUDA extensions.

- :white_check_mark: We provide an updated model without colorizing faces.

If GFPGAN is helpful for your photos/projects, please help to :star: this repo or recommend it to your friends. Thanks :blush:

Other recommended projects:

:arrow_forward: Real-ESRGAN: A practical algorithm for general image restoration

:arrow_forward: BasicSR: An open-source image and video restoration toolbox

:arrow_forward: facexlib: A collection that provides useful face-related functions

:arrow_forward: HandyView: A PyQt5-based image viewer that is handy for viewing and comparison

:book: GFP-GAN: Towards Real-World Blind Face Restoration with Generative Facial Prior

[Paper] [Project Page] [Demo]

Xintao Wang, Yu Li, Honglun Zhang, Ying Shan

Applied Research Center (ARC), Tencent PCG

:wrench: Dependencies and Installation

- Python >= 3.7 (Anaconda or Miniconda is recommended)

- PyTorch >= 1.7

- Optional: NVIDIA GPU + CUDA

- Optional: Linux

Installation

We now provide a clean version of GFPGAN, which does not require customized CUDA extensions.

If you want to use the original model in our paper, please see PaperModel.md for installation.

- Clone repo

git clone https://github.com/TencentARC/GFPGAN.git

cd GFPGAN

- Install dependent packages

# Install basicsr - https://github.com/xinntao/BasicSR

# We use BasicSR for both training and inference

pip install basicsr

# Install facexlib - https://github.com/xinntao/facexlib

# We use face detection and face restoration helper in the facexlib package

pip install facexlib

pip install -r requirements.txt

python setup.py develop

# If you want to enhance the background (non-face) regions with Real-ESRGAN,

# you also need to install the realesrgan package

pip install realesrgan
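After installation, a quick check that the packages are importable can save a failed first run. The sketch below uses only the standard library. The module lists are assumptions based on the packages named in the installation steps and the imports used elsewhere on this page, and `missing_modules` is a hypothetical helper.

```python
import importlib.util

# Import names for the packages installed above; "cv2" (OpenCV) and "torch"
# are included because the examples on this page import them.
REQUIRED_MODULES = ["torch", "cv2", "basicsr", "facexlib", "gfpgan"]
OPTIONAL_MODULES = ["realesrgan"]  # only needed for background enhancement


def missing_modules(names):
    """Return the subset of top-level module `names` not found on the import path."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    print("missing required:", missing_modules(REQUIRED_MODULES))
    print("missing optional:", missing_modules(OPTIONAL_MODULES))
```

An empty list for the required modules suggests the environment is ready for the inference step.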

:zap: Quick Inference

We take the v1.3 version as an example. More models can be found here.

Download pre-trained models: GFPGANv1.3.pth

wget https://github.com/TencentARC/GFPGAN/releases/download/v1.3.0/GFPGANv1.3.pth -P experiments/pretrained_models

Inference!

python inference_gfpgan.py -i inputs/whole_imgs -o results -v 1.3 -s 2

Usage: python inference_gfpgan.py -i inputs/whole_imgs -o results -v 1.3 -s 2 [options]...

-h show this help

-i input Input image or folder. Default: inputs/whole_imgs

-o output Output folder. Default: results

-v version GFPGAN model version. Option: 1 | 1.2 | 1.3. Default: 1.3

-s upscale The final upsampling scale of the image. Default: 2

-bg_upsampler background upsampler. Default: realesrgan

-bg_tile Tile size for the background upsampler, 0 for no tile during testing. Default: 400

-suffix Suffix of the restored faces

-only_center_face Only restore the center face

-aligned Inputs are aligned faces

-ext Image extension. Options: auto | jpg | png, auto means using the same extension as inputs. Default: auto
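When the script is driven from another Python program, the command line can be assembled as an argument list. This is a minimal sketch that uses only the four flags from the inference command above (`-i`, `-o`, `-v`, `-s`); `build_inference_command` and `run_inference` are hypothetical helper names, and the script is assumed to be run from the repository root.

```python
import subprocess
import sys


def build_inference_command(input_path="inputs/whole_imgs", output_dir="results",
                            version="1.3", upscale=2):
    """Assemble the argument list for inference_gfpgan.py using the flags shown above."""
    return [
        sys.executable, "inference_gfpgan.py",
        "-i", str(input_path),
        "-o", str(output_dir),
        "-v", str(version),
        "-s", str(upscale),
    ]


def run_inference(**kwargs):
    """Run the script from the repository root; raises CalledProcessError on failure."""
    subprocess.run(build_inference_command(**kwargs), check=True)
```

Passing a list rather than a single string avoids shell quoting problems when paths contain spaces.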

If you want to use the original model in our paper, please see PaperModel.md for installation and inference.

:european_castle: Model Zoo

| Version | Model Name | Description |
|---|---|---|
| V1.3 | GFPGANv1.3.pth | Based on V1.2; more natural restoration results; better results on very low-quality / high-quality inputs. |
| V1.2 | GFPGANCleanv1-NoCE-C2.pth | No colorization; no CUDA extensions are required. Trained with more data with pre-processing. |
| V1 | GFPGANv1.pth | The paper model, with colorization. |

The comparisons are in Comparisons.md.

Note that V1.3 is not always better than V1.2. You may need to select different models based on your purpose and inputs.

| Version | Strengths | Weaknesses |
|---|---|---|
| V1.3 | ✓ natural outputs; ✓ better results on very low-quality inputs; ✓ works on relatively high-quality inputs; ✓ can be applied repeatedly (twice) | ✗ not very sharp; ✗ slight change in identity |
| V1.2 | ✓ sharper output; ✓ with beauty makeup | ✗ some outputs are unnatural |

You can find more models (such as the discriminators) here: [Google Drive], OR [Tencent Cloud 腾讯微云]
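Since the best version depends on the input, it can be convenient to select the weights by version string. The sketch below copies the file names from the Model Zoo table and assumes the `experiments/pretrained_models` folder used in the download step; `model_path_for` is a hypothetical helper, not a GFPGAN function.

```python
import os

# File names copied from the Model Zoo table above.
MODEL_FILES = {
    "1": "GFPGANv1.pth",
    "1.2": "GFPGANCleanv1-NoCE-C2.pth",
    "1.3": "GFPGANv1.3.pth",
}


def model_path_for(version, model_dir="experiments/pretrained_models"):
    """Return the expected local path of the weights for a GFPGAN version string."""
    try:
        return os.path.join(model_dir, MODEL_FILES[version])
    except KeyError:
        raise ValueError(f"unknown version {version!r}; expected one of {sorted(MODEL_FILES)}")
```

For example, `model_path_for("1.2")` points at the V1.2 weights, which makes it easy to run both V1.2 and V1.3 on the same input and compare. Note that the V1 paper model has separate installation requirements described in PaperModel.md.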

:computer: Training

We provide the training codes for GFPGAN (used in our paper).

You could improve it according to your own needs.

Tips

- More high-quality faces can improve the restoration quality.

- You may need to perform some pre-processing, such as beauty makeup.

Procedures

(You can try a simple version (options/train_gfpgan_v1_simple.yml) that does not require face component landmarks.)

- Dataset preparation: FFHQ

- Download pre-trained models and other data. Put them in the experiments/pretrained_models folder.

- Modify the configuration file options/train_gfpgan_v1.yml accordingly.

- Training

python -m torch.distributed.launch --nproc_per_node=4 --master_port=22021 gfpgan/train.py -opt options/train_gfpgan_v1.yml --launcher pytorch

:scroll: License and Acknowledgement

GFPGAN is released under Apache License Version 2.0.

BibTeX

@InProceedings{wang2021gfpgan,

author = {Xintao Wang and Yu Li and Honglun Zhang and Ying Shan},

title = {Towards Real-World Blind Face Restoration with Generative Facial Prior},

booktitle={The IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

year = {2021}

}

:e-mail: Contact

If you have any questions, please email xintao.wang@outlook.com or xintaowang@tencent.com.
