Top Related Projects

[Open Source]. The improved version of AnimeGAN. Landscape photos/videos to anime

Real-ESRGAN aims at developing Practical Algorithms for General Image/Video Restoration.

Official PyTorch repo for JoJoGAN: One Shot Face Stylization

GFPGAN aims at developing Practical Algorithms for Real-world Face Restoration.

Quick Overview

The bryandlee/animegan2-pytorch repository is a PyTorch implementation of AnimeGANv2, a Generative Adversarial Network (GAN) for transforming real-world images into anime-style artwork. This project provides pre-trained models and tools for both inference and training, allowing users to create anime-style images from photographs or retrain the model on custom datasets.

Pros

- Easy-to-use implementation of AnimeGANv2 in PyTorch

- Includes pre-trained models for quick inference

- Supports both image and video processing

- Provides tools for custom training and dataset preparation

Cons

- Limited documentation for advanced usage and customization

- Dependency on specific versions of PyTorch and other libraries

- May require significant computational resources for training

- Output quality can vary depending on input image characteristics

Code Examples

- Loading a pre-trained model and performing inference:

from model import Generator

from utils import *

device = 'cuda'

model = Generator().to(device)

model.load_state_dict(torch.load('weights/face_paint_512_v2.pt'))

model.eval()

image = load_image('input.jpg')

output = model(image.to(device))

save_image(output, 'output.png')

- Processing a video file:

from model import Generator

from utils import *

device = 'cuda'

model = Generator().to(device)

model.load_state_dict(torch.load('weights/face_paint_512_v2.pt'))

model.eval()

process_video('input_video.mp4', 'output_video.mp4', model, device)

- Custom training loop (simplified):

from model import Generator, Discriminator

from utils import *

device = 'cuda'

generator = Generator().to(device)

discriminator = Discriminator().to(device)

for epoch in range(num_epochs):

for real_images, anime_images in dataloader:

fake_images = generator(real_images)

d_loss = train_discriminator(discriminator, real_images, anime_images, fake_images)

g_loss = train_generator(generator, discriminator, real_images, anime_images)

update_learning_rate(d_optimizer, g_optimizer)

Getting Started

-

Clone the repository:

git clone https://github.com/bryandlee/animegan2-pytorch.git cd animegan2-pytorch -

Install dependencies:

pip install -r requirements.txt -

Download pre-trained weights:

wget https://github.com/bryandlee/animegan2-pytorch/releases/download/v1.0/face_paint_512_v2.pt -P weights/ -

Run inference on an image:

python test.py --input input.jpg --output output.png --checkpoint weights/face_paint_512_v2.pt

Competitor Comparisons

[Open Source]. The improved version of AnimeGAN. Landscape photos/videos to anime

Pros of AnimeGANv2

- Original implementation of the AnimeGANv2 architecture

- Includes pre-trained models for various anime styles

- Provides comprehensive training and testing scripts

Cons of AnimeGANv2

- Implemented in TensorFlow, which may be less popular among PyTorch users

- Less active maintenance and updates compared to animegan2-pytorch

- Limited documentation and examples for easy integration

Code Comparison

AnimeGANv2 (TensorFlow):

import tensorflow as tf

from net import generator

def inference(img, checkpoint_dir):

generator = generator.Generator()

checkpoint = tf.train.Checkpoint(generator=generator)

checkpoint.restore(tf.train.latest_checkpoint(checkpoint_dir))

fake_img = generator(img, training=False)

return fake_img

animegan2-pytorch (PyTorch):

import torch

from model import Generator

def inference(img, checkpoint_path):

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

model = Generator().to(device)

model.load_state_dict(torch.load(checkpoint_path, map_location=device))

with torch.no_grad():

fake_img = model(img.to(device))

return fake_img

The code comparison highlights the main difference in implementation between TensorFlow and PyTorch, showcasing the syntax and structure variations between the two frameworks for loading models and performing inference.

Real-ESRGAN aims at developing Practical Algorithms for General Image/Video Restoration.

Pros of Real-ESRGAN

- Focuses on general image super-resolution and enhancement

- Provides pre-trained models for various use cases

- Supports both CPU and GPU inference

Cons of Real-ESRGAN

- May not be optimized specifically for anime-style images

- Requires more computational resources for high-resolution outputs

- Less specialized in style transfer compared to AnimeGAN2

Code Comparison

Real-ESRGAN:

from basicsr.archs.rrdbnet_arch import RRDBNet

from realesrgan import RealESRGANer

model = RRDBNet(num_in_ch=3, num_out_ch=3, num_feat=64, num_block=23, num_grow_ch=32)

upsampler = RealESRGANer(model_path='weights/RealESRGAN_x4plus.pth', model=model, scale=4)

AnimeGAN2-PyTorch:

from model import Generator

from utils import *

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

model = Generator().to(device)

model.load_state_dict(torch.load('weights/face_paint_512_v2.pt'))

Both repositories provide powerful image processing capabilities, but they serve different purposes. Real-ESRGAN is more versatile for general image enhancement, while AnimeGAN2-PyTorch specializes in anime-style transfer. The choice between them depends on the specific use case and desired output style.

Official PyTorch repo for JoJoGAN: One Shot Face Stylization

Pros of JoJoGAN

- Focuses on stylizing faces in the style of JoJo's Bizarre Adventure, offering a unique and specific artistic transformation

- Utilizes a GAN-based approach, potentially allowing for more diverse and creative outputs

- Includes a user-friendly Colab notebook for easy experimentation

Cons of JoJoGAN

- Limited to a specific art style, whereas AnimeGAN2-PyTorch offers more general anime-style conversions

- May require more computational resources due to the GAN architecture

- Less extensive documentation compared to AnimeGAN2-PyTorch

Code Comparison

JoJoGAN:

def train(args):

g_ema.eval()

mean_path_length = 0

# ... (truncated for brevity)

AnimeGAN2-PyTorch:

def train(args):

device = torch.device(args.device)

generator = Generator().to(device)

discriminator = Discriminator().to(device)

# ... (truncated for brevity)

Both repositories provide PyTorch implementations for stylizing images, but they differ in their specific approaches and target styles. JoJoGAN is more specialized, while AnimeGAN2-PyTorch offers a broader anime-style conversion.

GFPGAN aims at developing Practical Algorithms for Real-world Face Restoration.

Pros of GFPGAN

- Focuses on face restoration and enhancement, offering more specialized functionality

- Utilizes a pre-trained model for improved performance on facial features

- Provides a user-friendly interface for easy implementation

Cons of GFPGAN

- Limited to face-specific tasks, less versatile for general image processing

- May require more computational resources due to its complex architecture

- Less suitable for anime-style image generation or transformation

Code Comparison

GFPGAN:

from gfpgan import GFPGANer

restorer = GFPGANer(model_path='experiments/pretrained_models/GFPGANv1.3.pth', upscale=2)

restored_img, _ = restorer.enhance(img, has_aligned=False, only_center_face=False, paste_back=True)

AnimaGAN2:

from animegan2 import AnimeGANv2

model = AnimeGANv2()

out_img = model(img)

GFPGAN is more focused on face restoration and enhancement, while AnimaGAN2 is designed for anime-style image transformation. GFPGAN offers more specialized functionality for facial features but is less versatile for general image processing. AnimaGAN2 provides a simpler implementation for anime-style transformations but may not offer the same level of facial detail enhancement as GFPGAN.

Pros of GPEN

- Focuses on face restoration and enhancement, offering more specialized functionality for facial images

- Includes pre-trained models for immediate use, reducing setup time

- Provides a user-friendly GUI interface for easier interaction

Cons of GPEN

- Limited to face-related tasks, less versatile than AnimeGAN2's general image stylization

- Requires more computational resources due to its complex architecture

- Less active development and community support compared to AnimeGAN2

Code Comparison

GPEN example:

from gpen import FaceRestoration

model = FaceRestoration()

restored_face = model.process(input_image)

AnimeGAN2 example:

from animegan2 import Generator

model = Generator()

anime_style_image = model(input_image)

Both repositories offer image processing capabilities, but GPEN is tailored for face restoration while AnimeGAN2 focuses on anime-style image conversion. GPEN provides a more specialized solution for facial images, while AnimeGAN2 offers broader stylization options for various types of images. The choice between the two depends on the specific use case and desired output style.

Convert  designs to code with AI

designs to code with AI

Introducing Visual Copilot: A new AI model to turn Figma designs to high quality code using your components.

Try Visual CopilotREADME

PyTorch Implementation of AnimeGANv2

Updates

-

2021-10-17Add weights for FacePortraitV2.

-

2021-11-07Thanks to ak92501, a web demo is integrated to Huggingface Spaces with Gradio. -

2021-11-07Thanks to xhlulu, thetorch.hubmodel is now available. See Torch Hub Usage.

Basic Usage

Inference

python test.py --input_dir [image_folder_path] --device [cpu/cuda]

Torch Hub Usage

You can load the model via torch.hub:

import torch

model = torch.hub.load("bryandlee/animegan2-pytorch", "generator").eval()

out = model(img_tensor) # BCHW tensor

Currently, the following pretrained shorthands are available:

model = torch.hub.load("bryandlee/animegan2-pytorch:main", "generator", pretrained="celeba_distill")

model = torch.hub.load("bryandlee/animegan2-pytorch:main", "generator", pretrained="face_paint_512_v1")

model = torch.hub.load("bryandlee/animegan2-pytorch:main", "generator", pretrained="face_paint_512_v2")

model = torch.hub.load("bryandlee/animegan2-pytorch:main", "generator", pretrained="paprika")

You can also load the face2paint util function:

from PIL import Image

face2paint = torch.hub.load("bryandlee/animegan2-pytorch:main", "face2paint", size=512)

img = Image.open(...).convert("RGB")

out = face2paint(model, img)

More details about torch.hub is in the torch docs

Weight Conversion from the Original Repo (Tensorflow)

- Install the original repo's dependencies: python 3.6, tensorflow 1.15.0-gpu

- Install torch >= 1.7.1

- Clone the original repo & run

git clone https://github.com/TachibanaYoshino/AnimeGANv2

python convert_weights.py

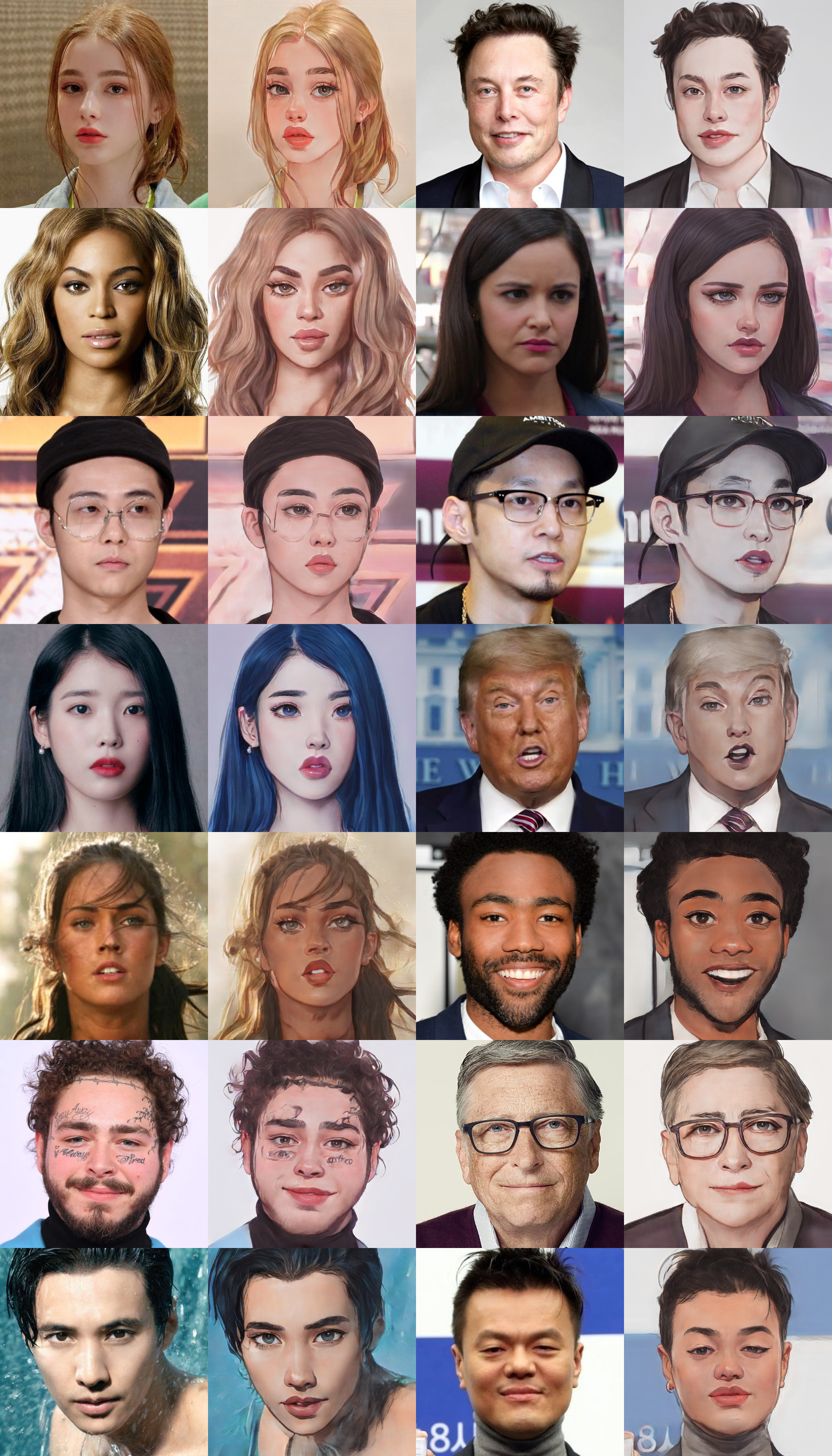

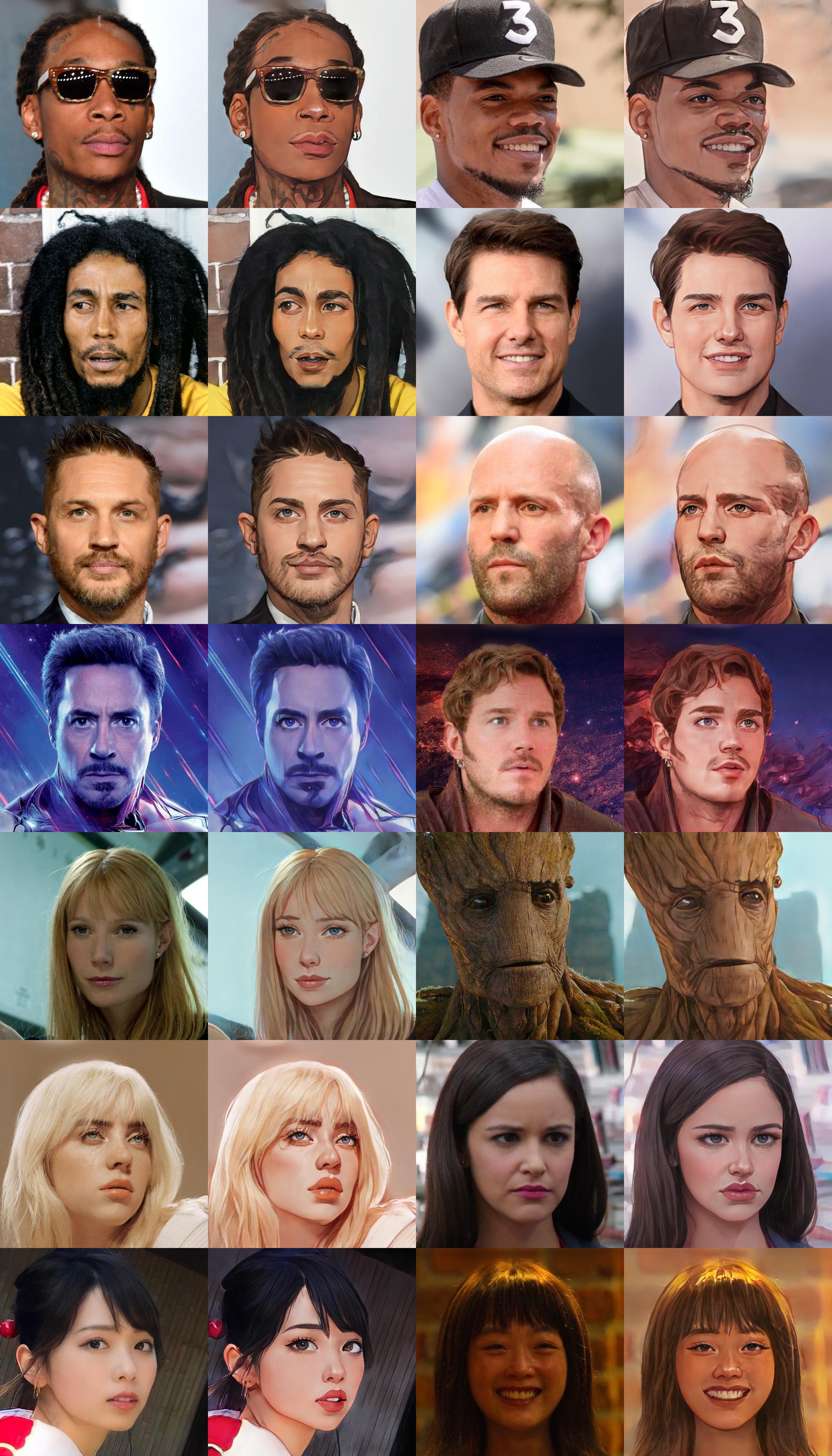

samples

Results from converted `Paprika` style model (input image, original tensorflow result, pytorch result from left to right)

Note: Results from converted weights slightly different due to the bilinear upsample issue

Additional Model Weights

Webtoon Face [ckpt]

samples

Trained on 256x256 face images. Distilled from webtoon face model with L2 + VGG + GAN Loss and CelebA-HQ images.

Face Portrait v1 [ckpt]

Face Portrait v2 [ckpt]

Top Related Projects

[Open Source]. The improved version of AnimeGAN. Landscape photos/videos to anime

Real-ESRGAN aims at developing Practical Algorithms for General Image/Video Restoration.

Official PyTorch repo for JoJoGAN: One Shot Face Stylization

GFPGAN aims at developing Practical Algorithms for Real-world Face Restoration.

Convert  designs to code with AI

designs to code with AI

Introducing Visual Copilot: A new AI model to turn Figma designs to high quality code using your components.

Try Visual Copilot