jetson-inference

Hello AI World guide to deploying deep-learning inference networks and deep vision primitives with TensorRT and NVIDIA Jetson.

Top Related Projects

DeepStream SDK Python bindings and sample applications

Models and examples built with TensorFlow

YOLOv5 🚀 in PyTorch > ONNX > CoreML > TFLite

YOLOv4 / Scaled-YOLOv4 / YOLO - Neural Networks for Object Detection (Windows and Linux version of Darknet )

Mask R-CNN for object detection and instance segmentation on Keras and TensorFlow

ONNX Runtime: cross-platform, high performance ML inferencing and training accelerator

Quick Overview

The dusty-nv/jetson-inference repository is a collection of deep learning inference examples and libraries for NVIDIA Jetson. It provides pre-trained networks for image classification, object detection, and semantic segmentation, along with C++ and Python bindings for deploying these models on Jetson devices.

Pros

- Optimized for NVIDIA Jetson platforms, ensuring efficient performance on edge devices

- Includes a wide range of pre-trained models for various computer vision tasks

- Offers both C++ and Python APIs for flexibility in development

- Provides comprehensive documentation and examples for easy integration

Cons

- Limited to NVIDIA Jetson platforms, not suitable for other hardware

- May require specific versions of CUDA and TensorRT, potentially limiting compatibility

- Some advanced features may require additional setup or dependencies

- Performance may vary depending on the specific Jetson model used

Code Examples

- Image classification using ResNet-18:

import jetson.inference

import jetson.utils

net = jetson.inference.imageNet("resnet-18")

input = jetson.utils.loadImage("path/to/image.jpg")

class_id, confidence = net.Classify(input)

class_desc = net.GetClassDesc(class_id)

print(f"Image is recognized as '{class_desc}' (class #{class_id}) with {confidence * 100:.2f}% confidence")
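A classifier of this kind produces one score per class and reports the class with the highest score. The following is a minimal stand-alone sketch of that selection step. It does not use the Jetson libraries, the function name `top_prediction` and the sample labels are hypothetical, and it assumes scores lie in the 0 to 1 range so that multiplying by 100 gives a percentage.

```python
def top_prediction(scores, labels):
    """Return (class_id, label, percent) for the highest-scoring class."""
    if len(scores) != len(labels):
        raise ValueError("scores and labels must have the same length")
    # Index of the largest score is the predicted class ID.
    class_id = max(range(len(scores)), key=lambda i: scores[i])
    # Scores are assumed to be in the 0..1 range; convert to a percentage.
    return class_id, labels[class_id], scores[class_id] * 100.0


# Hypothetical scores for a three-class model.
labels = ["apple", "orange", "banana"]
scores = [0.05, 0.90, 0.05]

class_id, label, percent = top_prediction(scores, labels)
print(f"recognized as '{label}' (class #{class_id}) with {percent:.2f}% confidence")
```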

- Object detection using SSD-Mobilenet:

import jetson.inference

import jetson.utils

net = jetson.inference.detectNet("ssd-mobilenet-v2")

input = jetson.utils.videoSource("path/to/video.mp4")

output = jetson.utils.videoOutput("output.mp4")

while True:

    img = input.Capture()

    detections = net.Detect(img)

    for detection in detections:

        print(f"Detected {net.GetClassDesc(detection.ClassID)} (ID: {detection.ClassID})")

    output.Render(img)

    if not input.IsStreaming():

        break
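Detection results are usually filtered before they are acted on, for example by confidence or by class. The sketch below shows one way to do that with a stand-in `Detection` dataclass so that it runs without a Jetson. The `ClassID` field matches the attribute used above; `Confidence` and the box fields are assumptions for illustration, and `filter_detections` is a hypothetical helper, not part of the library.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """Stand-in for a detection result (illustrative, not the library's class)."""
    ClassID: int
    Confidence: float
    Left: float
    Top: float
    Right: float
    Bottom: float


def filter_detections(detections, min_confidence=0.5, keep_classes=None):
    """Keep detections at or above min_confidence, optionally limited to some class IDs."""
    kept = []
    for det in detections:
        if det.Confidence < min_confidence:
            continue
        if keep_classes is not None and det.ClassID not in keep_classes:
            continue
        kept.append(det)
    return kept


# Made-up sample results.
sample = [
    Detection(1, 0.92, 10, 20, 110, 220),
    Detection(1, 0.31, 300, 40, 340, 90),
    Detection(18, 0.77, 150, 60, 260, 200),
]

confident = filter_detections(sample, min_confidence=0.5)
only_class_1 = filter_detections(sample, min_confidence=0.5, keep_classes={1})
print(len(confident), len(only_class_1))
```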

- Semantic segmentation using FCN-ResNet18:

import jetson.inference

import jetson.utils

net = jetson.inference.segNet("fcn-resnet18-cityscapes-1024x512")

input = jetson.utils.loadImage("path/to/image.jpg")

output = jetson.utils.cudaAllocMapped(width=input.width, height=input.height, format="rgb8")

net.Process(input)  # run segmentation on the input image

net.Overlay(output)  # blend the class colors over the input into the output buffer

jetson.utils.saveImage("segmentation_output.jpg", output)
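Conceptually, semantic segmentation assigns each pixel the class with the highest score. The sketch below illustrates that step on a tiny hand-written score grid in plain Python. It is not how the library implements it, and `class_mask` and `class_histogram` are hypothetical names.

```python
def class_mask(scores):
    """scores[y][x] is a list of per-class scores; return mask[y][x] = best class index."""
    return [
        [max(range(len(pixel)), key=lambda c: pixel[c]) for pixel in row]
        for row in scores
    ]


def class_histogram(mask, num_classes):
    """Count how many pixels were assigned to each class."""
    counts = [0] * num_classes
    for row in mask:
        for class_id in row:
            counts[class_id] += 1
    return counts


# A made-up 2x2 image with three classes.
scores = [
    [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1]],
    [[0.3, 0.3, 0.4], [0.6, 0.3, 0.1]],
]

mask = class_mask(scores)
print(mask)                      # [[0, 1], [2, 0]]
print(class_histogram(mask, 3))  # [2, 1, 1]
```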

Getting Started

- Clone the repository (with its submodules):

git clone --recursive https://github.com/dusty-nv/jetson-inference

cd jetson-inference

- Build the project:

mkdir build

cd build

cmake ../

make -j$(nproc)

sudo make install

sudo ldconfig

- Run an example:

cd aarch64/bin

./imagenet-console images/orange_0.jpg images/test/output.jpg

Competitor Comparisons

DeepStream SDK Python bindings and sample applications

Pros of deepstream_python_apps

- Offers a more comprehensive pipeline for video analytics and streaming

- Provides better scalability for multi-stream processing

- Integrates seamlessly with other NVIDIA SDKs and tools

Cons of deepstream_python_apps

- Steeper learning curve due to more complex architecture

- Less focus on single-image inference tasks

- Requires more setup and configuration

Code Comparison

jetson-inference example:

import jetson.inference

import jetson.utils

net = jetson.inference.detectNet("ssd-mobilenet-v2")

img = jetson.utils.loadImage("image.jpg")

detections = net.Detect(img)

deepstream_python_apps example:

import gi

gi.require_version('Gst', '1.0')

from gi.repository import Gst

pipeline = Gst.parse_launch("filesrc location=video.mp4 ! decodebin ! nvinfer config-file-path=config.txt ! nvvideoconvert ! nvdsosd ! nveglglessink")

pipeline.set_state(Gst.State.PLAYING)

The jetson-inference code focuses on simple image detection, while deepstream_python_apps demonstrates a more complex video processing pipeline using GStreamer.

Models and examples built with TensorFlow

Pros of TensorFlow Models

- Broader scope with a wide range of pre-trained models for various tasks

- Extensive documentation and community support

- Regular updates and contributions from the TensorFlow team and community

Cons of TensorFlow Models

- Steeper learning curve for beginners

- May require more computational resources for some models

- Less focused on specific hardware optimizations (e.g., NVIDIA Jetson)

Code Comparison

Jetson-inference (C++):

#include <jetson-inference/imageNet.h>

imageNet* net = imageNet::Create("googlenet");

float confidence = 0.0f;

const int img_class = net->Classify(image, width, height, &confidence);

TensorFlow Models (Python):

import tensorflow as tf

from official.vision.image_classification import resnet_model

model = resnet_model.resnet50(num_classes=1000)

predictions = model(images, training=False)

Summary

Jetson-inference is tailored for NVIDIA Jetson platforms, offering optimized performance and ease of use for specific hardware. TensorFlow Models provides a broader range of pre-trained models and greater flexibility but may require more setup and resources. The choice depends on your specific hardware, project requirements, and familiarity with the respective ecosystems.

YOLOv5 🚀 in PyTorch > ONNX > CoreML > TFLite

Pros of YOLOv5

- Highly optimized and faster inference times

- More extensive documentation and community support

- Regular updates and improvements to the model architecture

Cons of YOLOv5

- Less focus on Jetson-specific optimizations

- May require more setup for deployment on Jetson devices

- Potentially higher resource requirements for training

Code Comparison

YOLOv5:

import torch

model = torch.hub.load('ultralytics/yolov5', 'yolov5s')

results = model('image.jpg')

results.print()

jetson-inference:

import jetson.inference

import jetson.utils

net = jetson.inference.detectNet("ssd-mobilenet-v2")

img = jetson.utils.loadImage("image.jpg")

detections = net.Detect(img)

The YOLOv5 code is more concise and uses PyTorch's ecosystem, while jetson-inference provides a more specialized API for Jetson devices. YOLOv5 offers flexibility in model selection, whereas jetson-inference focuses on optimized models for Jetson hardware. Both repositories serve different purposes, with YOLOv5 being more general-purpose and jetson-inference tailored for NVIDIA Jetson platforms.

YOLOv4 / Scaled-YOLOv4 / YOLO - Neural Networks for Object Detection (Windows and Linux version of Darknet )

Pros of darknet

- More extensive support for various neural network architectures and datasets

- Active community development with frequent updates and contributions

- Broader platform compatibility, including Windows and Linux

Cons of darknet

- Steeper learning curve for beginners due to more complex configuration options

- Less optimized for Jetson platforms compared to jetson-inference

Code Comparison

darknet:

network net = parse_network_cfg(cfgfile);

load_weights(&net, weightfile);

layer l = net.layers[net.n - 1];

jetson-inference:

detectNet* net = detectNet::Create("ssd-mobilenet-v2");

float* imgCPU = NULL;

float* imgCUDA = NULL;

cudaMalloc(&imgCUDA, imageSize);

The darknet code focuses on network configuration and weight loading, while jetson-inference emphasizes CUDA-accelerated image processing for Jetson platforms. jetson-inference provides a more streamlined API for deployment on NVIDIA Jetson devices, whereas darknet offers greater flexibility for various deep learning tasks across different platforms.

Mask R-CNN for object detection and instance segmentation on Keras and TensorFlow

Pros of Mask_RCNN

- Specialized in instance segmentation, offering more detailed object detection

- Implements the Mask R-CNN architecture, which is more advanced for certain tasks

- Provides pre-trained models on the COCO dataset, enabling quick start for common object detection

Cons of Mask_RCNN

- More computationally intensive, potentially slower for real-time applications

- Requires more setup and configuration compared to the streamlined Jetson Inference

- Less optimized for edge devices and may not fully utilize Jetson hardware capabilities

Code Comparison

Mask_RCNN:

import mrcnn.model as modellib

model = modellib.MaskRCNN(mode="inference", config=config, model_dir=MODEL_DIR)

model.load_weights(COCO_MODEL_PATH, by_name=True)

results = model.detect([image], verbose=1)

Jetson Inference:

import jetson.inference

import jetson.utils

net = jetson.inference.detectNet("ssd-mobilenet-v2")

img = jetson.utils.loadImage("image.jpg")

detections = net.Detect(img)

The Mask_RCNN code focuses on loading a pre-trained model and performing detection, while Jetson Inference provides a more streamlined API for object detection on Jetson devices.

ONNX Runtime: cross-platform, high performance ML inferencing and training accelerator

Pros of onnxruntime

- Broader platform support beyond NVIDIA hardware

- More extensive ecosystem and community backing

- Flexible deployment options (cloud, edge, mobile)

Cons of onnxruntime

- Less optimized for NVIDIA Jetson devices

- May require more setup and configuration for specific use cases

- Potentially higher learning curve for beginners

Code Comparison

jetson-inference:

import jetson.inference

import jetson.utils

net = jetson.inference.detectNet("ssd-mobilenet-v2")

img = jetson.utils.loadImage("image.jpg")

detections = net.Detect(img)

onnxruntime:

import onnxruntime as ort

import numpy as np

session = ort.InferenceSession("model.onnx")

input_name = session.get_inputs()[0].name

output = session.run(None, {input_name: np.array(image).astype(np.float32)})

Both repositories offer powerful tools for inference, but jetson-inference is more focused on NVIDIA Jetson devices, while onnxruntime provides a more versatile solution for various platforms and deployment scenarios.

README

Deploying Deep Learning

Welcome to our instructional guide for the inference and realtime vision DNN library for NVIDIA Jetson devices. This project uses TensorRT to run optimized networks on GPUs from C++ or Python, and PyTorch for training models.

Supported DNN vision primitives include imageNet for image classification, detectNet for object detection, segNet for semantic segmentation, poseNet for pose estimation, and actionNet for action recognition. Examples are provided for streaming from live camera feeds, making webapps with WebRTC, and support for ROS/ROS2.

Follow the Hello AI World tutorial for running inference and transfer learning onboard your Jetson, including collecting your own datasets, training your own models with PyTorch, and deploying them with TensorRT.

Table of Contents

- Hello AI World

- Jetson AI Lab

- Video Walkthroughs

- API Reference

- Code Examples

- Pre-Trained Models

- System Requirements

- Change Log

> JetPack 6 is now supported on Orin devices (developer.nvidia.com/jetpack)

> Check out the Generative AI and LLM tutorials on Jetson AI Lab!

> See the Change Log for the latest updates and new features.

Hello AI World

Hello AI World can be run completely onboard your Jetson, including live inferencing with TensorRT and transfer learning with PyTorch. For installation instructions, see System Setup. It's then recommended to start with the Inference section to familiarize yourself with the concepts, before diving into Training your own models.

System Setup

Inference

- Image Classification

- Object Detection

- Semantic Segmentation

- Pose Estimation

- Action Recognition

- Background Removal

- Monocular Depth

Training

- Transfer Learning with PyTorch

- Classification/Recognition (ResNet-18)

- Object Detection (SSD-Mobilenet)

WebApp Frameworks

Appendix

Jetson AI Lab

The Jetson AI Lab has additional tutorials on LLMs, Vision Transformers (ViT), and Vision Language Models (VLM) that run on Orin (and in some cases Xavier). Check out some of these:

NanoOWL - Open Vocabulary Object Detection ViT (container:

nanoowl)

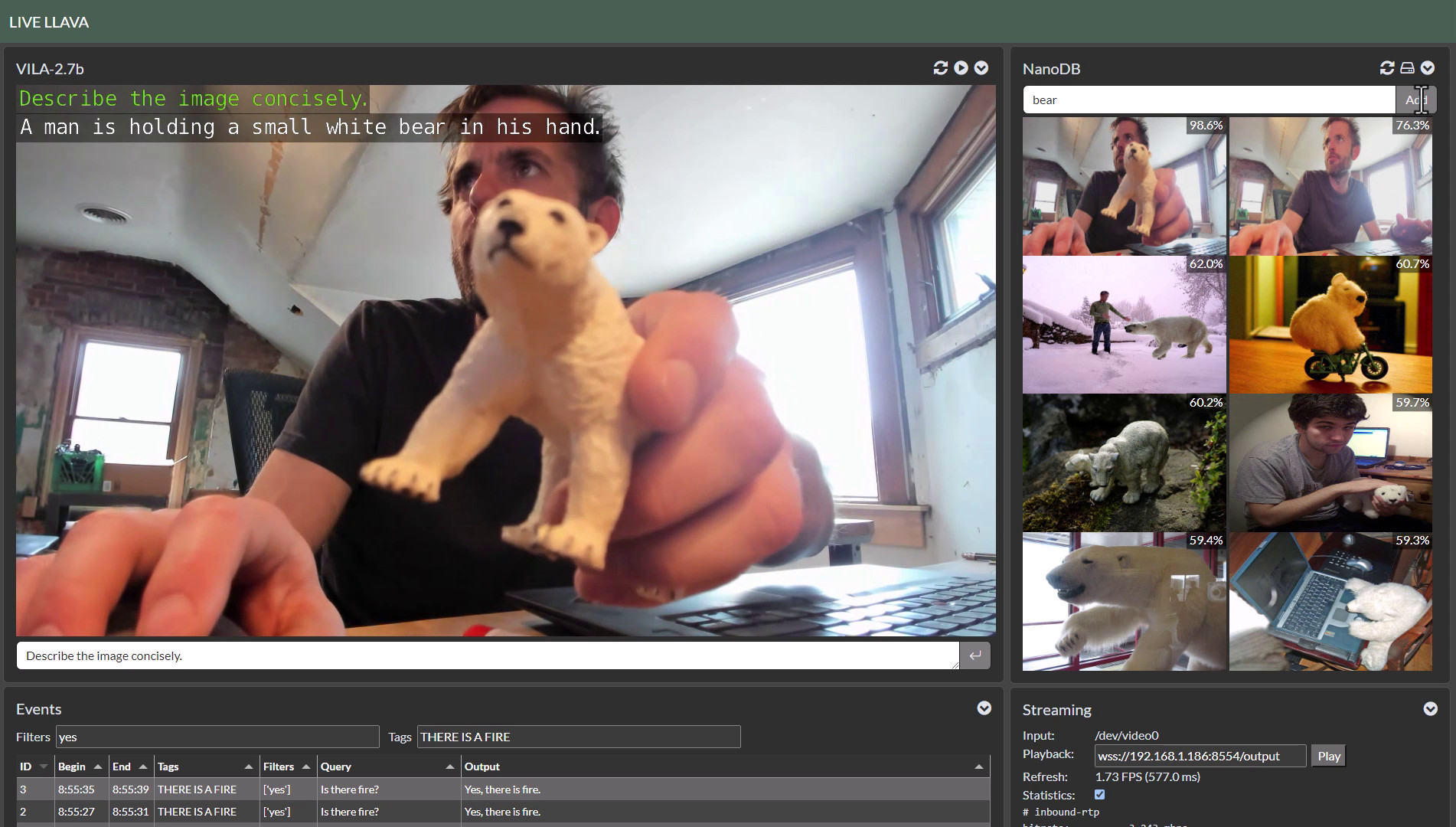

Live Llava on Jetson AGX Orin (container:

local_llm)

Live Llava 2.0 - VILA + Multimodal NanoDB on Jetson Orin (container:

local_llm)

Realtime Multimodal VectorDB on NVIDIA Jetson (container:

nanodb)

Video Walkthroughs

Below are screencasts of Hello AI World that were recorded for the Jetson AI Certification course:

| Description | Video |

|---|---|

| Hello AI World Setup: Download and run the Hello AI World container on Jetson Nano, test your camera feed, and see how to stream it over the network via RTP. | <img src=https://github.com/dusty-nv/jetson-inference/raw/master/docs/images/thumbnail_setup.jpg width="750"> |

| Image Classification Inference: Code your own Python program for image classification using Jetson Nano and deep learning, then experiment with realtime classification on a live camera stream. | <img src=https://github.com/dusty-nv/jetson-inference/raw/master/docs/images/thumbnail_imagenet.jpg width="750"> |

| Training Image Classification Models: Learn how to train image classification models with PyTorch onboard Jetson Nano, and collect your own classification datasets to create custom models. | <img src=https://github.com/dusty-nv/jetson-inference/raw/master/docs/images/thumbnail_imagenet_training.jpg width="750"> |

| Object Detection Inference: Code your own Python program for object detection using Jetson Nano and deep learning, then experiment with realtime detection on a live camera stream. | <img src=https://github.com/dusty-nv/jetson-inference/raw/master/docs/images/thumbnail_detectnet.jpg width="750"> |

| Training Object Detection Models: Learn how to train object detection models with PyTorch onboard Jetson Nano, and collect your own detection datasets to create custom models. | <img src=https://github.com/dusty-nv/jetson-inference/raw/master/docs/images/thumbnail_detectnet_training.jpg width="750"> |

| Semantic Segmentation: Experiment with fully-convolutional semantic segmentation networks on Jetson Nano, and run realtime segmentation on a live camera stream. | <img src=https://github.com/dusty-nv/jetson-inference/raw/master/docs/images/thumbnail_segnet.jpg width="750"> |

API Reference

Below are links to reference documentation for the C++ and Python libraries from the repo:

jetson-inference

| | C++ | Python |

|---|---|---|

| Image Recognition | imageNet | imageNet |

| Object Detection | detectNet | detectNet |

| Segmentation | segNet | segNet |

| Pose Estimation | poseNet | poseNet |

| Action Recognition | actionNet | actionNet |

| Background Removal | backgroundNet | backgroundNet |

| Monocular Depth | depthNet | depthNet |

jetson-utils

These libraries can be used in external projects by linking to libjetson-inference and libjetson-utils.

Code Examples

Introductory code walkthroughs of using the library are covered during these steps of the Hello AI World tutorial:

Additional C++ and Python samples for running the networks on images and live camera streams can be found here:

| | C++ | Python |

|---|---|---|

| Image Recognition | imagenet.cpp | imagenet.py |

| Object Detection | detectnet.cpp | detectnet.py |

| Segmentation | segnet.cpp | segnet.py |

| Pose Estimation | posenet.cpp | posenet.py |

| Action Recognition | actionnet.cpp | actionnet.py |

| Background Removal | backgroundnet.cpp | backgroundnet.py |

| Monocular Depth | depthnet.cpp | depthnet.py |

note: see the Array Interfaces section for using memory with other Python libraries (like NumPy, PyTorch, etc.)

These examples will automatically be compiled while Building the Project from Source, and are able to run the pre-trained models listed below in addition to custom models provided by the user. Launch each example with --help for usage info.
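As a rough illustration of how these samples are launched, the sketch below assembles a command line for one of the Python examples. It assumes the `--network=` flag that selects a model by its CLI argument from the tables below, followed by positional input and output paths. `build_command` is a hypothetical helper and the file names are placeholders. The command is only printed here, not executed.

```python
import shlex


def build_command(program, network, input_uri, output_uri=None):
    """Assemble an argument list for one of the sample programs."""
    argv = [program, f"--network={network}", input_uri]
    if output_uri is not None:
        argv.append(output_uri)
    return argv


# Placeholder file names; substitute your own image or video paths.
cmd = build_command("detectnet.py", "ssd-mobilenet-v2", "input.jpg", "output.jpg")
print(shlex.join(cmd))

# Every sample accepts --help for its full usage text.
help_cmd = ["detectnet.py", "--help"]
print(shlex.join(help_cmd))
```

The resulting list can be passed to `subprocess.run` on a device where the project has been built and installed.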

Pre-Trained Models

The project comes with a number of pre-trained models that are available to use and will be automatically downloaded:

Image Recognition

| Network | CLI argument | NetworkType enum |

|---|---|---|

| AlexNet | alexnet | ALEXNET |

| GoogleNet | googlenet | GOOGLENET |

| GoogleNet-12 | googlenet-12 | GOOGLENET_12 |

| ResNet-18 | resnet-18 | RESNET_18 |

| ResNet-50 | resnet-50 | RESNET_50 |

| ResNet-101 | resnet-101 | RESNET_101 |

| ResNet-152 | resnet-152 | RESNET_152 |

| VGG-16 | vgg-16 | VGG_16 |

| VGG-19 | vgg-19 | VGG_19 |

| Inception-v4 | inception-v4 | INCEPTION_V4 |

Object Detection

| Model | CLI argument | NetworkType enum | Object classes |

|---|---|---|---|

| SSD-Mobilenet-v1 | ssd-mobilenet-v1 | SSD_MOBILENET_V1 | 91 (COCO classes) |

| SSD-Mobilenet-v2 | ssd-mobilenet-v2 | SSD_MOBILENET_V2 | 91 (COCO classes) |

| SSD-Inception-v2 | ssd-inception-v2 | SSD_INCEPTION_V2 | 91 (COCO classes) |

| TAO PeopleNet | peoplenet | PEOPLENET | person, bag, face |

| TAO PeopleNet (pruned) | peoplenet-pruned | PEOPLENET_PRUNED | person, bag, face |

| TAO DashCamNet | dashcamnet | DASHCAMNET | person, car, bike, sign |

| TAO TrafficCamNet | trafficcamnet | TRAFFICCAMNET | person, car, bike, sign |

| TAO FaceDetect | facedetect | FACEDETECT | face |

Legacy Detection Models

| Model | CLI argument | NetworkType enum | Object classes |

|---|---|---|---|

| DetectNet-COCO-Dog | coco-dog | COCO_DOG | dogs |

| DetectNet-COCO-Bottle | coco-bottle | COCO_BOTTLE | bottles |

| DetectNet-COCO-Chair | coco-chair | COCO_CHAIR | chairs |

| DetectNet-COCO-Airplane | coco-airplane | COCO_AIRPLANE | airplanes |

| ped-100 | pednet | PEDNET | pedestrians |

| multiped-500 | multiped | PEDNET_MULTI | pedestrians, luggage |

| facenet-120 | facenet | FACENET | faces |

Semantic Segmentation

| Dataset | Resolution | CLI Argument | Accuracy | Jetson Nano | Jetson Xavier |

|---|---|---|---|---|---|

| Cityscapes | 512x256 | fcn-resnet18-cityscapes-512x256 | 83.3% | 48 FPS | 480 FPS |

| Cityscapes | 1024x512 | fcn-resnet18-cityscapes-1024x512 | 87.3% | 12 FPS | 175 FPS |

| Cityscapes | 2048x1024 | fcn-resnet18-cityscapes-2048x1024 | 89.6% | 3 FPS | 47 FPS |

| DeepScene | 576x320 | fcn-resnet18-deepscene-576x320 | 96.4% | 26 FPS | 360 FPS |

| DeepScene | 864x480 | fcn-resnet18-deepscene-864x480 | 96.9% | 14 FPS | 190 FPS |

| Multi-Human | 512x320 | fcn-resnet18-mhp-512x320 | 86.5% | 34 FPS | 370 FPS |

| Multi-Human | 640x360 | fcn-resnet18-mhp-640x360 | 87.1% | 23 FPS | 325 FPS |

| Pascal VOC | 320x320 | fcn-resnet18-voc-320x320 | 85.9% | 45 FPS | 508 FPS |

| Pascal VOC | 512x320 | fcn-resnet18-voc-512x320 | 88.5% | 34 FPS | 375 FPS |

| SUN RGB-D | 512x400 | fcn-resnet18-sun-512x400 | 64.3% | 28 FPS | 340 FPS |

| SUN RGB-D | 640x512 | fcn-resnet18-sun-640x512 | 65.1% | 17 FPS | 224 FPS |

- If the resolution is omitted from the CLI argument, the lowest resolution model is loaded

- Accuracy indicates the pixel classification accuracy across the model's validation dataset

- Performance is measured for GPU FP16 mode with JetPack 4.2.1, nvpmodel 0 (MAX-N)
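The first two notes above can be sketched in plain Python: picking the lowest-resolution model when the resolution is omitted, and computing pixel classification accuracy against a ground-truth mask. This illustrates the described behaviour only and is not the library's code. `resolve_model` and `pixel_accuracy` are hypothetical names, and only a subset of the table is included.

```python
# Subset of the CLI arguments from the table above.
SEGMENTATION_MODELS = [
    "fcn-resnet18-cityscapes-512x256",
    "fcn-resnet18-cityscapes-1024x512",
    "fcn-resnet18-cityscapes-2048x1024",
    "fcn-resnet18-voc-320x320",
    "fcn-resnet18-voc-512x320",
]


def pixel_count(model_name):
    """Number of pixels implied by the trailing WIDTHxHEIGHT suffix."""
    width, height = model_name.rsplit("-", 1)[1].split("x")
    return int(width) * int(height)


def resolve_model(argument):
    """Return the full model name, defaulting to the lowest resolution."""
    if argument in SEGMENTATION_MODELS:
        return argument
    candidates = [m for m in SEGMENTATION_MODELS if m.rsplit("-", 1)[0] == argument]
    if not candidates:
        raise ValueError(f"unknown segmentation model: {argument}")
    return min(candidates, key=pixel_count)


def pixel_accuracy(predicted, truth):
    """Fraction of pixels whose predicted class matches the ground truth."""
    total = correct = 0
    for pred_row, true_row in zip(predicted, truth):
        for pred, true in zip(pred_row, true_row):
            total += 1
            correct += int(pred == true)
    if total == 0:
        raise ValueError("masks are empty")
    return correct / total


print(resolve_model("fcn-resnet18-cityscapes"))
print(pixel_accuracy([[0, 1], [2, 0]], [[0, 1], [1, 0]]))
```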

Legacy Segmentation Models

| Network | CLI Argument | NetworkType enum | Classes |

|---|---|---|---|

| Cityscapes (2048x2048) | fcn-alexnet-cityscapes-hd | FCN_ALEXNET_CITYSCAPES_HD | 21 |

| Cityscapes (1024x1024) | fcn-alexnet-cityscapes-sd | FCN_ALEXNET_CITYSCAPES_SD | 21 |

| Pascal VOC (500x356) | fcn-alexnet-pascal-voc | FCN_ALEXNET_PASCAL_VOC | 21 |

| Synthia (CVPR16) | fcn-alexnet-synthia-cvpr | FCN_ALEXNET_SYNTHIA_CVPR | 14 |

| Synthia (Summer-HD) | fcn-alexnet-synthia-summer-hd | FCN_ALEXNET_SYNTHIA_SUMMER_HD | 14 |

| Synthia (Summer-SD) | fcn-alexnet-synthia-summer-sd | FCN_ALEXNET_SYNTHIA_SUMMER_SD | 14 |

| Aerial-FPV (1280x720) | fcn-alexnet-aerial-fpv-720p | FCN_ALEXNET_AERIAL_FPV_720p | 2 |

Pose Estimation

| Model | CLI argument | NetworkType enum | Keypoints |

|---|---|---|---|

| Pose-ResNet18-Body | resnet18-body | RESNET18_BODY | 18 |

| Pose-ResNet18-Hand | resnet18-hand | RESNET18_HAND | 21 |

| Pose-DenseNet121-Body | densenet121-body | DENSENET121_BODY | 18 |

Action Recognition

| Model | CLI argument | Classes |

|---|---|---|

| Action-ResNet18-Kinetics | resnet18 | 1040 |

| Action-ResNet34-Kinetics | resnet34 | 1040 |

Recommended System Requirements

- Jetson Nano Developer Kit with JetPack 4.2 or newer (Ubuntu 18.04 aarch64).

- Jetson Nano 2GB Developer Kit with JetPack 4.4.1 or newer (Ubuntu 18.04 aarch64).

- Jetson Orin Nano Developer Kit with JetPack 5.0 or newer (Ubuntu 20.04 aarch64).

- Jetson Xavier NX Developer Kit with JetPack 4.4 or newer (Ubuntu 18.04 aarch64).

- Jetson AGX Xavier Developer Kit with JetPack 4.0 or newer (Ubuntu 18.04 aarch64).

- Jetson AGX Orin Developer Kit with JetPack 5.0 or newer (Ubuntu 20.04 aarch64).

- Jetson TX2 Developer Kit with JetPack 3.0 or newer (Ubuntu 16.04 aarch64).

- Jetson TX1 Developer Kit with JetPack 2.3 or newer (Ubuntu 16.04 aarch64).
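The list above can be turned into a quick check of whether an installed JetPack version meets the recommended minimum for a given board. This is a minimal sketch: the table is copied from the list, `meets_recommendation` is a hypothetical helper, and the installed version is passed in as a string rather than detected from the system.

```python
# Minimum recommended JetPack version per developer kit (from the list above).
MIN_JETPACK = {
    "Jetson Nano": "4.2",
    "Jetson Nano 2GB": "4.4.1",
    "Jetson Orin Nano": "5.0",
    "Jetson Xavier NX": "4.4",
    "Jetson AGX Xavier": "4.0",
    "Jetson AGX Orin": "5.0",
    "Jetson TX2": "3.0",
    "Jetson TX1": "2.3",
}


def parse_version(text):
    """Turn '4.4.1' into (4, 4, 1) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


def meets_recommendation(device, installed):
    """True if the installed JetPack version is at least the listed minimum."""
    minimum = parse_version(MIN_JETPACK[device])
    have = parse_version(installed)
    # Pad the shorter tuple with zeros so '4.4' compares as '4.4.0'.
    width = max(len(minimum), len(have))
    minimum += (0,) * (width - len(minimum))
    have += (0,) * (width - len(have))
    return have >= minimum


print(meets_recommendation("Jetson Nano", "4.6.1"))
print(meets_recommendation("Jetson Nano 2GB", "4.4"))
```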

The Transfer Learning with PyTorch section of the tutorial is written from the perspective of running PyTorch onboard Jetson for training DNNs; however, the same PyTorch code can be used on a PC, server, or cloud instance with an NVIDIA discrete GPU for faster training.

Extra Resources

In this area, links and resources for deep learning are listed:

- ros_deep_learning - TensorRT inference ROS nodes

- NVIDIA AI IoT - NVIDIA Jetson GitHub repositories

- Jetson eLinux Wiki - Jetson eLinux Wiki

Two Days to a Demo (DIGITS)

note: the DIGITS/Caffe tutorial below is deprecated. It's recommended to follow the Transfer Learning with PyTorch tutorial from Hello AI World.

Original DIGITS tutorial (deprecated)

The DIGITS tutorial covers training DNNs in the cloud or on a PC and running inference on the Jetson with TensorRT. It can take roughly two days or more depending on system setup, downloading the datasets, and the training speed of your GPU.

- DIGITS Workflow

- DIGITS System Setup

- Setting up Jetson with JetPack

- Building the Project from Source

- Classifying Images with ImageNet

- Using the Console Program on Jetson

- Coding Your Own Image Recognition Program

- Running the Live Camera Recognition Demo

- Re-Training the Network with DIGITS

- Downloading Image Recognition Dataset

- Customizing the Object Classes

- Importing Classification Dataset into DIGITS

- Creating Image Classification Model with DIGITS

- Testing Classification Model in DIGITS

- Downloading Model Snapshot to Jetson

- Loading Custom Models on Jetson

- Locating Objects with DetectNet

- Detection Data Formatting in DIGITS

- Downloading the Detection Dataset

- Importing the Detection Dataset into DIGITS

- Creating DetectNet Model with DIGITS

- Testing DetectNet Model Inference in DIGITS

- Downloading the Detection Model to Jetson

- DetectNet Patches for TensorRT

- Detecting Objects from the Command Line

- Multi-class Object Detection Models

- Running the Live Camera Detection Demo on Jetson

- Semantic Segmentation with SegNet

© 2016-2019 NVIDIA | Table of Contents
