gocache

☔️ A complete Go cache library that brings you multiple ways of managing your caches

Top Related Projects

Simple and powerful toolkit for BoltDB

An in-memory key:value store/cache (similar to Memcached) library for Go, suitable for single-machine applications.

Efficient cache for gigabytes of data written in Go.

An in-memory cache library for golang. It supports multiple eviction policies: LRU, LFU, ARC

A cache library for Go with zero GC overhead.

Go Memcached client library #golang

Quick Overview

The eko/gocache project is an extendable cache library for Go (Golang) applications. It provides a common API over several cache stores, both in-memory (for example Ristretto, BigCache, go-cache) and remote (for example Redis, Memcache), with support for expiration times and tag-based invalidation.

Pros

- Simplicity: The library has a straightforward and easy-to-use API, making it accessible for developers of all skill levels.

- Performance: gocache is designed to be fast and efficient, with low overhead and minimal impact on application performance.

- Flexibility: The library supports multiple cache eviction policies, including LRU (Least Recently Used), LFU (Least Frequently Used), and TTL (Time-to-Live), allowing developers to choose the most appropriate strategy for their use case.

- Extensibility: The library is designed to be easily extensible, with the ability to add custom cache providers and eviction policies.

Cons

- Limited Features: While gocache provides a solid set of core features, it may lack some more advanced functionality found in other cache libraries, such as distributed caching or support for complex data structures.

- Lack of Documentation: The project's documentation could be more comprehensive, making it potentially more challenging for new users to get started.

- Limited Community: Compared to some other popular Go cache libraries, gocache has a smaller community and may have fewer contributors and resources available.

- Potential Performance Concerns: While the library is designed to be fast, the performance of the cache may be affected by the specific use case and the chosen eviction policy.

Code Examples

Here are a few examples of how to use the gocache library:

- Basic Cache Usage:

import "github.com/eko/gocache/cache"

// Create a new cache instance

c := cache.New()

// Set a value in the cache

c.Set("key", "value", nil)

// Retrieve a value from the cache

value, _ := c.Get("key")

- Cache with Expiration:

import (

"time"

"github.com/eko/gocache/cache"

)

// Create a new cache instance with a TTL (Time-to-Live)

c := cache.New(cache.WithTTL(time.Minute))

// Set a value in the cache with a TTL

c.Set("key", "value", cache.WithTTL(time.Minute))

// Retrieve a value from the cache

value, _ := c.Get("key")

- Cache with LRU Eviction Policy:

import "github.com/eko/gocache/cache"

// Create a new cache instance with LRU eviction policy

c := cache.New(cache.WithMaxEntries(100), cache.WithEviction(cache.LRU))

// Set values in the cache

c.Set("key1", "value1", nil)

c.Set("key2", "value2", nil)

c.Set("key3", "value3", nil)

// Retrieve a value from the cache

value, _ := c.Get("key1")

- Cache with Custom Provider:

import (

"github.com/eko/gocache/cache"

"github.com/eko/gocache/store"

)

// Create a custom cache provider

type MyProvider struct {}

func (p *MyProvider) Get(key string) (interface{}, error) {

// Implement custom logic to retrieve the value

return "value", nil

}

func (p *MyProvider) Set(key string, value interface{}, options *store.Options) error {

// Implement custom logic to store the value

return nil

}

// Create a new cache instance with the custom provider

c := cache.New(cache.WithProvider(&MyProvider{}))

Getting Started

To get started with the gocache library, follow these steps:

- Install the library using Go modules:

go get github.com/eko/gocache/lib/v4

- Import the library in your Go code:

import "github.com/eko/gocache/lib/v4/cache"

Competitor Comparisons

Simple and powerful toolkit for BoltDB

Pros of Storm

- Storm provides a more comprehensive set of features, including support for transactions, indexes, and advanced querying capabilities.

- Storm has a larger and more active community, with more contributors and a wider range of use cases.

- Storm offers better performance and scalability compared to gocache, especially for larger datasets and more complex queries.

Cons of Storm

- Storm has a steeper learning curve and may require more time to set up and configure compared to gocache.

- Storm's API and syntax may be more complex and less intuitive for developers familiar with simpler key-value stores.

- Storm may have a higher resource footprint compared to gocache, especially for smaller-scale applications.

Code Comparison

gocache:

cache := gocache.New(gocache.Options{

Expiration: time.Minute * 5,

CleanupInterval: time.Minute,

})

cache.Set("key", "value")

value, _ := cache.Get("key")

Storm:

db, _ := storm.Open("database.db")

defer db.Close()

type User struct {

ID int `storm:"id,increment"`

Name string `storm:"index"`

}

db.Save(&User{Name: "John Doe"})

var user User

db.One("Name", "John Doe", &user)

An in-memory key:value store/cache (similar to Memcached) library for Go, suitable for single-machine applications.

Pros of go-cache

- Provides a simple and lightweight in-memory cache implementation.

- Supports expiration of cache entries with customizable TTL.

- Offers thread-safe access to the cache.

Cons of go-cache

- Limited functionality compared to eko/gocache, which provides more advanced features like distributed caching.

- Lacks support for advanced cache eviction policies and cache invalidation.

Code Comparison

eko/gocache:

cache := gocache.New(gocache.Options{

Expiration: time.Minute * 5,

CleanupInterval: time.Minute,

})

cache.Set("key", "value", 0)

value, found := cache.Get("key")

patrickmn/go-cache:

cache := go_cache.New(5*time.Minute, 10*time.Minute)

cache.Set("key", "value", go_cache.DefaultExpiration)

value, found := cache.Get("key")

Efficient cache for gigabytes of data written in Go.

Pros of BigCache

- BigCache is designed for high-performance, low-latency caching, making it suitable for applications with high traffic and strict performance requirements.

- BigCache provides a simple and efficient API, making it easy to integrate into existing projects.

- BigCache supports various eviction policies, allowing you to customize the cache behavior to suit your application's needs.

Cons of BigCache

- BigCache is a more specialized cache solution, while gocache provides a more general-purpose caching library with additional features.

- BigCache may have a steeper learning curve compared to gocache, as it requires a deeper understanding of its internal workings to optimize its usage.

Code Comparison

gocache:

cache := gocache.New(gocache.Config{

MaxSize: 100 * gocache.MB,

TTL: time.Minute * 5,

CleanupRate: time.Minute,

})

cache.Set("key", "value")

value, ok := cache.Get("key")

BigCache:

cache, _ := bigcache.NewBigCache(bigcache.DefaultConfig(10 * time.Minute))

cache.Set("key", []byte("value"))

value, err := cache.Get("key")

An in-memory cache library for golang. It supports multiple eviction policies: LRU, LFU, ARC

Pros of bluele/gcache

- Supports multiple cache eviction policies, including LRU, LFU, and ARC, allowing for more flexible cache management.

- Provides a simple and intuitive API for interacting with the cache, making it easy to integrate into existing projects.

- Includes support for expiration of cache entries, which can be useful for caching data that has a limited lifespan.

Cons of bluele/gcache

- Lacks some of the advanced features and customization options available in gocache, such as the ability to define custom cache keys and values.

- May have a slightly higher overhead compared to gocache, as it includes additional functionality and complexity.

- The documentation and community support for bluele/gcache may not be as extensive as that of gocache.

Code Comparison

Here's a brief comparison of the code for setting a cache value in both repositories:

gocache:

cache := gocache.New(gocache.WithExpiration(time.Minute))

cache.Set("key", "value", 60*time.Second)

gcache:

cache := gcache.New(100).LRU().Expiration(time.Minute).Build()

cache.Set("key", "value")

As you can see, the gocache example includes an explicit expiration time, while the gcache example relies on the default expiration time set when creating the cache instance.

A cache library for Go with zero GC overhead.

Pros of FreeCache

- Memory Efficiency: FreeCache is designed to be more memory-efficient than gocache, making it a better choice for applications with tight memory constraints.

- Faster Lookups: FreeCache claims to have faster lookup times compared to gocache, which can be beneficial for high-performance applications.

- Concurrent Access: FreeCache supports concurrent access to the cache, allowing for better scalability in multi-threaded environments.

Cons of FreeCache

- Fewer Features: FreeCache has a more limited feature set compared to gocache, lacking some of the more advanced functionality like expiration callbacks and batch operations.

- Smaller Community: gocache has a larger and more active community, which can mean more support, documentation, and third-party integrations.

- Lack of Benchmarks: While FreeCache claims to be faster, there are fewer independent benchmarks available to verify these claims compared to the more established gocache.

Code Comparison

Here's a brief comparison of the code for setting a key-value pair in both libraries:

gocache:

cache := gocache.New(1000, 0)

cache.Set("key", "value", 60 * time.Second)

FreeCache:

cache := freecache.NewCache(1000 * 1024)

cache.Set([]byte("key"), []byte("value"), 60)

The main differences are that FreeCache uses byte slices for keys and values, and the expiration time is passed to Set() as an integer number of seconds rather than as a time.Duration.

Go Memcached client library #golang

Pros of gomemcache

- Mature and well-established library with a large user base and active development.

- Provides a simple and straightforward API for interacting with Memcached.

- Supports advanced features like connection pooling and failover.

Cons of gomemcache

- Lacks some of the more advanced features and customization options available in gocache.

- May have a steeper learning curve for developers who are not familiar with Memcached.

Code Comparison

gocache:

cache := gocache.New(gocache.Options{

Expiration: time.Minute * 5,

CleanupInterval: time.Minute,

})

cache.Set("key", "value", 0)

value, _ := cache.Get("key")

gomemcache:

client := memcache.New("localhost:11211")

client.Set(&memcache.Item{

Key: "key",

Value: []byte("value"),

})

item, _ := client.Get("key")

README

Gocache

Guess what Gocache is? A Go cache library. It is an extendable cache library that brings you a lot of features for caching data.

Overview

Here is what it brings in detail:

- Multiple cache stores: currently in memory, Redis, or your own custom store

- A chain cache: use multiple caches in priority order (memory first, then fall back to a shared Redis cache, for instance)

- A loadable cache: lets you provide a callback function that puts your data back in the cache

- A metric cache that lets you store metrics about your cache usage (hits, misses, set successes, set errors, ...)

- A marshaler to automatically marshal/unmarshal your cache values as a struct

- Define default values in stores and override them when setting data

- Cache invalidation by expiration time and/or using tags

- Use of generics

Built-in stores

- Memory (bigcache) (allegro/bigcache)

- Memory (ristretto) (dgraph-io/ristretto)

- Memory (go-cache) (patrickmn/go-cache)

- Memcache (bradfitz/memcache)

- Redis (go-redis/redis)

- Redis (rueidis) (redis/rueidis)

- Freecache (coocood/freecache)

- Pegasus (apache/incubator-pegasus) benchmark

- Hazelcast (hazelcast-go-client/hazelcast)

- More to come soon

Built-in metrics providers

- Prometheus

Installation

To begin working with the latest version of gocache, you can import the library in your project:

go get github.com/eko/gocache/lib/v4

Then install the store(s) you want to use from the available ones:

go get github.com/eko/gocache/store/bigcache/v4

go get github.com/eko/gocache/store/freecache/v4

go get github.com/eko/gocache/store/go_cache/v4

go get github.com/eko/gocache/store/hazelcast/v4

go get github.com/eko/gocache/store/memcache/v4

go get github.com/eko/gocache/store/pegasus/v4

go get github.com/eko/gocache/store/redis/v4

go get github.com/eko/gocache/store/rediscluster/v4

go get github.com/eko/gocache/store/rueidis/v4

go get github.com/eko/gocache/store/ristretto/v4

Then, simply use the following import statements:

import (

"github.com/eko/gocache/lib/v4/cache"

"github.com/eko/gocache/store/redis/v4"

)

If you run into any errors, please be sure to run go mod tidy to clean your go.mod file.

Available cache features in detail

A simple cache

Here is a simple cache instantiation with Redis but you can also look at other available stores:

Memcache

memcacheStore := memcache_store.NewMemcache(

memcache.New("10.0.0.1:11211", "10.0.0.2:11211", "10.0.0.3:11212"),

store.WithExpiration(10*time.Second),

)

cacheManager := cache.New[[]byte](memcacheStore)

err := cacheManager.Set(ctx, "my-key", []byte("my-value"),

store.WithExpiration(15*time.Second), // Override default value of 10 seconds defined in the store

)

if err != nil {

panic(err)

}

value, err := cacheManager.Get(ctx, "my-key")

cacheManager.Delete(ctx, "my-key")

cacheManager.Clear(ctx) // Clears the entire cache, in case you want to flush all cache

Memory (using Bigcache)

bigcacheClient, _ := bigcache.NewBigCache(bigcache.DefaultConfig(5 * time.Minute))

bigcacheStore := bigcache_store.NewBigcache(bigcacheClient)

cacheManager := cache.New[[]byte](bigcacheStore)

err := cacheManager.Set(ctx, "my-key", []byte("my-value"))

if err != nil {

panic(err)

}

value, err := cacheManager.Get(ctx, "my-key")

Memory (using Ristretto)

import (

"github.com/dgraph-io/ristretto"

"github.com/eko/gocache/lib/v4/cache"

"github.com/eko/gocache/lib/v4/store"

ristretto_store "github.com/eko/gocache/store/ristretto/v4"

)

ristrettoCache, err := ristretto.NewCache(&ristretto.Config{

NumCounters: 1000,

MaxCost: 100,

BufferItems: 64,

})

if err != nil {

panic(err)

}

ristrettoStore := ristretto_store.NewRistretto(ristrettoCache)

cacheManager := cache.New[string](ristrettoStore)

err = cacheManager.Set(ctx, "my-key", "my-value", store.WithCost(2))

if err != nil {

panic(err)

}

value, err := cacheManager.Get(ctx, "my-key")

cacheManager.Delete(ctx, "my-key")

Memory (using Go-cache)

gocacheClient := gocache.New(5*time.Minute, 10*time.Minute)

gocacheStore := gocache_store.NewGoCache(gocacheClient)

cacheManager := cache.New[[]byte](gocacheStore)

err := cacheManager.Set(ctx, "my-key", []byte("my-value"))

if err != nil {

panic(err)

}

value, err := cacheManager.Get(ctx, "my-key")

if err != nil {

panic(err)

}

fmt.Printf("%s", value)

Redis

redisStore := redis_store.NewRedis(redis.NewClient(&redis.Options{

Addr: "127.0.0.1:6379",

}))

cacheManager := cache.New[string](redisStore)

err := cacheManager.Set(ctx, "my-key", "my-value", store.WithExpiration(15*time.Second))

if err != nil {

panic(err)

}

value, err := cacheManager.Get(ctx, "my-key")

switch err {

case nil:

fmt.Printf("Get the key '%s' from the redis cache. Result: %s", "my-key", value)

case redis.Nil:

fmt.Printf("Failed to find the key '%s' from the redis cache.", "my-key")

default:

fmt.Printf("Failed to get the value from the redis cache with key '%s': %v", "my-key", err)

}

Redis Client-Side Caching (using rueidis)

client, err := rueidis.NewClient(rueidis.ClientOption{InitAddress: []string{"127.0.0.1:6379"}})

if err != nil {

panic(err)

}

cacheManager := cache.New[string](rueidis_store.NewRueidis(

client,

store.WithExpiration(15*time.Second),

store.WithClientSideCaching(15*time.Second)),

)

if err = cacheManager.Set(ctx, "my-key", "my-value"); err != nil {

panic(err)

}

value, err := cacheManager.Get(ctx, "my-key")

if err != nil {

log.Fatalf("Failed to get the value from the redis cache with key '%s': %v", "my-key", err)

}

log.Printf("Get the key '%s' from the redis cache. Result: %s", "my-key", value)

Freecache

freecacheStore := freecache_store.NewFreecache(freecache.NewCache(1000), store.WithExpiration(10 * time.Second))

cacheManager := cache.New[[]byte](freecacheStore)

err := cacheManager.Set(ctx, "my-key", []byte("my-value"))

if err != nil {

panic(err)

}

value, err := cacheManager.Get(ctx, "my-key")

Pegasus

pegasusStore, err := pegasus_store.NewPegasus(&store.OptionsPegasus{

MetaServers: []string{"127.0.0.1:34601", "127.0.0.1:34602", "127.0.0.1:34603"},

})

if err != nil {

fmt.Println(err)

return

}

cacheManager := cache.New[string](pegasusStore)

err = cacheManager.Set(ctx, "my-key", "my-value", store.WithExpiration(10 * time.Second))

if err != nil {

panic(err)

}

value, _ := cacheManager.Get(ctx, "my-key")

Hazelcast

hzClient, err := hazelcast.StartNewClient(ctx)

if err != nil {

log.Fatalf("Failed to start client: %v", err)

}

hzMap, err := hzClient.GetMap(ctx, "gocache")

if err != nil {

log.Fatalf("Failed to get map: %v", err)

}

hazelcastStore := hazelcast_store.NewHazelcast(hzMap)

cacheManager := cache.New[string](hazelcastStore)

err = cacheManager.Set(ctx, "my-key", "my-value", store.WithExpiration(15*time.Second))

if err != nil {

panic(err)

}

value, err := cacheManager.Get(ctx, "my-key")

if err != nil {

panic(err)

}

fmt.Printf("Get the key '%s' from the hazelcast cache. Result: %s", "my-key", value)

A chained cache

Here, we will chain caches in the following order: first in memory with Ristretto store, then in Redis (as a fallback):

// Initialize Ristretto cache and Redis client

ristrettoCache, err := ristretto.NewCache(&ristretto.Config{NumCounters: 1000, MaxCost: 100, BufferItems: 64})

if err != nil {

panic(err)

}

redisClient := redis.NewClient(&redis.Options{Addr: "127.0.0.1:6379"})

// Initialize stores

ristrettoStore := ristretto_store.NewRistretto(ristrettoCache)

redisStore := redis_store.NewRedis(redisClient, store.WithExpiration(5*time.Second))

// Initialize chained cache

cacheManager := cache.NewChain[any](

cache.New[any](ristrettoStore),

cache.New[any](redisStore),

)

// ... Then, do what you want with your cache

The chain cache also puts data back into the earlier caches when it is found. In this case, if Ristretto does not have the data in its cache but Redis does, the data is also set back into the Ristretto (memory) cache.
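
The lookup order and back-fill behaviour described above can be sketched with plain maps. This is a simplified illustration and not the library's implementation: `layer`, `chainGet` and `ErrNotFound` are hypothetical names, and real stores would also handle expiration and context.

```go
package main

import (
	"errors"
	"fmt"
)

// ErrNotFound is a hypothetical sentinel returned when every layer misses.
var ErrNotFound = errors.New("not found")

// layer is a hypothetical minimal cache: a named in-memory map.
type layer struct {
	name string
	data map[string]string
}

func newLayer(name string) *layer {
	return &layer{name: name, data: map[string]string{}}
}

// chainGet looks the key up in each layer in priority order. On a hit it
// copies the value into every earlier layer, all of which missed.
func chainGet(layers []*layer, key string) (string, error) {
	for i, l := range layers {
		if v, ok := l.data[key]; ok {
			for _, prev := range layers[:i] {
				prev.data[key] = v
			}
			return v, nil
		}
	}
	return "", ErrNotFound
}

func main() {
	memory, shared := newLayer("memory"), newLayer("shared")
	shared.data["my-key"] = "my-value" // only the fallback layer has the value

	v, err := chainGet([]*layer{memory, shared}, "my-key")
	fmt.Println(v, err) // my-value <nil>

	_, backfilled := memory.data["my-key"]
	fmt.Println(backfilled) // true
}
```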

A loadable cache

This cache takes a load function that is called when the requested data is not available in the cache; the loaded data is then set back in your cache:

type Book struct {

ID string

Name string

}

// Initialize Redis client and store

redisClient := redis.NewClient(&redis.Options{Addr: "127.0.0.1:6379"})

redisStore := redis_store.NewRedis(redisClient)

// Initialize a load function that loads your data from a custom source

loadFunction := func(ctx context.Context, key any) (*Book, error) {

// ... retrieve value from available source

return &Book{ID: "1", Name: "My test amazing book"}, nil

}

// Initialize loadable cache

cacheManager := cache.NewLoadable[*Book](

loadFunction,

cache.New[*Book](redisStore),

)

// ... Then, you can get your data and your function will automatically put them in cache(s)

Of course, you can also pass a Chain cache into the Loadable one so if your data is not available in all caches, it will bring it back in all caches.
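
The read-through behaviour of a loadable cache can be sketched as follows. This is an illustration under assumptions, not the library's implementation: `loadableCache` and `loadFunc` are hypothetical names, and the value is stored synchronously here for simplicity.

```go
package main

import (
	"errors"
	"fmt"
)

// loadFunc fetches a value from the source of truth on a cache miss.
type loadFunc func(key string) (string, error)

// loadableCache is a hypothetical read-through wrapper around a map.
type loadableCache struct {
	data  map[string]string
	load  loadFunc
	calls int // how many times load was invoked
}

// Get returns the cached value, or calls load on a miss and stores the result.
func (c *loadableCache) Get(key string) (string, error) {
	if v, ok := c.data[key]; ok {
		return v, nil
	}
	c.calls++
	v, err := c.load(key)
	if err != nil {
		return "", err
	}
	c.data[key] = v
	return v, nil
}

func main() {
	c := &loadableCache{
		data: map[string]string{},
		load: func(key string) (string, error) {
			if key == "" {
				return "", errors.New("empty key")
			}
			return "loaded:" + key, nil
		},
	}

	first, _ := c.Get("book-1")  // miss: load is called
	second, _ := c.Get("book-1") // hit: served from the map
	fmt.Println(first, second, c.calls) // loaded:book-1 loaded:book-1 1
}
```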

A metric cache to retrieve cache statistics

This cache will record metrics depending on the metric provider you pass to it. Here we give a Prometheus provider:

// Initialize Redis client and store

redisClient := redis.NewClient(&redis.Options{Addr: "127.0.0.1:6379"})

redisStore := redis_store.NewRedis(redisClient)

// Initializes Prometheus metrics service

promMetrics := metrics.NewPrometheus("my-test-app")

// Initialize metric cache

cacheManager := cache.NewMetric[any](

promMetrics,

cache.New[any](redisStore),

)

// ... Then, you can get your data and metrics will be observed by Prometheus
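
Conceptually, a metric cache is a wrapper that records the outcome of each operation before returning it. The sketch below counts hits and misses in plain integers instead of reporting to a metrics provider; `meteredCache` and `stats` are hypothetical names, not library types.

```go
package main

import "fmt"

// stats counts cache outcomes; the field names are illustrative.
type stats struct {
	hits, misses int
}

// meteredCache wraps a plain map and records a hit or a miss on every Get.
type meteredCache struct {
	data  map[string]string
	stats stats
}

func (c *meteredCache) Set(key, value string) { c.data[key] = value }

func (c *meteredCache) Get(key string) (string, bool) {
	v, ok := c.data[key]
	if ok {
		c.stats.hits++
	} else {
		c.stats.misses++
	}
	return v, ok
}

func main() {
	c := &meteredCache{data: map[string]string{}}
	c.Set("my-key", "my-value")
	c.Get("my-key")    // hit
	c.Get("other-key") // miss
	fmt.Printf("hits=%d misses=%d\n", c.stats.hits, c.stats.misses) // hits=1 misses=1
}
```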

A marshaler wrapper

Some caches, like Redis, store and return the value as a string, so you have to marshal/unmarshal your structs if you want to cache an object. That's why we bring a marshaler service that wraps your cache and does the work for you:

// Initialize Redis client and store

redisClient := redis.NewClient(&redis.Options{Addr: "127.0.0.1:6379"})

redisStore := redis_store.NewRedis(redisClient)

// Initialize metric cache

cacheManager := cache.NewMetric[any](

promMetrics,

cache.New[any](redisStore),

)

// Initializes marshaler

marshal := marshaler.New(cacheManager)

key := BookQuery{Slug: "my-test-amazing-book"}

value := Book{ID: 1, Name: "My test amazing book", Slug: "my-test-amazing-book"}

err = marshal.Set(ctx, key, value)

if err != nil {

panic(err)

}

returnedValue, err := marshal.Get(ctx, key, new(Book))

if err != nil {

panic(err)

}

// Then, do what you want with the value

marshal.Delete(ctx, key)

The only thing you have to do is pass a pointer to the struct into which you want your value to be unmarshalled as the last argument when calling the .Get() method.
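
The idea of a marshaling wrapper can be sketched with the standard library alone. JSON is used here purely to keep the example dependency-free; it is not a claim about the encoding the library uses, and `marshaler`, `byteStore` and this `Book` type are hypothetical.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Book is an example value type for this sketch.
type Book struct {
	ID   int    `json:"id"`
	Name string `json:"name"`
}

// byteStore stands in for a store that only keeps raw bytes.
type byteStore map[string][]byte

// marshaler encodes values on Set and decodes them on Get.
type marshaler struct {
	store byteStore
}

func (m marshaler) Set(key string, value any) error {
	b, err := json.Marshal(value)
	if err != nil {
		return err
	}
	m.store[key] = b
	return nil
}

// Get decodes the stored bytes into out, which must be a pointer.
func (m marshaler) Get(key string, out any) error {
	b, ok := m.store[key]
	if !ok {
		return fmt.Errorf("key %q not found", key)
	}
	return json.Unmarshal(b, out)
}

func main() {
	m := marshaler{store: byteStore{}}
	if err := m.Set("book-1", Book{ID: 1, Name: "My test amazing book"}); err != nil {
		panic(err)
	}

	var out Book
	err := m.Get("book-1", &out)
	fmt.Println(out.Name, err) // My test amazing book <nil>
}
```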

Cache invalidation using tags

You can attach some tags to items you create so you can easily invalidate some of them later.

Tags are stored using the same storage you choose for your cache.

Here is an example on how to use it:

// Initialize Redis client and store

redisClient := redis.NewClient(&redis.Options{Addr: "127.0.0.1:6379"})

redisStore := redis_store.NewRedis(redisClient)

// Initialize metric cache

cacheManager := cache.NewMetric[*Book](

promMetrics,

cache.New[*Book](redisStore),

)

// Initializes marshaler

marshal := marshaler.New(cacheManager)

key := BookQuery{Slug: "my-test-amazing-book"}

value := &Book{ID: 1, Name: "My test amazing book", Slug: "my-test-amazing-book"}

// Set an item in the cache and attach it a "book" tag

err = marshal.Set(ctx, key, value, store.WithTags([]string{"book"}))

if err != nil {

panic(err)

}

// Remove all items that have the "book" tag

err = marshal.Invalidate(ctx, store.WithInvalidateTags([]string{"book"}))

if err != nil {

panic(err)

}

returnedValue, err := marshal.Get(ctx, key, new(Book))

if err != nil {

// Should be triggered because item has been deleted so it cannot be found.

panic(err)

}

Mix this with expiration times on your caches for fine-grained control over how your data is cached.
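
Tag-based invalidation boils down to keeping an index from each tag to the keys that carry it. The sketch below keeps that index in a separate map for readability, whereas the text above says tags live in the same storage as the cache; `taggedCache` and its methods are hypothetical names.

```go
package main

import "fmt"

// taggedCache keeps values plus an index from tag to the keys carrying it.
type taggedCache struct {
	items map[string]string
	byTag map[string][]string
}

func newTaggedCache() *taggedCache {
	return &taggedCache{items: map[string]string{}, byTag: map[string][]string{}}
}

// Set stores the value and records the key under each given tag.
func (c *taggedCache) Set(key, value string, tags ...string) {
	c.items[key] = value
	for _, t := range tags {
		c.byTag[t] = append(c.byTag[t], key)
	}
}

// Invalidate deletes every item recorded under any of the given tags.
func (c *taggedCache) Invalidate(tags ...string) {
	for _, t := range tags {
		for _, key := range c.byTag[t] {
			delete(c.items, key)
		}
		delete(c.byTag, t)
	}
}

func main() {
	c := newTaggedCache()
	c.Set("book:1", "My test amazing book", "book")
	c.Set("user:1", "alice", "user")

	c.Invalidate("book")

	_, bookFound := c.items["book:1"]
	_, userFound := c.items["user:1"]
	fmt.Println(bookFound, userFound) // false true
}
```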

package main

import (
	"context"
	"fmt"
	"log"
	"time"

	"github.com/eko/gocache/lib/v4/cache"
	"github.com/eko/gocache/lib/v4/store"
	redis_store "github.com/eko/gocache/store/redis/v4"
	"github.com/redis/go-redis/v9"
)

func main() {
	ctx := context.Background()

	// Wrap a Redis client in a gocache store
	redisStore := redis_store.NewRedis(redis.NewClient(&redis.Options{
		Addr: "127.0.0.1:6379",
	}))

	cacheManager := cache.New[string](redisStore)

	// Store a value that expires after 15 seconds
	err := cacheManager.Set(ctx, "my-key", "my-value", store.WithExpiration(15*time.Second))
	if err != nil {
		panic(err)
	}

	key := "my-key"
	value, err := cacheManager.Get(ctx, key)
	if err != nil {
		log.Fatalf("unable to get cache key '%s' from the cache: %v", key, err)
	}

	fmt.Printf("%#+v\n", value)
}

Write your own custom cache

Caches respect the following interface, so you can write your own (proprietary?) cache logic if needed by implementing it:

type CacheInterface[T any] interface {

Get(ctx context.Context, key any) (T, error)

Set(ctx context.Context, key any, object T, options ...store.Option) error

Delete(ctx context.Context, key any) error

Invalidate(ctx context.Context, options ...store.InvalidateOption) error

Clear(ctx context.Context) error

GetType() string

}

Or, in case you use a setter cache, also implement the GetCodec() method:

type SetterCacheInterface[T any] interface {

CacheInterface[T]

GetWithTTL(ctx context.Context, key any) (T, time.Duration, error)

GetCodec() codec.CodecInterface

}

As all caches available in this library implement CacheInterface, you will be able to mix the built-in caches with your own.

Write your own custom store

You also have the ability to write your own custom store by implementing the following interface:

type StoreInterface interface {

Get(ctx context.Context, key any) (any, error)

GetWithTTL(ctx context.Context, key any) (any, time.Duration, error)

Set(ctx context.Context, key any, value any, options ...Option) error

Delete(ctx context.Context, key any) error

Invalidate(ctx context.Context, options ...InvalidateOption) error

Clear(ctx context.Context) error

GetType() string

}

Of course, I suggest you have a look at the existing caches and stores before implementing your own.

Custom cache key generator

You can implement the following interface in order to generate a custom cache key:

type CacheKeyGenerator interface {

GetCacheKey() string

}
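
A key type implementing this interface might look like the sketch below. The `keyFor` helper is a hypothetical illustration of how a caller could prefer `GetCacheKey()` when it is available; it does not reproduce the library's own fallback logic for other key types.

```go
package main

import "fmt"

// CacheKeyGenerator is reproduced locally so the sketch compiles on its own.
type CacheKeyGenerator interface {
	GetCacheKey() string
}

// BookQuery is an example key type with a custom cache key.
type BookQuery struct {
	Slug string
}

// GetCacheKey builds a stable, readable key from the query fields.
func (q BookQuery) GetCacheKey() string {
	return "book:" + q.Slug
}

// keyFor is a hypothetical helper: strings are used as-is, key generators
// provide their own key, and anything else falls back to its default format.
func keyFor(key any) string {
	switch k := key.(type) {
	case string:
		return k
	case CacheKeyGenerator:
		return k.GetCacheKey()
	default:
		return fmt.Sprintf("%v", k)
	}
}

func main() {
	fmt.Println(keyFor(BookQuery{Slug: "my-test-amazing-book"})) // book:my-test-amazing-book
	fmt.Println(keyFor("my-key"))                                // my-key
}
```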

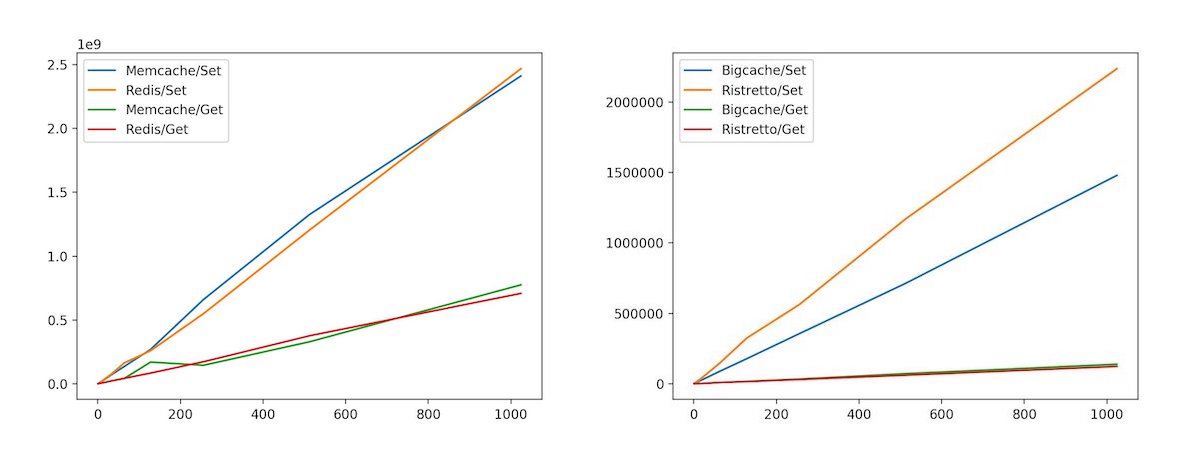

Benchmarks

Run tests

To generate mocks using mockgen library, run:

$ make mocks

Test suite can be run with:

$ make test # run unit test

Community

Please feel free to contribute to this library, and do not hesitate to open an issue if you want to discuss a feature.
