Top Related Projects

Image-to-Image Translation in PyTorch

starter from "How to Train a GAN?" at NIPS2016

A list of all named GANs!

A tensorflow implementation of "Deep Convolutional Generative Adversarial Networks"

Collection of generative models, e.g. GAN, VAE in Pytorch and Tensorflow.

Quick Overview

Keras-GAN is a GitHub repository containing implementations of various Generative Adversarial Networks (GANs) using the Keras deep learning framework. It provides a collection of GAN architectures, including popular models like DCGAN, CGAN, and WGAN, implemented in a consistent and easy-to-understand manner.

Pros

- Offers a wide variety of GAN implementations in one place

- Code is well-organized and consistent across different models

- Provides a good starting point for learning about and experimenting with GANs

- Implementations are based on Keras, making them accessible to many deep learning practitioners

Cons

- Some implementations may not be optimized for performance

- Limited documentation and explanations for each model

- May not always include the latest GAN architectures or improvements

- Dependency on older versions of Keras and TensorFlow

Code Examples

- Importing and creating a DCGAN model (run from inside the dcgan/ directory, since the repository is a set of standalone scripts rather than an installable package):

from dcgan import DCGAN

# Create a DCGAN instance. The constructor takes no arguments: image size and
# channel count are set inside __init__, which also builds and compiles the networks.
dcgan = DCGAN()

- Training a CGAN model (run from inside the cgan/ directory):

from cgan import CGAN

# Create a CGAN instance
cgan = CGAN()

# Train the model
cgan.train(epochs=20000, batch_size=32, sample_interval=200)

- Generating images with a WGAN (run from inside the wgan/ directory):

from wgan import WGAN
import numpy as np

# Create a WGAN instance; call wgan.train(...) first, otherwise the generator is untrained
wgan = WGAN()

# Generate random noise: 25 latent vectors of dimension 100
noise = np.random.normal(0, 1, (25, 100))

# Generate images
gen_imgs = wgan.generator.predict(noise)
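The generators in this collection typically end in a tanh activation (as in the ACGAN excerpt further down), so `gen_imgs` holds values in [-1, 1] and needs rescaling before it can be displayed or saved. A minimal sketch of that step in plain Python, so it runs without Keras installed; `rescale_to_unit` and `to_uint8` are hypothetical helper names, and the random row merely stands in for real generator output:

```python
import random


def rescale_to_unit(value):
    """Map a tanh-range value in [-1, 1] to [0, 1]."""
    return 0.5 * value + 0.5


def to_uint8(value):
    """Map a tanh-range value to an 8-bit intensity, clamping out-of-range input."""
    unit = min(1.0, max(0.0, rescale_to_unit(value)))
    return int(round(unit * 255))


# Stand-in for one 28-pixel row of generator output (assumption: real output
# would come from generator.predict and have shape (batch, 28, 28, 1)).
rng = random.Random(0)
fake_row = [rng.uniform(-1.0, 1.0) for _ in range(28)]
pixels = [to_uint8(v) for v in fake_row]
print(min(pixels), max(pixels))
```

With NumPy arrays the same rescaling is a single expression, `0.5 * gen_imgs + 0.5`.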

Getting Started

To get started with Keras-GAN:

- Clone the repository:

git clone https://github.com/eriklindernoren/Keras-GAN.git
cd Keras-GAN

- Install dependencies:

pip install -r requirements.txt

- Choose a GAN implementation and run the corresponding script from its directory:

cd dcgan/
python3 dcgan.py

Note: Make sure the required dataset is available. Some models (for example CycleGAN, DiscoGAN, and Pix2Pix) ship a download_dataset.sh script for this, as shown in the README below; otherwise, modify the code to use your own dataset.

Competitor Comparisons

Image-to-Image Translation in PyTorch

Pros of pytorch-CycleGAN-and-pix2pix

- Implements multiple GAN models, including CycleGAN and pix2pix

- Provides extensive documentation and usage examples

- Offers pre-trained models for quick testing and deployment

Cons of pytorch-CycleGAN-and-pix2pix

- Limited to PyTorch framework, less flexible for users familiar with other libraries

- Focuses on specific GAN architectures, potentially less suitable for general GAN experimentation

Code Comparison

pytorch-CycleGAN-and-pix2pix:

class CycleGANModel(BaseModel):
    def __init__(self, opt):
        BaseModel.__init__(self, opt)
        self.loss_names = ['D_A', 'G_A', 'cycle_A', 'idt_A', 'D_B', 'G_B', 'cycle_B', 'idt_B']
        self.visual_names = ['real_A', 'fake_B', 'rec_A', 'real_B', 'fake_A', 'rec_B']

Keras-GAN:

class GAN():
    def __init__(self):
        self.img_rows = 28
        self.img_cols = 28
        self.channels = 1
        self.img_shape = (self.img_rows, self.img_cols, self.channels)
        self.latent_dim = 100

Both repositories provide implementations of GANs, but pytorch-CycleGAN-and-pix2pix focuses on specific architectures with more detailed implementations, while Keras-GAN offers a broader range of GAN models with simpler implementations using the Keras framework.

starter from "How to Train a GAN?" at NIPS2016

Pros of ganhacks

- Provides a comprehensive list of tips and tricks for training GANs

- Language-agnostic advice applicable to various GAN implementations

- Regularly updated with community contributions and new insights

Cons of ganhacks

- Lacks actual code implementations, focusing on theoretical advice

- May require more effort to apply the tips in practice

- Not specific to any particular framework or library

Code Comparison

ganhacks doesn't provide direct code examples, but Keras-GAN offers implementations like:

def build_generator(self):
    model = Sequential()
    model.add(Dense(256, input_dim=self.latent_dim))
    model.add(LeakyReLU(alpha=0.2))
    model.add(BatchNormalization(momentum=0.8))
    model.add(Dense(512))
    model.add(LeakyReLU(alpha=0.2))

Summary

ganhacks serves as a valuable resource for GAN best practices and troubleshooting, while Keras-GAN provides concrete implementations in Keras. ganhacks is more versatile but requires additional effort to implement, whereas Keras-GAN offers ready-to-use code examples but is limited to the Keras framework.
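Two of the tips commonly attributed to ganhacks are normalizing images to [-1, 1] and replacing hard 0/1 targets with soft, noisy labels. The sketch below shows how they might be applied around a Keras-GAN style training loop. The helper names are hypothetical, and the label ranges (0.7 to 1.2 for real, 0.0 to 0.3 for fake) are assumptions taken from that list of tips, not from Keras-GAN itself:

```python
import random


def normalize_pixel(pixel):
    """Scale an 8-bit pixel value (0..255) to the tanh range [-1, 1]."""
    return pixel / 127.5 - 1.0


def soft_labels(batch_size, low, high, rng):
    """Draw one noisy target per sample instead of a hard 0 or 1."""
    return [rng.uniform(low, high) for _ in range(batch_size)]


rng = random.Random(0)
batch_size = 8

# Targets for the discriminator update on real and generated batches.
real_targets = soft_labels(batch_size, 0.7, 1.2, rng)
fake_targets = soft_labels(batch_size, 0.0, 0.3, rng)

print(normalize_pixel(0), normalize_pixel(255))
```

In a Keras training loop these lists would replace the all-ones and all-zeros arrays passed as targets when the discriminator is trained on each batch.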

A list of all named GANs!

Pros of the-gan-zoo

- Comprehensive list of GAN variants with links to papers and code

- Regularly updated with new GAN architectures

- Serves as a valuable reference for researchers and practitioners

Cons of the-gan-zoo

- No implementation code provided, only links to external resources

- Less focused on practical implementation compared to Keras-GAN

- May be overwhelming for beginners due to the sheer number of GAN variants listed

Code Comparison

The-gan-zoo doesn't provide any implementation code, so a direct code comparison isn't possible. However, Keras-GAN offers implementations for various GAN architectures. Here's a snippet from their ACGAN implementation:

def build_generator(self):
    noise = Input(shape=(self.latent_dim,))
    label = Input(shape=(1,), dtype='int32')
    label_embedding = Flatten()(Embedding(self.num_classes, self.latent_dim)(label))
    model_input = multiply([noise, label_embedding])
    x = Dense(128 * 7 * 7, activation="relu")(model_input)
    x = Reshape((7, 7, 128))(x)
    x = BatchNormalization(momentum=0.8)(x)
    x = UpSampling2D()(x)
    x = Conv2D(128, kernel_size=3, padding="same")(x)
    x = Activation("relu")(x)
    # ... (more layers)
    img = Conv2D(self.channels, kernel_size=3, padding="same")(x)
    img = Activation("tanh")(img)
    return Model([noise, label], img)

This code demonstrates the practical implementation focus of Keras-GAN, which is not present in the-gan-zoo.
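The conditioning step in the snippet above (embedding lookup followed by an element-wise multiply with the noise vector) can be hard to picture from the layer calls alone. A rough plain-Python sketch of the same arithmetic follows; the table values are random stand-ins for learned embedding weights, `condition_noise` is a hypothetical name, and the latent dimension is shrunk to 4 for readability:

```python
import random

NUM_CLASSES = 10
LATENT_DIM = 4  # assumption: kept small here; the excerpt uses self.latent_dim

rng = random.Random(0)

# One row per class label, each row as long as the noise vector.
embedding_table = [
    [rng.gauss(0.0, 1.0) for _ in range(LATENT_DIM)] for _ in range(NUM_CLASSES)
]


def condition_noise(noise, label, table):
    """Look up the label's embedding and multiply it element-wise with the noise."""
    label_embedding = table[label]
    return [n * e for n, e in zip(noise, label_embedding)]


noise = [rng.gauss(0.0, 1.0) for _ in range(LATENT_DIM)]
model_input = condition_noise(noise, 3, embedding_table)
print(model_input)
```

Because the product has the same length as the noise vector, the rest of the generator does not need to know a label was mixed in.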

A tensorflow implementation of "Deep Convolutional Generative Adversarial Networks"

Pros of DCGAN-tensorflow

- Implements DCGAN architecture specifically, which is known for stable training and high-quality image generation

- Uses TensorFlow, offering more flexibility and lower-level control over the model architecture

- Provides detailed implementation of DCGAN paper, useful for researchers and advanced practitioners

Cons of DCGAN-tensorflow

- Limited to DCGAN architecture, while Keras-GAN offers multiple GAN variants

- Less user-friendly compared to Keras-GAN's high-level API and simpler implementation

- May require more in-depth understanding of TensorFlow and GANs to use effectively

Code Comparison

DCGAN-tensorflow:

def discriminator(image, reuse=False):
    with tf.variable_scope("discriminator") as scope:
        if reuse:
            scope.reuse_variables()
        # Discriminator network implementation

Keras-GAN:

def build_discriminator(self):
    model = Sequential()
    # Discriminator model layers
    return model

The DCGAN-tensorflow implementation uses TensorFlow's lower-level API, providing more control but requiring more complex code. Keras-GAN uses Keras' high-level API, resulting in more concise and readable code, but with less flexibility in terms of architecture customization.

Collection of generative models, e.g. GAN, VAE in Pytorch and Tensorflow.

Pros of generative-models

- Implements a wider variety of generative models, including VAEs, GANs, and autoregressive models

- Uses multiple frameworks (TensorFlow, PyTorch, and NumPy), providing more flexibility

- Includes detailed explanations and mathematical formulations in the README

Cons of generative-models

- Less focused on GANs specifically, which may be a drawback for users primarily interested in GAN implementations

- Code structure is less uniform across different models, potentially making it harder to navigate

Code Comparison

generative-models (PyTorch GAN):

D = torch.nn.Sequential(
    torch.nn.Linear(X_dim, h_dim),
    torch.nn.ReLU(),
    torch.nn.Linear(h_dim, 1),
    torch.nn.Sigmoid()
)

Keras-GAN:

def build_discriminator(self):
    model = Sequential()
    # Flatten the image so the dense layers receive a 1-D vector
    model.add(Flatten(input_shape=self.img_shape))
    model.add(Dense(512))
    model.add(LeakyReLU(alpha=0.2))
    model.add(Dense(1, activation='sigmoid'))
    return model

Both repositories provide valuable resources for implementing generative models. generative-models offers a broader range of models and frameworks, while Keras-GAN focuses specifically on GAN implementations using Keras. The choice between them depends on the user's specific needs and preferred framework.

README

This repository has gone stale as I unfortunately do not have the time to maintain it anymore. If you would like to continue the development of it as a collaborator send me an email at eriklindernoren@gmail.com.

Keras-GAN

Collection of Keras implementations of Generative Adversarial Networks (GANs) suggested in research papers. These models are in some cases simplified versions of the ones ultimately described in the papers, but I have chosen to focus on getting the core ideas covered instead of getting every layer configuration right. Contributions and suggestions of GAN varieties to implement are very welcome.

See also: PyTorch-GAN

Table of Contents

- Installation

- Implementations

- Auxiliary Classifier GAN

- Adversarial Autoencoder

- Bidirectional GAN

- Boundary-Seeking GAN

- Conditional GAN

- Context-Conditional GAN

- Context Encoder

- Coupled GANs

- CycleGAN

- Deep Convolutional GAN

- DiscoGAN

- DualGAN

- Generative Adversarial Network

- InfoGAN

- LSGAN

- Pix2Pix

- PixelDA

- Semi-Supervised GAN

- Super-Resolution GAN

- Wasserstein GAN

- Wasserstein GAN GP

Installation

$ git clone https://github.com/eriklindernoren/Keras-GAN

$ cd Keras-GAN/

$ sudo pip3 install -r requirements.txt

Implementations

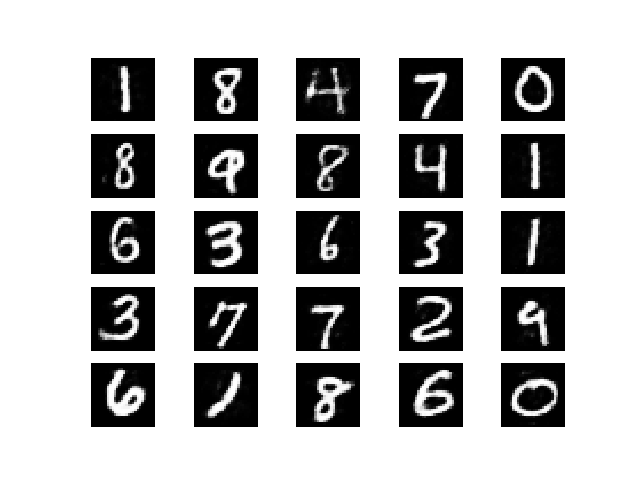

AC-GAN

Implementation of Auxiliary Classifier Generative Adversarial Network.

Paper: https://arxiv.org/abs/1610.09585

Example

$ cd acgan/

$ python3 acgan.py

Adversarial Autoencoder

Implementation of Adversarial Autoencoder.

Paper: https://arxiv.org/abs/1511.05644

Example

$ cd aae/

$ python3 aae.py

BiGAN

Implementation of Bidirectional Generative Adversarial Network.

Paper: https://arxiv.org/abs/1605.09782

Example

$ cd bigan/

$ python3 bigan.py

BGAN

Implementation of Boundary-Seeking Generative Adversarial Networks.

Paper: https://arxiv.org/abs/1702.08431

Example

$ cd bgan/

$ python3 bgan.py

CC-GAN

Implementation of Semi-Supervised Learning with Context-Conditional Generative Adversarial Networks.

Paper: https://arxiv.org/abs/1611.06430

Example

$ cd ccgan/

$ python3 ccgan.py

CGAN

Implementation of Conditional Generative Adversarial Nets.

Paper: https://arxiv.org/abs/1411.1784

Example

$ cd cgan/

$ python3 cgan.py

Context Encoder

Implementation of Context Encoders: Feature Learning by Inpainting.

Paper: https://arxiv.org/abs/1604.07379

Example

$ cd context_encoder/

$ python3 context_encoder.py

CoGAN

Implementation of Coupled generative adversarial networks.

Paper: https://arxiv.org/abs/1606.07536

Example

$ cd cogan/

$ python3 cogan.py

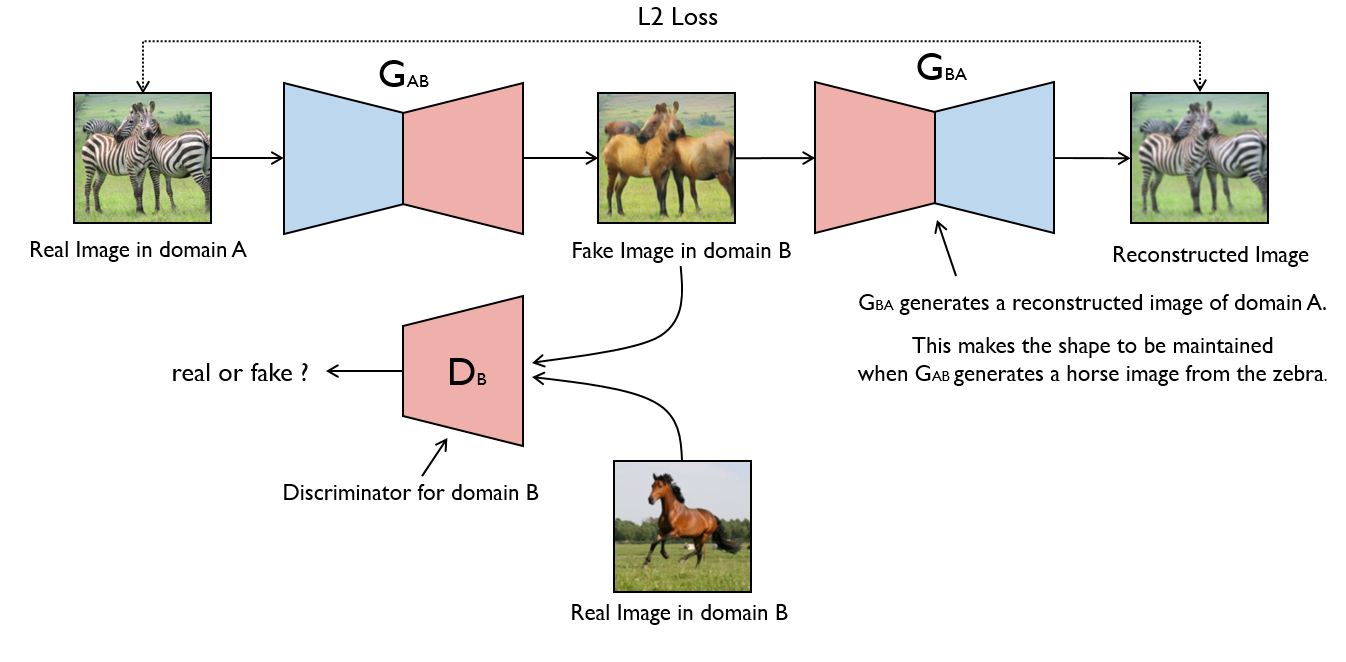

CycleGAN

Implementation of Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks.

Paper: https://arxiv.org/abs/1703.10593

Example

$ cd cyclegan/

$ bash download_dataset.sh apple2orange

$ python3 cyclegan.py

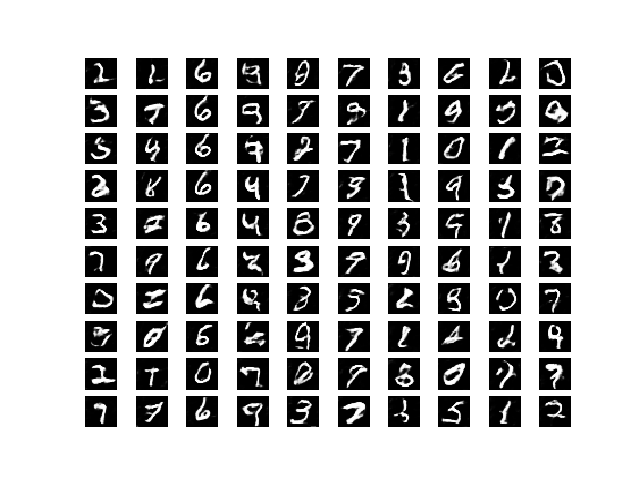

DCGAN

Implementation of Deep Convolutional Generative Adversarial Network.

Paper: https://arxiv.org/abs/1511.06434

Example

$ cd dcgan/

$ python3 dcgan.py

DiscoGAN

Implementation of Learning to Discover Cross-Domain Relations with Generative Adversarial Networks.

Paper: https://arxiv.org/abs/1703.05192

Example

$ cd discogan/

$ bash download_dataset.sh edges2shoes

$ python3 discogan.py

DualGAN

Implementation of DualGAN: Unsupervised Dual Learning for Image-to-Image Translation.

Paper: https://arxiv.org/abs/1704.02510

Example

$ cd dualgan/

$ python3 dualgan.py

GAN

Implementation of Generative Adversarial Network with a MLP generator and discriminator.

Paper: https://arxiv.org/abs/1406.2661

Example

$ cd gan/

$ python3 gan.py

InfoGAN

Implementation of InfoGAN: Interpretable Representation Learning by Information Maximizing Generative Adversarial Nets.

Paper: https://arxiv.org/abs/1606.03657

Example

$ cd infogan/

$ python3 infogan.py

LSGAN

Implementation of Least Squares Generative Adversarial Networks.

Paper: https://arxiv.org/abs/1611.04076

Example

$ cd lsgan/

$ python3 lsgan.py
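As a rough illustration of what "least squares" means here, the sketch below computes the LSGAN objectives on a handful of discriminator outputs in plain Python. It assumes the common target coding of 1 for real and 0 for fake; the function names are hypothetical and this is not the code in lsgan.py:

```python
def mse(predictions, target):
    """Mean squared error between each prediction and a constant target."""
    return sum((p - target) ** 2 for p in predictions) / len(predictions)


def lsgan_d_loss(d_real, d_fake):
    """Discriminator loss: push real scores toward 1 and fake scores toward 0."""
    return 0.5 * mse(d_real, 1.0) + 0.5 * mse(d_fake, 0.0)


def lsgan_g_loss(d_fake):
    """Generator loss: push the discriminator's scores on fakes toward 1."""
    return 0.5 * mse(d_fake, 1.0)


# Raw (non-sigmoid) discriminator scores for a small batch.
d_real = [0.9, 1.1, 0.8]
d_fake = [0.2, -0.1, 0.3]
print(lsgan_d_loss(d_real, d_fake), lsgan_g_loss(d_fake))
```

In Keras this typically amounts to compiling the discriminator with a mean-squared-error loss instead of binary cross-entropy.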

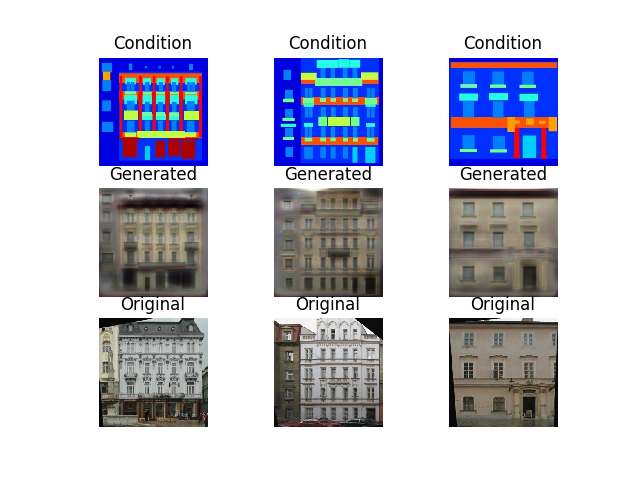

Pix2Pix

Implementation of Image-to-Image Translation with Conditional Adversarial Networks.

Paper: https://arxiv.org/abs/1611.07004

Example

$ cd pix2pix/

$ bash download_dataset.sh facades

$ python3 pix2pix.py

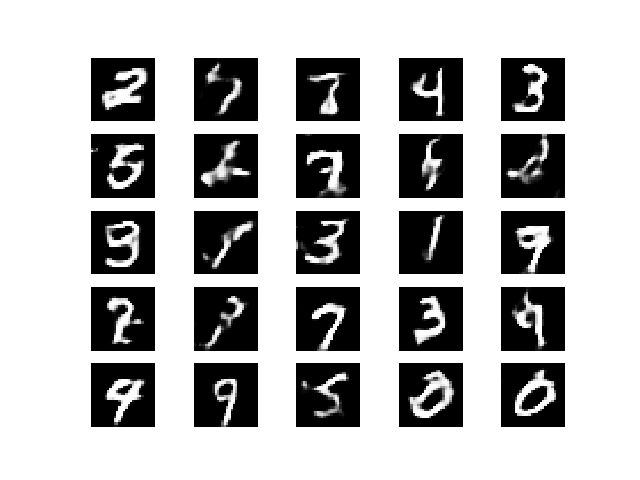

PixelDA

Implementation of Unsupervised Pixel-Level Domain Adaptation with Generative Adversarial Networks.

Paper: https://arxiv.org/abs/1612.05424

MNIST to MNIST-M Classification

Trains a classifier on MNIST images that are translated to resemble MNIST-M (by performing unsupervised image-to-image domain adaptation). This model is compared to the naive solution of training a classifier on MNIST and evaluating it on MNIST-M. The naive model manages a 55% classification accuracy on MNIST-M while the one trained during domain adaptation gets a 95% classification accuracy.

$ cd pixelda/

$ python3 pixelda.py

| Method | Accuracy |
|---|---|
| Naive | 55% |
| PixelDA | 95% |

SGAN

Implementation of Semi-Supervised Generative Adversarial Network.

Paper: https://arxiv.org/abs/1606.01583

Example

$ cd sgan/

$ python3 sgan.py

SRGAN

Implementation of Photo-Realistic Single Image Super-Resolution Using a Generative Adversarial Network.

Paper: https://arxiv.org/abs/1609.04802

Example

$ cd srgan/

<follow steps at the top of srgan.py>

$ python3 srgan.py

WGAN

Implementation of Wasserstein GAN (with DCGAN generator and discriminator).

Paper: https://arxiv.org/abs/1701.07875

Example

$ cd wgan/

$ python3 wgan.py
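For orientation, the two ingredients that distinguish a Wasserstein GAN from the standard formulation are a critic loss based on mean scores and clipping of the critic's weights. A minimal plain-Python sketch, assuming the clip value of 0.01 from the paper; the function names are hypothetical and the lists stand in for critic outputs and weights:

```python
def mean(values):
    return sum(values) / len(values)


def critic_loss(critic_real, critic_fake):
    """The critic minimizes mean(fake scores) - mean(real scores)."""
    return mean(critic_fake) - mean(critic_real)


def generator_loss(critic_fake):
    """The generator tries to raise the critic's score on generated samples."""
    return -mean(critic_fake)


def clip_weights(weights, clip_value=0.01):
    """Clamp every weight to [-clip_value, clip_value] after each critic update."""
    return [max(-clip_value, min(clip_value, w)) for w in weights]


print(critic_loss([1.0, 3.0], [0.0, 0.0]))
print(clip_weights([0.5, -0.5, 0.005]))
```

The critic outputs unbounded scores rather than probabilities, which is why no sigmoid appears in these losses.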

WGAN GP

Implementation of Improved Training of Wasserstein GANs.

Paper: https://arxiv.org/abs/1704.00028

Example

$ cd wgan_gp/

$ python3 wgan_gp.py
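The gradient penalty that replaces weight clipping in this variant can be sketched without a deep learning framework if the critic's gradient is known in closed form. The toy critic below, f(x) = 0.5 * sum(w_i * x_i^2), is an assumption chosen only so the gradient can be written by hand; the penalty coefficient of 10 follows the paper, and all names are hypothetical:

```python
import math
import random

LAMBDA = 10.0  # penalty coefficient (value used in the paper)


def interpolate(real, fake, eps):
    """Random point on the line between a real and a generated sample."""
    return [eps * r + (1.0 - eps) * f for r, f in zip(real, fake)]


def toy_critic_grad(x, w):
    """Gradient of the toy critic f(x) = 0.5 * sum(w_i * x_i**2) with respect to x."""
    return [wi * xi for wi, xi in zip(w, x)]


def gradient_penalty(grad, lam=LAMBDA):
    """Penalize the gradient norm for deviating from 1."""
    norm = math.sqrt(sum(g * g for g in grad))
    return lam * (norm - 1.0) ** 2


rng = random.Random(0)
real, fake, w = [1.0, 2.0], [0.0, 0.5], [0.6, 0.8]
x_hat = interpolate(real, fake, rng.random())
print(gradient_penalty(toy_critic_grad(x_hat, w)))
```

In a real implementation the gradient at the interpolated point comes from automatic differentiation of the critic network, and the penalty is added to the critic loss.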
