Top Related Projects

Simple, efficient background processing for Ruby

Resque is a Redis-backed Ruby library for creating background jobs, placing them on multiple queues, and processing them later.

Beanstalk is a simple, fast work queue.

Machinery is an asynchronous task queue/job queue based on distributed message passing.

Process background jobs in Go

Message queue system written in Go and backed by Redis

Quick Overview

Asynq is a Go library for distributed task queue processing. It provides a simple, reliable, and efficient way to process background tasks asynchronously in Go applications, using Redis as a message broker.

Pros

- Simple and easy-to-use API for task queue management

- Built-in support for task scheduling and retries

- Scalable architecture with support for multiple workers and queues

- Comprehensive monitoring and observability features

Cons

- Requires Redis as a dependency

- Limited to Go programming language

- May have a learning curve for developers new to task queue systems

- Lacks some advanced features found in more mature task queue solutions

Code Examples

- Defining and enqueueing a task:

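import (

"encoding/json"

"fmt"

"log"

"github.com/hibiken/asynq"

)

// Define a task. NewTask takes the payload as a byte slice (JSON here).

payload, err := json.Marshal(map[string]interface{}{

"user_id": 123,

"template_id": "welcome_email",

})

if err != nil {

log.Fatalf("could not encode payload: %v", err)

}

task := asynq.NewTask("email:send", payload)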

// Enqueue the task

client := asynq.NewClient(asynq.RedisClientOpt{Addr: "localhost:6379"})

info, err := client.Enqueue(task)

if err != nil {

log.Fatalf("could not enqueue task: %v", err)

}

fmt.Printf("enqueued task: id=%s queue=%s\n", info.ID, info.Queue)

- Processing tasks with a worker:

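import (

"context"

"encoding/json"

"fmt"

"log"

"github.com/hibiken/asynq"

)

func main() {

srv := asynq.NewServer(

asynq.RedisClientOpt{Addr: "localhost:6379"},

asynq.Config{Concurrency: 10},

)

mux := asynq.NewServeMux()

mux.HandleFunc("email:send", handleSendEmailTask)

if err := srv.Run(mux); err != nil {

log.Fatalf("could not run server: %v", err)

}

}

// handleSendEmailTask decodes the JSON payload and sends the email.

func handleSendEmailTask(ctx context.Context, t *asynq.Task) error {

var p struct {

UserID int `json:"user_id"`

TemplateID string `json:"template_id"`

}

if err := json.Unmarshal(t.Payload(), &p); err != nil {

// A malformed payload will not succeed on retry, so skip retries.

return fmt.Errorf("json.Unmarshal failed: %v: %w", err, asynq.SkipRetry)

}

log.Printf("sending email: user_id=%d template_id=%s", p.UserID, p.TemplateID)

// Implement email sending logic here

return nil

}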
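The mux above routes a task to a handler by its type name. As far as the package documentation describes it, ServeMux picks the registered pattern that most closely matches the task type, with longer patterns taking precedence. The sketch below illustrates that longest-prefix idea using only the standard library; `prefixMux`, `handlerFunc`, and the pattern names are hypothetical stand-ins and not Asynq's implementation.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// handlerFunc is a stand-in for a task handler; it is not asynq's type.
type handlerFunc func(payload []byte) string

// prefixMux is a hypothetical, simplified router keyed by task type pattern.
type prefixMux struct {
	patterns []string // kept sorted, longest pattern first
	handlers map[string]handlerFunc
}

func newPrefixMux() *prefixMux {
	return &prefixMux{handlers: make(map[string]handlerFunc)}
}

// handle registers h for every task type that begins with pattern.
func (m *prefixMux) handle(pattern string, h handlerFunc) {
	if _, exists := m.handlers[pattern]; !exists {
		m.patterns = append(m.patterns, pattern)
		sort.SliceStable(m.patterns, func(i, j int) bool {
			return len(m.patterns[i]) > len(m.patterns[j])
		})
	}
	m.handlers[pattern] = h
}

// lookup returns the handler whose pattern is the longest prefix of typename.
func (m *prefixMux) lookup(typename string) (handlerFunc, bool) {
	for _, p := range m.patterns {
		if strings.HasPrefix(typename, p) {
			return m.handlers[p], true
		}
	}
	return nil, false
}

func main() {
	mux := newPrefixMux()
	mux.handle("email:", func([]byte) string { return "generic email handler" })
	mux.handle("email:send", func([]byte) string { return "send handler" })

	for _, typ := range []string{"email:send", "email:digest", "image:resize"} {
		if h, ok := mux.lookup(typ); ok {
			fmt.Printf("%s -> %s\n", typ, h(nil))
		} else {
			fmt.Printf("%s -> no handler registered\n", typ)
		}
	}
}
```

Naming task types with a shared prefix such as `email:` keeps related handlers grouped and leaves room for a catch-all handler per group.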

- Scheduling a periodic task:

import (

"log"

"github.com/hibiken/asynq"

)

scheduler := asynq.NewScheduler(

asynq.RedisClientOpt{Addr: "localhost:6379"},

&asynq.SchedulerOpts{},

)

task := asynq.NewTask("cleanup:expired_data", nil)

entryID, err := scheduler.Register("@daily", task)

if err != nil {

log.Fatal(err)

}

log.Printf("registered periodic task: entry_id=%s", entryID)

if err := scheduler.Run(); err != nil {

log.Fatal(err)

}

Getting Started

To get started with Asynq, follow these steps:

- Install Asynq: go get -u github.com/hibiken/asynq

- Set up Redis (ensure it's running on your system)

- Create a client to enqueue tasks and a server to process them (see code examples above)

- Run your application and start processing tasks

For more detailed information and advanced usage, refer to the official documentation at https://github.com/hibiken/asynq.

Competitor Comparisons

Simple, efficient background processing for Ruby

Pros of Sidekiq

- Mature and battle-tested with a large community and ecosystem

- Rich feature set including web UI, cron jobs, and batches

- Extensive documentation and support options

Cons of Sidekiq

- Ruby-specific, limiting language choices

- Commercial version required for some advanced features

- Higher resource consumption compared to Asynq

Code Comparison

Sidekiq (Ruby):

class HardWorker

include Sidekiq::Worker

def perform(name, count)

# do something

end

end

HardWorker.perform_async('bob', 5)

Asynq (Go):

type EmailDeliveryTask struct {

UserID int

Email string

}

payload, err := json.Marshal(EmailDeliveryTask{UserID: 123, Email: "user@example.com"})

if err != nil {

log.Fatal(err)

}

task := asynq.NewTask("email:deliver", payload)

Both Sidekiq and Asynq provide simple APIs for defining and enqueuing background jobs. Sidekiq uses a class-based approach with Ruby, while Asynq uses structs and interfaces in Go. Sidekiq's syntax is more concise, but Asynq offers type safety and better performance characteristics due to Go's compiled nature.

Asynq is a newer project that aims to bring Sidekiq-like functionality to Go applications. It offers similar core features like Redis-backed queues, retries, and scheduled jobs. Asynq benefits from Go's performance and concurrency model but has a smaller ecosystem compared to Sidekiq's mature Ruby-based offering.

Resque is a Redis-backed Ruby library for creating background jobs, placing them on multiple queues, and processing them later.

Pros of Resque

- Mature and battle-tested, with a large community and extensive ecosystem

- Built-in web interface for monitoring and managing jobs

- Supports multiple queues and prioritization out of the box

Cons of Resque

- Ruby-specific, limiting its use in polyglot environments

- Relies on Redis for job storage, which may not be ideal for all use cases

- Can be more resource-intensive compared to newer alternatives

Code Comparison

Resque job definition:

class ImageProcessor

@queue = :image_processing

def self.perform(image_id)

image = Image.find(image_id)

image.process

end

end

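Asynq task handler:

func HandleImageProcess(ctx context.Context, t *asynq.Task) error {

var p struct{ ImageID int64 }

if err := json.Unmarshal(t.Payload(), &p); err != nil {

return err

}

image, err := findImage(p.ImageID) // findImage is an application-defined lookup

if err != nil {

return err

}

return image.Process()

}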

Asynq offers a more Go-idiomatic approach with context support and error handling, while Resque uses a class-based structure typical of Ruby. Asynq's design allows for better integration with Go's concurrency model and error handling patterns.

Beanstalk is a simple, fast work queue.

Pros of Beanstalkd

- Language-agnostic: Supports multiple programming languages through various client libraries

- Simple and lightweight: Easy to set up and use with minimal configuration

- Proven reliability: Has been in use for many years with a stable codebase

Cons of Beanstalkd

- Limited features: Lacks advanced functionality like periodic tasks or result storage

- No built-in monitoring: Requires additional tools for monitoring and management

- Single-node architecture: Doesn't natively support distributed processing

Code Comparison

Beanstalkd (using Go client):

c, _ := beanstalk.Dial("tcp", "127.0.0.1:11300")

id, _ := c.Put([]byte("hello"), 1, 0, 120*time.Second)

Asynq:

client := asynq.NewClient(asynq.RedisClientOpt{Addr: "localhost:6379"})

task := asynq.NewTask("email:send", []byte("hello"))

_, err := client.Enqueue(task)

Summary

Beanstalkd is a simple, language-agnostic job queue that's easy to set up and use. It's reliable and has been around for a long time. However, it lacks some advanced features and built-in monitoring capabilities. Asynq, on the other hand, is a Go-specific solution that offers more advanced features like periodic tasks and result storage, as well as built-in monitoring and distributed processing support. The choice between the two depends on your specific requirements and the programming languages you're using in your project.

Machinery is an asynchronous task queue/job queue based on distributed message passing.

Pros of Machinery

- Supports multiple brokers (AMQP, Redis, AWS SQS, GCP Pub/Sub)

- Offers more advanced features like rate limiting and chaining tasks

- Has a longer development history and potentially more mature codebase

Cons of Machinery

- More complex setup and configuration

- Heavier resource usage due to broader feature set

- Less focused on performance optimization compared to Asynq

Code Comparison

Machinery task definition:

var AddTask = &tasks.Signature{

Name: "add",

Args: []tasks.Arg{

{Type: "int64", Value: 1},

{Type: "int64", Value: 1},

},

}

Asynq task definition:

payload, err := json.Marshal(map[string]int{"x": 1, "y": 1})

if err != nil {

log.Fatal(err)

}

task := asynq.NewTask("add", payload)

Both libraries offer distributed task processing for Go applications, but they have different focuses. Machinery provides a more feature-rich solution with support for multiple brokers, while Asynq emphasizes simplicity and performance with Redis as its primary backend. Asynq's design is more streamlined, making it easier to set up and use for simpler use cases. However, Machinery's flexibility and advanced features may be preferable for more complex distributed systems or those requiring specific broker integrations.

Process background jobs in Go

Pros of Work

- Mature and battle-tested, with a longer history of production use

- Supports job priorities out of the box

- Offers more granular control over worker concurrency

Cons of Work

- Less active development and community support

- Limited to Redis as the backend storage

- Lacks some modern features like periodic jobs and middleware support

Code Comparison

Work:

// redisPool is an application-provided *redis.Pool (gomodule/redigo).

enqueuer := work.NewEnqueuer("my_app_namespace", redisPool)

_, err := enqueuer.Enqueue("send_email", work.Q{"name": "John", "age": 30})

Asynq:

payload, _ := json.Marshal(map[string]interface{}{"name": "John", "age": 30})

task := asynq.NewTask("send_email", payload)

_, err := client.Enqueue(task)

Both libraries offer similar functionality for enqueueing jobs. Work takes job arguments as a work.Q map and serializes them for you, while Asynq takes the payload as a byte slice, so the application chooses the encoding (JSON in this example) and can decode it into a typed struct in the handler.

While Work has been around longer and offers some unique features, Asynq is gaining popularity due to its active development, broader feature set, and more modern API design. The choice between the two depends on specific project requirements and preferences for API style and feature set.

Message queue system written in Go and backed by Redis

Pros of rmq

- Lightweight and simple implementation

- Supports both synchronous and asynchronous processing

- Easy to integrate with existing Go applications

Cons of rmq

- Less feature-rich compared to asynq

- Limited documentation and community support

- Lacks built-in monitoring and management tools

Code Comparison

rmq:

// errChan is a chan<- error supplied by the application (rmq v5 signature).

connection, err := rmq.OpenConnection("my service", "tcp", "localhost:6379", 1, errChan)

queue, err := connection.OpenQueue("my_queue")

err = queue.Publish(`{"arg1": "value1", "arg2": "value2"}`)

asynq:

client := asynq.NewClient(asynq.RedisClientOpt{Addr: "localhost:6379"})

payload, _ := json.Marshal(map[string]string{"arg1": "value1", "arg2": "value2"})

task := asynq.NewTask("my_job", payload)

_, err := client.Enqueue(task)

Both libraries are written in Go and provide simple ways to enqueue work. rmq publishes string payloads to a named queue, while asynq attaches a type name to each task, which the server uses to route it to a handler, and accepts per-task options such as retries and timeouts.

README

Simple, reliable & efficient distributed task queue in Go

Asynq is a Go library for queueing tasks and processing them asynchronously with workers. It's backed by Redis and is designed to be scalable yet easy to get started.

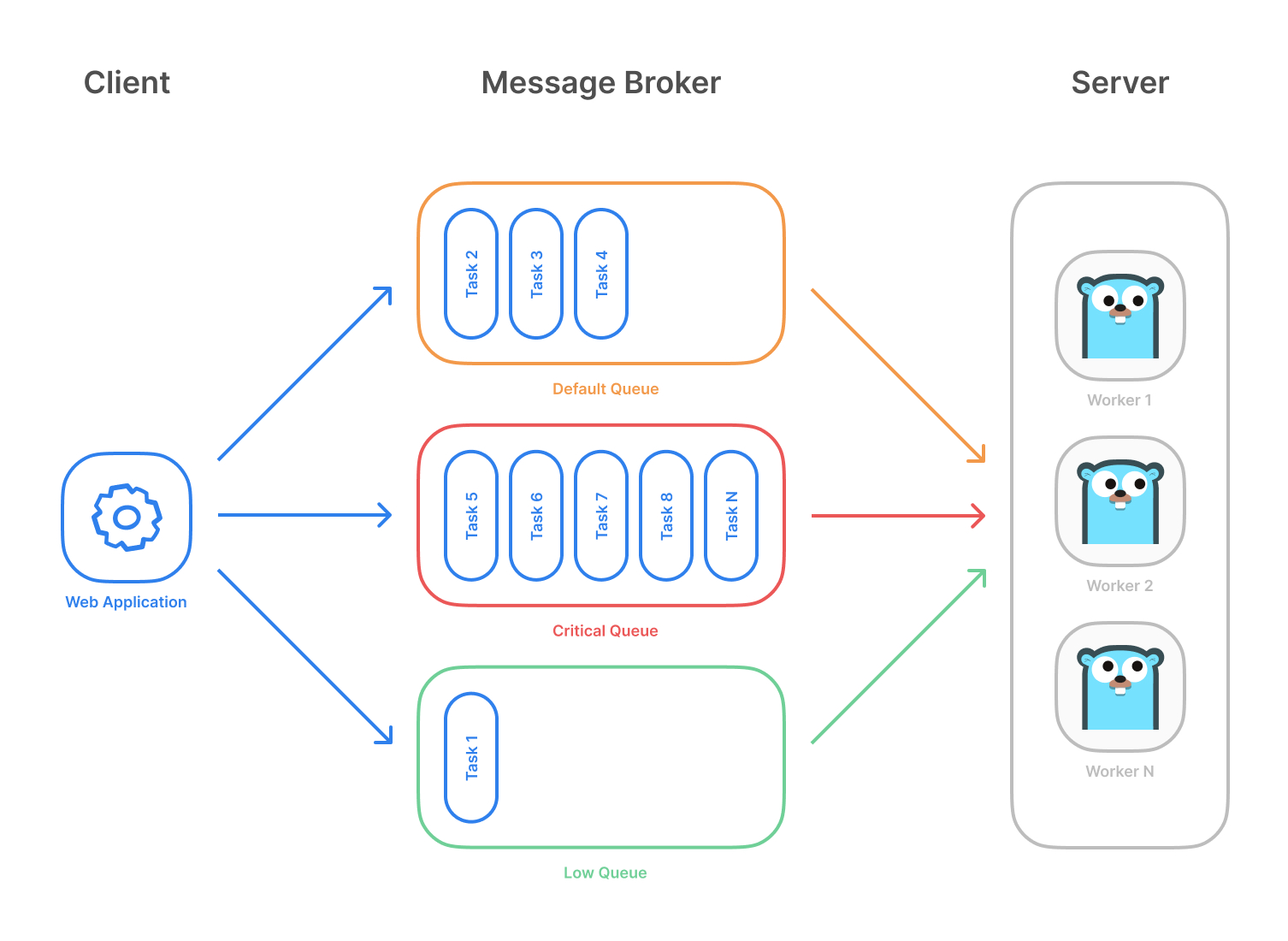

High-level overview of how Asynq works:

- Client puts tasks on a queue

- Server pulls tasks off queues and starts a worker goroutine for each task

- Tasks are processed concurrently by multiple workers

Task queues are used as a mechanism to distribute work across multiple machines. A system can consist of multiple worker servers and brokers, giving way to high availability and horizontal scaling.

Example use case

Features

- Guaranteed at least one execution of a task

- Scheduling of tasks

- Retries of failed tasks

- Automatic recovery of tasks in the event of a worker crash

- Weighted priority queues

- Strict priority queues

- Low latency to add a task since writes are fast in Redis

- De-duplication of tasks using unique option

- Allow timeout and deadline per task

- Allow aggregating group of tasks to batch multiple successive operations

- Flexible handler interface with support for middlewares

- Ability to pause queue to stop processing tasks from the queue

- Periodic Tasks

- Support Redis Sentinels for high availability

- Integration with Prometheus to collect and visualize queue metrics

- Web UI to inspect and remote-control queues and tasks

- CLI to inspect and remote-control queues and tasks

Stability and Compatibility

Status: The library is relatively stable and is currently undergoing moderate development with less frequent breaking API changes.

Important Note: Current major version is zero (v0.x.x) to accommodate rapid development and fast iteration while getting early feedback from users (feedback on APIs is appreciated!). The public API could change without a major version update before the v1.0.0 release.

Redis Cluster Compatibility

Some of the Lua scripts in this library may not be compatible with Redis Cluster.

Sponsoring

If you are using this package in production, please consider sponsoring the project to show your support!

Quickstart

Make sure you have Go installed (download). The last two Go versions are supported (See https://go.dev/dl).

Initialize your project by creating a folder and then running go mod init github.com/your/repo (learn more) inside the folder. Then install Asynq library with the go get command:

go get -u github.com/hibiken/asynq

Make sure you're running a Redis server locally or from a Docker container. Version 4.0 or higher is required.

Next, write a package that encapsulates task creation and task handling.

package tasks

import (

"context"

"encoding/json"

"fmt"

"log"

"time"

"github.com/hibiken/asynq"

)

// A list of task types.

const (

TypeEmailDelivery = "email:deliver"

TypeImageResize = "image:resize"

)

type EmailDeliveryPayload struct {

UserID int

TemplateID string

}

type ImageResizePayload struct {

SourceURL string

}

//----------------------------------------------

// Write a function NewXXXTask to create a task.

// A task consists of a type and a payload.

//----------------------------------------------

func NewEmailDeliveryTask(userID int, tmplID string) (*asynq.Task, error) {

payload, err := json.Marshal(EmailDeliveryPayload{UserID: userID, TemplateID: tmplID})

if err != nil {

return nil, err

}

return asynq.NewTask(TypeEmailDelivery, payload), nil

}

func NewImageResizeTask(src string) (*asynq.Task, error) {

payload, err := json.Marshal(ImageResizePayload{SourceURL: src})

if err != nil {

return nil, err

}

// task options can be passed to NewTask, which can be overridden at enqueue time.

return asynq.NewTask(TypeImageResize, payload, asynq.MaxRetry(5), asynq.Timeout(20 * time.Minute)), nil

}

//---------------------------------------------------------------

// Write a function HandleXXXTask to handle the input task.

// Note that it satisfies the asynq.HandlerFunc interface.

//

// Handler doesn't need to be a function. You can define a type

// that satisfies asynq.Handler interface. See examples below.

//---------------------------------------------------------------

func HandleEmailDeliveryTask(ctx context.Context, t *asynq.Task) error {

var p EmailDeliveryPayload

if err := json.Unmarshal(t.Payload(), &p); err != nil {

return fmt.Errorf("json.Unmarshal failed: %v: %w", err, asynq.SkipRetry)

}

log.Printf("Sending Email to User: user_id=%d, template_id=%s", p.UserID, p.TemplateID)

// Email delivery code ...

return nil

}

// ImageProcessor implements asynq.Handler interface.

type ImageProcessor struct {

// ... fields for struct

}

func (processor *ImageProcessor) ProcessTask(ctx context.Context, t *asynq.Task) error {

var p ImageResizePayload

if err := json.Unmarshal(t.Payload(), &p); err != nil {

return fmt.Errorf("json.Unmarshal failed: %v: %w", err, asynq.SkipRetry)

}

log.Printf("Resizing image: src=%s", p.SourceURL)

// Image resizing code ...

return nil

}

func NewImageProcessor() *ImageProcessor {

return &ImageProcessor{}

}

In your application code, import the above package and use Client to put tasks on queues.

package main

import (

"log"

"time"

"github.com/hibiken/asynq"

"your/app/package/tasks"

)

const redisAddr = "127.0.0.1:6379"

func main() {

client := asynq.NewClient(asynq.RedisClientOpt{Addr: redisAddr})

defer client.Close()

// ------------------------------------------------------

// Example 1: Enqueue task to be processed immediately.

// Use (*Client).Enqueue method.

// ------------------------------------------------------

task, err := tasks.NewEmailDeliveryTask(42, "some:template:id")

if err != nil {

log.Fatalf("could not create task: %v", err)

}

info, err := client.Enqueue(task)

if err != nil {

log.Fatalf("could not enqueue task: %v", err)

}

log.Printf("enqueued task: id=%s queue=%s", info.ID, info.Queue)

// ------------------------------------------------------------

// Example 2: Schedule task to be processed in the future.

// Use ProcessIn or ProcessAt option.

// ------------------------------------------------------------

info, err = client.Enqueue(task, asynq.ProcessIn(24*time.Hour))

if err != nil {

log.Fatalf("could not schedule task: %v", err)

}

log.Printf("enqueued task: id=%s queue=%s", info.ID, info.Queue)

// ----------------------------------------------------------------------------

// Example 3: Set other options to tune task processing behavior.

// Options include MaxRetry, Queue, Timeout, Deadline, Unique etc.

// ----------------------------------------------------------------------------

task, err = tasks.NewImageResizeTask("https://example.com/myassets/image.jpg")

if err != nil {

log.Fatalf("could not create task: %v", err)

}

info, err = client.Enqueue(task, asynq.MaxRetry(10), asynq.Timeout(3 * time.Minute))

if err != nil {

log.Fatalf("could not enqueue task: %v", err)

}

log.Printf("enqueued task: id=%s queue=%s", info.ID, info.Queue)

}

Next, start a worker server to process these tasks in the background. To start the background workers, use Server and provide your Handler to process the tasks.

You can optionally use ServeMux to create a handler, just as you would with net/http Handler.

package main

import (

"log"

"github.com/hibiken/asynq"

"your/app/package/tasks"

)

const redisAddr = "127.0.0.1:6379"

func main() {

srv := asynq.NewServer(

asynq.RedisClientOpt{Addr: redisAddr},

asynq.Config{

// Specify how many concurrent workers to use

Concurrency: 10,

// Optionally specify multiple queues with different priority.

Queues: map[string]int{

"critical": 6,

"default": 3,

"low": 1,

},

// See the godoc for other configuration options

},

)

// mux maps a type to a handler

mux := asynq.NewServeMux()

mux.HandleFunc(tasks.TypeEmailDelivery, tasks.HandleEmailDeliveryTask)

mux.Handle(tasks.TypeImageResize, tasks.NewImageProcessor())

// ...register other handlers...

if err := srv.Run(mux); err != nil {

log.Fatalf("could not run server: %v", err)

}

}
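The Queues map in the server config assigns each queue a weight. As I read the project's queue priority documentation, when every queue has work waiting, tasks are drawn from each queue roughly in proportion to its weight. The small sketch below turns the weights from the config above into those approximate shares; `shares` is a hypothetical helper, and it does not model how the server actually selects a queue.

```go
package main

import (
	"fmt"
	"sort"
)

// shares converts queue weights into the fraction of processing each queue
// would receive when every queue has work waiting.
func shares(weights map[string]int) map[string]float64 {
	total := 0
	for _, w := range weights {
		total += w
	}
	out := make(map[string]float64, len(weights))
	if total == 0 {
		return out
	}
	for name, w := range weights {
		out[name] = float64(w) / float64(total)
	}
	return out
}

func main() {
	weights := map[string]int{"critical": 6, "default": 3, "low": 1}
	s := shares(weights)

	names := make([]string, 0, len(s))
	for name := range s {
		names = append(names, name)
	}
	// Highest share first; ties broken by name for stable output.
	sort.Slice(names, func(i, j int) bool {
		if s[names[i]] != s[names[j]] {
			return s[names[i]] > s[names[j]]
		}
		return names[i] < names[j]
	})
	for _, name := range names {
		fmt.Printf("%-8s %.0f%%\n", name, s[name]*100)
	}
}
```

With the weights 6, 3, and 1, the shares come out to about 60%, 30%, and 10%.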

For a more detailed walk-through of the library, see our Getting Started guide.

To learn more about asynq features and APIs, see the package godoc.

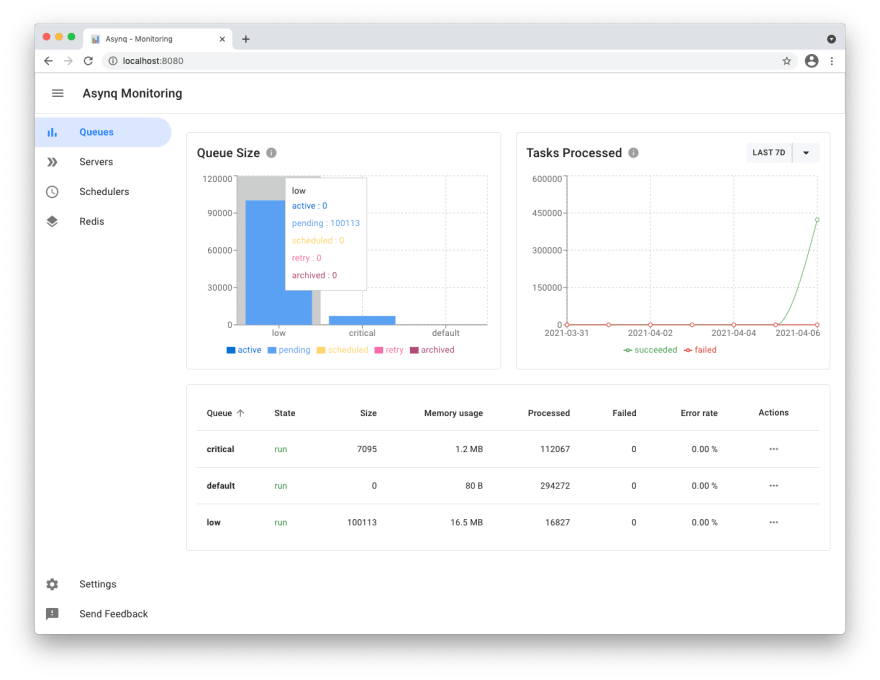

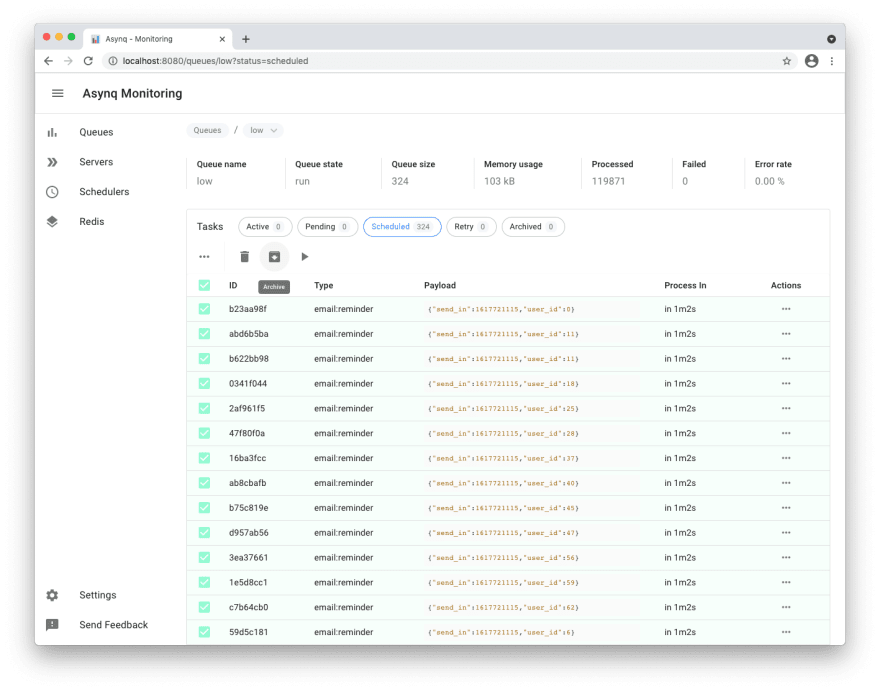

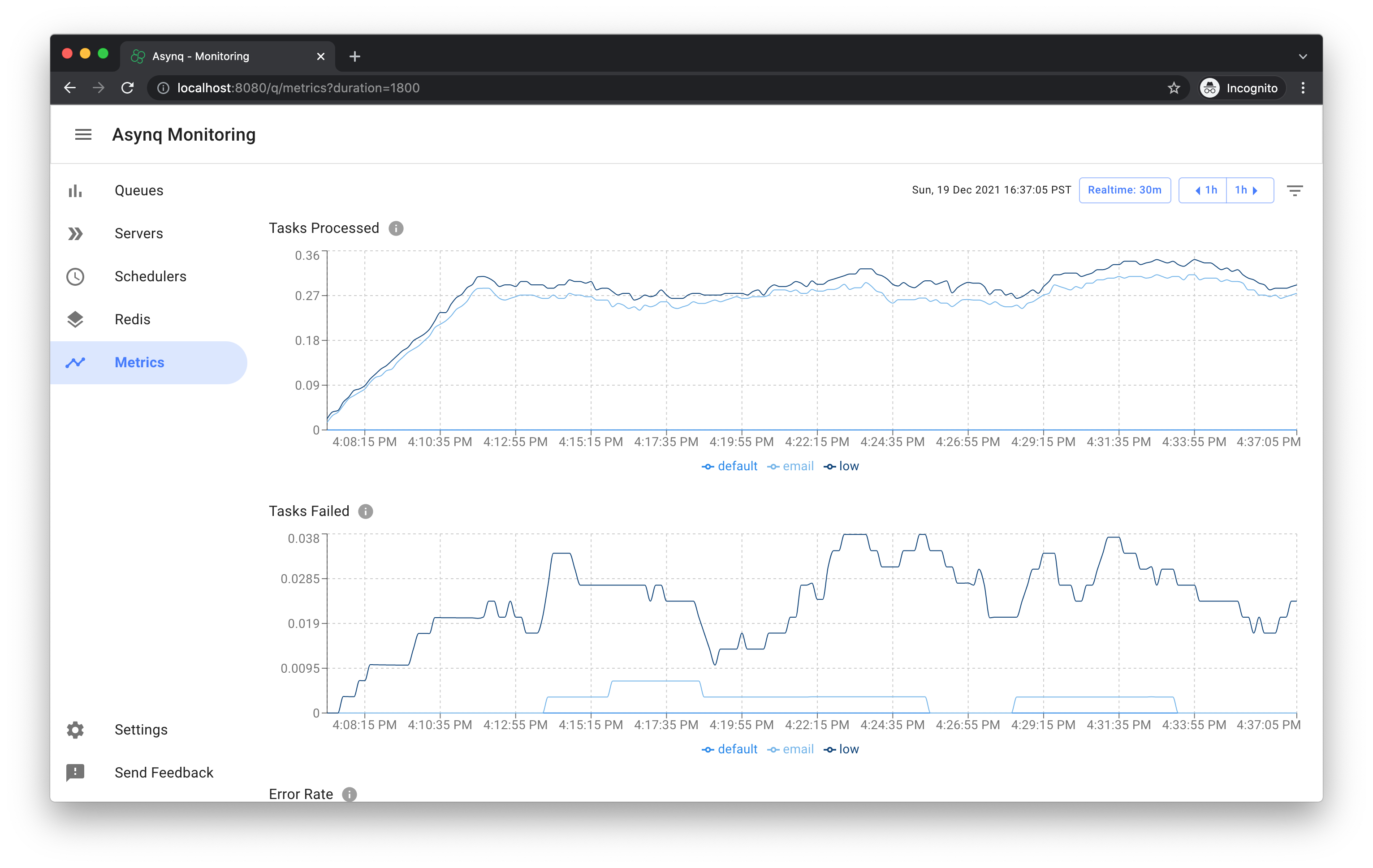

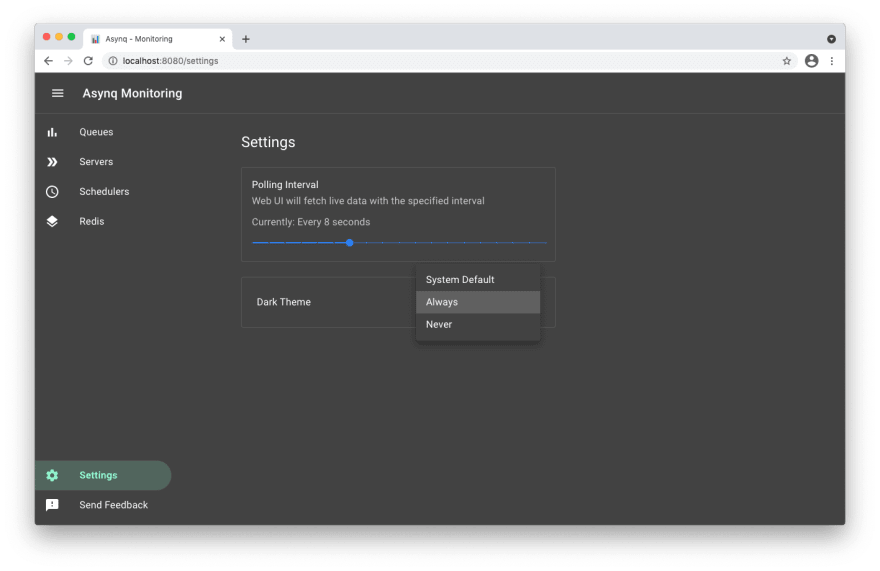

Web UI

Asynqmon is a web based tool for monitoring and administrating Asynq queues and tasks.

Here's a few screenshots of the Web UI:

Queues view

Tasks view

Metrics view

Settings and adaptive dark mode

For details on how to use the tool, refer to the tool's README.

Command Line Tool

Asynq ships with a command line tool to inspect the state of queues and tasks.

To install the CLI tool, run the following command:

go install github.com/hibiken/asynq/tools/asynq@latest

Here's an example of running the asynq dash command:

For details on how to use the tool, refer to the tool's README.

Contributing

We are open to, and grateful for, any contributions (GitHub issues/PRs, feedback on Gitter channel, etc) made by the community.

Please see the Contribution Guide before contributing.

License

Copyright (c) 2019-present Ken Hibino and Contributors. Asynq is free and open-source software licensed under the MIT License. Official logo was created by Vic Shóstak and distributed under Creative Commons license (CC0 1.0 Universal).
