kedro

Kedro is a toolbox for production-ready data science. It uses software engineering best practices to help you create data engineering and data science pipelines that are reproducible, maintainable, and modular.

Top Related Projects

- Prefect: a workflow orchestration framework for building resilient data pipelines in Python.

- Dagster: an orchestration platform for the development, production, and observation of data assets.

- Apache Airflow: a platform to programmatically author, schedule, and monitor workflows.

- MLflow: the open source developer platform to build AI/LLM applications and models with confidence. Enhance your AI applications with end-to-end tracking, observability, and evaluations, all in one integrated platform.

- Ploomber: the fastest ⚡️ way to build data pipelines. Develop iteratively, deploy anywhere. ☁️

Quick Overview

Kedro is an open-source Python framework for creating reproducible, maintainable, and modular data science code. It provides a standard structure for data science projects, making it easier to collaborate, scale, and deploy machine learning models.

Pros

- Promotes best practices in software engineering for data science projects

- Enhances reproducibility and maintainability of data pipelines

- Provides a standardized project structure, making it easier to onboard new team members

- Integrates well with various data science tools and platforms

Cons

- Steep learning curve for those unfamiliar with software engineering principles

- May feel overly structured for small or simple projects

- Limited flexibility in project structure compared to a custom setup

- Requires additional setup and configuration compared to ad-hoc scripting

Code Examples

- Creating a node in Kedro:

    from kedro.pipeline import node

    def process_data(data):
        return data.dropna()

    process_node = node(
        func=process_data,
        inputs="raw_data",
        outputs="cleaned_data",
        name="clean_data_node",
    )

- Defining a pipeline:

    from kedro.pipeline import Pipeline, node

    # load_data, clean_data and train_model are plain Python functions defined elsewhere
    data_processing_pipeline = Pipeline(
        [
            node(func=load_data, inputs=None, outputs="raw_data"),
            node(func=clean_data, inputs="raw_data", outputs="cleaned_data"),
            node(func=train_model, inputs="cleaned_data", outputs="model"),
        ]
    )

- Running a pipeline:

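    from kedro.io import DataCatalog
    from kedro.runner import SequentialRunner

    # run() needs a catalog; an empty one keeps every dataset in memory
    runner = SequentialRunner()
    runner.run(data_processing_pipeline, DataCatalog())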
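The three snippets above rely on each node's `inputs` being matched to another node's `outputs` by dataset name. The following is a minimal standard-library sketch of that idea, not Kedro's implementation: `MiniNode`, `run_in_order`, and the sample functions are hypothetical names introduced for illustration, and a plain dict stands in for the data catalog.

```python
from dataclasses import dataclass
from graphlib import TopologicalSorter
from typing import Callable, Optional


@dataclass(frozen=True)
class MiniNode:
    """Hypothetical stand-in for a pipeline node: a function plus dataset names."""
    func: Callable
    inputs: Optional[str]  # name of the dataset the function reads, or None
    outputs: str           # name of the dataset the function writes
    name: str


def run_in_order(nodes, data):
    """Run nodes so that every producer executes before its consumers."""
    producer = {n.outputs: n.name for n in nodes}  # dataset name -> producing node
    by_name = {n.name: n for n in nodes}
    # Each node depends on whichever node produces its input dataset (if any).
    graph = {
        n.name: {producer[n.inputs]} if n.inputs in producer else set()
        for n in nodes
    }
    order = list(TopologicalSorter(graph).static_order())
    for node_name in order:
        node = by_name[node_name]
        args = [] if node.inputs is None else [data[node.inputs]]
        data[node.outputs] = node.func(*args)
    return order


def load_data():
    return [3.0, None, 5.0]


def clean_data(rows):
    return [r for r in rows if r is not None]


def train_model(rows):
    return {"mean": sum(rows) / len(rows)}


# Deliberately listed out of order: the dataset names alone determine the order.
nodes = [
    MiniNode(train_model, "cleaned_data", "model", "train"),
    MiniNode(clean_data, "raw_data", "cleaned_data", "clean"),
    MiniNode(load_data, None, "raw_data", "load"),
]
datasets = {}
execution_order = run_in_order(nodes, datasets)
print(execution_order)
print(datasets["model"])
```

Because the wiring lives in the names rather than in function calls, the functions themselves stay ordinary Python and can be reused or tested without the pipeline.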

Getting Started

To get started with Kedro, follow these steps:

- Install Kedro:

pip install kedro

- Create a new project:

kedro new

- Navigate to the project directory and install dependencies:

cd <project_name>

pip install -r requirements.txt

- Run the example pipeline:

kedro run

This will set up a basic Kedro project structure and run the example pipeline. You can then start building your own data science project using Kedro's framework and best practices.

Competitor Comparisons

Prefect is a workflow orchestration framework for building resilient data pipelines in Python.

Pros of Prefect

- More flexible scheduling and execution options, including distributed and cloud-native workflows

- Built-in support for real-time monitoring and alerting

- Extensive API and integrations with various data tools and platforms

Cons of Prefect

- Steeper learning curve due to more complex architecture

- Less emphasis on data pipeline structure and modularity

- May be overkill for simpler data processing tasks

Code Comparison

Kedro pipeline definition:

    pipeline = Pipeline([
        node(func=preprocess_data, inputs="raw_data", outputs="cleaned_data"),
        node(func=train_model, inputs="cleaned_data", outputs="model")
    ])

Prefect flow definition:

    from prefect import flow

    # load_data, preprocess_data and train_model are defined elsewhere
    @flow
    def data_pipeline():
        raw_data = load_data()
        cleaned_data = preprocess_data(raw_data)
        model = train_model(cleaned_data)
        return model

Both Kedro and Prefect offer ways to define data pipelines, but Prefect uses a more function-oriented approach with decorators, while Kedro focuses on explicit node connections. Kedro's structure may be more intuitive for data scientists, while Prefect's approach offers more flexibility for complex workflows.

Dagster - An orchestration platform for the development, production, and observation of data assets.

Pros of Dagster

- More comprehensive asset management and observability features

- Better support for cloud-native deployments and containerization

- Stronger emphasis on data lineage and impact analysis

Cons of Dagster

- Steeper learning curve due to more complex architecture

- Less focus on modular project structure compared to Kedro

- Potentially overkill for smaller data projects

Code Comparison

Kedro pipeline definition:

    def create_pipeline(**kwargs):
        return Pipeline(
            [
                node(process_data, "raw_data", "processed_data"),
                node(train_model, "processed_data", "model"),
            ]
        )

Dagster job definition:

    from dagster import job

    # process_data and train_model are assumed to be @op-decorated functions
    @job
    def my_job():
        processed_data = process_data()
        train_model(processed_data)

Both Kedro and Dagster are powerful data orchestration tools, but they have different strengths. Kedro excels in providing a structured approach to data science projects, while Dagster offers more advanced features for complex data pipelines and observability. The choice between them depends on the specific needs of your project and team.

Apache Airflow - A platform to programmatically author, schedule, and monitor workflows

Pros of Airflow

- More mature and widely adopted in industry

- Extensive ecosystem with many integrations and plugins

- Powerful scheduling capabilities for complex workflows

Cons of Airflow

- Steeper learning curve and more complex setup

- Can be resource-intensive for smaller projects

- Less focus on data science-specific workflows

Code Comparison

Airflow DAG definition:

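    from datetime import datetime

    from airflow import DAG
    from airflow.operators.python import PythonOperator  # Airflow 2.x import path

    def task_function():
        # Task logic here
        pass

    with DAG('example_dag', start_date=datetime(2024, 1, 1), schedule_interval='@daily') as dag:
        PythonOperator(task_id='example_task', python_callable=task_function)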

Kedro pipeline definition:

    from kedro.pipeline import Pipeline, node

    def task_function():
        # Task logic here; the return value is saved as "output_name"
        return "result"

    pipeline = Pipeline([
        node(func=task_function, inputs=None, outputs="output_name")
    ])

Both Kedro and Airflow are powerful tools for data pipeline management, but they cater to different use cases. Airflow excels in complex, production-grade workflow orchestration, while Kedro focuses on modular, reproducible data science workflows. The choice between them depends on the specific needs of your project and team.

MLflow - The open source developer platform to build AI/LLM applications and models with confidence. Enhance your AI applications with end-to-end tracking, observability, and evaluations, all in one integrated platform.

Pros of MLflow

- More comprehensive experiment tracking and model registry

- Better support for model serving and deployment

- Wider ecosystem integration with various ML frameworks

Cons of MLflow

- Steeper learning curve for beginners

- Less focus on data engineering and pipeline management

- Can be overkill for smaller projects or teams

Code Comparison

MLflow example:

    import mlflow

    with mlflow.start_run():
        mlflow.log_param("param1", 5)
        mlflow.log_metric("accuracy", 0.85)

Kedro example:

    from kedro.pipeline import node, Pipeline

    def process_data(data):
        return data.dropna()

    pipeline = Pipeline([
        node(process_data, inputs="raw_data", outputs="clean_data")
    ])

MLflow focuses on experiment tracking and model management, so its code centers on logging parameters and metrics. Kedro focuses on reproducible, maintainable data pipelines, so its code centers on defining data processing nodes and connecting them. The two tools serve different primary purposes in the ML workflow.

Ploomber - The fastest ⚡️ way to build data pipelines. Develop iteratively, deploy anywhere. ☁️

Pros of Ploomber

- Lightweight and flexible, allowing for easier integration with existing workflows

- Strong focus on reproducibility and collaboration through notebook-based workflows

- Built-in support for cloud execution and distributed computing

Cons of Ploomber

- Smaller community and ecosystem compared to Kedro

- Less opinionated structure, which may lead to less consistency across projects

- Fewer built-in features for data versioning and pipeline visualization

Code Comparison

Kedro pipeline definition:

    def create_pipeline(**kwargs):
        return Pipeline(
            [
                node(func=preprocess_data, inputs="raw_data", outputs="preprocessed_data"),
                node(func=train_model, inputs="preprocessed_data", outputs="model"),
            ]
        )

Ploomber pipeline definition:

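    from ploomber import DAG
    from ploomber.products import File
    from ploomber.tasks import PythonCallable

    # preprocess_data(product) and train_model(product, upstream) are defined elsewhere
    dag = DAG()
    preprocess = PythonCallable(preprocess_data, File('preprocessed_data.pkl'), dag=dag)
    train = PythonCallable(train_model, File('model.pkl'), dag=dag)
    preprocess >> train  # train runs after preprocess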

Both Kedro and Ploomber offer powerful data pipeline management capabilities, but they differ in their approach and focus. Kedro provides a more structured and opinionated framework, while Ploomber emphasizes flexibility and notebook-based workflows. The choice between the two depends on project requirements and team preferences.

README

What is Kedro?

Kedro is a toolbox for production-ready data science. It uses software engineering best practices to help you create data engineering and data science pipelines that are reproducible, maintainable, and modular. You can find out more at kedro.org.

Kedro is an open-source Python framework hosted by the LF AI & Data Foundation.

How do I install Kedro?

To install Kedro from the Python Package Index (PyPI) run:

uv pip install kedro

It is also possible to install Kedro using conda:

conda install -c conda-forge kedro

Our Get Started guide contains full installation instructions, and includes how to set up Python virtual environments.

Installation from source

To access the latest Kedro version before its official release, install it from the main branch.

uv pip install git+https://github.com/kedro-org/kedro@main

What are the main features of Kedro?

| Feature | What is this? |

|---|---|

| Project Template | A standard, modifiable and easy-to-use project template based on Cookiecutter Data Science. |

| Data Catalog | A series of lightweight data connectors used to save and load data across many different file formats and file systems, including local and network file systems, cloud object stores, and HDFS. The Data Catalog also includes data and model versioning for file-based systems. |

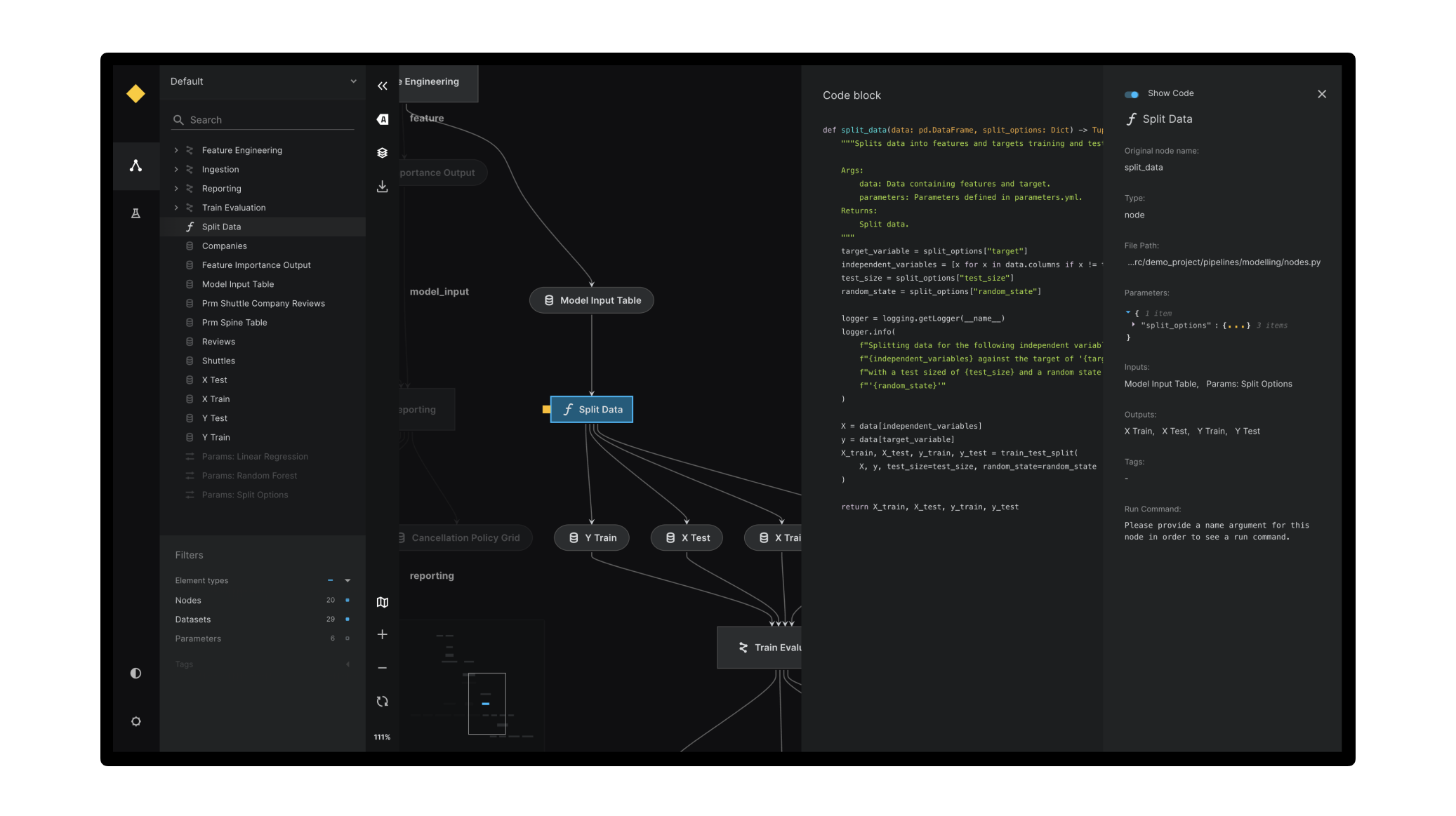

| Pipeline Abstraction | Automatic resolution of dependencies between pure Python functions and data pipeline visualisation using Kedro-Viz. |

| Coding Standards | Test-driven development using pytest, produce well-documented code using Sphinx, create linted code with support for ruff and make use of the standard Python logging library. |

| Flexible Deployment | Deployment strategies that include single or distributed-machine deployment as well as additional support for deploying on Argo, Prefect, Kubeflow, AWS Batch, and Databricks. |
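To make the Data Catalog row more concrete, here is a minimal standard-library sketch of the underlying idea: pipeline code asks for data by name, and a registry decides the file format and location. It is not Kedro's `DataCatalog` API; `MiniCatalog`, `JsonFile`, and `CsvFile` are hypothetical names, and only local files are handled.

```python
import csv
import json
import tempfile
from pathlib import Path


class JsonFile:
    """Hypothetical connector that stores any JSON-serialisable object."""

    def __init__(self, path):
        self.path = Path(path)

    def save(self, data):
        self.path.write_text(json.dumps(data))

    def load(self):
        return json.loads(self.path.read_text())


class CsvFile:
    """Hypothetical connector that stores a list of dicts as CSV rows."""

    def __init__(self, path):
        self.path = Path(path)

    def save(self, rows):
        with self.path.open("w", newline="") as f:
            writer = csv.DictWriter(f, fieldnames=list(rows[0]))
            writer.writeheader()
            writer.writerows(rows)

    def load(self):
        with self.path.open(newline="") as f:
            return list(csv.DictReader(f))  # values come back as strings


class MiniCatalog:
    """Maps dataset names to connectors so callers never touch file paths."""

    def __init__(self, datasets):
        self._datasets = datasets

    def save(self, name, data):
        self._datasets[name].save(data)

    def load(self, name):
        return self._datasets[name].load()


workdir = Path(tempfile.mkdtemp())
catalog = MiniCatalog({
    "raw_data": CsvFile(workdir / "raw_data.csv"),
    "model": JsonFile(workdir / "model.json"),
})

catalog.save("raw_data", [{"id": "1", "value": "3.5"}, {"id": "2", "value": "4.5"}])
rows = catalog.load("raw_data")
catalog.save("model", {"mean": sum(float(r["value"]) for r in rows) / len(rows)})
print(catalog.load("model"))
```

Swapping a storage format in this sketch means changing one entry in the registry; the code that calls `load` and `save` stays the same.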

How do I use Kedro?

The Kedro documentation first explains how to install Kedro and then introduces key Kedro concepts.

You can then review the spaceflights tutorial to build a Kedro project for hands-on experience.

For new and intermediate Kedro users, there's a comprehensive section on how to visualise Kedro projects using Kedro-Viz.

A pipeline visualisation generated using Kedro-Viz


Additional documentation explains how to work with Kedro and Jupyter notebooks, and there is a set of advanced user guides for key Kedro features. We also recommend the API reference documentation for further information.

Why does Kedro exist?

Kedro is built upon our collective best practices (and mistakes) from trying to deliver real-world ML applications that depend on vast amounts of raw, unvetted data. We developed Kedro to achieve the following:

- To address the main shortcomings of Jupyter notebooks, one-off scripts, and glue code by focusing on creating maintainable data science code

- To enhance team collaboration when different team members have varied exposure to software engineering concepts

- To increase efficiency, because applied concepts like modularity and separation of concerns inspire the creation of reusable analytics code

Find out more about how Kedro can answer your use cases from the product FAQs on the Kedro website.

The humans behind Kedro

The Kedro product team and a number of open source contributors from across the world maintain Kedro.

Can I contribute?

Yes! We welcome all kinds of contributions. Check out our guide to contributing to Kedro.

Where can I learn more?

There is a growing community around Kedro. We encourage you to ask and answer technical questions on Slack and bookmark the Linen archive of past discussions.

We keep a list of technical FAQs in the Kedro documentation and you can find a growing list of blog posts, videos and projects that use Kedro over on the awesome-kedro GitHub repository. If you have created anything with Kedro we'd love to include it on the list. Just make a PR to add it!

How can I cite Kedro?

If you're an academic, Kedro can also help you, for example, as a tool to solve the problem of reproducible research. Use the "Cite this repository" button on our repository to generate a citation from the CITATION.cff file.

Python version support policy

- The core Kedro Framework supports all Python versions that are actively maintained by the CPython core team. When a Python version reaches end of life, support for that version is dropped from Kedro. This is not considered a breaking change.

- The Kedro Datasets package follows the NEP 29 Python version support policy. This means that `kedro-datasets` generally drops Python version support before `kedro`. This is because `kedro-datasets` has a lot of dependencies that follow NEP 29, and the more conservative version support approach of the Kedro Framework makes it hard to manage those dependencies properly.
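As a rough illustration of why the two policies diverge: NEP 29 recommends supporting Python minor versions released within the previous 42 months (and at least the two most recent ones), which is typically a shorter window than CPython's own maintenance period. The sketch below applies only the 42-month rule to a hand-entered table of CPython release dates. The helper names are hypothetical, and this is not how either package actually decides which versions to support.

```python
from datetime import date

# CPython feature-release dates, entered by hand for illustration.
PYTHON_RELEASES = {
    "3.9": date(2020, 10, 5),
    "3.10": date(2021, 10, 4),
    "3.11": date(2022, 10, 24),
    "3.12": date(2023, 10, 2),
    "3.13": date(2024, 10, 7),
}


def months_between(earlier, later):
    """Whole calendar months elapsed from `earlier` to `later`."""
    months = (later.year - earlier.year) * 12 + (later.month - earlier.month)
    if later.day < earlier.day:
        months -= 1  # the final month is not complete yet
    return months


def within_nep29_window(on_date, window_months=42):
    """Versions released fewer than `window_months` whole months before `on_date`."""
    return [
        version
        for version, released in PYTHON_RELEASES.items()
        if released <= on_date and months_between(released, on_date) < window_months
    ]


print(within_nep29_window(date(2025, 1, 1)))
```

Under this simplified rule, Python 3.9 had already left the window by January 2025 even though CPython still maintained it, which is the kind of gap the bullet above describes.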

☕️ Kedro Coffee Chat 🔶

We appreciate our community and want to stay connected. For that, we offer a public Coffee Chat format where we share updates and cool stuff around Kedro once every two weeks and give you time to ask your questions live.

Check out the upcoming demo topics and dates at the Kedro Coffee Chat wiki page.

Follow our Slack announcement channel to see Kedro Coffee Chat announcements and access demo recordings.
