Top Related Projects

tap-producing test harness for node and browsers

Node.js test runner that lets you develop with confidence 🚀

☕️ simple, flexible, fun javascript test framework for node.js & the browser

Delightful JavaScript Testing.

Simple JavaScript testing framework for browsers and node.js

Fast, easy and reliable testing for anything that runs in a browser.

Quick Overview

Tape is a lightweight JavaScript testing library for Node.js and browsers. It takes a minimalist approach to writing and running tests, emits TAP output that continuous integration systems and other tools can consume, and supports both synchronous and asynchronous tests.

Pros

- Minimal and lightweight, with no configuration required

- Works in both Node.js and browser environments

- Supports asynchronous testing out of the box

- Produces TAP (Test Anything Protocol) output, making it easy to integrate with other tools

Cons

- Limited built-in assertion methods compared to more comprehensive testing frameworks

- No describe-style grouping beyond nested subtests (t.test), and only basic built-in spying (t.capture, t.intercept) rather than a full mocking library

- May require additional libraries for more complex testing scenarios

- Less suitable for large-scale applications with complex testing requirements

Code Examples

- Basic synchronous test:

const test = require('tape');

test('Basic arithmetic test', (t) => {

t.equal(2 + 2, 4, 'Addition works correctly');

t.notEqual(2 * 3, 5, 'Multiplication is not equal to 5');

t.end();

});

- Asynchronous test:

const test = require('tape');

test('Asynchronous test', (t) => {

t.plan(1);

setTimeout(() => {

t.pass('Async operation completed');

}, 1000);

});

- Testing promises:

const test = require('tape');

test('Promise test', async (t) => {

t.plan(1);

const result = await Promise.resolve(42);

t.equal(result, 42, 'Promise resolves to expected value');

});

Getting Started

To start using Tape in your project, follow these steps:

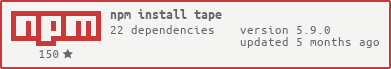

- Install Tape:

npm install tape --save-dev

- Create a test file (e.g., test.js):

const test = require('tape');

test('My first test', (t) => {

t.equal(true, true, 'True is true');

t.end();

});

- Run the test:

node test.js

You can also add a test script to your package.json:

"scripts": {

"test": "tape test.js"

}

Then run tests with npm test.

Competitor Comparisons

tap-producing test harness for node and browsers

Pros of tape

- Simpler and more lightweight testing framework

- Easier to get started with for beginners

- Minimal configuration required

Cons of tape

- Less feature-rich compared to tape>

- Limited built-in assertion methods

- Lacks advanced reporting and test organization options

Code Comparison

tape:

const test = require('tape');

test('basic assertion', (t) => {

t.equal(1 + 1, 2);

t.end();

});

tape>:

import { test } from 'tape>';

test('basic assertion', (t) => {

t.equal(1 + 1, 2);

t.end();

});

The code comparison shows that both frameworks have similar syntax for basic tests. However, tape> uses ES6 import syntax, while tape uses CommonJS require.

tape> builds upon the simplicity of tape while offering additional features and improvements. It aims to provide a more modern and feature-rich testing experience while maintaining the core principles of tape. The choice between the two depends on the specific needs of the project and the developer's preferences for simplicity versus additional functionality.

Node.js test runner that lets you develop with confidence 🚀

Pros of AVA

- Concurrent test execution, leading to faster test runs

- Built-in assertion library with more expressive assertions

- Isolated test environments for each test file

Cons of AVA

- Steeper learning curve due to more complex API

- Larger package size and more dependencies

- Less suitable for simple projects or quick prototyping

Code Comparison

tape:

const test = require('tape');

test('My test', (t) => {

t.equal(1 + 1, 2);

t.end();

});

AVA:

import test from 'ava';

test('My test', (t) => {

t.is(1 + 1, 2);

});

Key Differences

- AVA uses ES6 module syntax, while tape uses CommonJS

- AVA doesn't require explicit test ending (t.end())

- AVA's assertion methods have slightly different names (e.g., 'is' instead of 'equal')

Use Cases

- tape: Simple projects, quick prototyping, minimal setup

- AVA: Larger projects, need for concurrent testing, more advanced features

Both frameworks offer a straightforward approach to testing, but AVA provides more features at the cost of increased complexity. The choice between them depends on project requirements and personal preference.

☕️ simple, flexible, fun javascript test framework for node.js & the browser

Pros of Mocha

- Rich feature set with support for various testing styles (BDD, TDD) and for any assertion library, such as Node's built-in assert

- Extensive ecosystem with plugins and integrations

- Attractive and detailed test reporter output

Cons of Mocha

- Steeper learning curve due to more complex API and configuration options

- Heavier footprint and slower execution compared to minimalist alternatives

- Requires additional setup and configuration for optimal use

Code Comparison

Tape:

const test = require('tape');

test('My test', (t) => {

t.equal(1 + 1, 2);

t.end();

});

Mocha:

const assert = require('assert');

describe('My test', () => {

it('should add numbers correctly', () => {

assert.equal(1 + 1, 2);

});

});

Summary

Mocha offers a feature-rich testing environment with extensive customization options, making it suitable for complex projects. However, this comes at the cost of increased complexity and setup time. Tape, on the other hand, provides a simpler, more lightweight approach to testing, which may be preferable for smaller projects or developers who prioritize simplicity and speed. The choice between the two depends on project requirements, team preferences, and the desired balance between features and simplicity.

Delightful JavaScript Testing.

Pros of Jest

- Built-in mocking and assertion capabilities

- Parallel test execution for faster performance

- Snapshot testing for UI components

Cons of Jest

- Larger package size and more dependencies

- Steeper learning curve for beginners

- Potentially slower startup time for small projects

Code Comparison

Jest:

describe('sum module', () => {

test('adds 1 + 2 to equal 3', () => {

expect(sum(1, 2)).toBe(3);

});

});

Tape:

const test = require('tape');

test('sum module', (t) => {

t.equal(sum(1, 2), 3, 'adds 1 + 2 to equal 3');

t.end();

});

Key Differences

- Jest provides a more feature-rich environment with built-in mocking and assertion libraries

- Tape follows a minimalist approach, focusing on simplicity and ease of use

- Jest uses a describe/test structure, while Tape uses a single test function

- Jest offers automatic test discovery, whereas Tape runs only the files or globs you pass to node or to the tape binary

- Tape has a smaller footprint and faster startup time for small projects

Use Cases

- Jest: Large-scale applications, React projects, complex testing scenarios

- Tape: Small to medium-sized projects, quick prototypes, environments where simplicity is preferred

Simple JavaScript testing framework for browsers and node.js

Pros of Jasmine

- Rich set of built-in matchers and assertion styles

- Supports asynchronous testing out of the box

- Extensive documentation and large community support

Cons of Jasmine

- Steeper learning curve due to more complex syntax

- Heavier and more opinionated framework compared to Tape

- Setup and configuration can be more time-consuming

Code Comparison

Jasmine:

describe('Calculator', () => {

it('should add two numbers', () => {

expect(add(2, 3)).toBe(5);

});

});

Tape:

test('Calculator', (t) => {

t.equal(add(2, 3), 5, 'should add two numbers');

t.end();

});

Key Differences

- Syntax: Jasmine uses describe and it blocks, while Tape uses a single test function

- Assertions: Jasmine has a wide range of matchers (toBe, toEqual, etc.), whereas Tape uses simpler assertion methods (equal, deepEqual, etc.)

- Setup: Jasmine requires more configuration, while Tape is more lightweight and easier to set up

- Philosophy: Jasmine follows a BDD-style approach, while Tape adheres to a simpler, more functional testing style

Both frameworks are widely used and have their strengths. Jasmine is often preferred for larger projects with complex test suites, while Tape is favored for its simplicity and ease of use in smaller projects or when a minimal testing framework is desired.

Fast, easy and reliable testing for anything that runs in a browser.

Pros of Cypress

- Rich, interactive test runner with time-travel debugging

- Automatic waiting and retry logic for asynchronous operations

- Extensive API for simulating user actions and assertions

Cons of Cypress

- Limited to testing web applications in a browser, with cross-browser support centered on Chromium-family browsers and Firefox

- Slower test execution compared to lightweight alternatives

- Steeper learning curve due to its comprehensive feature set

Code Comparison

Tape:

const test = require('tape');

test('My test', (t) => {

t.equal(1 + 1, 2, 'Addition works');

t.end();

});

Cypress:

describe('My test', () => {

it('checks addition', () => {

expect(1 + 1).to.equal(2);

});

});

Summary

Tape is a minimalist testing framework focused on simplicity and ease of use. It's lightweight, fast, and works well for both frontend and backend JavaScript testing. Cypress, on the other hand, is a comprehensive end-to-end testing framework specifically designed for web applications. It offers a more feature-rich environment with built-in tools for debugging and handling asynchronous operations.

While Tape excels in simplicity and flexibility, Cypress provides a more robust solution for complex web application testing scenarios. The choice between the two depends on the specific needs of your project, with Tape being ideal for quick, straightforward tests and Cypress offering a more powerful toolset for intricate web testing requirements.

README

tape

TAP-producing test harness for node and browsers

example

var test = require('tape');

test('timing test', function (t) {

t.plan(2);

t.equal(typeof Date.now, 'function');

var start = Date.now();

setTimeout(function () {

t.equal(Date.now() - start, 100);

}, 100);

});

test('test using promises', async function (t) {

const result = await someAsyncThing();

t.ok(result);

});

$ node example/timing.js

TAP version 13

# timing test

ok 1 should be strictly equal

not ok 2 should be strictly equal

---

operator: equal

expected: 100

actual: 107

...

1..2

# tests 2

# pass 1

# fail 1

usage

You always need to require('tape') in test files. You can run the tests by usual node means (require('test-file.js') or node test-file.js).

You can also run tests using the tape binary to utilize globbing, on Windows for example:

$ tape tests/**/*.js

tape's arguments are passed to the glob module.

If you want glob to perform the expansion on a system where the shell performs such expansion, quote the arguments as necessary:

$ tape 'tests/**/*.js'

$ tape "tests/**/*.js"

If you want tape to error when no files are found, pass --strict:

$ tape --strict 'tests/**/*.js'

Preloading modules

Additionally, it is possible to make tape load one or more modules before running any tests, by using the -r or --require flag. Here's an example that loads babel-register before running any tests, to allow for JIT compilation:

$ tape -r babel-register tests/**/*.js

Depending on the module you're loading, you may be able to parameterize it using environment variables or auxiliary files. Babel, for instance, will load options from .babelrc at runtime.

The -r flag behaves exactly like node's require, and uses the same module resolution algorithm. This means that if you need to load local modules, you have to prepend their path with ./ or ../ accordingly.

For example:

$ tape -r ./my/local/module tests/**/*.js

Please note that all modules loaded using the -r flag will run before any tests, regardless of when they are specified. For example, tape -r a b -r c will actually load a and c before loading b, since they are flagged as required modules.
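The ordering rule above can be pictured with a small sketch. The `splitArgs` function below is a hypothetical stand-in written for this illustration, not tape's actual command-line parser; it only shows why `-r a b -r c` ends up loading `a` and `c` before `b`.

```javascript
// Hypothetical argument splitter (an assumption for illustration only).
function splitArgs(argv) {
  const preload = [];
  const files = [];
  for (let i = 0; i < argv.length; i += 1) {
    if (argv[i] === '-r' || argv[i] === '--require') {
      i += 1; // the next token names the module to preload
      if (i < argv.length) preload.push(argv[i]);
    } else {
      files.push(argv[i]);
    }
  }
  // Preloaded modules always come first, wherever their flags appeared.
  return { preload, files, loadOrder: [...preload, ...files] };
}

const order = splitArgs(['-r', 'a', 'b', '-r', 'c']);
console.log(order.loadOrder);
```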

things that go well with tape

tape maintains a fairly minimal core. Additional features are usually added by using another module alongside tape.

pretty reporters

The default TAP output is good for machines and humans that are robots.

If you want a more colorful / pretty output there are lots of modules on npm that will output something pretty if you pipe TAP into them:

- tap-spec

- tap-dot

- faucet

- tap-bail

- tap-browser-color

- tap-json

- tap-min

- tap-nyan

- tap-pessimist

- tap-prettify

- colortape

- tap-xunit

- tap-difflet

- tape-dom

- tap-diff

- tap-notify

- tap-summary

- tap-markdown

- tap-html

- tap-react-browser

- tap-junit

- tap-nyc

- tap-spec (emoji patch)

- tape-repeater

- tabe

To use them, try node test/index.js | tap-spec or pipe it into one of the modules of your choice!
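All of these reporters consume TAP text. As a rough sketch of what such a consumer does (not how any module listed above is implemented), the hypothetical `summarizeTap` helper below counts the `ok` and `not ok` lines in a chunk of TAP output. The sample text mirrors the timing example earlier in this README.

```javascript
// Hypothetical helper: counts assertion lines in TAP text.
function summarizeTap(tapText) {
  const summary = { pass: 0, fail: 0, failures: [] };
  for (const line of tapText.split('\n')) {
    // Assertion lines look like "ok 1 description" or "not ok 2 description".
    const match = /^(not ok|ok)\s+(\d+)\s*(.*)$/.exec(line.trim());
    if (!match) continue; // comments, plan lines, YAML diagnostics
    if (match[1] === 'ok') {
      summary.pass += 1;
    } else {
      summary.fail += 1;
      summary.failures.push({ id: Number(match[2]), name: match[3] });
    }
  }
  return summary;
}

const sample = [
  'TAP version 13',
  '# timing test',
  'ok 1 should be strictly equal',
  'not ok 2 should be strictly equal',
  '  ---',
  '    operator: equal',
  '    expected: 100',
  '    actual:   107',
  '  ...',
  '',
  '1..2'
].join('\n');

const tapSummary = summarizeTap(sample);
console.log(tapSummary);
```

A real reporter would read the text from process.stdin instead of a hard-coded sample, so that it can sit at the end of a pipe.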

uncaught exceptions

By default, uncaught exceptions in your tests will not be intercepted, and will cause tape to crash. If you find this behavior undesirable, use tape-catch to report any exceptions as TAP errors.

other

- CoffeeScript support with https://www.npmjs.com/package/coffeetape

- ES6 support with https://www.npmjs.com/package/babel-tape-runner or https://www.npmjs.com/package/buble-tape-runner

- Different test syntax with https://github.com/pguth/flip-tape (warning: mutates String.prototype)

- Electron test runner with https://github.com/tundrax/electron-tap

- Concurrency support with https://github.com/imsnif/mixed-tape

- In-process reporting with https://github.com/DavidAnson/tape-player

- Describe blocks with https://github.com/mattriley/tape-describe

command-line flags

While running tests, top-level configurations can be passed via the command line to specify desired behavior.

Available configurations are listed below:

--require

Alias: -r

This is used to load modules before running tests and is explained extensively in the preloading modules section.

--ignore

Alias: -i

This flag is used when tests from certain folders and/or files are not intended to be run.

The argument is a path to a file that contains the patterns to be ignored.

It defaults to .gitignore when passed with no argument.

tape -i .ignore '**/*.js'

An error is thrown if the specified file passed as argument does not exist.

--ignore-pattern

Same functionality as --ignore, but passing the pattern directly instead of an ignore file.

If both --ignore and --ignore-pattern are given, the --ignore-pattern argument is appended to the content of the ignore file.

tape --ignore-pattern 'integration_tests/**/*.js' '**/*.js'

--no-only

This is particularly useful in a CI environment where an only test is not supposed to go unnoticed.

By passing the --no-only flag, any existing only test causes tests to fail.

tape --no-only **/*.js

Alternatively, the environment variable NODE_TAPE_NO_ONLY_TEST can be set to true to achieve the same behavior; the command-line flag takes precedence.

methods

The assertion methods in tape are heavily influenced or copied from the methods in node-tap.

var test = require('tape')

test([name], [opts], cb)

Create a new test with an optional name string and optional opts object.

cb(t) fires with the new test object t once all preceding tests have finished.

Tests execute serially.

Available opts options are:

- opts.skip = true/false. See test.skip.

- opts.timeout = 500. Set a timeout for the test, after which it will fail. See test.timeoutAfter.

- opts.objectPrintDepth = 5. Configure max depth of expected / actual object printing. Environmental variable NODE_TAPE_OBJECT_PRINT_DEPTH can set the desired default depth for all tests; locally-set values will take precedence.

- opts.todo = true/false. Test will be allowed to fail.

If you forget to t.plan() out how many assertions you are going to run and you don't call t.end() explicitly, or return a Promise that eventually settles, your test will hang.

If cb returns a Promise, it will be implicitly awaited. If that promise rejects, the test will be failed; if it fulfills, the test will end. Explicitly calling t.end() while also returning a Promise that fulfills is an error.

test.skip([name], [opts], cb)

Generate a new test that will be skipped over.

test.onFinish(fn)

The onFinish hook will get invoked when ALL tape tests have finished right before tape is about to print the test summary.

fn is called with no arguments, and its return value is ignored.

test.onFailure(fn)

The onFailure hook will get invoked whenever any tape test has failed.

fn is called with no arguments, and its return value is ignored.

t.plan(n)

Declare that n assertions should be run. t.end() will be called automatically after the nth assertion.

If there are any more assertions after the nth, or after t.end() is called, they will generate errors.

t.end(err)

Declare the end of a test explicitly. If err is passed in t.end will assert that it is falsy.

Do not call t.end() if your test callback returns a Promise.

t.teardown(cb)

Register a callback to run after the individual test has completed. Multiple registered teardown callbacks will run in order. Useful for undoing side effects, closing network connections, etc.

t.fail(msg)

Generate a failing assertion with a message msg.

t.pass(msg)

Generate a passing assertion with a message msg.

t.timeoutAfter(ms)

Automatically time out the test after ms milliseconds.

t.skip(msg)

Generate an assertion that will be skipped over.

t.ok(value, msg)

Assert that value is truthy with an optional description of the assertion msg.

Aliases: t.true(), t.assert()

t.notOk(value, msg)

Assert that value is falsy with an optional description of the assertion msg.

Aliases: t.false(), t.notok()

t.error(err, msg)

Assert that err is falsy. If err is non-falsy, use its err.message as the description message.

Aliases: t.ifError(), t.ifErr(), t.iferror()

t.equal(actual, expected, msg)

Assert that Object.is(actual, expected) with an optional description of the assertion msg.

Aliases: t.equals(), t.isEqual(), t.strictEqual(), t.strictEquals(), t.is()

t.notEqual(actual, expected, msg)

Assert that !Object.is(actual, expected) with an optional description of the assertion msg.

Aliases: t.notEquals(), t.isNotEqual(), t.doesNotEqual(), t.isInequal(), t.notStrictEqual(), t.notStrictEquals(), t.isNot(), t.not()

t.looseEqual(actual, expected, msg)

Assert that actual == expected with an optional description of the assertion msg.

Aliases: t.looseEquals()

t.notLooseEqual(actual, expected, msg)

Assert that actual != expected with an optional description of the assertion msg.

Aliases: t.notLooseEquals()
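Because t.equal() is documented above in terms of Object.is and t.looseEqual() in terms of ==, the two differ from a plain === check in a few edge cases. The snippet below uses only built-in JavaScript (no tape) to show where the three comparisons disagree; the labels are just for this illustration.

```javascript
// Each entry: [label, a, b]
const cases = [
  ['NaN vs NaN', NaN, NaN],
  ['+0 vs -0', 0, -0],
  ["1 vs '1'", 1, '1'],
  ['two distinct objects', {}, {}]
];

const results = cases.map(([label, a, b]) => ({
  label,
  objectIs: Object.is(a, b), // comparison documented for t.equal
  strict: a === b,           // plain strict equality, for reference
  loose: a == b              // comparison documented for t.looseEqual
}));

console.log(results);
```

Going by the descriptions above, this suggests that t.equal(NaN, NaN) passes while t.equal(0, -0) fails, which is the reverse of what === would give.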

t.deepEqual(actual, expected, msg)

Assert that actual and expected have the same structure and nested values using node's deepEqual() algorithm with strict comparisons (===) on leaf nodes and an optional description of the assertion msg.

Aliases: t.deepEquals(), t.isEquivalent(), t.same()

t.notDeepEqual(actual, expected, msg)

Assert that actual and expected do not have the same structure and nested values using node's deepEqual() algorithm with strict comparisons (===) on leaf nodes and an optional description of the assertion msg.

Aliases: t.notDeepEquals(), t.notEquivalent(), t.notDeeply(), t.notSame(), t.isNotDeepEqual(), t.isNotDeeply(), t.isNotEquivalent(), t.isInequivalent()

t.deepLooseEqual(actual, expected, msg)

Assert that actual and expected have the same structure and nested values using node's deepEqual() algorithm with loose comparisons (==) on leaf nodes and an optional description of the assertion msg.

t.notDeepLooseEqual(actual, expected, msg)

Assert that actual and expected do not have the same structure and nested values using node's deepEqual() algorithm with loose comparisons (==) on leaf nodes and an optional description of the assertion msg.

t.throws(fn, expected, msg)

Assert that the function call fn() throws an exception. expected, if present, must be a RegExp, Function, or Object. The RegExp matches the string representation of the exception, as generated by err.toString(). For example, if you set expected to /user/, the test will pass only if the string representation of the exception contains the word user. Any other exception will result in a failed test. The Function could be the constructor for the Error type thrown, or a predicate function to be called with that exception. Object in this case corresponds to a so-called validation object, in which each property is tested for strict deep equality. As an example, see the following two tests--each passes a validation object to t.throws() as the second parameter. The first test will pass, because all property values in the actual error object are deeply strictly equal to the property values in the validation object.

const err = new TypeError("Wrong value");

err.code = 404;

err.check = true;

// Passing test.

t.throws(

() => {

throw err;

},

{

code: 404,

check: true

},

"Test message."

);

This next test will fail, because not all property values in the actual error object are deeply strictly equal to the property values in the validation object.

const err = new TypeError("Wrong value");

err.code = 404;

err.check = "true";

// Failing test.

t.throws(

() => {

throw err;

},

{

code: 404,

check: true // This is not deeply strictly equal to err.check.

},

"Test message."

);

This is very similar to how Node's assert.throws() method tests validation objects (please see the Node assert.throws() documentation for more information).

If expected is not of type RegExp, Function, or Object, or omitted entirely, any exception will result in a passed test. msg is an optional description of the assertion.

Please note that the second parameter, expected, cannot be of type string. If a value of type string is provided for expected, then t.throws(fn, expected, msg) will execute, but the value of expected will be set to undefined, and the specified string will be set as the value for the msg parameter (regardless of what was actually passed as the third parameter). This can cause unexpected results, so please be mindful.

t.doesNotThrow(fn, expected, msg)

Assert that the function call fn() does not throw an exception. expected, if present, limits what should not be thrown, and must be a RegExp or Function. The RegExp matches the string representation of the exception, as generated by err.toString(). For example, if you set expected to /user/, the test will fail only if the string representation of the exception contains the word user. Any other exception will result in a passed test. The Function is the exception thrown (e.g. Error). If expected is not of type RegExp or Function, or omitted entirely, any exception will result in a failed test. msg is an optional description of the assertion.

Please note that the second parameter, expected, cannot be of type string. If a value of type string is provided for expected, then t.doesNotThrow(fn, expected, msg) will execute, but the value of expected will be set to undefined, and the specified string will be set as the value for the msg parameter (regardless of what was actually passed as the third parameter). This can cause unexpected results, so please be mindful.

t.test(name, [opts], cb)

Create a subtest with a new test handle st from cb(st) inside the current test t. cb(st) will only fire when t finishes. Additional tests queued up after t will not be run until all subtests finish.

You may pass the same options that test() accepts.

t.comment(message)

Print a message without breaking the tap output.

(Useful when using e.g. tap-colorize where output is buffered & console.log will print in incorrect order vis-a-vis tap output.)

Multiline output will be split by \n characters, and each one printed as a comment.

t.match(string, regexp, message)

Assert that string matches the RegExp regexp. Will fail when the first two arguments are the wrong type.

t.doesNotMatch(string, regexp, message)

Assert that string does not match the RegExp regexp. Will fail when the first two arguments are the wrong type.

t.capture(obj, method, implementation = () => {})

Replaces obj[method] with the supplied implementation.

obj must be a non-primitive, method must be a valid property key (string or symbol), and implementation, if provided, must be a function.

Calling the returned results() function will return an array of call result objects.

The array of calls will be reset whenever the function is called.

Call result objects will match one of these forms:

- { args: [x, y, z], receiver: o, returned: a }

- { args: [x, y, z], receiver: o, threw: true }

The replacement will automatically be restored on test teardown.

You can restore it manually, if desired, by calling .restore() on the returned results function.

Modeled after tap.
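To make the capture mechanics concrete, here is a minimal stand-alone sketch of the idea: replace a method, record each call, hand back a results() function that resets its log, and allow manual restoration. `captureSketch` is a hypothetical function written for this illustration; it is not tape's implementation and it skips the automatic restore on teardown.

```javascript
// Hypothetical stand-in for the idea behind t.capture(); not tape's code.
function captureSketch(obj, method, implementation = () => {}) {
  const original = obj[method];
  let calls = [];

  obj[method] = function (...args) {
    try {
      const returned = implementation.apply(this, args);
      calls.push({ args, receiver: this, returned });
      return returned;
    } catch (err) {
      calls.push({ args, receiver: this, threw: true });
      throw err;
    }
  };

  // Returns the recorded calls and resets the log.
  function results() {
    const recorded = calls;
    calls = [];
    return recorded;
  }
  results.restore = () => {
    obj[method] = original;
  };
  return results;
}

const logger = { log: (msg) => `real: ${msg}` };
const results = captureSketch(logger, 'log', (msg) => `fake: ${msg}`);

logger.log('hello');
const firstBatch = results();  // one call recorded
const secondBatch = results(); // empty, because the log was reset
results.restore();
console.log(firstBatch, secondBatch, logger.log('again'));
```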

t.captureFn(original)

Wraps the supplied function.

The returned wrapper has a .calls property, which is an array that will be populated with call result objects, described under t.capture().

Modeled after tap.

t.intercept(obj, property, desc = {}, strictMode = true)

Similar to t.capture(), but can be used to track get/set operations for any arbitrary property. Calling the returned results() function will return an array of call result objects.

The array of calls will be reset whenever the function is called.

Call result objects will match one of these forms:

- { type: 'get', value: '1.2.3', success: true, args: [x, y, z], receiver: o }

- { type: 'set', value: '2.4.6', success: false, args: [x, y, z], receiver: o }

If strictMode is true, and writable is false, and no get or set is provided, an exception will be thrown when obj[property] is assigned to.

If strictMode is false in this scenario, nothing will be set, but the attempt will still be logged.

Providing desc.get and desc.set is optional, but both can still be useful for logging get/set attempts.

desc must be a valid property descriptor, meaning that get/set are mutually exclusive with writable/value.

Additionally, explicitly setting configurable to false is not permitted, so that the property can be restored.
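The get/set tracking described here can be approximated with Object.defineProperty. The sketch below is a simplified, hypothetical `interceptSketch` with its own reduced signature (an initial value instead of a descriptor, and no strictMode handling); it is meant only to show why the property has to stay configurable in order to be restored.

```javascript
// Hypothetical sketch of the idea behind t.intercept(); not tape's code.
function interceptSketch(obj, property, initialValue) {
  const originalDesc = Object.getOwnPropertyDescriptor(obj, property);
  let value = initialValue;
  let calls = [];

  Object.defineProperty(obj, property, {
    configurable: true, // must stay configurable so it can be restored
    enumerable: true,
    get() {
      calls.push({ type: 'get', value, success: true, args: [], receiver: this });
      return value;
    },
    set(next) {
      value = next;
      calls.push({ type: 'set', value: next, success: true, args: [next], receiver: this });
    }
  });

  // Returns the recorded operations and resets the log.
  function results() {
    const recorded = calls;
    calls = [];
    return recorded;
  }
  results.restore = () => {
    if (originalDesc) Object.defineProperty(obj, property, originalDesc);
    else delete obj[property];
  };
  return results;
}

const config = { version: '1.2.3' };
const results = interceptSketch(config, 'version', '1.2.3');

const seen = config.version; // logged as a 'get'
config.version = '2.4.6';    // logged as a 'set'
const log = results();
results.restore();           // the original data property comes back
console.log(seen, log.map((entry) => entry.type), config.version);
```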

t.assertion(fn, ...args)

If you want to write your own custom assertions, you can invoke these conveniently using this method.

function isAnswer(value, msg) {

// eslint-disable-next-line no-invalid-this

this.equal(value, 42, msg || 'value must be the answer to life, the universe, and everything');

};

test('is this the answer?', (t) => {

t.assertion(isAnswer, 42); // passes, default message

t.assertion(isAnswer, 42, 'what is 6 * 9?'); // passes, custom message

t.assertion(isAnswer, 54, 'what is 6 * 9!'); // fails, custom message

t.end();

});

var htest = test.createHarness()

Create a new test harness instance, which is a function like test(), but with a new pending stack and test state.

By default the TAP output goes to console.log(). You can pipe the output to someplace else if you htest.createStream().pipe() to a destination stream on the first tick.

test.only([name], [opts], cb)

Like test([name], [opts], cb), except that if you use .only, this is the only test case that will run for the entire process. All other test cases using tape will be ignored.

Check out how the usage of the --no-only flag could help ensure there is no .only test running in a specified environment.

var stream = test.createStream(opts)

Create a stream of output, bypassing the default output stream that writes messages to console.log(). By default stream will be a text stream of TAP output, but you can get an object stream instead by setting opts.objectMode to true.

tap stream reporter

You can create your own custom test reporter using this createStream() api:

var test = require('tape');

var path = require('path');

test.createStream().pipe(process.stdout);

process.argv.slice(2).forEach(function (file) {

require(path.resolve(file));

});

You could substitute process.stdout for whatever other output stream you want, like a network connection or a file.

Pass in test files to run as arguments:

$ node tap.js test/x.js test/y.js

TAP version 13

# (anonymous)

not ok 1 should be strictly equal

---

operator: equal

expected: "boop"

actual: "beep"

...

# (anonymous)

ok 2 should be strictly equal

ok 3 (unnamed assert)

# wheee

ok 4 (unnamed assert)

1..4

# tests 4

# pass 3

# fail 1

object stream reporter

Here's how you can render an object stream instead of TAP:

var test = require('tape');

var path = require('path');

test.createStream({ objectMode: true }).on('data', function (row) {

console.log(JSON.stringify(row))

});

process.argv.slice(2).forEach(function (file) {

require(path.resolve(file));

});

The output for this runner is:

$ node object.js test/x.js test/y.js

{"type":"test","name":"(anonymous)","id":0}

{"id":0,"ok":false,"name":"should be strictly equal","operator":"equal","actual":"beep","expected":"boop","error":{},"test":0,"type":"assert"}

{"type":"end","test":0}

{"type":"test","name":"(anonymous)","id":1}

{"id":0,"ok":true,"name":"should be strictly equal","operator":"equal","actual":2,"expected":2,"test":1,"type":"assert"}

{"id":1,"ok":true,"name":"(unnamed assert)","operator":"ok","actual":true,"expected":true,"test":1,"type":"assert"}

{"type":"end","test":1}

{"type":"test","name":"wheee","id":2}

{"id":0,"ok":true,"name":"(unnamed assert)","operator":"ok","actual":true,"expected":true,"test":2,"type":"assert"}

{"type":"end","test":2}
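Once the rows are plain objects, building a custom summary is a matter of grouping them. The following sketch feeds a few rows copied from the output above (trimmed to the fields it uses) into a hypothetical `groupByTest` reducer. In a real reporter the rows would arrive through the 'data' event rather than from an array.

```javascript
// Rows copied from the sample output above, trimmed to the fields used here.
const rows = [
  { type: 'test', name: '(anonymous)', id: 0 },
  { id: 0, ok: false, name: 'should be strictly equal', operator: 'equal', test: 0, type: 'assert' },
  { type: 'end', test: 0 },
  { type: 'test', name: 'wheee', id: 2 },
  { id: 0, ok: true, name: '(unnamed assert)', operator: 'ok', test: 2, type: 'assert' },
  { type: 'end', test: 2 }
];

// Hypothetical reducer: tallies assert rows under the test they belong to.
function groupByTest(allRows) {
  const tests = new Map();
  for (const row of allRows) {
    if (row.type === 'test') {
      tests.set(row.id, { name: row.name, pass: 0, fail: 0 });
    } else if (row.type === 'assert') {
      const entry = tests.get(row.test);
      if (entry) entry[row.ok ? 'pass' : 'fail'] += 1;
    }
  }
  return [...tests.values()];
}

const grouped = groupByTest(rows);
console.log(grouped);
```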

A convenient alternative to achieve the same:

// report.js

var test = require('tape');

test.createStream({ objectMode: true }).on('data', function (row) {

console.log(JSON.stringify(row)) // for example

});

and then:

$ tape -r ./report.js **/*.test.js

install

With npm do:

npm install tape --save-dev

troubleshooting

Sometimes t.end() doesn't preserve the expected output ordering.

For instance the following:

var test = require('tape');

test('first', function (t) {

setTimeout(function () {

t.ok(1, 'first test');

t.end();

}, 200);

t.test('second', function (t) {

t.ok(1, 'second test');

t.end();

});

});

test('third', function (t) {

setTimeout(function () {

t.ok(1, 'third test');

t.end();

}, 100);

});

will output:

ok 1 second test

ok 2 third test

ok 3 first test

because second and third assume first has ended before it actually does.

Use t.plan() instead to let other tests know they should wait:

var test = require('tape');

test('first', function (t) {

+ t.plan(2);

setTimeout(function () {

t.ok(1, 'first test');

- t.end();

}, 200);

t.test('second', function (t) {

t.ok(1, 'second test');

t.end();

});

});

test('third', function (t) {

setTimeout(function () {

t.ok(1, 'third test');

t.end();

}, 100);

});

license

MIT
