VToonify


[SIGGRAPH Asia 2022] VToonify: Controllable High-Resolution Portrait Video Style Transfer

Top Related Projects

PhotoMaker [CVPR 2024]

GFPGAN aims at developing Practical Algorithms for Real-world Face Restoration.

[NeurIPS 2022] Towards Robust Blind Face Restoration with Codebook Lookup Transformer

Real-ESRGAN aims at developing Practical Algorithms for General Image/Video Restoration.

A latent text-to-image diffusion model

Official PyTorch repo for JoJoGAN: One Shot Face Stylization

Quick Overview

VToonify is an AI-powered tool for stylizing portrait images and videos. It combines an encoder that extracts multi-scale content features with the mid- and high-resolution layers of a StyleGAN-based toonification model (Toonify or DualStyleGAN) to transform real-world portraits into artistic styles such as cartoon, caricature, Arcane, comic, Pixar and illustration. The project aims to provide high-quality, controllable stylization while preserving the subject's identity and facial features.

Pros

- Offers diverse stylization options, including cartoon, caricature, Arcane, comic, Pixar and illustration styles

- Maintains facial identity and features during stylization

- Supports both image and video stylization

- Provides pre-trained models for easy use

Cons

- Requires significant computational resources for training and inference

- Limited to portrait stylization, not suitable for general image stylization

- May produce inconsistent results across different frames in video stylization

- Requires manual parameter tuning for optimal results

Code Examples

The repository is used through its style_transfer.py script; the examples below use the command-line options documented in the README.

- Stylizing a single image:

python style_transfer.py --content ./data/077436.jpg --scale_image

- Stylizing a video:

python style_transfer.py --content ./data/YOUR_VIDEO.mp4 --scale_image --video

- Adjusting stylization strength with --style_degree (0 to 1):

python style_transfer.py --content ./data/038648.jpg --scale_image \
  --ckpt ./checkpoint/vtoonify_d_arcane/vtoonify_s_d.pt \
  --style_id 77 --style_degree 0.7

Getting Started

To get started with VToonify, follow these steps:

- Clone the repository and create the Conda environment:

git clone https://github.com/williamyang1991/VToonify.git
cd VToonify
conda env create -f ./environment/vtoonify_env.yaml

- Download the pre-trained models (Google Drive, Baidu Cloud or Hugging Face, as listed in the README) and place them under ./checkpoint/.

- Run style transfer from the repository root. By default, a VToonify-D model trained on the cartoon style is loaded, and the result is written to ./output/:

python style_transfer.py --content ./data/077436.jpg --scale_image

For more advanced usage and customization options, refer to the project's documentation on GitHub.

Competitor Comparisons

PhotoMaker [CVPR 2024]

Pros of PhotoMaker

- Offers more versatile style transfer capabilities, including photorealistic and artistic styles

- Provides a user-friendly interface for easy customization and control

- Supports batch processing for efficient handling of multiple images

Cons of PhotoMaker

- Requires more computational resources due to its advanced features

- May have a steeper learning curve for beginners compared to VToonify

- Less specialized for cartoon-like portrait transformations, which are VToonify's focus

Code Comparison

PhotoMaker:

from photomaker import PhotoMaker

pm = PhotoMaker()

result = pm.stylize(image_path, style='anime')

result.save('output.png')

VToonify:

python style_transfer.py --content ./data/077436.jpg --scale_image  # result is written to ./output/

Both repositories offer image transformation capabilities, but PhotoMaker provides a broader range of style options and features, while VToonify specializes in cartoon-style transformations. PhotoMaker may be more suitable for users seeking diverse artistic effects, while VToonify is ideal for those specifically interested in toonification.

GFPGAN aims at developing Practical Algorithms for Real-world Face Restoration.

Pros of GFPGAN

- Focuses on face restoration and enhancement, particularly effective for old or low-quality photos

- Utilizes a pre-trained model, making it easier to use out-of-the-box

- Supports both CPU and GPU inference

Cons of GFPGAN

- Limited to face restoration, not designed for full-body or scene stylization

- May produce less diverse stylistic outputs compared to VToonify

- Requires more computational resources for high-resolution images

Code Comparison

GFPGAN usage:

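import cv2
from gfpgan import GFPGANer

img = cv2.imread('input.jpg', cv2.IMREAD_COLOR)  # BGR uint8 image
restorer = GFPGANer(model_path='experiments/pretrained_models/GFPGANv1.3.pth', upscale=2)
# enhance() returns (cropped_faces, restored_faces, restored_img)
_, _, restored_img = restorer.enhance(img, has_aligned=False, only_center_face=False, paste_back=True)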

VToonify usage:

python style_transfer.py --content ./data/077436.jpg --scale_image --style_degree 0.5

Both repositories offer image manipulation capabilities, but GFPGAN specializes in face restoration while VToonify focuses on stylization. GFPGAN is more user-friendly for immediate use, while VToonify offers greater flexibility in style application. The code snippets demonstrate that GFPGAN is simpler to set up and use, while VToonify requires more configuration but allows for style degree adjustment.

[NeurIPS 2022] Towards Robust Blind Face Restoration with Codebook Lookup Transformer

Pros of CodeFormer

- Focuses on face restoration and enhancement, offering more specialized functionality for facial images

- Provides a web-based demo for easy testing and visualization of results

- Includes pre-trained models for immediate use, reducing setup time

Cons of CodeFormer

- Limited to face-specific tasks, less versatile for general image stylization

- May require more computational resources due to its complex architecture

- Less customizable for different artistic styles compared to VToonify

Code Comparison

CodeFormer:

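import cv2
from basicsr.utils import imwrite, img2tensor, tensor2img
from facelib.utils.face_restoration_helper import FaceRestoreHelper
from torchvision.transforms.functional import normalize

img = cv2.imread('input.jpg', cv2.IMREAD_COLOR)  # BGR uint8 image
# Scale to [0, 1], convert BGR to RGB and HWC to a CHW float tensor
img = img2tensor(img / 255., bgr2rgb=True, float32=True)
# Map [0, 1] to [-1, 1] in place
normalize(img, (0.5, 0.5, 0.5), (0.5, 0.5, 0.5), inplace=True)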

VToonify:

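from PIL import Image
import torch
import torchvision.transforms as transforms

# VToonify is fully convolutional and accepts variable-size frames,
# so no fixed-size resize is applied here
transform = transforms.Compose([
    transforms.ToTensor(),
    transforms.Normalize(mean=[0.5, 0.5, 0.5], std=[0.5, 0.5, 0.5]),
])
x = transform(Image.open('input.jpg').convert('RGB')).unsqueeze(0)  # 1x3xHxW in [-1, 1]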

Both repositories use similar image preprocessing techniques, but CodeFormer's approach is more tailored for face restoration tasks, while VToonify's code is more general-purpose for image stylization.

Real-ESRGAN aims at developing Practical Algorithms for General Image/Video Restoration.

Pros of Real-ESRGAN

- Focuses on general image super-resolution and enhancement

- Provides pre-trained models for various use cases

- Supports both CPU and GPU inference

Cons of Real-ESRGAN

- Limited to image enhancement and upscaling tasks

- May not preserve artistic style as effectively as VToonify

- Requires more computational resources for high-resolution images

Code Comparison

VToonify:

python style_transfer.py --content ./data/077436.jpg --scale_image --style_id 0

Real-ESRGAN:

import cv2
from basicsr.archs.rrdbnet_arch import RRDBNet
from realesrgan import RealESRGANer

input_img = cv2.imread('input.jpg', cv2.IMREAD_UNCHANGED)
model = RRDBNet(num_in_ch=3, num_out_ch=3, num_feat=64, num_block=23, num_grow_ch=32, scale=4)
upsampler = RealESRGANer(scale=4, model_path='path/to/model.pth', model=model)
output, _ = upsampler.enhance(input_img)  # returns (output image, image mode)

Summary

VToonify specializes in stylizing images with various artistic styles, while Real-ESRGAN focuses on general image enhancement and super-resolution. VToonify offers more control over the artistic output, whereas Real-ESRGAN provides broader image quality improvements. The choice between the two depends on the specific use case and desired outcome.

A latent text-to-image diffusion model

Pros of stable-diffusion

- More versatile, capable of generating a wide range of images from text prompts

- Larger community and more extensive documentation

- Supports various fine-tuning and customization options

Cons of stable-diffusion

- Requires more computational resources and longer processing times

- Less specialized for toonification or stylization tasks

- More complex to set up and use for specific image transformation tasks

Code Comparison

VToonify:

python style_transfer.py --content ./data/077436.jpg --scale_image  # default: cartoon VToonify-D model

stable-diffusion:

from diffusers import StableDiffusionPipeline

pipe = StableDiffusionPipeline.from_pretrained("runwayml/stable-diffusion-v1-5")

image = pipe("A cartoon style portrait").images[0]

Summary

VToonify is specialized for toonification and stylization tasks, offering simpler implementation for these specific use cases. stable-diffusion, on the other hand, provides a more comprehensive image generation toolkit with broader applications but requires more setup and resources. Choose VToonify for quick and easy toonification, or stable-diffusion for more diverse image generation capabilities.

Official PyTorch repo for JoJoGAN: One Shot Face Stylization

Pros of JoJoGAN

- Focuses on one-shot face stylization, learning a style from a single reference image

- Provides a user-friendly Colab notebook for easy experimentation

- Includes pre-trained models for various styles

Cons of JoJoGAN

- Limited to aligned face images, whereas VToonify handles unaligned faces and non-facial regions in portrait videos

- Fewer style options compared to VToonify's diverse toonification choices

- Less flexibility in adjusting the degree of stylization

Code Comparison

JoJoGAN:

def style_mix_forward(self, x, styles, levels=[]):
    styles = [styles[i] for i in levels]
    latent = styles[0].unsqueeze(1).repeat(1, self.n_latent, 1)
    return self.generator([latent], input_is_latent=True, randomize_noise=False)[0]

VToonify:

def forward(self, x, y=None, alpha=1):
    if self.is_style:
        return self.net(x, y, alpha)
    else:
        return self.net(x)

The code snippets show that JoJoGAN uses a style mixing approach, while VToonify offers a more flexible forward pass with optional style input and blending factor.

README

VToonify - Official PyTorch Implementation

This repository provides the official PyTorch implementation for the following paper:

VToonify: Controllable High-Resolution Portrait Video Style Transfer

Shuai Yang, Liming Jiang, Ziwei Liu and Chen Change Loy

In ACM TOG (Proceedings of SIGGRAPH Asia), 2022.

Project Page | Paper | Supplementary Video | Input Data and Video Results

Abstract: Generating high-quality artistic portrait videos is an important and desirable task in computer graphics and vision. Although a series of successful portrait image toonification models built upon the powerful StyleGAN have been proposed, these image-oriented methods have obvious limitations when applied to videos, such as the fixed frame size, the requirement of face alignment, missing non-facial details and temporal inconsistency. In this work, we investigate the challenging controllable high-resolution portrait video style transfer by introducing a novel VToonify framework. Specifically, VToonify leverages the mid- and high-resolution layers of StyleGAN to render high-quality artistic portraits based on the multi-scale content features extracted by an encoder to better preserve the frame details. The resulting fully convolutional architecture accepts non-aligned faces in videos of variable size as input, contributing to complete face regions with natural motions in the output. Our framework is compatible with existing StyleGAN-based image toonification models to extend them to video toonification, and inherits appealing features of these models for flexible style control on color and intensity. This work presents two instantiations of VToonify built upon Toonify and DualStyleGAN for collection-based and exemplar-based portrait video style transfer, respectively. Extensive experimental results demonstrate the effectiveness of our proposed VToonify framework over existing methods in generating high-quality and temporally-coherent artistic portrait videos with flexible style controls.

Features:

High-Resolution Video (>1024, support unaligned faces) | Data-Friendly (no real training data) | Style Control

Updates

- [02/2023] Integrated into Deque Notebook.

- [10/2022] Integrated a Gradio interface into the Colab notebook. Enjoy the web demo!

- [10/2022] Integrated into 🤗 Hugging Face. Enjoy the web demo!

- [09/2022] Input videos and video results are released.

- [09/2022] Paper is released.

- [09/2022] Code is released.

- [09/2022] This website is created.

Web Demo

Integrated into Hugging Face Spaces 🤗 using Gradio. Try out the Web Demo.

Installation

Clone this repo:

git clone https://github.com/williamyang1991/VToonify.git

cd VToonify

Dependencies:

We have tested on:

- CUDA 10.1

- PyTorch 1.7.0

- Pillow 8.3.1; Matplotlib 3.3.4; opencv-python 4.5.3; Faiss 1.7.1; tqdm 4.61.2; Ninja 1.10.2

All dependencies for defining the environment are provided in environment/vtoonify_env.yaml.
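The versions listed above are the ones the authors tested, not strict requirements. A minimal sketch for spotting differences in an existing environment, using only the standard library's importlib.metadata. The pip distribution names (for example opencv-python and ninja) are assumptions; Faiss and CUDA are left out because how they are installed varies.

```python
from importlib import metadata
from typing import Dict, Optional, Tuple

# Tested versions from the README. Distribution names are assumptions;
# Faiss (faiss-cpu / faiss-gpu / conda) and CUDA are omitted on purpose.
TESTED_VERSIONS: Dict[str, str] = {
    "torch": "1.7.0",
    "Pillow": "8.3.1",
    "matplotlib": "3.3.4",
    "opencv-python": "4.5.3",
    "tqdm": "4.61.2",
    "ninja": "1.10.2",
}


def version_matches(installed: str, tested: str) -> bool:
    """True if `installed` starts with the same release components as `tested`.

    A local tag such as "+cu101" is ignored, so "1.7.0+cu101" matches "1.7.0"
    and "4.5.3.56" matches "4.5.3", while "4.5.30" does not.
    """
    wanted = tested.split(".")
    have = installed.split("+", 1)[0].split(".")
    return have[:len(wanted)] == wanted


def compare_with_tested(tested: Dict[str, str] = TESTED_VERSIONS
                        ) -> Dict[str, Tuple[str, Optional[str]]]:
    """Map each differing package to (tested_version, installed_version or None)."""
    report = {}
    for name, tested_version in tested.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            installed = None
        if installed is None or not version_matches(installed, tested_version):
            report[name] = (tested_version, installed)
    return report


if __name__ == "__main__":
    for name, (tested, installed) in compare_with_tested().items():
        print(f"{name}: tested {tested}, found {installed or 'not installed'}")
```

A mismatch is not necessarily a problem; the report only shows where an environment departs from the tested setup.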

We recommend running this repository with Anaconda (you may need to modify vtoonify_env.yaml to install a PyTorch build that matches your CUDA version, following https://pytorch.org/):

conda env create -f ./environment/vtoonify_env.yaml

Installing on Windows: see https://github.com/williamyang1991/VToonify/issues/50#issuecomment-1443061101 and https://github.com/williamyang1991/VToonify/issues/38#issuecomment-1442146800

If you have problems with the C++ extensions (fused and upfirdn2d), or no GPU is available, you may refer to the CPU-compatible version.

(1) Inference for Image/Video Toonification

Inference Notebook

To help users get started, we provide a Jupyter notebook found in ./notebooks/inference_playground.ipynb that allows one to visualize the performance of VToonify.

The notebook will download the necessary pretrained models and run inference on the images found in ./data/.

Pre-trained Models

Pre-trained models can be downloaded from Google Drive, Baidu Cloud (access code: sigg) or Hugging Face:

| Backbone | Model | Description |
|---|---|---|
| DualStyleGAN | cartoon | pre-trained VToonify-D models and 317 cartoon style codes |
| | caricature | pre-trained VToonify-D models and 199 caricature style codes |
| | arcane | pre-trained VToonify-D models and 100 arcane style codes |
| | comic | pre-trained VToonify-D models and 101 comic style codes |
| | pixar | pre-trained VToonify-D models and 122 pixar style codes |
| | illustration | pre-trained VToonify-D models and 156 illustration style codes |
| Toonify | cartoon | pre-trained VToonify-T model |
| | caricature | pre-trained VToonify-T model |
| | arcane | pre-trained VToonify-T model |
| | comic | pre-trained VToonify-T model |
| | pixar | pre-trained VToonify-T model |
| Supporting model | | |
| | encoder.pt | Pixel2style2pixel encoder to map real faces into Z+ space of StyleGAN |
| | faceparsing.pth | BiSeNet for face parsing from face-parsing.PyTorch |

We suggest arranging the downloaded models in this folder structure.

The VToonify-D models are named with suffixes that indicate their settings:

- _sXXX: supports only one fixed style, where XXX is the index of that style. _s without XXX means the model supports exemplar-based style transfer.

- _dXXX: supports only a fixed style degree of XXX. _d without XXX means the model supports style degrees ranging from 0 to 1.

- _c: supports color transfer.
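The suffix convention can be decoded mechanically. A small sketch, assuming suffix tokens are separated by underscores as in vtoonify_s_d.pt (the only VToonify-D file name given in this README); names such as vtoonify_s026_d0.5.pt are hypothetical examples of the fixed-style, fixed-degree form, not files the README lists.

```python
import os
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class VToonifyDSettings:
    style_id: Optional[int]        # None: exemplar-based (any style code)
    style_degree: Optional[float]  # None: adjustable degree in [0, 1]
    color_transfer: bool           # True if the _c suffix is present


def parse_vtoonify_d_name(path: str) -> VToonifyDSettings:
    """Decode the _sXXX / _dXXX / _c suffixes of a VToonify-D checkpoint file name."""
    stem = os.path.splitext(os.path.basename(path))[0]
    style_id, style_degree, color_transfer = None, None, False
    for token in stem.split("_"):
        s = re.fullmatch(r"s(\d+)?", token)
        d = re.fullmatch(r"d(\d+(?:\.\d+)?)?", token)
        if s:
            style_id = int(s.group(1)) if s.group(1) else None
        elif d:
            style_degree = float(d.group(1)) if d.group(1) else None
        elif token == "c":
            color_transfer = True
    return VToonifyDSettings(style_id, style_degree, color_transfer)


if __name__ == "__main__":
    print(parse_vtoonify_d_name("./checkpoint/vtoonify_d_arcane/vtoonify_s_d.pt"))
    print(parse_vtoonify_d_name("vtoonify_s026_d0.5.pt"))  # hypothetical file name
```

Only the file's base name is parsed, so directory names such as vtoonify_d_arcane do not affect the result.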

Style Transfer with VToonify-D

A quick start is available HERE.

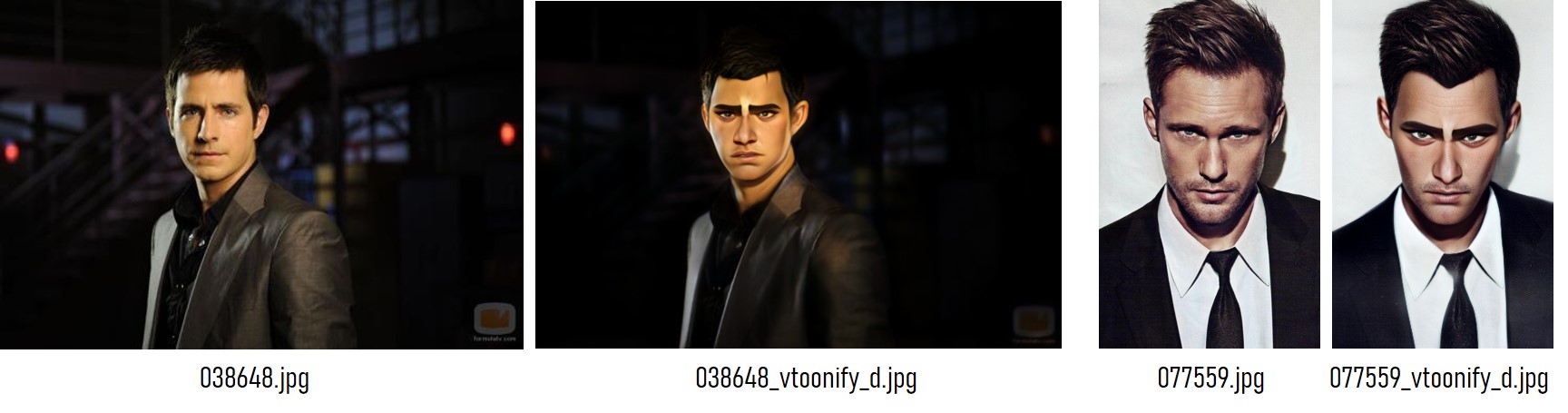

Transfer a default cartoon style onto a default face image ./data/077436.jpg:

python style_transfer.py --scale_image

The results are saved in the folder ./output/, where 077436_input.jpg is the rescaled input image to fit VToonify (this image can serve as the input without --scale_image) and 077436_vtoonify_d.jpg is the result.

Specify the content image and the model, control the style with the following options:

- --content: path to the target face image or video.
- --style_id: the index of the style image (find the mapping between index and the style image here).
- --style_degree (default: 0.5): adjust the degree of style.
- --color_transfer (default: False): perform color transfer if loading a VToonify-Dsdc model.
- --ckpt: path of the VToonify-D model. By default, a VToonify-Dsd trained on cartoon style is loaded.
- --exstyle_path: path of the extrinsic style code. By default, codes in the same directory as --ckpt are loaded.
- --scale_image: rescale the input image/video to fit VToonify (highly recommended).
- --padding (default: 200, 200, 200, 200): left, right, top, bottom paddings to the eye center.
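When style_transfer.py is driven from another script, the options above can be assembled into an argument list. A minimal sketch: the function name is hypothetical, and it assumes that --scale_image, --color_transfer and --video are on/off switches (as their usage in the README suggests) and that --style_degree lies in [0, 1], the range a _d model supports.

```python
import shlex
from typing import List, Optional, Sequence


def build_style_transfer_cmd(content: str,
                             ckpt: Optional[str] = None,
                             style_id: Optional[int] = None,
                             style_degree: float = 0.5,
                             color_transfer: bool = False,
                             scale_image: bool = True,
                             padding: Sequence[int] = (200, 200, 200, 200),
                             video: bool = False) -> List[str]:
    """Assemble an argument list for style_transfer.py (hypothetical helper)."""
    if not 0.0 <= style_degree <= 1.0:
        raise ValueError("style_degree is expected to lie in [0, 1]")
    if len(padding) != 4:
        raise ValueError("padding takes four values: left, right, top, bottom")

    cmd = ["python", "style_transfer.py", "--content", content]
    if scale_image:
        cmd.append("--scale_image")  # highly recommended by the README
    if ckpt is not None:
        cmd += ["--ckpt", ckpt]  # omitted: default cartoon VToonify-Dsd
    if style_id is not None:
        cmd += ["--style_id", str(style_id)]
    cmd += ["--style_degree", str(style_degree)]
    if color_transfer:
        cmd.append("--color_transfer")  # only meaningful for a VToonify-Dsdc model
    cmd += ["--padding", *(str(p) for p in padding)]
    if video:
        cmd.append("--video")
    return cmd


if __name__ == "__main__":
    cmd = build_style_transfer_cmd("./data/038648.jpg",
                                   ckpt="./checkpoint/vtoonify_d_arcane/vtoonify_s_d.pt",
                                   style_id=77, padding=(600, 600, 600, 600))
    print(shlex.join(cmd))
```

Passing the list to subprocess.run (with check=True) from the repository root avoids shell-quoting problems with paths that contain spaces.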

Here is an example of arcane style transfer:

python style_transfer.py --content ./data/038648.jpg \
  --scale_image --style_id 77 --style_degree 0.5 \
  --ckpt ./checkpoint/vtoonify_d_arcane/vtoonify_s_d.pt \
  --padding 600 600 600 600 # use large padding to avoid cropping the image
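--padding is described as left, right, top and bottom distances from the eye center. Assuming the script keeps the region obtained by expanding the eye center by those distances and clipping it to the frame (the actual implementation may additionally round or rescale the box), the arithmetic below shows why 600 keeps most of a frame while the default 200 crops tightly around the face. The function name and coordinates are hypothetical.

```python
from typing import Tuple


def crop_box(eye_center: Tuple[int, int],
             padding: Tuple[int, int, int, int],
             image_size: Tuple[int, int]) -> Tuple[int, int, int, int]:
    """Return (x0, y0, x1, y1): the eye center expanded by the paddings, clipped to the image.

    padding follows the README order: left, right, top, bottom.
    image_size is (width, height).
    """
    cx, cy = eye_center
    left, right, top, bottom = padding
    width, height = image_size
    x0, y0 = max(0, cx - left), max(0, cy - top)
    x1, y1 = min(width, cx + right), min(height, cy + bottom)
    return x0, y0, x1, y1


if __name__ == "__main__":
    size = (1000, 1000)  # hypothetical 1000x1000 frame
    eyes = (500, 400)    # hypothetical eye center
    print(crop_box(eyes, (200, 200, 200, 200), size))  # (300, 200, 700, 600)
    print(crop_box(eyes, (600, 600, 600, 600), size))  # (0, 0, 1000, 1000)
```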

Specify --video to perform video toonification:

python style_transfer.py --scale_image --content ./data/YOUR_VIDEO.mp4 --video

The above style control options (--style_id, --style_degree, --color_transfer) also work for videos.

Style Transfer with VToonify-T

Specify --backbone toonify to load and use a VToonify-T model.

python style_transfer.py --content ./data/038648.jpg \
  --scale_image --backbone toonify \
  --ckpt ./checkpoint/vtoonify_t_arcane/vtoonify.pt \
  --padding 600 600 600 600 # use large padding to avoid cropping the image

In VToonify-T, --style_id, --style_degree, --color_transfer, --exstyle_path are not used.
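Because these options are accepted on the command line but have no effect with VToonify-T, a wrapper script can warn about them before launching a run. A minimal sketch with a hypothetical function name; it assumes VToonify-D is used when --backbone is not given, as the README's instructions imply.

```python
from typing import List, Sequence

# Options that, per the README, VToonify-T does not use.
D_ONLY_OPTIONS = {"--style_id", "--style_degree", "--color_transfer", "--exstyle_path"}


def ignored_for_toonify(argv: Sequence[str]) -> List[str]:
    """Return the VToonify-D-only options present in argv when --backbone is toonify."""
    tokens: List[str] = []
    for arg in argv:
        # Split "--flag=value" into "--flag", "value" so both spellings are handled.
        tokens.extend(arg.split("=", 1) if arg.startswith("--") else [arg])
    try:
        backbone = tokens[tokens.index("--backbone") + 1]
    except (ValueError, IndexError):
        return []  # no backbone given (VToonify-D) or no value after it
    if backbone != "toonify":
        return []
    return [t for t in tokens if t in D_ONLY_OPTIONS]


if __name__ == "__main__":
    argv = ["--content", "./data/038648.jpg", "--backbone", "toonify", "--style_id", "3"]
    for opt in ignored_for_toonify(argv):
        print(f"warning: {opt} is not used by VToonify-T")
```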

As with VToonify-D, specify --video to perform video toonification.

(2) Training VToonify

Download the supporting models to the ./checkpoint/ folder and arrange them in this folder structure:

| Model | Description |
|---|---|
| stylegan2-ffhq-config-f.pt | StyleGAN model trained on FFHQ taken from rosinality |
| encoder.pt | Pixel2style2pixel encoder that embeds FFHQ images into StyleGAN2 Z+ latent code |
| faceparsing.pth | BiSeNet for face parsing from face-parsing.PyTorch |
| directions.npy | Editing vectors taken from LowRankGAN for editing face attributes |
| Toonify/DualStyleGAN | pre-trained StyleGAN-based toonification models |

To customize your own style, you may need to train a new Toonify/DualStyleGAN model following here.

Train VToonify-D

Given the supporting models arranged in the default folder structure, we can simply pre-train the encoder and train the whole VToonify-D by running

# for pre-training the encoder
python -m torch.distributed.launch --nproc_per_node=N_GPU --master_port=PORT train_vtoonify_d.py \
  --iter ITERATIONS --stylegan_path DUALSTYLEGAN_PATH --exstyle_path EXSTYLE_CODE_PATH \
  --batch BATCH_SIZE --name SAVE_NAME --pretrain

# for training VToonify-D given the pre-trained encoder
python -m torch.distributed.launch --nproc_per_node=N_GPU --master_port=PORT train_vtoonify_d.py \
  --iter ITERATIONS --stylegan_path DUALSTYLEGAN_PATH --exstyle_path EXSTYLE_CODE_PATH \
  --batch BATCH_SIZE --name SAVE_NAME # + ADDITIONAL STYLE CONTROL OPTIONS

The models and the intermediate results are saved in ./checkpoint/SAVE_NAME/ and ./log/SAVE_NAME/, respectively.

VToonify-D provides the following STYLE CONTROL OPTIONS:

- --fix_degree: if specified, the model is trained with a fixed style degree (no degree adjustment).
- --fix_style: if specified, the model is trained with a fixed style image (no exemplar-based style transfer).
- --fix_color: if specified, the model is trained with color preservation (no color transfer).
- --style_id: the index of the style image (find the mapping between index and the style image here).
- --style_degree (default: 0.5): the degree of style.

Here is an example to reproduce the VToonify-Dsd on Cartoon style and the VToonify-D specialized for a mild toonification on the 26th cartoon style:

python -m torch.distributed.launch --nproc_per_node=8 --master_port=8765 train_vtoonify_d.py \
  --iter 30000 --stylegan_path ./checkpoint/cartoon/generator.pt --exstyle_path ./checkpoint/cartoon/refined_exstyle_code.npy \
  --batch 1 --name vtoonify_d_cartoon --pretrain

python -m torch.distributed.launch --nproc_per_node=8 --master_port=8765 train_vtoonify_d.py \
  --iter 2000 --stylegan_path ./checkpoint/cartoon/generator.pt --exstyle_path ./checkpoint/cartoon/refined_exstyle_code.npy \
  --batch 4 --name vtoonify_d_cartoon --fix_color

python -m torch.distributed.launch --nproc_per_node=8 --master_port=8765 train_vtoonify_d.py \
  --iter 2000 --stylegan_path ./checkpoint/cartoon/generator.pt --exstyle_path ./checkpoint/cartoon/refined_exstyle_code.npy \
  --batch 4 --name vtoonify_d_cartoon --fix_color --fix_degree --style_degree 0.5 --fix_style --style_id 26

Note that the pre-trained encoder is shared by different STYLE CONTROL OPTIONS. VToonify-D only needs to pre-train the encoder once for each DualStyleGAN model.

Eight GPUs are not required: the model can also be trained on a single GPU with a larger --iter.
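The README does not say how much larger --iter should be. One heuristic, which is our assumption and not the authors' guidance, is to keep the total number of training images constant, assuming --batch is the per-GPU batch size so that one 8-GPU iteration processes 8 x batch images:

```python
import math
from typing import Optional


def single_gpu_iters(iters: int, n_gpu: int, batch: int,
                     single_batch: Optional[int] = None) -> int:
    """Iterations needed on one GPU to see as many images as an n_gpu run.

    Assumes --batch is per GPU, so one multi-GPU iteration covers n_gpu * batch images.
    """
    if single_batch is None:
        single_batch = batch
    total_images = iters * n_gpu * batch
    return math.ceil(total_images / single_batch)


if __name__ == "__main__":
    print(single_gpu_iters(30000, 8, 1))  # encoder pre-training example: 240000
    print(single_gpu_iters(2000, 8, 4))   # VToonify-D training example: 16000
```

Matching image counts ignores differences in gradient noise between batch sizes, so treat the result as a starting point rather than a guarantee of equal quality.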

Tips: [how to find an ideal model] we can first train a versatile model VToonify-Dsd, and navigate around different styles and degrees. After finding the ideal setting, we can then train the model specialized in that setting for high-quality stylization.
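Navigating styles and degrees with a versatile VToonify-Dsd amounts to a small grid over the documented --style_id and --style_degree options. A sketch with hypothetical function names and a hypothetical ./sweep/ folder; since the README states that the default example writes ./output/077436_vtoonify_d.jpg, it assumes the same <input name>_vtoonify_d.jpg pattern for other inputs and copies each result before the next run overwrites it.

```python
import shutil
import subprocess
from itertools import product
from pathlib import Path
from typing import Iterable, List, Tuple


def sweep_commands(content: str, ckpt: str, style_ids: Iterable[int],
                   degrees: Iterable[float]) -> List[Tuple[List[str], str]]:
    """Return (command, destination file name) pairs for every style/degree combination."""
    stem = Path(content).stem
    jobs = []
    for style_id, degree in product(style_ids, degrees):
        cmd = ["python", "style_transfer.py", "--content", content, "--scale_image",
               "--ckpt", ckpt, "--style_id", str(style_id), "--style_degree", str(degree)]
        jobs.append((cmd, f"{stem}_s{style_id}_d{degree}.jpg"))
    return jobs


def run_sweep(jobs: List[Tuple[List[str], str]], out_dir: str = "./sweep") -> None:
    """Run each job from the repository root and keep a copy of every result."""
    Path(out_dir).mkdir(parents=True, exist_ok=True)
    for cmd, dest in jobs:
        subprocess.run(cmd, check=True)
        stem = Path(cmd[cmd.index("--content") + 1]).stem
        # Assumed output name, following the README's default example.
        shutil.copy(f"./output/{stem}_vtoonify_d.jpg", Path(out_dir) / dest)
```

Once a promising style_id and style_degree are found, they become the --style_id and --style_degree values for training the specialized model.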

Train VToonify-T

Training VToonify-T is similar to training VToonify-D:

# for pre-training the encoder
python -m torch.distributed.launch --nproc_per_node=N_GPU --master_port=PORT train_vtoonify_t.py \
  --iter ITERATIONS --finetunegan_path FINETUNED_MODEL_PATH \
  --batch BATCH_SIZE --name SAVE_NAME --pretrain # + ADDITIONAL STYLE CONTROL OPTION

# for training VToonify-T given the pre-trained encoder
python -m torch.distributed.launch --nproc_per_node=N_GPU --master_port=PORT train_vtoonify_t.py \
  --iter ITERATIONS --finetunegan_path FINETUNED_MODEL_PATH \
  --batch BATCH_SIZE --name SAVE_NAME # + ADDITIONAL STYLE CONTROL OPTION

VToonify-T only has one STYLE CONTROL OPTION:

- --weight (default: 1,1,1,1,1,1,1,1,1,0,0,0,0,0,0,0,0,0): 18 numbers indicating how the 18 layers of the FFHQ StyleGAN model and the fine-tuned model are blended to obtain the final Toonify model. Here is the --weight we use in the paper for different styles. Please refer to Toonify for details.
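One plausible reading of --weight, which is an assumption and not confirmed by this README, is a per-layer linear interpolation in which w is the share of the fine-tuned model. Under that reading the default (nine 1s, then nine 0s) takes the coarse layers from the fine-tuned model and the fine layers from FFHQ, as in Toonify's layer swapping. A sketch with a hypothetical function name, using NumPy arrays as stand-ins for each layer's parameters:

```python
from typing import List, Sequence

import numpy as np


def blend_layers(ffhq_layers: Sequence[np.ndarray],
                 finetuned_layers: Sequence[np.ndarray],
                 weight: Sequence[float]) -> List[np.ndarray]:
    """Per-layer blend: w * finetuned + (1 - w) * ffhq (assumed interpretation)."""
    if not (len(ffhq_layers) == len(finetuned_layers) == len(weight)):
        raise ValueError("expected one weight per layer")
    return [w * ft + (1.0 - w) * base
            for base, ft, w in zip(ffhq_layers, finetuned_layers, weight)]
```

With the Arcane weight from the example below (seven 0.5s, then eleven 1s), the first seven layers would be an even mix of both models and the remaining eleven would come entirely from the fine-tuned model.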

Here is an example to reproduce the VToonify-T model on Arcane style:

python -m torch.distributed.launch --nproc_per_node=8 --master_port=8765 train_vtoonify_t.py \
  --iter 30000 --finetunegan_path ./checkpoint/arcane/finetune-000600.pt \
  --batch 1 --name vtoonify_t_arcane --pretrain --weight 0.5 0.5 0.5 0.5 0.5 0.5 0.5 1 1 1 1 1 1 1 1 1 1 1

python -m torch.distributed.launch --nproc_per_node=8 --master_port=8765 train_vtoonify_t.py \
  --iter 2000 --finetunegan_path ./checkpoint/arcane/finetune-000600.pt \
  --batch 4 --name vtoonify_t_arcane --weight 0.5 0.5 0.5 0.5 0.5 0.5 0.5 1 1 1 1 1 1 1 1 1 1 1

(3) Results

Our framework is compatible with existing StyleGAN-based image toonification models to extend them to video toonification, and inherits their appealing features for flexible style control. With DualStyleGAN as the backbone, our VToonify is able to transfer the style of various reference images and adjust the style degree in one model.

Here are the color interpolated results of VToonify-D and VToonify-Dc on Arcane, Pixar and Comic styles.

Citation

If you find this work useful for your research, please consider citing our paper:

@article{yang2022Vtoonify,
  title={VToonify: Controllable High-Resolution Portrait Video Style Transfer},
  author={Yang, Shuai and Jiang, Liming and Liu, Ziwei and Loy, Chen Change},
  journal={ACM Transactions on Graphics (TOG)},
  volume={41},
  number={6},
  articleno={203},
  pages={1--15},
  year={2022},
  publisher={ACM New York, NY, USA},
  doi={10.1145/3550454.3555437},
}

Acknowledgments

The code is mainly developed based on stylegan2-pytorch, pixel2style2pixel and DualStyleGAN.
