Yet-Another-EfficientDet-Pytorch


A PyTorch re-implementation of the official EfficientDet, with SOTA real-time performance and pretrained weights.

Top Related Projects

Detectron2 is a platform for object detection, segmentation and other visual recognition tasks.

YOLOv5 🚀 in PyTorch > ONNX > CoreML > TFLite

Mask R-CNN for object detection and instance segmentation on Keras and TensorFlow

OpenMMLab Detection Toolbox and Benchmark

Models and examples built with TensorFlow

Implementation of paper - YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors

Quick Overview

Yet-Another-EfficientDet-Pytorch is an implementation of the EfficientDet object detection algorithm in PyTorch. It aims to provide a flexible and efficient implementation of the EfficientDet architecture, allowing for easy training and deployment of object detection models.

Pros

- High-performance implementation of EfficientDet in PyTorch

- Supports various EfficientDet variants (D0 to D7, plus D7X)

- Includes pre-trained models for quick start and fine-tuning

- Provides tools for training, evaluation, and inference

Cons

- Limited documentation and examples

- May require deep understanding of EfficientDet architecture

- Dependency on specific versions of libraries may cause compatibility issues

- Not as actively maintained as some other object detection libraries

Code Examples

- Loading a pre-trained model and performing inference (this repo's own API, following its efficientdet_test.py script):

import torch
from backbone import EfficientDetBackbone
from efficientdet.utils import BBoxTransform, ClipBoxes
from utils.utils import preprocess, invert_affine, postprocess

model = EfficientDetBackbone(compound_coef=0, num_classes=80)  # COCO weights have 80 classes
model.load_state_dict(torch.load('weights/efficientdet-d0.pth', map_location='cpu'))
model.eval()

# preprocess() reads the image, normalizes it and resizes/pads it to the network input size
ori_imgs, framed_imgs, framed_metas = preprocess('test/img.png', max_size=512)
x = torch.stack([torch.from_numpy(fi) for fi in framed_imgs], 0).float().permute(0, 3, 1, 2)

with torch.no_grad():
    features, regression, classification, anchors = model(x)
    # decode and clip boxes, then filter with score threshold 0.2 and NMS IoU threshold 0.2
    out = postprocess(x, anchors, regression, classification,
                      BBoxTransform(), ClipBoxes(), 0.2, 0.2)
out = invert_affine(framed_metas, out)  # map boxes back to original image coordinates

- Training a custom EfficientDet model (training is driven by the train.py script; see Training below):

python train.py -c 1 -p your_project_name --batch_size 8 --lr 1e-3 --num_epochs 10 --load_weights /path/to/your/weights/efficientdet-d1.pth

- Evaluating model performance (COCO-style mAP via the coco_eval.py script):

python coco_eval.py -p your_project_name -c 2 -w /path/to/your/weights

Getting Started

- Clone the repository:

git clone https://github.com/zylo117/Yet-Another-EfficientDet-Pytorch.git

cd Yet-Another-EfficientDet-Pytorch

- Install the required dependencies (the Demo section below pins torch==1.4.0 and torchvision==0.5.0):

pip install pycocotools numpy opencv-python tqdm tensorboard tensorboardX pyyaml webcolors

pip install torch torchvision

- Download pre-trained weights (for example, efficientdet-d0.pth into weights/) or train your own model:

import torch
from backbone import EfficientDetBackbone

model = EfficientDetBackbone(compound_coef=0, num_classes=80)
model.load_state_dict(torch.load('weights/efficientdet-d0.pth', map_location='cpu'))
model.requires_grad_(False)
model.eval()

# Use model for inference, e.g. by running python efficientdet_test.py

Competitor Comparisons

Detectron2 is a platform for object detection, segmentation and other visual recognition tasks.

Pros of Detectron2

- Comprehensive library with support for multiple object detection architectures

- Extensive documentation and community support

- Modular design allowing easy customization and extension

Cons of Detectron2

- Steeper learning curve due to its complexity

- Potentially higher computational requirements for some models

Code Comparison

Detectron2:

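import cv2

from detectron2.config import get_cfg

from detectron2.engine import DefaultPredictor

cfg = get_cfg()

cfg.merge_from_file("path/to/config.yaml")

predictor = DefaultPredictor(cfg)

image = cv2.imread("input.jpg")  # DefaultPredictor takes an HxWxC image array in BGR order

outputs = predictor(image)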

Yet-Another-EfficientDet-Pytorch:

from backbone import EfficientDetBackbone

import torch

model = EfficientDetBackbone(compound_coef=0, num_classes=80)

model.load_state_dict(torch.load('weights/efficientdet-d0.pth'))

outputs = model(images)

Both repositories provide implementations for object detection, but Detectron2 offers a more comprehensive suite of tools and models. Yet-Another-EfficientDet-Pytorch focuses specifically on EfficientDet, making it potentially easier to use for this particular architecture. Detectron2's code is more abstracted, while Yet-Another-EfficientDet-Pytorch's implementation is more straightforward for EfficientDet models.

YOLOv5 🚀 in PyTorch > ONNX > CoreML > TFLite

Pros of YOLOv5

- Higher performance and faster inference speed on various hardware platforms

- More extensive documentation and community support

- Regular updates and maintenance, including new features and bug fixes

Cons of YOLOv5

- Larger model size, which may impact deployment on resource-constrained devices

- Steeper learning curve for beginners due to its more complex architecture

Code Comparison

YOLOv5:

from models.experimental import attempt_load

model = attempt_load('yolov5s.pt', map_location=device)

results = model(img)

Yet-Another-EfficientDet-Pytorch:

from backbone import EfficientDetBackbone

model = EfficientDetBackbone(compound_coef=0, num_classes=len(obj_list))

model.load_state_dict(torch.load(weights_path, map_location=device))

features, regression, classification, anchors = model(img)

YOLOv5 offers a more streamlined approach to model loading and inference, while Yet-Another-EfficientDet-Pytorch provides more granular control over the model's components. YOLOv5's code is generally more concise and easier to use for quick implementations, whereas Yet-Another-EfficientDet-Pytorch may be preferred for more customized applications or when working with EfficientDet architectures specifically.

Mask R-CNN for object detection and instance segmentation on Keras and TensorFlow

Pros of Mask_RCNN

- Implements instance segmentation in addition to object detection

- Well-documented with extensive tutorials and examples

- Supports multiple backbones (ResNet50, ResNet101)

Cons of Mask_RCNN

- Slower inference speed compared to EfficientDet

- Higher memory consumption during training and inference

- Less flexible in terms of model scaling and customization

Code Comparison

Mask_RCNN:

import mrcnn.model as modellib

model = modellib.MaskRCNN(mode="inference", config=config, model_dir=MODEL_DIR)

model.load_weights(COCO_MODEL_PATH, by_name=True)

results = model.detect([image], verbose=1)

Yet-Another-EfficientDet-Pytorch:

import torch

from backbone import EfficientDetBackbone

model = EfficientDetBackbone(compound_coef=0, num_classes=len(obj_list))

model.load_state_dict(torch.load(weights_path))

features, regression, classification, anchors = model(images)

The Mask_RCNN code focuses on loading a pre-trained model and performing inference, while the EfficientDet code demonstrates model initialization and forward pass. EfficientDet's implementation appears more modular, allowing for easier customization of the model architecture.

OpenMMLab Detection Toolbox and Benchmark

Pros of mmdetection

- Comprehensive library with support for multiple object detection algorithms

- Extensive documentation and active community support

- Modular design allowing easy customization and extension

Cons of mmdetection

- Steeper learning curve due to its complexity and extensive features

- Potentially higher computational requirements for training and inference

Code Comparison

mmdetection:

from mmdet.apis import init_detector, inference_detector

config_file = 'configs/faster_rcnn/faster_rcnn_r50_fpn_1x_coco.py'

checkpoint_file = 'checkpoints/faster_rcnn_r50_fpn_1x_coco_20200130-047c8118.pth'

model = init_detector(config_file, checkpoint_file, device='cuda:0')

result = inference_detector(model, 'test.jpg')

Yet-Another-EfficientDet-Pytorch:

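import torch
from backbone import EfficientDetBackbone
from utils.utils import preprocess

model = EfficientDetBackbone(compound_coef=0, num_classes=80)
model.load_state_dict(torch.load('weights/efficientdet-d0.pth', map_location='cpu'))
model.eval()

# The model expects a normalized NCHW float tensor, not a raw cv2 image,
# so read and resize/pad 'test.jpg' with the repo's preprocess() helper first.
ori_imgs, framed_imgs, framed_metas = preprocess('test.jpg', max_size=512)
x = torch.from_numpy(framed_imgs[0]).unsqueeze(0).permute(0, 3, 1, 2).float()

with torch.no_grad():
    features, regression, classification, anchors = model(x)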

Both repositories provide implementations of object detection algorithms, but mmdetection offers a more comprehensive suite of tools and models, while Yet-Another-EfficientDet-Pytorch focuses specifically on EfficientDet implementations. mmdetection's code structure is more modular and configurable, while Yet-Another-EfficientDet-Pytorch provides a more straightforward implementation for EfficientDet models.

Models and examples built with TensorFlow

Pros of models

- Comprehensive collection of official TensorFlow models and examples

- Extensive documentation and community support

- Regular updates and maintenance by Google's TensorFlow team

Cons of models

- Larger and more complex repository structure

- Steeper learning curve for beginners

- May include unnecessary components for specific use cases

Code Comparison

models:

import tensorflow as tf

from object_detection.utils import label_map_util

from object_detection.utils import visualization_utils as viz_utils

model = tf.saved_model.load('path/to/saved_model')

category_index = label_map_util.create_category_index_from_labelmap(PATH_TO_LABELS)

Yet-Another-EfficientDet-Pytorch:

from backbone import EfficientDetBackbone

import torch

model = EfficientDetBackbone(compound_coef=0, num_classes=len(obj_list))

model.load_state_dict(torch.load(weights_path, map_location='cpu'))

model.requires_grad_(False)

model.eval()

The models repository offers a wide range of pre-implemented models and utilities, making it suitable for various tasks. However, its complexity may be overwhelming for specific projects. Yet-Another-EfficientDet-Pytorch focuses solely on EfficientDet implementation, providing a more streamlined approach for object detection tasks using PyTorch.

Implementation of paper - YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors

Pros of YOLOv7

- Higher performance: YOLOv7 generally achieves better accuracy and speed compared to EfficientDet implementations

- More versatile: Supports a wider range of tasks, including instance segmentation and pose estimation

- Active development: Regularly updated with new features and improvements

Cons of YOLOv7

- More complex architecture: Can be harder to understand and modify compared to EfficientDet

- Larger model size: YOLOv7 models tend to be larger, which may impact deployment on resource-constrained devices

- Steeper learning curve: Requires more expertise to fine-tune and optimize for specific use cases

Code Comparison

YOLOv7:

from models.experimental import attempt_load

from utils.datasets import LoadImages

from utils.general import non_max_suppression

model = attempt_load(weights, map_location=device)

dataset = LoadImages(source, img_size=imgsz, stride=stride)

Yet-Another-EfficientDet-Pytorch:

from backbone import EfficientDetBackbone

from efficientdet.utils import BBoxTransform, ClipBoxes

model = EfficientDetBackbone(compound_coef=compound_coef, num_classes=len(obj_list))

regressBoxes = BBoxTransform()

clipBoxes = ClipBoxes()

Both repositories provide implementations of popular object detection architectures, but YOLOv7 offers higher performance and more features at the cost of increased complexity.

README

Yet Another EfficientDet Pytorch

A PyTorch re-implementation of the official EfficientDet with SOTA real-time performance. Original paper: https://arxiv.org/abs/1911.09070

Having trouble training? I might train it for you

If you have trouble training on a dataset, and you are willing to share it with the public (or it's already open), post it in Issues with the help wanted tag. I might try to train it for you if I'm free, which is not guaranteed.

Requirements:

- The dataset should contain no more than 10K images, take up less than 5 GB, and be free to download, e.g. via baiduyun.

- The dataset should be in the format used by this repo.

- If you post your dataset in this repo, it is open to the world. So PLEASE DO NOT upload your confidential datasets!

- If a dataset is against the law or invades anyone's privacy, feel free to contact me to delete it.

- Most importantly, you can't demand that I train it unless I want to.

I'll post the trained weights in this repo along with the evaluation result.

Hope it helps whoever wants to try EfficientDet in PyTorch.

Training examples can be found here: tutorials. The trained weights can be found here: weights.

Performance

Pretrained weights and benchmark

The performance is very close to the paper's; it is still SOTA.

The speed/FPS test includes post-processing time, with no JIT or data-precision tricks.

| Coefficient | Weights (.pth) | GPU Mem (MB) | FPS | Extreme FPS (batch size 32) | mAP 0.5:0.95 (this repo) | mAP 0.5:0.95 (official) |
|---|---|---|---|---|---|---|
| D0 | efficientdet-d0.pth | 1049 | 36.20 | 163.14 | 33.1 | 33.8 |
| D1 | efficientdet-d1.pth | 1159 | 29.69 | 63.08 | 38.8 | 39.6 |
| D2 | efficientdet-d2.pth | 1321 | 26.50 | 40.99 | 42.1 | 43.0 |
| D3 | efficientdet-d3.pth | 1647 | 22.73 | - | 45.6 | 45.8 |
| D4 | efficientdet-d4.pth | 1903 | 14.75 | - | 48.8 | 49.4 |
| D5 | efficientdet-d5.pth | 2255 | 7.11 | - | 50.2 | 50.7 |
| D6 | efficientdet-d6.pth | 2985 | 5.30 | - | 50.7 | 51.7 |
| D7 | efficientdet-d7.pth | 3819 | 3.73 | - | 52.7 | 53.7 |
| D7X | efficientdet-d8.pth | 3983 | 2.39 | - | 53.9 | 55.1 |
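To read the last two columns together, the short sketch below computes how far this repo's mAP sits below the official mAP for each coefficient. The numbers are copied from the table above; the names `MAP_TABLE` and `map_gaps` are ours, for illustration only.

```python
# mAP 0.5:0.95 values copied from the benchmark table: (this repo, official)
MAP_TABLE = {
    "D0": (33.1, 33.8),
    "D1": (38.8, 39.6),
    "D2": (42.1, 43.0),
    "D3": (45.6, 45.8),
    "D4": (48.8, 49.4),
    "D5": (50.2, 50.7),
    "D6": (50.7, 51.7),
    "D7": (52.7, 53.7),
    "D7X": (53.9, 55.1),
}


def map_gaps(table):
    """Return {coefficient: official - this_repo}, rounded to 0.1 mAP."""
    return {coef: round(official - ours, 1) for coef, (ours, official) in table.items()}


gaps = map_gaps(MAP_TABLE)
for coef, gap in gaps.items():
    print(f"{coef}: {gap:.1f} mAP below official")
```

Every gap is between 0.2 (D3) and 1.2 (D7X) mAP, which is what "very close to the paper's" amounts to in numbers.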

Update Log

[2020-07-23] supports efficientdet-d7x, mAP 53.9, using efficientnet-b7 as its backbone and an extra, deeper BiFPN pyramid level. For the sake of simplicity, let's call it efficientdet-d8.

[2020-07-15] update efficientdet-d7 weights, mAP 52.7

[2020-05-11] add boolean string conversion to make sure head_only works

[2020-05-10] replace nms with batched_nms to further improve mAP by 0.5~0.7, thanks Laughing-q.

[2020-05-04] fix coco category id mismatch bug, but it shouldn't affect training on custom dataset.

[2020-04-14] fixed loss function bug. Please pull the latest code.

[2020-04-14] for those who need help or can't get a good result after several epochs, check out this tutorial. You can run it on colab with GPU support.

[2020-04-10] wrap the loss function within the training model, so that memory usage is balanced when training with multiple GPUs, enabling training with a bigger batch size.

[2020-04-10] add D7 (D6 with larger input size and larger anchor scale) support and test its mAP

[2020-04-09] allow custom anchor scales and ratios

[2020-04-08] add D6 support and test its mAP

[2020-04-08] add training script and its doc; update eval script and simple inference script.

[2020-04-07] tested D0-D5 mAP, result seems nice, details can be found here

[2020-04-07] fix anchors strategies.

[2020-04-06] adapt anchor strategies.

[2020-04-05] create this repository.
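The [2020-05-10] entry swaps plain NMS for batched NMS. The practical difference is that batched NMS only lets a box suppress boxes of the same class, so overlapping detections of different classes both survive. Below is a minimal pure-Python sketch of that idea; the function names are ours, and as far as we know the repo itself calls torchvision's `batched_nms` on tensors rather than anything like this.

```python
def iou(a, b):
    """Intersection-over-union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def batched_nms(boxes, scores, class_ids, iou_threshold):
    """Greedy NMS applied independently per class; returns kept indices by descending score."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # A box is only suppressed by an already-kept box of the SAME class.
        if all(class_ids[k] != class_ids[i] or iou(boxes[i], boxes[k]) <= iou_threshold
               for k in keep):
            keep.append(i)
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (0, 0, 10, 10)]
scores = [0.9, 0.8, 0.7]
class_ids = [0, 0, 1]  # box 2 overlaps box 0 exactly but belongs to another class
print(batched_nms(boxes, scores, class_ids, iou_threshold=0.5))  # [0, 2]
```

With a single class for all three boxes, the same call keeps only box 0, which is what plain class-agnostic NMS would do.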

Demo

# install requirements

pip install pycocotools numpy opencv-python tqdm tensorboard tensorboardX pyyaml webcolors

pip install torch==1.4.0

pip install torchvision==0.5.0

# run the simple inference script

python efficientdet_test.py

Training

Training EfficientDet is a painful and time-consuming task. You shouldn't expect to get a good result within a day or two. Please be patient.

Check out this tutorial if you are new to this. You can run it on colab with GPU support.

1. Prepare your dataset

# your dataset structure should be like this

datasets/
    -your_project_name/
        -train_set_name/
            -*.jpg
        -val_set_name/
            -*.jpg
        -annotations
            -instances_{train_set_name}.json
            -instances_{val_set_name}.json

# for example, coco2017

datasets/
    -coco2017/
        -train2017/
            -000000000001.jpg
            -000000000002.jpg
            -000000000003.jpg
        -val2017/
            -000000000004.jpg
            -000000000005.jpg
            -000000000006.jpg
        -annotations
            -instances_train2017.json
            -instances_val2017.json
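Before training, the layout above can be checked with a small helper that builds the paths the tree implies. This is a sketch under our own assumptions: `expected_paths` and `missing_paths` are hypothetical names, and the helper only mirrors the directory tree shown, not the repo's actual data loader.

```python
from pathlib import Path


def expected_paths(root, project, train_set, val_set):
    """Return the image folders and annotation files implied by the dataset layout."""
    base = Path(root) / project
    return {
        "train_images": base / train_set,
        "val_images": base / val_set,
        "train_ann": base / "annotations" / f"instances_{train_set}.json",
        "val_ann": base / "annotations" / f"instances_{val_set}.json",
    }


def missing_paths(paths):
    """List every expected path that does not exist on disk."""
    return [str(p) for p in paths.values() if not p.exists()]


paths = expected_paths("datasets", "coco2017", "train2017", "val2017")
print(paths["train_ann"].as_posix())  # datasets/coco2017/annotations/instances_train2017.json
print(missing_paths(paths))
```

An empty list from `missing_paths` means every folder and annotation file is where the layout expects it.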

2. Manually set project-specific parameters

# create a yml file {your_project_name}.yml under the 'projects' folder

# modify it following 'coco.yml'

# for example

project_name: coco

train_set: train2017

val_set: val2017

num_gpus: 4 # 0 means using cpu, 1-N means using gpus

# mean and std in RGB order; this part can usually remain unchanged as long as your dataset is similar to coco.

mean: [0.485, 0.456, 0.406]

std: [0.229, 0.224, 0.225]

# these are the coco anchors; change them if necessary

anchors_scales: '[2 ** 0, 2 ** (1.0 / 3.0), 2 ** (2.0 / 3.0)]'

anchors_ratios: '[(1.0, 1.0), (1.4, 0.7), (0.7, 1.4)]'

# the objects of all labels in your dataset, in the order of your annotations.

# the index in this list must match your dataset's category_id.

# category_id is one-indexed,

# for example, the index of 'car' here is 2, while its category_id is 3

obj_list: ['person', 'bicycle', 'car', ...]
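The index/category_id rule amounts to "category_id = index + 1". A minimal sketch, assuming the ids are contiguous and start at 1 as the 'car' example implies; the helper names are ours and the list is truncated for illustration.

```python
obj_list = ['person', 'bicycle', 'car']  # truncated example list, in annotation order


def category_id_to_label(category_id, names):
    """Map a one-indexed category_id to its class name (assumes contiguous ids)."""
    return names[category_id - 1]


def label_to_category_id(name, names):
    """Inverse mapping: class name -> one-indexed category_id."""
    return names.index(name) + 1


print(obj_list.index('car'))                   # 2
print(label_to_category_id('car', obj_list))   # 3
```

If your annotation ids have gaps, this simple offset no longer holds, so check the category list in your instances_*.json files.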

3.a. Train on coco from scratch (not necessary)

# train efficientdet-d0 on coco from scratch

# with batchsize 64

# This takes time and requires changing

# hyperparameters every few hours.

# If you have months to kill, do it.

# It's unlikely that anyone will achieve

# a better score than the one in the paper.

# The first few epochs will be rather unstable,

# which is quite normal when you train from scratch.

python train.py -c 0 --batch_size 64 --optim sgd --lr 8e-2

3.b. Train a custom dataset from scratch

# train efficientdet-d1 on a custom dataset

# with batchsize 8 and learning rate 1e-5

python train.py -c 1 -p your_project_name --batch_size 8 --lr 1e-5

3.c. Train a custom dataset with pretrained weights (Highly Recommended)

# train efficientdet-d2 on a custom dataset with pretrained weights

# with batchsize 8 and learning rate 1e-3 for 10 epochs

python train.py -c 2 -p your_project_name --batch_size 8 --lr 1e-3 --num_epochs 10 \
    --load_weights /path/to/your/weights/efficientdet-d2.pth

# with coco-pretrained weights, you can even freeze the backbone and train only the heads

# to speed up training and help convergence.

python train.py -c 2 -p your_project_name --batch_size 8 --lr 1e-3 --num_epochs 10 \
    --load_weights /path/to/your/weights/efficientdet-d2.pth \
    --head_only True

4. Early stopping a training session

# while training, press Ctrl+c; the program will catch KeyboardInterrupt,

# stop training and save the current checkpoint.
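Interrupt-and-save follows a common Python pattern: wrap the training loop in `try`/`except KeyboardInterrupt` and write a checkpoint in the handler. The sketch below illustrates that pattern only; `train_one_step` and `save_checkpoint` are hypothetical stand-ins passed in as callables, not functions from train.py.

```python
def train(num_steps, train_one_step, save_checkpoint):
    """Run a training loop; on Ctrl+C, save a checkpoint for the current step and stop."""
    step = 0
    try:
        for step in range(num_steps):
            train_one_step(step)
    except KeyboardInterrupt:
        # Ctrl+C raises KeyboardInterrupt in the main thread; catch it to exit cleanly.
        save_checkpoint(step)
        return step
    save_checkpoint(step)  # normal completion also ends with a checkpoint
    return step


saved = []


def fake_step(step):
    if step == 3:
        raise KeyboardInterrupt  # simulate the user pressing Ctrl+C


stopped_at = train(10, fake_step, saved.append)
print(stopped_at, saved)  # 3 [3]
```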

5. Resume training

# let's say you started a training session like this.

python train.py -c 2 -p your_project_name --batch_size 8 --lr 1e-3 \
    --load_weights /path/to/your/weights/efficientdet-d2.pth \
    --head_only True

# then you stopped it with Ctrl+c, and it exited with a checkpoint.

# now you want to resume training from the last checkpoint:

# simply set load_weights to 'last'

python train.py -c 2 -p your_project_name --batch_size 8 --lr 1e-3 \
    --load_weights last \
    --head_only True
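Resuming with `last` means the latest checkpoint has to be picked from the saved files. A sketch of one way to do that, assuming checkpoints are named `efficientdet-d{coef}_{epoch}_{step}.pth` (our assumption about the naming; check the files in your own weights/log folder) and choosing the one with the largest step. `last_checkpoint` is a hypothetical helper.

```python
import re

# Assumed naming scheme: efficientdet-d{coef}_{epoch}_{step}.pth
CKPT_PATTERN = re.compile(r"efficientdet-d(\d+)_(\d+)_(\d+)\.pth$")


def last_checkpoint(filenames):
    """Return the checkpoint filename with the highest global step, or None."""
    best, best_step = None, -1
    for name in filenames:
        match = CKPT_PATTERN.search(name)
        if match and int(match.group(3)) > best_step:
            best, best_step = name, int(match.group(3))
    return best


files = ["efficientdet-d2_9_900.pth", "efficientdet-d2_10_1000.pth", "notes.txt"]
print(last_checkpoint(files))  # efficientdet-d2_10_1000.pth
```

Parsing the step as an integer matters: compared as plain strings, `..._9_900.pth` would wrongly sort after `..._10_1000.pth`.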

6. Evaluate model performance

# eval on your_project, efficientdet-d5

python coco_eval.py -p your_project_name -c 5 \
    -w /path/to/your/weights

7. Debug training (optional)

# when you get bad results, you need to debug the training.

python train.py -c 2 -p your_project_name --batch_size 8 --lr 1e-3 --debug True

# then check out the test/ folder, where you can visualize the predicted boxes during training.

# don't panic if you see countless wrong boxes; that happens in the early stage of training.

# But if you still can't see a normal box after several epochs, not even one in any image,

# then it's possible that either the anchor config is inappropriate or the ground truth is corrupted.
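When boxes never look right, one sanity check is to compare anchor sizes with your object sizes. The sketch below turns the yml's scales and ratios into anchor widths and heights, assuming the usual EfficientDet convention of a base anchor of anchor_scale × stride × scale, with anchor_scale = 4.0 and strides 8 to 128 for pyramid levels P3 to P7. These defaults are our assumption, not read from this repo's config, and `anchor_sizes` is a hypothetical helper.

```python
ANCHOR_SCALE = 4.0                 # assumed base multiplier
STRIDES = [8, 16, 32, 64, 128]     # assumed strides for P3..P7
SCALES = [2 ** 0, 2 ** (1.0 / 3.0), 2 ** (2.0 / 3.0)]   # anchors_scales from the yml
RATIOS = [(1.0, 1.0), (1.4, 0.7), (0.7, 1.4)]             # anchors_ratios from the yml


def anchor_sizes(stride, scales=SCALES, ratios=RATIOS, anchor_scale=ANCHOR_SCALE):
    """Return (width, height) in pixels of every anchor centred on one cell of a given stride."""
    sizes = []
    for scale in scales:
        for rw, rh in ratios:
            base = anchor_scale * stride * scale
            sizes.append((base * rw, base * rh))
    return sizes


for stride in STRIDES:
    sizes = anchor_sizes(stride)
    widths = [w for w, _ in sizes]
    heights = [h for _, h in sizes]
    print(f"stride {stride:>3}: w {min(widths):.0f}-{max(widths):.0f}, "
          f"h {min(heights):.0f}-{max(heights):.0f}")
```

If most of your objects are much smaller than the smallest anchor, or much larger than the largest, or have aspect ratios far from the configured ones, adjusting anchors_scales and anchors_ratios is a reasonable first thing to try.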

TODO

- re-implement efficientdet

- adapt anchor strategies

- mAP tests

- training-scripts

- efficientdet D6 support

- efficientdet D7 support

- efficientdet D7x support

FAQ

Q1: Why implement this when there are already several EfficientDet PyTorch projects?

A1: Because, AFAIK, none of them fully recovers the true algorithm of the official EfficientDet. That's why their communities could not achieve, or had a hard time achieving, the same score as the official EfficientDet when training from scratch.

Q2: What exactly is the difference between this repository and the others?

A2: For example, these are the two most popular EfficientDet PyTorch implementations:

https://github.com/toandaominh1997/EfficientDet.Pytorch

https://github.com/signatrix/efficientdet

Here are the issues, and why these repositories have difficulty achieving the same score as the official one:

The first one:

- Altered EfficientNet the wrong way: strides were changed to adapt to the BiFPN, but we should be aware that EfficientNet's great performance comes from its specific combination of parameters. Any slight alteration could lead to worse performance.

The second one:

- PyTorch's BatchNormalization is slightly different from TensorFlow's: momentum_pytorch = 1 - momentum_tensorflow. I would not have noticed this trap had I paid less attention. signatrix/efficientdet inherited the momentum parameter from TensorFlow unchanged, so its BN performs badly because the running mean and running variance are dominated by the new input.

- Mis-implementation of Depthwise-Separable Conv2D. Depthwise-Separable Conv2D is Depthwise-Conv2D, then Pointwise-Conv2D, then BiasAdd: there is only one BiasAdd after the two Conv2D layers, while signatrix/efficientdet has an extra BiasAdd on the Depthwise-Conv2D.

- Misunderstanding of the first parameter of MaxPooling2D: the first parameter is kernel_size, not stride.

- Missing BN after the down-channel of the EfficientNet output features.

- Using the wrong output features of EfficientNet. This is a big one. It takes whatever output has a conv stride of 2, but that's wrong: it should be the output whose next conv has stride 2, or the final output of EfficientNet.

- Does not apply 'same' padding on Conv2D and Pooling.

- Missing swish activation after several operations.

- Missing Conv/BN operations in BiFPN, Regressor and Classifier. This one is very tricky: unless you dig deep into the official implementation, you won't notice that some identical-looking operations use different weights.

Illustration of a minimal BiFPN unit:

    P7_0 -------------------------> P7_2 -------->
       |-------------|                ↑
                     ↓                |
    P6_0 ---------> P6_1 ---------> P6_2 -------->
       |-------------|--------------↑ ↑
                     ↓                |
    P5_0 ---------> P5_1 ---------> P5_2 -------->
       |-------------|--------------↑ ↑
                     ↓                |
    P4_0 ---------> P4_1 ---------> P4_2 -------->
       |-------------|--------------↑ ↑
                     |--------------↓ |
    P3_0 -------------------------> P3_2 -------->

For example, P4 is down-channeled to P4_0, which then goes to P4_1. Anyone might take it for granted that P4_0 also goes directly to P4_2, right?

That's why they are wrong: P4 should be down-channeled again, with different weights, to P4_0_another, which then goes to P4_2.

And finally, some common issues: their anchor decoders and encoders are different from the original ones, but that is not the main reason they perform badly.

Also, Conv2dStaticSamePadding from EfficientNet-PyTorch does not behave like TensorFlow's; the padding strategy is different. So I implemented a real TensorFlow-style Conv2dStaticSamePadding and MaxPool2dStaticSamePadding myself.
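TensorFlow's 'SAME' padding fixes the output size at ceil(in / stride) and, when the required padding is odd, puts the extra pixel on the right/bottom. A pure-Python sketch of that arithmetic for one spatial dimension (the function name is ours; this illustrates the TensorFlow rule, not this repo's layer code):

```python
import math


def tf_same_padding(in_size, kernel_size, stride, dilation=1):
    """Return (pad_before, pad_after) so that out_size == ceil(in_size / stride)."""
    out_size = math.ceil(in_size / stride)
    effective_kernel = (kernel_size - 1) * dilation + 1
    pad_total = max((out_size - 1) * stride + effective_kernel - in_size, 0)
    pad_before = pad_total // 2          # TF puts the smaller half first
    return pad_before, pad_total - pad_before


# A 3x3 max-pool with stride 2 (kernel_size first, stride second) on a 64-pixel side:
print(tf_same_padding(64, kernel_size=3, stride=2))  # (0, 1)
```

The asymmetric (0, 1) split is something a symmetric PyTorch `padding=1` cannot express, which is why a TensorFlow-style padding layer is needed to match the official outputs.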

Despite the above issues, they are great repositories that enlightened me, hence this repository.

This repository is mainly based on efficientdet, with changes that make it perform as close as possible to the paper.

Btw, debugging static-graph TensorFlow v1 is really painful. Don't try to export it with automation tools like tf-onnx or mmdnn, they will only cause more problems because of its custom/complex operations.

And even if you succeeded, like I did, you will have to deal with the crazy messed up machine-generated code under the same class that takes more time to refactor than translating it from scratch.
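To make the BatchNorm momentum point from A2 concrete: TensorFlow updates its moving average as `moving = momentum * moving + (1 - momentum) * batch`, while PyTorch uses `running = (1 - momentum) * running + momentum * batch`. A pure-Python sketch of both update rules (function names are ours) shows why TensorFlow's 0.99 must become 0.01 in PyTorch:

```python
def tf_update(moving, batch_stat, momentum):
    """TensorFlow-style moving average: momentum weights the OLD value."""
    return momentum * moving + (1.0 - momentum) * batch_stat


def torch_update(running, batch_stat, momentum):
    """PyTorch-style running average: momentum weights the NEW batch statistic."""
    return (1.0 - momentum) * running + momentum * batch_stat


tf_momentum = 0.99
torch_momentum = 1.0 - tf_momentum  # the conversion: 0.99 in TF becomes 0.01 in PyTorch

running_mean, batch_mean = 0.0, 10.0
print(tf_update(running_mean, batch_mean, tf_momentum))        # about 0.1
print(torch_update(running_mean, batch_mean, torch_momentum))  # about 0.1, the same update
print(torch_update(running_mean, batch_mean, tf_momentum))     # about 9.9, dominated by the new batch
```

The last line is the failure mode described above: passing TensorFlow's value straight to PyTorch makes the running statistics track each new batch almost entirely.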

Q3: What should I do when I find a bug?

A3: Check the update log to see whether it has been fixed, then pull the latest code and try again. If that doesn't help, create a new issue and describe it in detail.

Known issues

- The official EfficientDet uses TensorFlow's bilinear interpolation to resize image inputs, which differs from many other methods (OpenCV/PyTorch), so the output is inevitably slightly different from the official one.

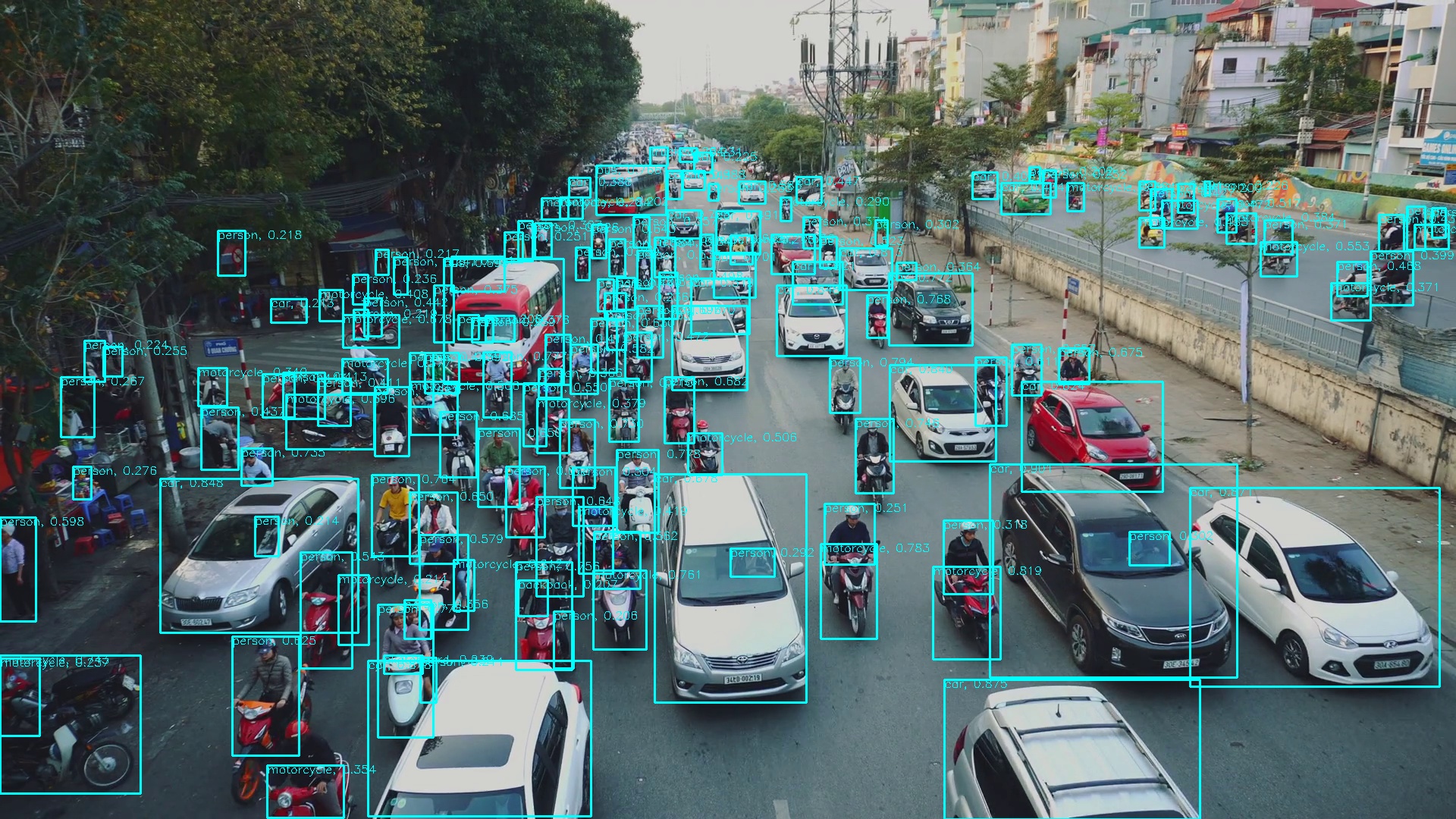

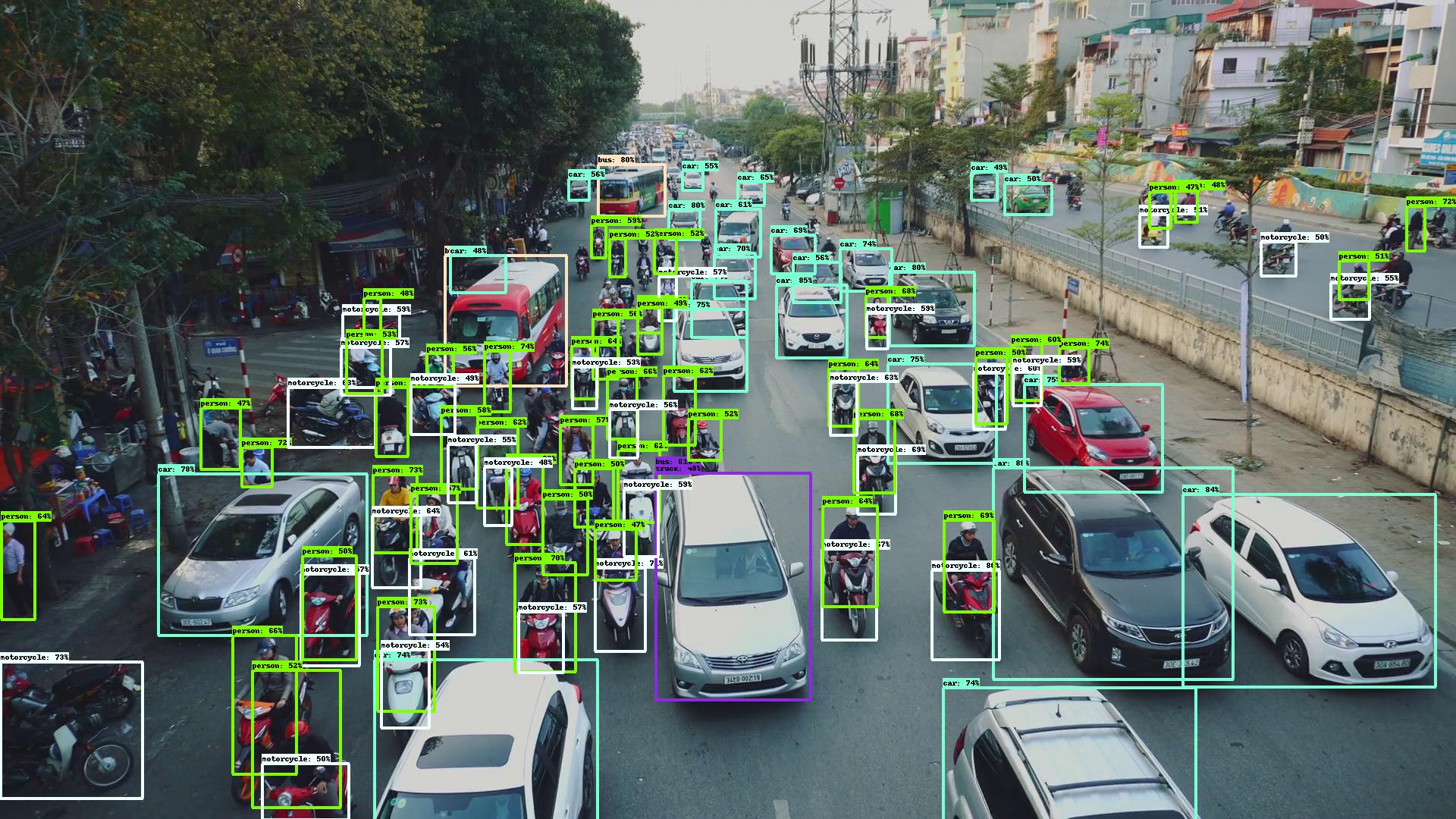

Visual Comparison

Conclusion: they provide almost the same precision. Tip: set force_input_size=1920. The official repo uses the original image size, while this repo uses the default network input size. If you compare the two repos, make sure the input size is consistent.
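Because the comparison depends on input size, it helps to see how the default size and `force_input_size` interact. The sizes below match the input resolutions listed in the EfficientDet paper (D0 to D7X), and we believe the repo's efficientdet_test.py uses the same list; verify against your checkout. The D7X entry maps to the efficientdet-d8.pth file, as in the table above. `inference_settings` is a hypothetical helper.

```python
# Default input sizes for compound_coef 0..8 (D0..D7, then D7X loaded as "d8").
# Assumed to match the repo's test script; check efficientdet_test.py before relying on them.
INPUT_SIZES = [512, 640, 768, 896, 1024, 1280, 1280, 1536, 1536]


def inference_settings(compound_coef, force_input_size=None):
    """Return (weights filename, input size) for a compound coefficient."""
    if not 0 <= compound_coef < len(INPUT_SIZES):
        raise ValueError(f"compound_coef must be in 0..{len(INPUT_SIZES) - 1}")
    size = INPUT_SIZES[compound_coef] if force_input_size is None else force_input_size
    return f"efficientdet-d{compound_coef}.pth", size


print(inference_settings(0))                          # ('efficientdet-d0.pth', 512)
print(inference_settings(8, force_input_size=1920))   # ('efficientdet-d8.pth', 1920)
```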

This Repo

Official EfficientDet

References

Appreciate the great work from the following repositories:

- google/automl

- lukemelas/EfficientNet-PyTorch

- signatrix/efficientdet

- vacancy/Synchronized-BatchNorm-PyTorch

Donation

If you like this repository, or if you'd like to support the author for any reason, you can donate to the author. Feel free to send me your name or an introduction page, and I will make sure your name is on the sponsors list.

Sponsors

Sincerely thank you for your generosity.
