mediapipe


Cross-platform, customizable ML solutions for live and streaming media.

Top Related Projects

- MediaPipe (google-ai-edge/mediapipe): Cross-platform, customizable ML solutions for live and streaming media.

- TensorFlow.js: A WebGL accelerated JavaScript library for training and deploying ML models.

- OpenCV: Open Source Computer Vision Library.

- OpenPose: Real-time multi-person keypoint detection library for body, face, hands, and foot estimation.

- Detectron2: A platform for object detection, segmentation and other visual recognition tasks.

- YOLOv5: YOLOv5 🚀 in PyTorch > ONNX > CoreML > TFLite.

Quick Overview

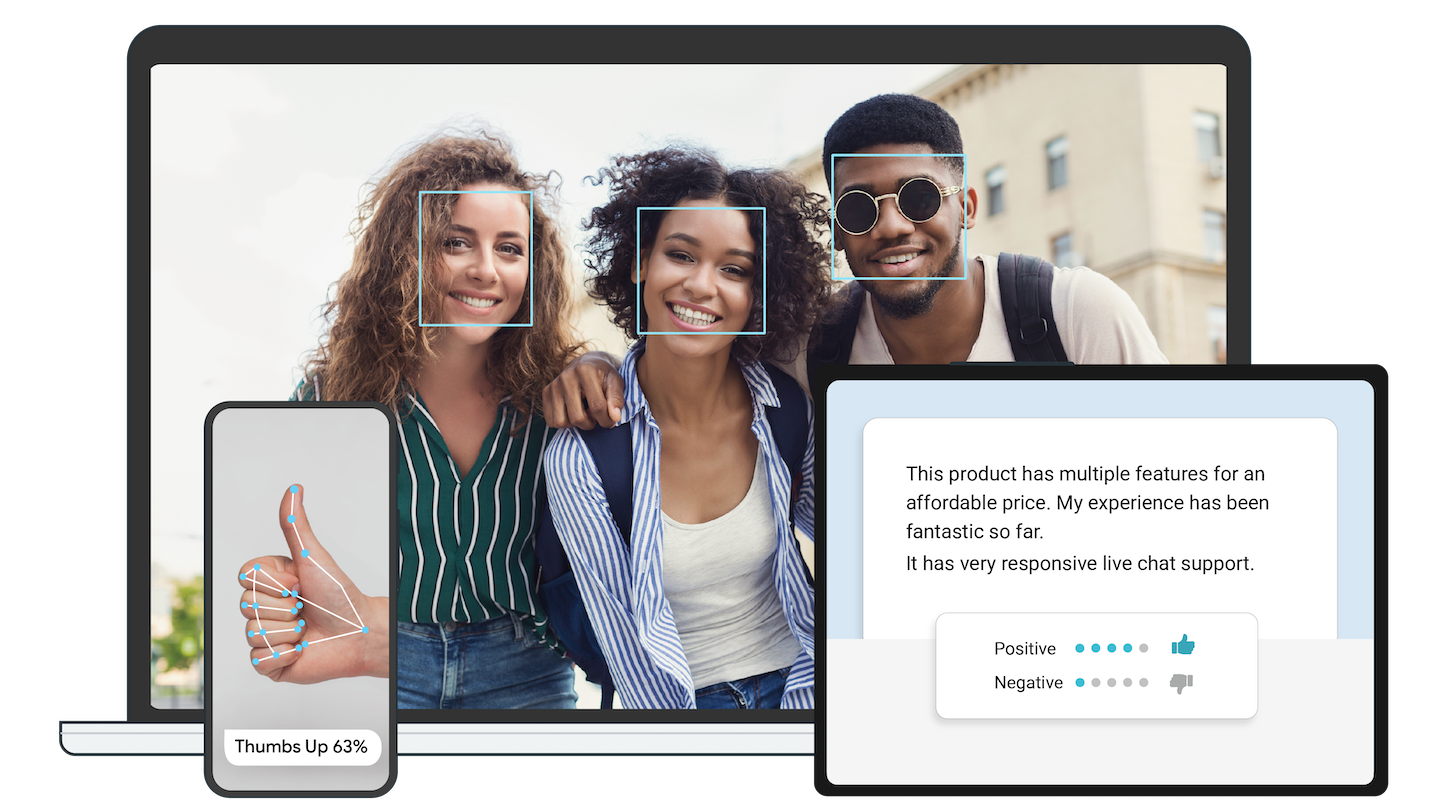

MediaPipe is an open-source framework developed by Google for building multimodal (video, audio, and sensor data) applied machine learning pipelines. It provides a set of pre-built solutions for various tasks such as face detection, pose estimation, and hand tracking, which can be easily integrated into mobile, web, and IoT applications.

Pros

- Cross-platform compatibility (Android, iOS, web, desktop)

- Optimized for real-time performance on edge devices

- Extensive collection of pre-built ML solutions

- Easy integration with popular ML frameworks like TensorFlow

Cons

- Steep learning curve for beginners

- Limited customization options for some pre-built solutions

- Documentation can be inconsistent or outdated in some areas

- Dependency management can be complex for certain platforms

Code Examples

- Face detection using MediaPipe:

import cv2
import mediapipe as mp

mp_face_detection = mp.solutions.face_detection
mp_drawing = mp.solutions.drawing_utils

with mp_face_detection.FaceDetection(min_detection_confidence=0.5) as face_detection:
    image = cv2.imread('image.jpg')
    # MediaPipe expects RGB input; OpenCV loads images as BGR
    results = face_detection.process(cv2.cvtColor(image, cv2.COLOR_BGR2RGB))
    if results.detections:
        for detection in results.detections:
            # Draw the bounding box and key points onto the original BGR image
            mp_drawing.draw_detection(image, detection)
    cv2.imshow('Face Detection', image)
    cv2.waitKey(0)
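Each face detection stores its box in `detection.location_data.relative_bounding_box`, whose `xmin`, `ymin`, `width`, and `height` are normalized to the image size, so they must be scaled before use with pixel-based APIs. The sketch below converts such a box to clamped pixel corners; `RelativeBox` and `to_pixel_box` are hypothetical names, with `RelativeBox` standing in for the protobuf message so the helper runs without MediaPipe.

```python
from dataclasses import dataclass


@dataclass
class RelativeBox:
    """Hypothetical stand-in for detection.location_data.relative_bounding_box."""
    xmin: float
    ymin: float
    width: float
    height: float


def to_pixel_box(box, image_width, image_height):
    """Convert a normalized box to (x1, y1, x2, y2) pixels, clamped to the image."""
    def clamp(value, upper):
        return max(0, min(upper, value))

    # Boxes near the frame edge can extend past [0, 1], hence the clamping
    x1 = clamp(int(box.xmin * image_width), image_width - 1)
    y1 = clamp(int(box.ymin * image_height), image_height - 1)
    x2 = clamp(int((box.xmin + box.width) * image_width), image_width - 1)
    y2 = clamp(int((box.ymin + box.height) * image_height), image_height - 1)
    return x1, y1, x2, y2


# Usage with the face detection example above (assumes `image` and `results`):
# h, w = image.shape[:2]
# for detection in results.detections:
#     x1, y1, x2, y2 = to_pixel_box(detection.location_data.relative_bounding_box, w, h)
```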

- Hand tracking using MediaPipe:

import cv2
import mediapipe as mp

mp_hands = mp.solutions.hands
mp_drawing = mp.solutions.drawing_utils

cap = cv2.VideoCapture(0)
with mp_hands.Hands(min_detection_confidence=0.5, min_tracking_confidence=0.5) as hands:
    while cap.isOpened():
        success, image = cap.read()
        if not success:
            break
        # Convert the BGR webcam frame to RGB for MediaPipe
        image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)
        results = hands.process(image)
        if results.multi_hand_landmarks:
            for hand_landmarks in results.multi_hand_landmarks:
                mp_drawing.draw_landmarks(image, hand_landmarks, mp_hands.HAND_CONNECTIONS)
        # Convert back to BGR for display; press Esc (key code 27) to quit
        cv2.imshow('Hand Tracking', cv2.cvtColor(image, cv2.COLOR_RGB2BGR))
        if cv2.waitKey(5) & 0xFF == 27:
            break
cap.release()
cv2.destroyAllWindows()
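The two thresholds in the hand-tracking example work together: in video mode, MediaPipe Hands runs its palm detector when it has no hand to track, and once the landmark model's confidence falls below `min_tracking_confidence` it falls back to detection on the next frame. The loop below is a simplified, single-hand, pure-Python model of that control flow, not MediaPipe's implementation; `process_stream`, `detect`, and `landmarks` are hypothetical names, and the two callables are assumed to return a region plus a confidence score.

```python
def process_stream(frames, detect, landmarks,
                   min_detection_confidence=0.5, min_tracking_confidence=0.5):
    """Simplified single-hand detect-then-track loop (not MediaPipe's code).

    detect(frame) -> (roi, score) and landmarks(frame, roi) -> (next_roi, score)
    are hypothetical callables. Returns, per frame, whether the detector ran.
    """
    roi = None  # region carried over from the previous frame; None = nothing tracked
    detector_ran = []
    for frame in frames:
        ran = roi is None
        if ran:
            roi, det_score = detect(frame)
            if det_score < min_detection_confidence:
                roi = None  # no confident detection: try again on the next frame
                detector_ran.append(True)
                continue
        # The landmark model refines the region and predicts where the hand goes next
        roi, track_score = landmarks(frame, roi)
        if track_score < min_tracking_confidence:
            roi = None  # tracking lost: fall back to detection on the next frame
        detector_ran.append(ran)
    return detector_ran
```

Skipping the detector while tracking succeeds is what keeps per-frame cost low; raising `min_tracking_confidence` makes re-detection more frequent, trading latency for robustness.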

- Pose estimation using MediaPipe:

import cv2
import mediapipe as mp

mp_pose = mp.solutions.pose
mp_drawing = mp.solutions.drawing_utils

# static_image_mode=True runs person detection on every input, which suits single photos
with mp_pose.Pose(static_image_mode=True, min_detection_confidence=0.5) as pose:
    image = cv2.imread('pose.jpg')
    # MediaPipe expects RGB input; OpenCV loads images as BGR
    results = pose.process(cv2.cvtColor(image, cv2.COLOR_BGR2RGB))
    if results.pose_landmarks:
        mp_drawing.draw_landmarks(image, results.pose_landmarks, mp_pose.POSE_CONNECTIONS)
    cv2.imshow('Pose Estimation', image)
    cv2.waitKey(0)
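`results.pose_landmarks.landmark` holds 33 landmarks with normalized `x` and `y` coordinates, indexed by `mp_pose.PoseLandmark`. A common next step is measuring a joint angle, such as the elbow angle from shoulder, elbow, and wrist. The helper below is a sketch that works on plain `(x, y)` tuples; `joint_angle` is a hypothetical name, and the commented usage assumes the left-arm landmarks `LEFT_SHOULDER`, `LEFT_ELBOW`, and `LEFT_WRIST`.

```python
import math


def joint_angle(a, b, c):
    """Angle ABC in degrees (0 to 180) at vertex b, for 2D points given as (x, y)."""
    ba = (a[0] - b[0], a[1] - b[1])
    bc = (c[0] - b[0], c[1] - b[1])
    # atan2 of |cross| and dot is numerically stabler than acos of a normalized dot
    cross = ba[0] * bc[1] - ba[1] * bc[0]
    dot = ba[0] * bc[0] + ba[1] * bc[1]
    return math.degrees(math.atan2(abs(cross), dot))


# Usage with the pose example above. Scale to pixels first, because normalized
# x and y are relative to image width and height respectively:
# h, w = image.shape[:2]
# lm = results.pose_landmarks.landmark
# pt = lambda i: (lm[i].x * w, lm[i].y * h)
# elbow = joint_angle(pt(mp_pose.PoseLandmark.LEFT_SHOULDER),
#                     pt(mp_pose.PoseLandmark.LEFT_ELBOW),
#                     pt(mp_pose.PoseLandmark.LEFT_WRIST))
```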

Getting Started

To get started with MediaPipe, follow these steps:

- Install MediaPipe:

pip install mediapipe

- Import the necessary modules:

import mediapipe as mp

import cv2

- Choose a solution (e.g., face detection, hand tracking, pose estimation) and initialize it:

mp_face_detection = mp.solutions.face_detection

face_detection = mp_face_detection.FaceDetection(min_detection_confidence=0.5)

- Process images or video frames using the chosen solution:

image = cv2.imread('image.jpg')

results = face_detection.process(cv2.cvtColor(image, cv2.COLOR_BGR2RGB))

Competitor Comparisons

Cross-platform, customizable ML solutions for live and streaming media.

Pros of MediaPipe

- Comprehensive cross-platform solution for building multimodal ML pipelines

- Extensive library of pre-built components for various ML tasks

- Optimized for real-time performance on mobile and edge devices

Cons of MediaPipe

- Steeper learning curve due to complex architecture

- Limited customization options for some pre-built components

- Larger binary size compared to more specialized libraries

Code Comparison

MediaPipe example:

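import cv2
import mediapipe as mp

mp_hands = mp.solutions.hands
hands = mp_hands.Hands(static_image_mode=True)
# MediaPipe expects RGB input; 'image.jpg' is a placeholder path
image = cv2.cvtColor(cv2.imread('image.jpg'), cv2.COLOR_BGR2RGB)
results = hands.process(image)
if results.multi_hand_landmarks:
    for hand_landmarks in results.multi_hand_landmarks:
        # Process hand landmarks
        pass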

Note: As the comparison is between the same repository (google-ai-edge/mediapipe), there isn't a meaningful code comparison to be made. The code example provided is a typical usage of MediaPipe for hand detection.
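The `# Process hand landmarks` placeholder is where application logic goes. Each hand in `results.multi_hand_landmarks` has 21 landmarks, named by `mp_hands.HandLandmark` (for example, `WRIST` is 0, `THUMB_TIP` is 4, `INDEX_FINGER_TIP` is 8, and `MIDDLE_FINGER_MCP` is 9). As an illustration, the sketch below flags a "pinch" when the thumb and index tips are close, dividing their distance by the wrist-to-middle-knuckle length so the result does not depend on how far the hand is from the camera. `is_pinching`, the 0.3 threshold, and this normalization are assumptions for illustration, not MediaPipe features.

```python
import math

# Indices from MediaPipe's 21-point hand model (mp_hands.HandLandmark)
WRIST, THUMB_TIP, INDEX_FINGER_TIP, MIDDLE_FINGER_MCP = 0, 4, 8, 9


def is_pinching(points, threshold=0.3):
    """Return True if the thumb and index tips nearly touch, relative to hand size.

    `points` is a sequence of 21 (x, y) tuples; `threshold` is a hypothetical
    tuning value. For non-square frames, scale x and y to pixels first.
    """
    hand_size = math.dist(points[WRIST], points[MIDDLE_FINGER_MCP])
    if hand_size == 0:
        return False  # degenerate input: avoid dividing by zero
    gap = math.dist(points[THUMB_TIP], points[INDEX_FINGER_TIP])
    return gap / hand_size < threshold


# Usage inside the landmark loop (assumes `hand_landmarks` from results):
# points = [(lm.x, lm.y) for lm in hand_landmarks.landmark]
# if is_pinching(points):
#     ...
```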

Summary

MediaPipe is a powerful and versatile framework for building machine learning pipelines, particularly suited for real-time applications on mobile and edge devices. While it offers a wide range of pre-built components and optimized performance, it may require more effort to learn and integrate compared to simpler, more specialized libraries. The trade-off between its comprehensive features and complexity makes it an excellent choice for projects that require advanced multimodal ML capabilities and cross-platform support.

A WebGL accelerated JavaScript library for training and deploying ML models.

Pros of TensorFlow.js

- Runs machine learning models directly in the browser or Node.js

- Supports a wide range of pre-trained models and custom model creation

- Extensive documentation and community support

Cons of TensorFlow.js

- Performance may be slower compared to native implementations

- Limited support for mobile devices and edge computing

- Larger file size and longer initial load times for web applications

Code Comparison

MediaPipe (C++):

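#include "mediapipe/framework/calculator_framework.h"
#include "mediapipe/framework/formats/landmark.pb.h"

namespace mediapipe {

// GetContract declares the calculator's streams; Process runs once per input set.
class HandLandmarkCalculator : public CalculatorBase {
 public:
  static absl::Status GetContract(CalculatorContract* cc);
  absl::Status Process(CalculatorContext* cc) override;
};

}  // namespace mediapipe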

TensorFlow.js (JavaScript):

import * as tf from '@tensorflow/tfjs';

const model = await tf.loadLayersModel('path/to/model.json');

const prediction = model.predict(tf.tensor2d([[1, 2, 3, 4]]));

console.log(prediction.dataSync());

MediaPipe focuses on efficient, cross-platform ML solutions for mobile and edge devices, while TensorFlow.js prioritizes running ML models in web browsers and JavaScript environments. MediaPipe offers optimized C++ implementations, whereas TensorFlow.js provides a more accessible JavaScript API for web developers.

Open Source Computer Vision Library

Pros of OpenCV

- Broader scope and functionality, covering a wide range of computer vision tasks

- Larger community and more extensive documentation

- Better performance for traditional computer vision algorithms

Cons of OpenCV

- Steeper learning curve for beginners

- Less focus on mobile and edge devices

- Heavier resource requirements for some applications

Code Comparison

MediaPipe example (Python):

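import cv2
import mediapipe as mp

mp_hands = mp.solutions.hands
hands = mp_hands.Hands(static_image_mode=True)
# MediaPipe expects RGB input; 'image.jpg' is a placeholder path
image = cv2.cvtColor(cv2.imread('image.jpg'), cv2.COLOR_BGR2RGB)
results = hands.process(image)
if results.multi_hand_landmarks:
    for hand_landmarks in results.multi_hand_landmarks:
        # Process hand landmarks
        pass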

OpenCV example (Python):

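import cv2

image = cv2.imread('image.jpg')
# The Haar cascade XML files ship with opencv-python under cv2.data.haarcascades
face_cascade = cv2.CascadeClassifier(cv2.data.haarcascades + 'haarcascade_frontalface_default.xml')
gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
faces = face_cascade.detectMultiScale(gray, 1.1, 4)  # scaleFactor=1.1, minNeighbors=4
for (x, y, w, h) in faces:
    cv2.rectangle(image, (x, y), (x+w, y+h), (255, 0, 0), 2)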

MediaPipe focuses on specific tasks like hand tracking, while OpenCV provides a more general-purpose toolkit for computer vision. MediaPipe offers easier implementation for certain AI-powered tasks, while OpenCV excels in traditional computer vision algorithms and offers more flexibility for custom solutions.

OpenPose: Real-time multi-person keypoint detection library for body, face, hands, and foot estimation

Pros of OpenPose

- Higher accuracy in multi-person pose estimation

- More robust in complex scenarios with occlusions

- Supports 3D pose estimation

Cons of OpenPose

- Slower processing speed, especially on CPU

- Higher computational requirements

- Less suitable for real-time applications on mobile devices

Code Comparison

OpenPose:

#include <openpose/pose/poseExtractor.hpp>

auto poseExtractor = op::PoseExtractorCaffe::getInstance(poseModel, netInputSize, outputSize, keypointScaleMode, num_gpu_start);

poseExtractor->forwardPass(netInputArray, imageSize, scaleInputToNetInputs);

MediaPipe:

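import cv2
import mediapipe as mp

mp_pose = mp.solutions.pose
pose = mp_pose.Pose(static_image_mode=False, min_detection_confidence=0.5)
# MediaPipe expects RGB input; 'image.jpg' is a placeholder path
image = cv2.cvtColor(cv2.imread('image.jpg'), cv2.COLOR_BGR2RGB)
results = pose.process(image)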

OpenPose offers more detailed configuration options and is implemented in C++, while MediaPipe provides a simpler Python API. OpenPose is better suited for high-accuracy research applications, whereas MediaPipe is more appropriate for real-time, mobile, and web-based applications due to its lighter computational footprint and easier integration.

Detectron2 is a platform for object detection, segmentation and other visual recognition tasks.

Pros of Detectron2

- More comprehensive and flexible object detection framework

- Better suited for advanced research and custom model development

- Extensive documentation and community support

Cons of Detectron2

- Steeper learning curve and more complex setup

- Higher computational requirements for training and inference

- Less optimized for mobile and edge devices

Code Comparison

MediaPipe example:

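import cv2
import mediapipe as mp

mp_face_detection = mp.solutions.face_detection
face_detection = mp_face_detection.FaceDetection()
# MediaPipe expects RGB input; 'image.jpg' is a placeholder path
image = cv2.cvtColor(cv2.imread('image.jpg'), cv2.COLOR_BGR2RGB)
results = face_detection.process(image)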

Detectron2 example:

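import cv2
from detectron2 import model_zoo
from detectron2.config import get_cfg
from detectron2.engine import DefaultPredictor

config_path = "COCO-Detection/faster_rcnn_R_50_FPN_3x.yaml"
cfg = get_cfg()
cfg.merge_from_file(model_zoo.get_config_file(config_path))
# Load the matching COCO-pretrained weights from the model zoo
cfg.MODEL.WEIGHTS = model_zoo.get_checkpoint_url(config_path)
predictor = DefaultPredictor(cfg)
# DefaultPredictor expects BGR input by default, which cv2.imread returns
outputs = predictor(cv2.imread('image.jpg'))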

MediaPipe is more straightforward for simple tasks, while Detectron2 offers more flexibility and customization options. MediaPipe is better suited for real-time applications on mobile devices, whereas Detectron2 excels in research and complex computer vision tasks on more powerful hardware.

YOLOv5 🚀 in PyTorch > ONNX > CoreML > TFLite

Pros of YOLOv5

- Faster inference speed and real-time object detection capabilities

- Extensive documentation and community support

- Easier to fine-tune and adapt for custom object detection tasks

Cons of YOLOv5

- Limited to object detection tasks, while MediaPipe offers a broader range of computer vision solutions

- May require more computational resources for training compared to some MediaPipe models

Code Comparison

YOLOv5:

import torch

model = torch.hub.load('ultralytics/yolov5', 'yolov5s')

results = model('image.jpg')

results.print()

MediaPipe:

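import cv2
import mediapipe as mp

mp_face_detection = mp.solutions.face_detection
# MediaPipe expects RGB input; 'image.jpg' is a placeholder path
image = cv2.cvtColor(cv2.imread('image.jpg'), cv2.COLOR_BGR2RGB)
with mp_face_detection.FaceDetection(min_detection_confidence=0.5) as face_detection:
    results = face_detection.process(image)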

YOLOv5 focuses on object detection with a simple API, while MediaPipe offers a variety of pre-built solutions for different computer vision tasks. YOLOv5 is more suitable for custom object detection projects, while MediaPipe provides ready-to-use models for specific applications like face detection, pose estimation, and hand tracking.

README


Attention: We have moved to https://developers.google.com/mediapipe as the primary developer documentation site for MediaPipe as of April 3, 2023.

Attention: MediaPipe Solutions Preview is an early release.

On-device machine learning for everyone

Delight your customers with innovative machine learning features. MediaPipe contains everything that you need to customize and deploy to mobile (Android, iOS), web, desktop, edge devices, and IoT, effortlessly.

Get started

You can get started with MediaPipe Solutions by checking out any of the developer guides for vision, text, and audio tasks. If you need help setting up a development environment for use with MediaPipe Tasks, check out the setup guides for Android, web apps, and Python.

Solutions

MediaPipe Solutions provides a suite of libraries and tools for you to quickly apply artificial intelligence (AI) and machine learning (ML) techniques in your applications. You can plug these solutions into your applications immediately, customize them to your needs, and use them across multiple development platforms. MediaPipe Solutions is part of the MediaPipe open source project, so you can further customize the solutions code to meet your application needs.

These libraries and resources provide the core functionality for each MediaPipe Solution:

- MediaPipe Tasks: Cross-platform APIs and libraries for deploying solutions.

- MediaPipe models: Pre-trained, ready-to-run models for use with each solution.

These tools let you customize and evaluate solutions:

- MediaPipe Model Maker: Customize models for solutions with your data.

- MediaPipe Studio: Visualize, evaluate, and benchmark solutions in your browser.

Legacy solutions

We have ended support for some MediaPipe Legacy Solutions as of March 1, 2023. All other MediaPipe Legacy Solutions will be upgraded to a new MediaPipe Solution. See the Solutions guide for details. The code repository and prebuilt binaries for all MediaPipe Legacy Solutions will continue to be provided on an as-is basis.

For more on the legacy solutions, see the documentation.

Framework

To start using MediaPipe Framework, install MediaPipe Framework and start building example applications in C++, Android, and iOS.

MediaPipe Framework is the low-level component used to build efficient on-device machine learning pipelines, similar to the premade MediaPipe Solutions.

Before using MediaPipe Framework, familiarize yourself with the following key Framework concepts:

- Packets

- Graphs

- Calculators
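MediaPipe Framework pipelines are built from packets (timestamped pieces of data), calculators (nodes that consume and produce packets), and graphs (calculators wired together by streams, usually declared in a `.pbtxt` config). The pure-Python toy below only illustrates how these ideas fit together in the simplest linear case; it is a conceptual sketch, not MediaPipe's API, and `Packet`, `ToyGraph`, `add_calculator`, and `add_packet` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Any, Callable, List


@dataclass(frozen=True)
class Packet:
    """A payload tagged with a timestamp, loosely modeled on MediaPipe packets."""
    timestamp: int
    payload: Any


class ToyGraph:
    """Hypothetical linear graph: each calculator maps one packet to the next."""

    def __init__(self) -> None:
        self.calculators: List[Callable[[Packet], Packet]] = []
        self.outputs: List[Packet] = []

    def add_calculator(self, fn: Callable[[Any], Any]) -> None:
        # Wrap a plain function so each output packet keeps its input's timestamp
        self.calculators.append(lambda p: Packet(p.timestamp, fn(p.payload)))

    def add_packet(self, packet: Packet) -> None:
        # Push the packet through every calculator in order, then collect the result
        for calculator in self.calculators:
            packet = calculator(packet)
        self.outputs.append(packet)


graph = ToyGraph()
graph.add_calculator(str.upper)
graph.add_calculator(lambda s: s + "!")
graph.add_packet(Packet(0, "abc"))
```

A real MediaPipe graph is a directed graph with multiple input and output streams and synchronizes packets by timestamp across them, which this sketch deliberately omits.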

Community

- Slack community for MediaPipe users.

- Discuss - General community discussion around MediaPipe.

- Awesome MediaPipe - A curated list of awesome MediaPipe related frameworks, libraries and software.

Contributing

We welcome contributions. Please follow these guidelines.

We use GitHub issues for tracking requests and bugs. Please post questions to Stack Overflow with the mediapipe tag.

Resources

Publications

- Bringing artworks to life with AR in Google Developers Blog

- Prosthesis control via Mirru App using MediaPipe hand tracking in Google Developers Blog

- SignAll SDK: Sign language interface using MediaPipe is now available for developers in Google Developers Blog

- MediaPipe Holistic - Simultaneous Face, Hand and Pose Prediction, on Device in Google AI Blog

- Background Features in Google Meet, Powered by Web ML in Google AI Blog

- MediaPipe 3D Face Transform in Google Developers Blog

- Instant Motion Tracking With MediaPipe in Google Developers Blog

- BlazePose - On-device Real-time Body Pose Tracking in Google AI Blog

- MediaPipe Iris: Real-time Eye Tracking and Depth Estimation in Google AI Blog

- MediaPipe KNIFT: Template-based feature matching in Google Developers Blog

- Alfred Camera: Smart camera features using MediaPipe in Google Developers Blog

- Real-Time 3D Object Detection on Mobile Devices with MediaPipe in Google AI Blog

- AutoFlip: An Open Source Framework for Intelligent Video Reframing in Google AI Blog

- MediaPipe on the Web in Google Developers Blog

- Object Detection and Tracking using MediaPipe in Google Developers Blog

- On-Device, Real-Time Hand Tracking with MediaPipe in Google AI Blog

- MediaPipe: A Framework for Building Perception Pipelines

Videos
