SimCLR

PyTorch implementation of SimCLR: A Simple Framework for Contrastive Learning of Visual Representations

Top Related Projects

- MoCo: PyTorch implementation of MoCo: https://arxiv.org/abs/1911.05722

- simclr: SimCLRv2 - Big Self-Supervised Models are Strong Semi-Supervised Learners

- Lightly: A Python library for self-supervised learning on images.

- CMC: [arXiv 2019] "Contrastive Multiview Coding", also contains implementations for MoCo and InstDis

- pytorch-metric-learning: The easiest way to use deep metric learning in your application. Modular, flexible, and extensible. Written in PyTorch.

Quick Overview

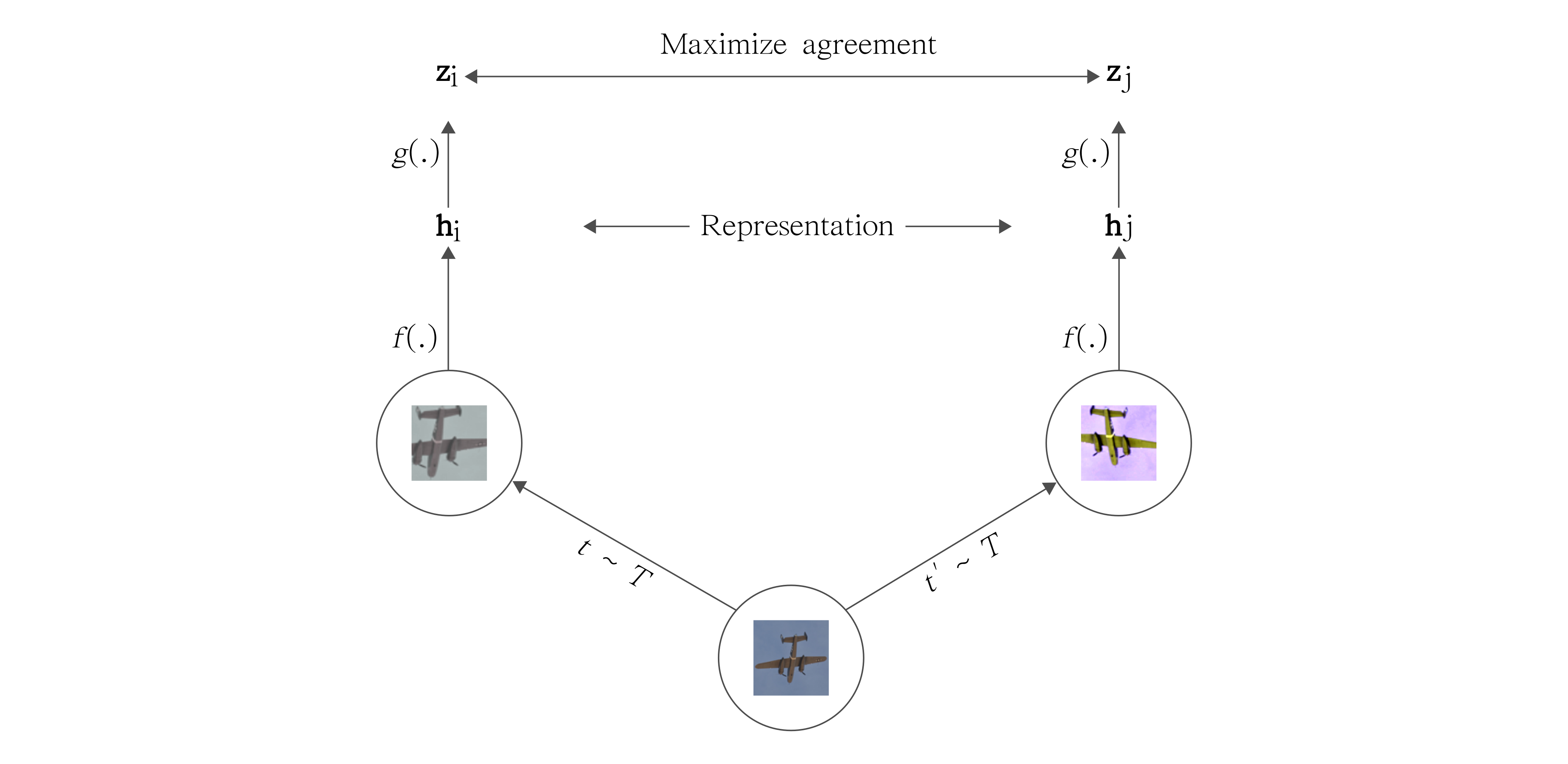

SimCLR is a PyTorch implementation of the paper "A Simple Framework for Contrastive Learning of Visual Representations" by Chen et al. It provides a self-supervised learning approach for training visual representations without labeled data, using contrastive learning techniques.
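To make the contrastive objective concrete, here is a minimal, framework-free sketch of the NT-Xent (normalized temperature-scaled cross-entropy) loss described in the SimCLR paper. It is not this repository's code: the function names `l2_normalize` and `nt_xent_loss`, the plain Python lists standing in for tensors, and the default temperature of 0.5 are assumptions made for illustration.

```python
import math


def l2_normalize(vector):
    """Scale a vector to unit length so dot products equal cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


def nt_xent_loss(z_i, z_j, temperature=0.5):
    """NT-Xent loss for N pairs: z_i[k] and z_j[k] are two views of image k."""
    z = [l2_normalize(v) for v in list(z_i) + list(z_j)]  # 2N unit vectors
    n = len(z_i)
    total = 0.0
    for a in range(2 * n):
        positive = (a + n) % (2 * n)  # index of the other view of the same image
        # Temperature-scaled cosine similarity to every other embedding in the batch
        logits = {
            b: sum(x * y for x, y in zip(z[a], z[b])) / temperature
            for b in range(2 * n)
            if b != a  # an embedding is never its own negative
        }
        log_denominator = math.log(sum(math.exp(s) for s in logits.values()))
        total += log_denominator - logits[positive]
    return total / (2 * n)


# Toy embeddings (illustrative values, not real encoder outputs)
views_a = [[1.0, 0.0], [0.0, 1.0]]
views_b = [[1.0, 0.0], [0.0, 1.0]]      # each row matches its partner in views_a
mismatched = [[0.0, 1.0], [1.0, 0.0]]   # partners swapped

print(nt_xent_loss(views_a, views_b))
print(nt_xent_loss(views_a, mismatched))
```

The loss is low when the two views of an image agree and rises when the pairing is shuffled, which is the signal the encoder is trained on.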

Pros

- Implements a state-of-the-art self-supervised learning method for computer vision tasks

- Provides a flexible and modular codebase for easy experimentation and customization

- Includes pre-trained models and evaluation scripts for quick testing and benchmarking

- Supports distributed training for faster experimentation on multiple GPUs

Cons

- Requires significant computational resources for training on large datasets

- Limited documentation and examples for advanced usage scenarios

- May require deep understanding of contrastive learning concepts for optimal use

- Dependency on specific versions of PyTorch and other libraries may cause compatibility issues

Code Examples

- Loading a pre-trained SimCLR model:

```python
from simclr import SimCLR

model = SimCLR.load_from_checkpoint('path/to/checkpoint.ckpt')
model.eval()
```

- Extracting features from an image:

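```python
import torch
from PIL import Image
from torchvision import transforms

# ImageNet-style preprocessing: resize, convert to a tensor, normalize per channel
transform = transforms.Compose([
    transforms.Resize(224),
    transforms.ToTensor(),
    transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
])

# Force three channels so grayscale or RGBA files match the normalization stats
image = Image.open('path/to/image.jpg').convert('RGB')
input_tensor = transform(image).unsqueeze(0)  # add a batch dimension

# `model` is the checkpoint loaded in the previous example
with torch.no_grad():
    features = model.encoder(input_tensor)
```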

- Training a SimCLR model:

```python
from simclr import SimCLR
from pytorch_lightning import Trainer

model = SimCLR(num_samples=50000, batch_size=256, num_epochs=100)
trainer = Trainer(gpus=1, max_epochs=100)
trainer.fit(model)
```

Getting Started

To get started with SimCLR:

- Clone the repository:

```bash
git clone https://github.com/sthalles/SimCLR.git
cd SimCLR
```

- Install dependencies:

```bash
pip install -r requirements.txt
```

- Run the training script, using the flags documented in the README:

```bash
python run.py -data ./datasets --dataset-name stl10 --epochs 100
```

- Evaluate the learned features with the linear-evaluation notebook described under Feature Evaluation in the README.

Competitor Comparisons

PyTorch implementation of MoCo: https://arxiv.org/abs/1911.05722

Pros of MoCo

- More memory-efficient, allowing for larger batch sizes

- Supports a wider range of architectures and tasks

- Better performance on some downstream tasks, especially object detection

Cons of MoCo

- Slightly more complex implementation due to the momentum encoder

- May require more careful hyperparameter tuning

- Potentially slower training due to the queue mechanism

Code Comparison

MoCo:

```python
# Momentum update
self._momentum_update_key_encoder()

# Compute key features
with torch.no_grad():
    k = self.encoder_k(im_k)
    k = nn.functional.normalize(k, dim=1)
```

SimCLR:

```python
# Compute features for both augmented views
z_i = self.encoder(x_i)
z_j = self.encoder(x_j)

# Normalize embeddings
z_i = F.normalize(z_i, dim=1)
z_j = F.normalize(z_j, dim=1)
```

Both MoCo and SimCLR are self-supervised learning frameworks for visual representation learning. MoCo uses a momentum encoder and a queue of negative samples, while SimCLR relies on large batch sizes and in-batch negatives. The code snippets highlight the key differences in their approaches to feature computation and normalization.
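The two MoCo mechanisms named above, the momentum encoder and the queue of negatives, can be sketched without any deep learning framework. The snippet below is an illustration, not code from either repository: `momentum_update` and `NegativeQueue` are hypothetical names, plain floats stand in for network weights, and the momentum value 0.999 follows the default reported in the MoCo paper.

```python
from collections import deque


def momentum_update(query_params, key_params, m=0.999):
    """Move key-encoder weights slowly toward the query encoder (EMA update)."""
    return [m * k + (1.0 - m) * q for q, k in zip(query_params, key_params)]


class NegativeQueue:
    """Fixed-size FIFO of past key embeddings that serve as negatives."""

    def __init__(self, max_size):
        # A bounded deque drops the oldest entries automatically when full
        self.keys = deque(maxlen=max_size)

    def enqueue(self, batch_keys):
        self.keys.extend(batch_keys)


# Toy values standing in for real weights and embeddings
query_weights = [1.0, 2.0]
key_weights = [0.0, 0.0]
key_weights = momentum_update(query_weights, key_weights)

queue = NegativeQueue(max_size=4)
queue.enqueue(["k1", "k2", "k3"])
queue.enqueue(["k4", "k5"])   # the queue is full, so the oldest key is evicted
print(list(queue.keys))
```

Because the queue decouples the number of negatives from the batch size, MoCo can use many negatives with small batches, whereas SimCLR obtains its negatives only from the other samples in the current batch.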

SimCLRv2 - Big Self-Supervised Models are Strong Semi-Supervised Learners

Pros of simclr

- Official implementation by Google Research, likely more aligned with the original paper

- Supports distributed training across multiple GPUs and TPUs

- More comprehensive documentation and examples

Cons of simclr

- More complex codebase, potentially harder to understand and modify

- Requires TensorFlow 2.x, which may not be preferred by all users

- Larger repository size with additional dependencies

Code Comparison

SimCLR:

```python
class SimCLR(nn.Module):
    def __init__(self, base_encoder, projection_dim):
        super(SimCLR, self).__init__()
        self.encoder = base_encoder(num_classes=projection_dim)
```

simclr:

```python
class SimCLRModel(tf.keras.Model):
    def __init__(self, num_classes, width_multiplier, resnet_depth):
        super(SimCLRModel, self).__init__()
        self.resnet_model = resnet.resnet(
            resnet_depth=resnet_depth,
            width_multiplier=width_multiplier,
            cifar_stem=False)
```

This PyTorch implementation (SimCLR) is more concise, while Google Research's simclr uses TensorFlow and exposes additional parameters such as width_multiplier and resnet_depth. The PyTorch version is easier to understand at a glance, but simclr offers more flexibility in model architecture.

A python library for self-supervised learning on images.

Pros of Lightly

- More comprehensive self-supervised learning framework with multiple methods

- Actively maintained with regular updates and new features

- Includes tools for data curation and dataset management

Cons of Lightly

- Steeper learning curve due to more complex API

- Requires more setup and configuration compared to SimCLR

- May have higher computational requirements for some features

Code Comparison

SimCLR:

```python
model = SimCLR(encoder, projection_dim, n_features)
criterion = NT_Xent(batch_size, temperature, world_size)
optimizer = LARS(model.parameters(), lr=0.3, weight_decay=1e-6)
```

Lightly:

```python
model = SimCLRModel(backbone)
criterion = NTXentLoss()
optimizer = LARS(model.parameters(), lr=0.1, weight_decay=1e-6)
collate_fn = SimCLRCollateFunction()
```

Both implementations follow similar patterns, but Lightly offers more flexibility in model configuration and data handling. SimCLR provides a more straightforward implementation focused specifically on the SimCLR method, while Lightly's approach is more modular and extensible.

[arXiv 2019] "Contrastive Multiview Coding", also contains implementations for MoCo and InstDis

Pros of CMC

- Implements Contrastive Multiview Coding (CMC), which can handle multiple views of data

- Provides more flexibility in terms of data augmentation strategies

- Includes additional loss functions and architectures beyond SimCLR

Cons of CMC

- Less actively maintained compared to SimCLR

- Documentation is not as comprehensive

- May require more setup and configuration for certain use cases

Code Comparison

SimCLR:

```python
class SimCLR(nn.Module):
    def __init__(self, base_encoder, projection_dim=128):
        super(SimCLR, self).__init__()
        self.encoder = base_encoder(num_classes=projection_dim)
```

CMC:

```python
class CMC(nn.Module):
    def __init__(self, base_encoder, n_views=2, feat_dim=128):
        super(CMC, self).__init__()
        self.encoder = nn.ModuleList([base_encoder() for _ in range(n_views)])
        self.head = nn.ModuleList([nn.Linear(feat_dim, feat_dim) for _ in range(n_views)])
```

The main difference in the code is that CMC supports multiple views by using ModuleList for both the encoder and projection head, while SimCLR uses a single encoder with a specified projection dimension.

The easiest way to use deep metric learning in your application. Modular, flexible, and extensible. Written in PyTorch.

Pros of pytorch-metric-learning

- More comprehensive library with a wider range of metric learning techniques

- Actively maintained with regular updates and improvements

- Extensive documentation and examples for various use cases

Cons of pytorch-metric-learning

- Steeper learning curve due to its broader scope

- May have more overhead for simple tasks compared to SimCLR's focused approach

Code Comparison

SimCLR:

```python
class SimCLR(nn.Module):
    def __init__(self, base_encoder, projection_dim=128):
        super(SimCLR, self).__init__()
        self.encoder = base_encoder(pretrained=False)
        self.projector = nn.Sequential(
            nn.Linear(self.encoder.fc.in_features, projection_dim),
            nn.ReLU(),
            nn.Linear(projection_dim, projection_dim)
        )
```

pytorch-metric-learning:

```python
from pytorch_metric_learning import losses, miners, distances

loss_func = losses.NTXentLoss(temperature=0.07)
mining_func = miners.MultiSimilarityMiner()
distance = distances.CosineSimilarity()

loss = loss_func(embeddings, labels, indices_tuple=mining_func(embeddings, labels))
```

The SimCLR code focuses on the model architecture, while pytorch-metric-learning provides a more modular approach with separate components for loss functions, mining strategies, and distance metrics.

README

PyTorch SimCLR: A Simple Framework for Contrastive Learning of Visual Representations

Blog post with full documentation: Exploring SimCLR: A Simple Framework for Contrastive Learning of Visual Representations

See also PyTorch Implementation for BYOL - Bootstrap Your Own Latent: A New Approach to Self-Supervised Learning.

Installation

```bash
$ conda env create --name simclr --file env.yml
$ conda activate simclr
$ python run.py
```

Config file

Before running SimCLR, make sure you choose the correct run configuration. You can change it by passing command-line arguments to run.py.

```bash
$ python run.py -data ./datasets --dataset-name stl10 --log-every-n-steps 100 --epochs 100
```

If you want to run it on CPU (for debugging purposes) use the --disable-cuda option.

For 16-bit precision GPU training, there is no need to install NVIDIA Apex. Just use the --fp16_precision flag and this implementation will use PyTorch's built-in AMP training.
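As a rough illustration of how a script can expose these options, here is a minimal `argparse` sketch that accepts the flags mentioned in this section. It is not the parser used in `run.py`: the `build_parser` helper, the default values, and the help strings are assumptions.

```python
import argparse


def build_parser():
    """Hypothetical parser covering only the flags named in this section."""
    parser = argparse.ArgumentParser(description="SimCLR run configuration (illustrative)")
    parser.add_argument("-data", default="./datasets", help="path to the dataset root")
    parser.add_argument("--dataset-name", default="stl10", help="dataset to train on")
    parser.add_argument("--epochs", type=int, default=100, help="number of training epochs")
    parser.add_argument("--log-every-n-steps", type=int, default=100, help="logging interval")
    parser.add_argument("--disable-cuda", action="store_true", help="run on CPU for debugging")
    parser.add_argument("--fp16_precision", action="store_true", help="enable 16-bit AMP training")
    return parser


# Parse the same command line shown above, passed as an explicit list
args = build_parser().parse_args(
    ["-data", "./datasets", "--dataset-name", "stl10",
     "--log-every-n-steps", "100", "--epochs", "100"]
)
print(args.data, args.dataset_name, args.epochs, args.disable_cuda)
```

Note that `argparse` turns hyphens in long option names into underscores on the parsed namespace, so `--dataset-name` is read back as `args.dataset_name`.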

Feature Evaluation

Feature evaluation is done using a linear model protocol.

First, we learn features using SimCLR on the STL10 unsupervised set. Then, we train a linear classifier on top of the frozen SimCLR features. The linear model is trained on features extracted from the STL10 train set and evaluated on the STL10 test set.

Check the notebook for reproducibility.
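The protocol can be sketched in a few lines. The example below is a toy, dependency-free stand-in, not the evaluation notebook: it fits a binary logistic-regression probe with plain gradient descent (the results table reports Adam) on hand-written 2-D vectors that play the role of frozen encoder features. `train_linear_probe` and `top1_accuracy` are hypothetical names.

```python
import math


def train_linear_probe(features, labels, lr=0.5, epochs=200):
    """Fit binary logistic regression on fixed feature vectors (encoder stays frozen)."""
    dim, n = len(features[0]), len(features)
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * dim, 0.0
        for x, y in zip(features, labels):
            logit = sum(wi * xi for wi, xi in zip(w, x)) + b
            p = 1.0 / (1.0 + math.exp(-logit))
            err = p - y  # gradient of the log loss with respect to the logit
            for d in range(dim):
                grad_w[d] += err * x[d] / n
            grad_b += err / n
        # Full-batch gradient descent step on the probe parameters only
        w = [wi - lr * g for wi, g in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def top1_accuracy(w, b, features, labels):
    """Percentage of samples whose predicted class matches the label."""
    correct = 0
    for x, y in zip(features, labels):
        logit = sum(wi * xi for wi, xi in zip(w, x)) + b
        correct += int((logit > 0) == bool(y))
    return 100.0 * correct / len(labels)


# Toy "frozen features" for the train and test splits (illustrative values)
train_feats = [[2.0, 0.1], [1.5, -0.2], [-1.8, 0.3], [-2.2, -0.1]]
train_labels = [1, 1, 0, 0]
test_feats = [[1.0, 0.0], [-1.0, 0.0]]
test_labels = [1, 0]

w, b = train_linear_probe(train_feats, train_labels)
print(top1_accuracy(w, b, test_feats, test_labels))
```

Only the probe's weights are updated; the feature vectors never change, which is what makes the resulting accuracy a measure of the representation rather than of the classifier.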

Note that SimCLR benefits from longer training.

| Linear Classification | Dataset | Feature Extractor | Architecture | Feature dimensionality | Projection Head dimensionality | Epochs | Top-1 accuracy (%) |
|---|---|---|---|---|---|---|---|
| Logistic Regression (Adam) | STL10 | SimCLR | ResNet-18 | 512 | 128 | 100 | 74.45 |
| Logistic Regression (Adam) | CIFAR10 | SimCLR | ResNet-18 | 512 | 128 | 100 | 69.82 |
| Logistic Regression (Adam) | STL10 | SimCLR | ResNet-50 | 2048 | 128 | 50 | 70.075 |
